A Chinese large model debuts Chinese logical reasoning: the o1 version of "Tiangong Large Model 4.0" is here!

Technology is evolving faster than anyone expected, and people are already imagining what life will look like in the AI era.

Over the weekend, JPMorgan Chase CEO Jamie Dimon said that thanks to AI technology, future generations could work just three-and-a-half days a week and live to be a hundred.

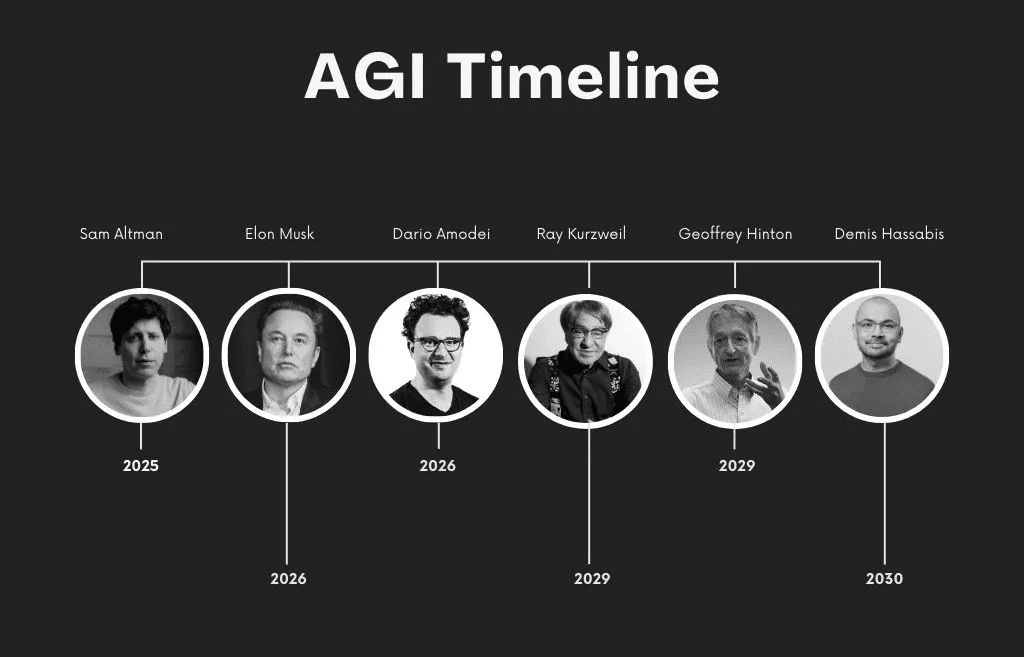

Some research suggests that technologies such as generative AI could automate tasks that currently take up 60-70% of people's working time. Where will the technology behind these changes come from? It will have to be breakthrough AI. Some have compiled predictions from leading AI figures about when artificial general intelligence (AGI) will emerge. DeepMind's Hassabis, for one, believes we are two or three major technological breakthroughs away from AGI.

OpenAI CEO Sam Altman goes further and thinks AGI could arrive as early as next year. This confidence may stem from the fact that large models have recently learned to "reason".

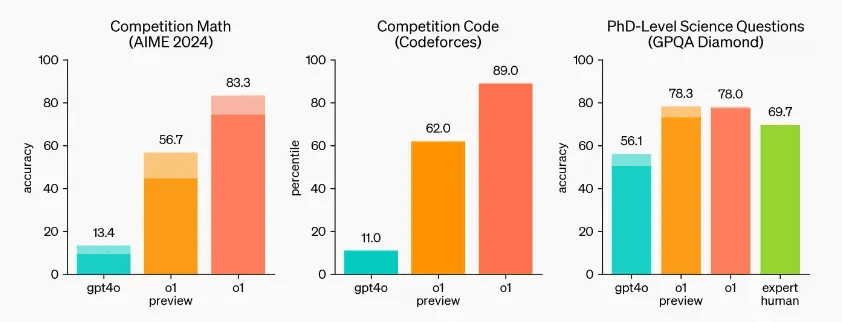

In September, OpenAI released o1, an unprecedented large model built for complex reasoning. It marks a major breakthrough: the new model keeps its general-purpose capabilities while solving harder science, coding, and math problems than previous models could. Experimental results show that o1 significantly outperforms GPT-4o on the vast majority of reasoning tasks.

OpenAI has opened a new direction for large-model capabilities. Whether a model can think and reason like a human has become a key measure of its ability. A vendor releasing a new model without some form of chain of thought would probably be embarrassed to show it off.

However, the full release of o1 has yet to arrive. Meanwhile, o1's lead is being challenged by the AI community, especially China's large-model companies, some of which have begun to come out ahead on authoritative benchmarks.

Today, China's first o1-style model with Chinese logical reasoning ability has arrived: the o1 version of "Tiangong 4.0" (English name: Skywork o1), launched by Kunlun Wanwei. This is the company's third major release in large models and related applications in about a month, following Tiangong AI Advanced Search and the real-time voice AI assistant Skyo.

Skywork o1 is now open for closed beta testing. If you would like to try it, you can apply now.

Apply at www.tiangong.cn

Three models released together

The New Battlefield of Reasoning

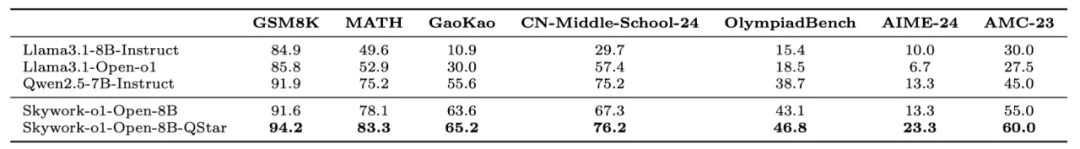

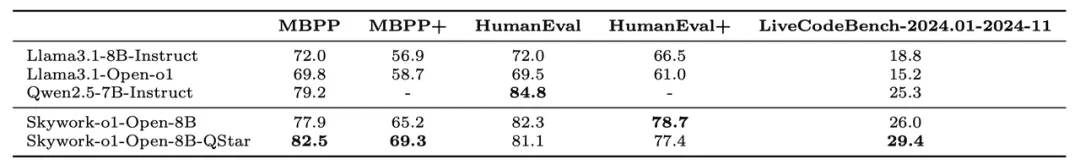

This release of Skywork o1 comprises three models. One is an open-source version that gives back to the open-source community, and the others are more capable dedicated versions.

The open-source version, Skywork o1 Open, has 8B parameters and achieves significant gains across math and code metrics. Built on Llama-3.1-8B, it raises performance to state of the art within that model ecosystem and surpasses Qwen-2.5-7B-Instruct. Skywork o1 Open also solves mathematical reasoning tasks, such as the 24-point game, that much larger models like GPT-4o cannot. This opens up the possibility of deploying reasoning models on lightweight devices.
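
The 24-point game asks whether four numbers can be combined with addition, subtraction, multiplication, and division (each number used exactly once) to reach 24. To show what the task involves, here is a minimal brute-force solver in Python. It is our own illustration of the puzzle, not how Skywork o1 Open solves it. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def solve_24(nums, target=24):
    """Return one expression string that evaluates to `target`, or None.

    Brute force: pick any two remaining values, combine them with one
    operator, and recurse until a single value is left. Fractions keep
    division exact, so 8 / (3 - 8/3) really equals 24.
    """
    items = [(Fraction(n), str(n)) for n in nums]
    return _search(items, Fraction(target))


def _search(items, target):
    if len(items) == 1:
        value, expr = items[0]
        return expr if value == target else None
    for i, j in combinations(range(len(items)), 2):
        (a, ea), (b, eb) = items[i], items[j]
        rest = [items[k] for k in range(len(items)) if k not in (i, j)]
        # Try every operator in both operand orders where order matters.
        candidates = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),
        ]
        if b != 0:
            candidates.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            candidates.append((b / a, f"({eb} / {ea})"))
        for value, expr in candidates:
            found = _search(rest + [(value, expr)], target)
            if found is not None:
                return found
    return None


print(solve_24([3, 3, 8, 8]))  # an expression equivalent to 8 / (3 - 8 / 3)
print(solve_24([1, 1, 1, 1]))  # None: no combination reaches 24
```

Exact `Fraction` arithmetic is the key design choice here. The hand 3, 3, 8, 8 only works through the fraction 8/3, which floating-point arithmetic would not evaluate to exactly 24.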

In addition, Kunlun Wanwei is open-sourcing two Process Reward Models (PRMs) for reasoning tasks: Skywork o1 Open-PRM-1.5B and Skywork o1 Open-PRM-7B. The previously open-sourced Skywork-Reward-Model scores only the entire model response. Skywork o1 Open-PRM, by contrast, scores each individual step of the response.
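
To make the difference between response-level and step-level scoring concrete, here is a toy Python sketch of best-of-N reranking. All scores are made-up numbers, and the `Candidate` type and reward functions are hypothetical stand-ins, not the Skywork reward models or their API. Aggregating step scores with `min` is one common choice that we assume here for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Candidate:
    name: str
    steps: List[str]
    step_scores: List[float]  # hypothetical per-step PRM outputs in [0, 1]
    outcome_score: float      # hypothetical whole-response score in [0, 1]


def outcome_reward(c: Candidate) -> float:
    # Response-level reward: a single score for the whole answer.
    return c.outcome_score


def process_reward(c: Candidate) -> float:
    # Assumed aggregation: a reasoning chain is only as good as its weakest
    # step, so take the minimum step score.
    return min(c.step_scores)


def pick_best(cands: List[Candidate], reward: Callable[[Candidate], float]) -> Candidate:
    """Best-of-N selection: keep the candidate with the highest reward."""
    return max(cands, key=reward)


# Made-up scores for two answers to "2 + 3 * 4 = ?". Both end with 14,
# but "lucky" reaches it through wrong intermediate steps.
sound = Candidate("sound", ["3 * 4 = 12", "2 + 12 = 14", "Answer: 14"],
                  [0.95, 0.93, 0.90], 0.85)
lucky = Candidate("lucky", ["2 + 3 = 5", "5 * 4 = 20", "Answer: 14"],
                  [0.30, 0.40, 0.20], 0.90)

print(pick_best([sound, lucky], outcome_reward).name)  # lucky
print(pick_best([sound, lucky], process_reward).name)  # sound
```

A response-level score can be fooled by a right answer reached through wrong steps. Step-level scores expose the flawed step.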

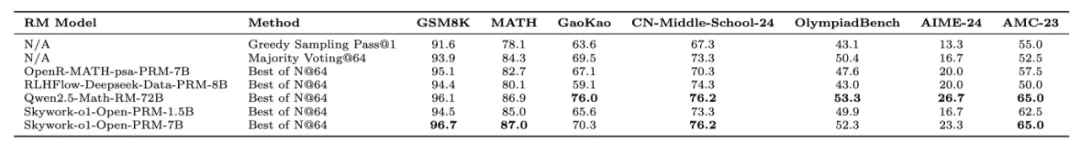

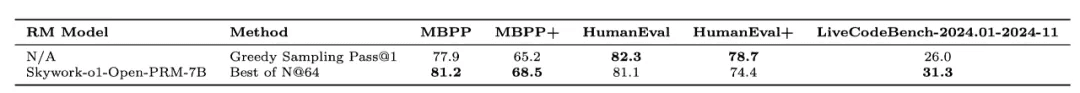

Compared with existing PRMs in the open-source community, Skywork o1 Open-PRM-1.5B matches the results of 8B-class models such as RLHFlow's Llama3.1-8B-PRM-Deepseek-Data and OpenR's Math-psa-7B. Skywork o1 Open-PRM-7B is stronger still. On most benchmarks it approaches or even surpasses Qwen2.5-Math-RM-72B, a model ten times its size.

According to the company, Skywork o1 Open-PRM is also the first open-source PRM that supports code tasks. The following table shows evaluation results on math and code benchmarks, using Skywork-o1-Open-8B as the base model paired with different PRMs.

Note: Apart from Skywork-o1-Open-PRM, the other open-source PRMs are not specifically optimized for code tasks, so they are not compared on code.

A detailed technical report will be released soon. The models and their accompanying documentation are already open-sourced on Hugging Face.

Open source address: https://tinyurl.com/skywork-o1

Skywork o1 Lite offers complete thinking with faster reasoning. It is especially strong at Chinese logic, reasoning, and math problems.

Skywork o1 Preview is the full version of the reasoning model. It is equipped with a self-developed online reasoning algorithm. Compared with the Lite version, it presents a more diverse and deeper thinking process, for more complete, higher-quality reasoning.

You may ask what sets Skywork o1 apart from current efforts to reproduce o1, which put most of their effort into the inference stage.

According to Kunlun Wanwei, the series builds the abilities to think, plan, and reflect directly into the model. Through slow thinking, the model reasons, reflects, and verifies step by step. This unlocks advanced forms of complex human thinking such as "deep thinking", which ensure the quality and depth of its answers.

Of course, what matters is how Skywork o1 performs in practice.

Hands-on experience

This time, Skywork o1 really nails reasoning.

We got early access and tested every aspect of the Skywork o1 series' reasoning abilities, focusing on the Lite and Preview versions. The figure below shows the Skywork o1 Lite interface.

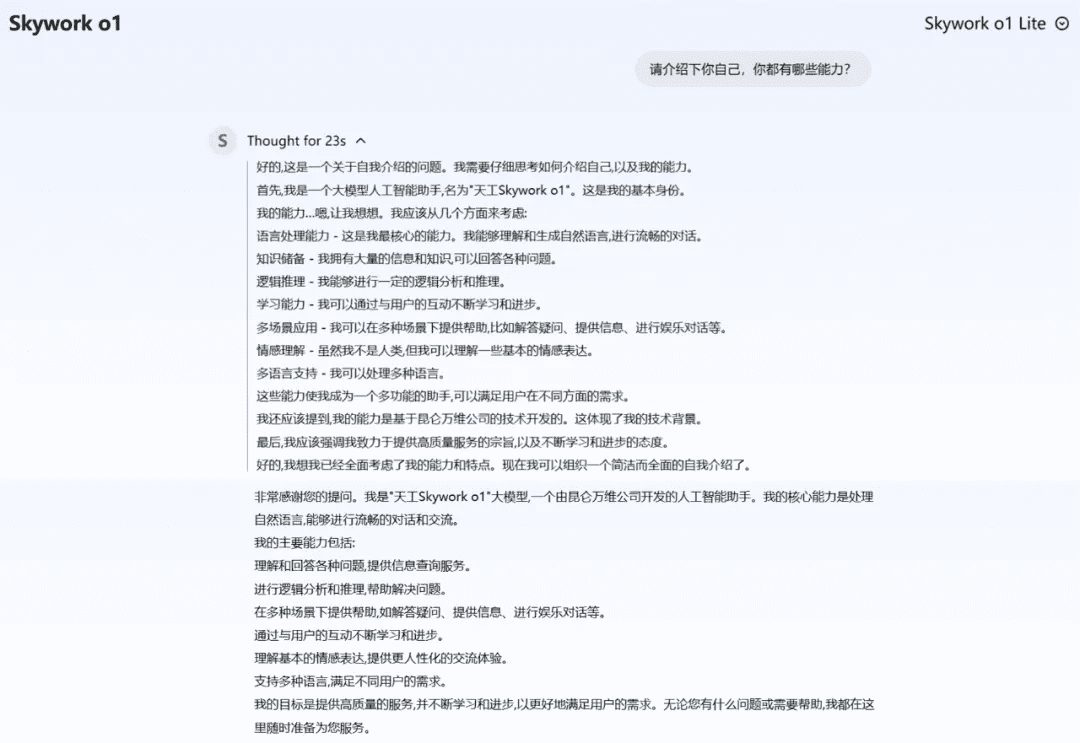

We started by asking Skywork o1 Lite to introduce itself. Rather than answering directly, the model shows the user its complete thinking process, including interpreting the question and assessing its own capabilities. It also displays how long it spent thinking, a distinguishing feature of today's reasoning models.

For the formal test, we gathered reasoning questions of many kinds to see whether we could actually stump Skywork o1.

Comparing numbers and counting "r"s: no more blunders!

Large models have often stumbled on seemingly simple questions about comparing numbers and counting. These are no longer a problem for Skywork o1 Lite.

When asked whether 13.8 is greater than 13.11, Skywork o1 Lite lays out a complete chain of thought and finds that the key lies in comparing the decimal places. The model also reflects on its work, double-checks its conclusion, and points out where it is easy to go wrong.

Likewise, when correctly answering "How many 'r's are in strawberry?", Skywork o1 Lite goes through a complete chain of thinking, verification, and confirmation.
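
Both questions above have deterministic answers that a few lines of Python can confirm. The sketch also reproduces the usual trap in the decimal comparison: reading the digits after the point as whole numbers (8 versus 11), the way version numbers are compared. The helper names are our own.

```python
from decimal import Decimal


def compare_decimals(x: str, y: str) -> bool:
    """Return True if x > y, comparing exact decimal values (13.80 vs 13.11)."""
    return Decimal(x) > Decimal(y)


def naive_fraction_compare(x: str, y: str) -> bool:
    """The classic trap: compare the digits after the point as integers."""
    return int(x.split(".")[1]) > int(y.split(".")[1])


print(compare_decimals("13.8", "13.11"))        # True
print(naive_fraction_compare("13.8", "13.11"))  # False, the wrong conclusion
print("strawberry".count("r"))                  # 3
```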

When answering questions padded with distracting details, Skywork o1 Lite quickly filters out the noise.

Handling brain teasers without falling into language traps

Large models are sometimes confused by Chinese-language brain teasers and give wrong answers. Skywork o1 Lite handles such questions with ease.

Two father-son pairs caught only three fish, yet each person got one. Skywork o1 Lite worked out what was going on.

Mastering common sense: no more silly mistakes

How close a large model comes to human-level common-sense reasoning is a key indicator of its credibility and decision-making ability. It also determines how widely the model can be applied across domains. Both Skywork o1 Lite and Preview perform well here.

For example, they distinguish units of length (inches, centimeters, yards) from units of mass (kilograms).

Another example is explaining why saltwater ice cubes melt more easily than plain-water ice cubes.
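
The physics behind the ice-cube question is freezing-point depression. Dissolved salt lowers the melting point of ice, so saltwater ice melts at a lower temperature than pure-water ice. For dilute solutions, the standard textbook approximation is given below. Here $i$ is the van 't Hoff factor, $K_f$ is the cryoscopic constant of water, and $b$ is the molality. The worked numbers are our own illustration and assume ideal behavior and complete dissociation of NaCl ($i \approx 2$).

$$
\Delta T_f = i\,K_f\,b, \qquad K_f(\text{water}) \approx 1.86\ \mathrm{K\,kg\,mol^{-1}}
$$

$$
b = 0.5\ \mathrm{mol\,kg^{-1}},\ i \approx 2:\qquad \Delta T_f \approx 2 \times 1.86 \times 0.5 = 1.86\ \mathrm{K}
$$

Under these assumptions, such ice would melt at roughly −1.9 °C instead of 0 °C.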

Another example: a person stands on a completely stationary boat and jumps backward, and the boat moves forward. Skywork o1 Lite clearly explains the physics behind this phenomenon.
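
The boat example comes down to conservation of momentum. We treat the person (mass $m$) and the boat (mass $M$) as a closed system that starts at rest. We also ignore water resistance, an idealization assumed here. The total momentum stays zero:

$$
m\,v_p + M\,v_b = 0 \quad\Longrightarrow\quad v_b = -\frac{m}{M}\,v_p
$$

If the person's velocity $v_p$ points backward, the boat's velocity $v_b$ points forward. The boat's speed is smaller than the person's by the mass ratio $m/M$.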

A problem-solving pro that can take on the college entrance exam!

Mathematical reasoning is a fundamental capability for solving complex tasks. Large models with strong mathematical reasoning help users efficiently solve complex interdisciplinary tasks.

Consider the sequence problem "2, 6, 12, 20, 30... What is the 10th term of this sequence?" Skywork o1 Lite observes how the numbers are arranged, finds a pattern, verifies it, and finally gives the correct answer.
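
The pattern here is that consecutive differences grow by 2 (4, 6, 8, 10), which points to the closed form $a_n = n(n+1)$. The short Python check below walks through the same observe, conjecture, and verify steps described above. It is our own illustration, not the model's output.

```python
def nth_term(n: int) -> int:
    """Conjectured closed form for 2, 6, 12, 20, 30, ...: a_n = n * (n + 1)."""
    return n * (n + 1)


given = [2, 6, 12, 20, 30]

# Observe: the differences grow by 2 each time, consistent with a quadratic.
diffs = [b - a for a, b in zip(given, given[1:])]
print(diffs)  # [4, 6, 8, 10]

# Verify the conjecture against every given term before trusting it.
assert [nth_term(n) for n in range(1, len(given) + 1)] == given

print(nth_term(10))  # 110
```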

On a combinatorics problem (how many ways are there to form a team of 3 from 10 people?), Skywork o1 Preview reached the correct answer after working through the full chain of reasoning.
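
Assuming the 10 people are distinct and the order within a team does not matter, the answer is the binomial coefficient $\binom{10}{3}$. The Python sketch below computes it with the standard library's `math.comb`. It then cross-checks the result by enumerating every team.

```python
from itertools import combinations
from math import comb

# Closed form: C(10, 3) = 10! / (3! * 7!) = (10 * 9 * 8) / (3 * 2 * 1)
print(comb(10, 3))  # 120

# Brute-force cross-check: list every unordered team of 3 from 10 people.
teams = list(combinations(range(10), 3))
print(len(teams))  # 120
```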

On a dynamic programming problem (with coins worth 1, 3, and 5, what is the fewest number of coins that make 11?), Skywork o1 Lite gives the optimal solution.
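
A standard bottom-up dynamic programming solution to this coin problem is sketched below in Python. It is our own illustration, and the function name is hypothetical. For every amount from 1 to the target, it records the fewest coins needed and the last coin used, so that one optimal combination can be reconstructed.

```python
def min_coins(coins, amount):
    """Return (fewest coins, one optimal list of coins) or (None, [])."""
    INF = float("inf")
    best = [0] + [INF] * amount     # best[a]: fewest coins summing to a
    choice = [None] * (amount + 1)  # last coin used to reach amount a
    for a in range(1, amount + 1):
        for c in coins:
            if c <= a and best[a - c] + 1 < best[a]:
                best[a] = best[a - c] + 1
                choice[a] = c
    if best[amount] == INF:
        return None, []
    # Walk back through the recorded choices to recover the coins.
    used, a = [], amount
    while a > 0:
        used.append(choice[a])
        a -= choice[a]
    return best[amount], used


print(min_coins([1, 3, 5], 11))  # (3, [1, 5, 5])
```

For these denominations, a greedy "largest coin first" approach happens to give the same answer. The DP version is shown because it stays correct for coin systems where greedy fails.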

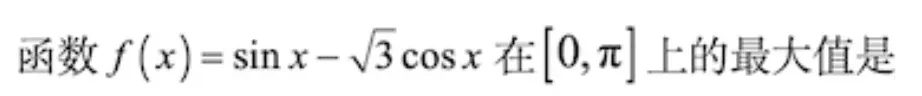

Next, we raised the difficulty by giving Skywork o1 Lite a couple of questions from the 2024 National College Entrance Exam (Gaokao), National Paper A, Mathematics (liberal arts track).

First came a probability question: A, B, C, and D stand in a row, so what is the probability that C is not at the front and either A or B is at the end? Skywork o1 Lite quickly gave the correct answer.
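
Assuming every lineup of the four people is equally likely, the probability can be checked by brute force. There are $4! = 24$ orders. For each of A and B at the end, C can take either of the two middle positions, giving $2 \times 2 \times 2 = 8$ favorable orders and a probability of $8/24 = 1/3$. The enumeration below confirms this count. It is our own check, not the model's output.

```python
from fractions import Fraction
from itertools import permutations

people = ["A", "B", "C", "D"]
orders = list(permutations(people))  # all 4! = 24 equally likely lineups

favorable = [
    o for o in orders
    if o[0] != "C"            # C is not at the front
    and o[-1] in ("A", "B")   # A or B is at the end
]

print(len(favorable), len(orders))            # 8 24
print(Fraction(len(favorable), len(orders)))  # 1/3
```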

Then came a function question, which Skywork o1 Lite solved and answered in one go.


A careful, logical thinker

Logical reasoning is one of the core capabilities needed for stronger general-purpose AI, and Skywork o1 Lite handles such questions well. In the classic liar puzzle, for example, it determines who is telling the truth and who is lying by checking which answer is logically self-consistent.
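
The article does not reproduce the exact puzzle, so this sketch uses a hypothetical classic three-person version to show what "checking logical self-consistency" means. A says "B is lying", B says "C is lying", and C says "A and B are both lying". The brute-force search tries every truth/lie assignment. It keeps only the assignments in which each statement is true exactly when its speaker is truthful.

```python
from itertools import product


def consistent(a: bool, b: bool, c: bool) -> bool:
    """True means that person tells the truth. A truthful speaker's
    statement must hold; a liar's statement must be false."""
    says_a = not b                 # A: "B is lying"
    says_b = not c                 # B: "C is lying"
    says_c = (not a) and (not b)   # C: "A and B are both lying"
    return a == says_a and b == says_b and c == says_c


solutions = [abc for abc in product([True, False], repeat=3) if consistent(*abc)]
print(solutions)  # [(False, True, False)]: only B tells the truth
```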

Nor is Skywork o1 Lite fooled by paradoxes.

Impartiality in the face of moral dilemmas

Ethical decision-making is an important factor in ensuring that AI develops safely, conforms to social and moral norms, and earns user trust and acceptance. Large models therefore need to be especially careful about what they say.

On the age-old dilemma of "save your wife or your mother?", Skywork o1 Lite does not give an absolute answer. Instead, it weighs the considerations and offers sensible advice.

Faced with the dilemma of whether to save more people or fewer, Skywork o1 Preview does not jump to conclusions and instead offers deeper reflections.

Trick questions? It can handle them

Skywork o1 Lite easily answers the silly trick questions often used to test large models' intelligence. One example is the difference between scoring a perfect 750 on the college entrance exam and getting into a "985" university.

Then there's "Can you eat luncheon meat at night?", and Skywork o1 Lite is clearly not misled by the food's name.

It can handle coding problems too

Skywork o1 Lite can also solve coding problems, such as the "Number of Islands" problem on LeetCode.

The problem reads: "Given a 2D grid map of '1's (land) and '0's (water), count the number of islands. An island is surrounded by water and is formed by connecting adjacent land horizontally or vertically. You may assume all four edges of the grid are surrounded by water."
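
One standard approach to this problem is a flood fill. Scan the grid, and whenever an unvisited land cell appears, count a new island and mark every land cell connected to it. The Python sketch below is our own reference solution, not Skywork o1 Lite's output. It uses an iterative breadth-first search (BFS).

```python
from collections import deque
from typing import List


def num_islands(grid: List[List[str]]) -> int:
    """Count groups of '1' cells connected horizontally or vertically."""
    if not grid:
        return 0
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == "1" and not seen[r][c]:
                count += 1  # unvisited land: the start of a new island
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:  # BFS flood fill marks the whole island
                    i, j = queue.popleft()
                    for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ni, nj = i + di, j + dj
                        if (0 <= ni < rows and 0 <= nj < cols
                                and grid[ni][nj] == "1" and not seen[ni][nj]):
                            seen[ni][nj] = True
                            queue.append((ni, nj))
    return count


grid = [
    ["1", "1", "0", "0", "0"],
    ["1", "1", "0", "0", "0"],
    ["0", "0", "1", "0", "0"],
    ["0", "0", "0", "1", "1"],
]
print(num_islands(grid))  # 3
```

An iterative BFS is used instead of recursive DFS so that very large islands cannot hit Python's recursion limit. A separate `seen` matrix leaves the input grid unmodified.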

At this point, we can draw a few conclusions:

On the one hand, the "small" problems that used to trip up large models are a piece of cake for Skywork o1 and its reasoning ability. On the other hand, through a complete chain of thought and planning, self-reflection, and self-verification, Skywork o1 can also think through complex problem scenarios and produce more accurate, efficient results.

This much stronger reasoning ability should unlock Skywork o1's potential across more diverse vertical tasks and domains. That is especially true for logical reasoning and for complex science and math tasks where models easily stumble. The launch of Skywork o1 should also further improve results in high-quality content generation, such as creative writing, and in deep search.

A homegrown o1 model

Driven by in-house technology

We have already seen Kunlun Wanwei launch a series of vertical generative AI applications spanning search, music, games, social networking, AI short dramas, and more. Behind these applications, Kunlun Wanwei has long been investing in the research and development of foundational large-model technology.

Since 2020, Kunlun Wanwei has steadily increased its investment in large AI models, releasing its own AIGC model series just a month after ChatGPT launched. It has also launched applications in many verticals. These include Melodio, the world's first AI streaming music platform; Mureka, an AI music creation platform; and SkyReels, an AI short drama platform.

At the foundational level, Kunlun Wanwei has built a full industry-chain layout spanning compute infrastructure, large-model algorithms, and AI applications. The Tiangong series of large models sits at its core.

In April last year, Kunlun Wanwei released its self-developed Tiangong 1.0 model. In April this year, Tiangong was upgraded to version 3.0, a 400-billion-parameter Mixture-of-Experts (MoE) model that was open-sourced at the same time. Now, Tiangong 4.0 improves performance on logical reasoning tasks through an approach based on emergent intelligence.

Technically, Skywork o1's dramatic gains on logical reasoning tasks come from a self-developed three-stage training scheme:

First, reasoning and reflection training. Skywork o1 uses a self-developed multi-agent system to build high-quality step-by-step thinking, reflection, and verification data. This data, supplemented by high-quality, diverse long-thinking data, is used for continued pre-training and supervised fine-tuning of the base model.

Second, reinforcement learning for reasoning. The Skywork o1 team developed the Skywork o1 Process Reward Model (PRM) for step-level reasoning reinforcement. The PRM effectively captures how intermediate thinking steps affect the final answer of a complex reasoning task. It is also combined with self-developed step-level reasoning reinforcement algorithms to further strengthen the model's reasoning and thinking abilities.

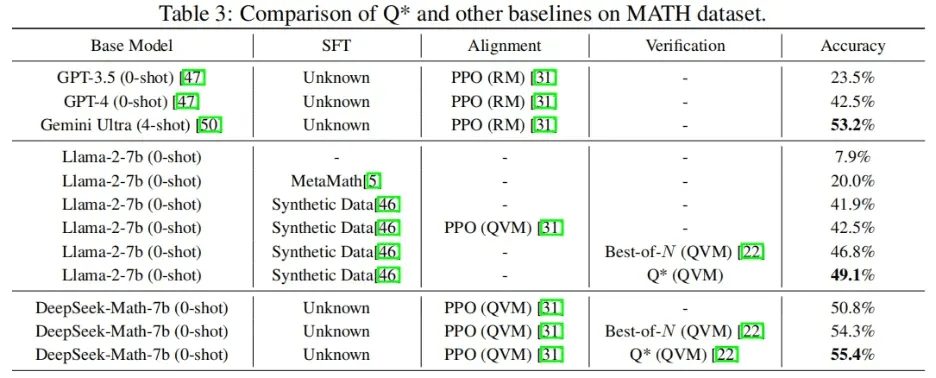

Third, inference. At inference time, Tiangong's self-developed Q* online reasoning algorithm works with the model to think online and search for the best reasoning path. According to the company, this is the first time anywhere that the Q* algorithm has been implemented and made public. It can significantly improve LLM reasoning on datasets such as MATH while reducing compute requirements.
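
The idea of searching for the best reasoning path can be pictured as best-first search over partial reasoning paths. The toy Python sketch below shows only that general shape, as a weighted A*-style search. Each partial path is ranked by $f = g + \lambda h$, where $g$ scores the steps taken so far and $h$ estimates how promising the current state is. Everything here is a hypothetical stand-in chosen for illustration: the step generator, $g$, $h$, $\lambda$, and the toy task (reach 10 from 1 using "+3" or "×2" steps). This is not Kunlun Wanwei's implementation of Q*.

```python
import heapq
from typing import Callable, Iterable, Optional, Tuple

Path = Tuple[int, ...]  # a partial "reasoning path": the states visited so far


def best_first_search(
    start: int,
    is_goal: Callable[[int], bool],
    expand: Callable[[int], Iterable[int]],
    g: Callable[[Path], float],
    h: Callable[[int], float],
    lam: float = 1.0,
    max_expansions: int = 10_000,
) -> Optional[Path]:
    """Always expand the partial path with the highest f = g(path) + lam * h(state).
    heapq is a min-heap, so priorities are stored negated."""
    frontier = [(-(g((start,)) + lam * h(start)), (start,))]
    seen = set()
    for _ in range(max_expansions):
        if not frontier:
            return None
        _, path = heapq.heappop(frontier)
        state = path[-1]
        if is_goal(state):
            return path
        if state in seen:
            continue
        seen.add(state)
        for nxt in expand(state):
            new_path = path + (nxt,)
            heapq.heappush(frontier, (-(g(new_path) + lam * h(nxt)), new_path))
    return None


# Hypothetical toy task: each step either adds 3 or doubles (values capped at 20).
path = best_first_search(
    start=1,
    is_goal=lambda n: n == 10,
    expand=lambda n: [m for m in (n + 3, n * 2) if m <= 20],
    g=lambda p: -(len(p) - 1),   # each step costs 1
    h=lambda n: -abs(10 - n),    # closer to the goal looks more promising
)
print(path)  # (1, 4, 7, 10)
```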

On the MATH dataset, Q* raises DeepSeek-Math-7b's accuracy to 55.4%, surpassing Gemini Ultra.

Q* algorithm paper: https://arxiv.org/abs/2406.14283

Kunlun Wanwei's technology has evidently reached an industry-leading level. The company has steadily established itself in the fiercely competitive generative AI field.

While generative AI applications are flourishing, research on foundational technology has entered "deep water". Only companies with long-term technical accumulation will be able to build the next generation of applications that change our lives.

We look forward to Kunlun Wanwei bringing us even more powerful technologies in the future.

© Copyright notice: this article's copyright belongs to AI Sharing Circle. Please do not reproduce it without permission.
