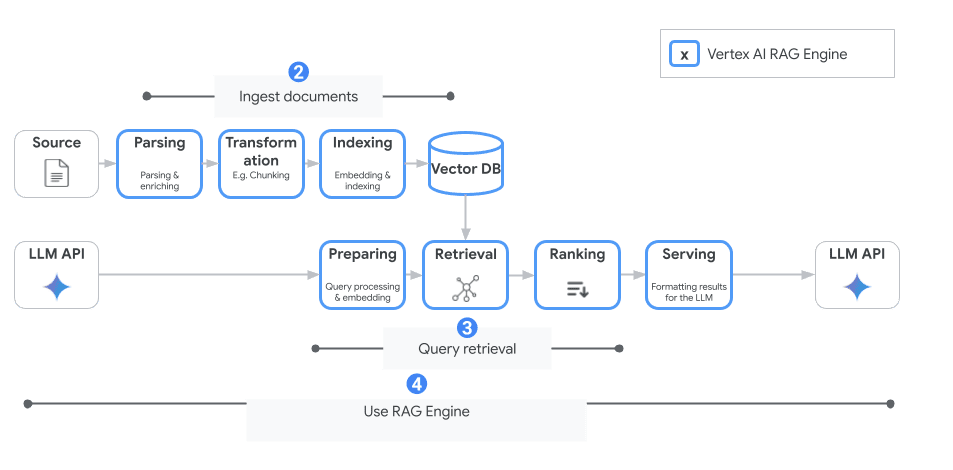

Google releases Vertex AI RAG Engine: a one-stop shop for building reliable retrieval-augmented generative applications

Generative AI and large language models (LLMs) are transforming industries, but two key challenges can hinder enterprise adoption: hallucinations (generating incorrect or nonsensical information) and limited knowledge beyond their training data. Retrieval-augmented generation (RAG) and grounding address these challenges by connecting LLMs to external data sources, allowing them to access up-to-date information and generate more accurate and relevant responses.

This article explores the Vertex AI RAG Engine and how it can help software and AI developers build robust, fact-based generative AI applications.

What is RAG and why do you need it?

RAG retrieves relevant information from a knowledge base and provides it to the LLM, enabling it to generate more accurate and better-informed responses. This contrasts with relying solely on the LLM's pre-trained knowledge, which may be outdated or incomplete. RAG is critical for building enterprise-grade generative AI applications that require the following capabilities:

- Accuracy: Minimize hallucinations and ensure that responses are fact-based.

- Freshness: Access the latest data and insights.

- Domain expertise: Utilize a specialized knowledge base for specific use cases.
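The retrieve-then-generate loop described above can be sketched in a few lines of Python. This is a minimal, self-contained illustration under stated assumptions, not the Vertex AI RAG Engine API: it uses a tiny hypothetical in-memory knowledge base, scores passages with bag-of-words cosine similarity as a stand-in for learned embeddings, and stops at building the augmented prompt that would be sent to an LLM. The names `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

# Illustrative sketch only, not the Vertex AI RAG Engine API.
# Hypothetical toy knowledge base; a real system would ingest and index documents.
KNOWLEDGE_BASE = [
    "The 2024 policy raises the travel reimbursement limit to 500 dollars.",
    "Employees must submit expense reports within 30 days.",
    "The cafeteria is open from 8 am to 3 pm on weekdays.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages most similar to the query (bag-of-words stand-in for embeddings)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Augment the user question with retrieved context before it is sent to an LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    question = "What is the travel reimbursement limit?"
    print(build_prompt(question, retrieve(question, KNOWLEDGE_BASE)))
```

The instruction to answer only from the supplied context is what ties the model's output to the retrieved facts rather than to its pre-trained knowledge.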

RAG vs Grounding vs Search

- RAG: A technique for retrieving relevant information and providing it to an LLM to generate a response. The retrieved information can include fresh data, topical context, or factual ground truth.

- Grounding: Ensuring the reliability and trustworthiness of AI-generated content by anchoring it to verified sources. Grounding can use RAG as a technique.

- Search: A method for quickly finding and delivering relevant information from data sources in response to text or multimodal queries, powered by advanced AI models.
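To make the idea of "anchoring to verified sources" concrete, here is a deliberately crude sketch of a post-hoc grounding check: each sentence of a generated answer is matched to the source that best supports it, and sentences with too little support are flagged. This is an assumption-laden toy, not how Vertex AI grounding works: support is measured as the fraction of a sentence's content words found in a source, and the 0.6 threshold, the stopword list, and the name `check_grounding` are all hypothetical.

```python
import re

# Illustrative sketch only: a lexical-overlap heuristic, not a production grounding service.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "in", "and", "for", "on"}


def content_words(text: str) -> set[str]:
    """Lowercased word set with a few common stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def support_score(sentence: str, source: str) -> float:
    """Fraction of the sentence's content words that also appear in the source."""
    words = content_words(sentence)
    if not words:
        return 1.0  # nothing to verify
    return len(words & content_words(source)) / len(words)


def check_grounding(answer: str, sources: dict[str, str], threshold: float = 0.6):
    """For each answer sentence, return (sentence, best source id or None, score)."""
    results = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        if not sentence:
            continue
        best_id, best = None, 0.0
        for source_id, text in sources.items():
            score = support_score(sentence, text)
            if score > best:
                best_id, best = source_id, score
        # Below the (hypothetical) threshold, the sentence is treated as unsupported.
        results.append((sentence, best_id if best >= threshold else None, best))
    return results


if __name__ == "__main__":
    sources = {"policy.pdf": "Travel reimbursement is capped at 500 dollars per trip."}
    answer = "Travel reimbursement is capped at 500 dollars. Meals are always free."
    for sentence, source_id, score in check_grounding(answer, sources):
        print(f"{source_id or 'UNSUPPORTED':12} {score:.2f}  {sentence}")
```

In this example the first sentence is attributed to `policy.pdf`, while the second ("Meals are always free.") has no supporting source and is flagged, which is the kind of unverified claim grounding aims to catch.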

Introducing the Vertex AI RAG Engine

The Vertex AI RAG Engine is a managed orchestration service that streamlines the complex process of retrieving relevant information and feeding it to an LLM. This lets developers focus on building their applications rather than managing infrastructure.

Key benefits of the Vertex AI RAG Engine:

- Easy to use: Get started quickly with a simple API for rapid prototyping and experimentation.

- Managed orchestration: The engine handles the complexity of data retrieval and LLM integration, so developers don't need to manage the infrastructure themselves.

- Customization and open-source support: Choose from a variety of parsing, chunking, annotation, embedding, vector storage, and open-source model options, or customize your own components.

- High-quality Google components: Leverage Google's cutting-edge technology for optimal performance.

- Integration flexibility: Connect to various vector databases such as Pinecone and Weaviate, or use Vertex AI Vector Search.
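Chunking is one of the pipeline stages listed above, alongside parsing, embedding, and vector storage. As a rough illustration of what a chunking stage does, here is a fixed-size, word-based chunker with overlap. Sizing chunks by words, and the `chunk_size`/`overlap` names and defaults, are assumptions made for this sketch; they are not the RAG Engine's own parameters or defaults.

```python
# Illustrative sketch only: a simple word-based chunker, not the RAG Engine's implementation.
def chunk_words(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into chunks of up to chunk_size words.

    Each chunk repeats the last `overlap` words of the previous one, so a
    sentence cut at a chunk boundary still appears with some context.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap  # how far each new chunk advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(12))
    for chunk in chunk_words(sample, chunk_size=5, overlap=2):
        print(chunk)
```

Smaller chunks make retrieval more precise but give the LLM less surrounding context per hit; overlap trades some index size for fewer facts split across boundaries. These are exactly the kinds of settings worth tuning per corpus.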

Vertex AI RAG: a range of solutions

Google Cloud offers a range of RAG and grounding solutions to meet different levels of complexity and customization:

- Vertex AI Search: A fully managed search engine and retriever API that is ideally suited for complex enterprise use cases that require high out-of-the-box quality, scalability, and fine-grained access control. It simplifies connectivity to a variety of enterprise data sources and supports searching across multiple sources.

- Fully DIY RAG: For developers who want complete control, Vertex AI provides individual component APIs (e.g., Text Embedding API, Ranking API, Grounding on Vertex AI) for building custom RAG pipelines. This approach offers the most flexibility but requires significant development work. Use it if you need highly specific customizations or want to integrate with an existing RAG framework.

- Vertex AI RAG Engine: Ideal for developers looking for a balance between ease of use and customization. It enables rapid prototyping and development without sacrificing flexibility.
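In the fully DIY route, you wire separate components together yourself. The sketch below shows that composition pattern with two pluggable stages: a cheap first-stage retriever that shortlists candidates and a more expensive reranker that reorders the shortlist. Both scorers are toy functions standing in for services such as an embedding API and a ranking API; the `Scorer` type and `two_stage_search` function are hypothetical and do not correspond to any Google API.

```python
from typing import Callable

# Illustrative sketch only: toy scorers stand in for embedding and ranking services.
# A scorer maps (query, document) to a relevance score; higher is better.
Scorer = Callable[[str, str], float]


def keyword_overlap(query: str, doc: str) -> float:
    """Cheap first-stage scorer: number of distinct shared lowercase words."""
    return float(len(set(query.lower().split()) & set(doc.lower().split())))


def phrase_bonus(query: str, doc: str) -> float:
    """Toy 'reranker': word overlap plus a bonus when the exact query phrase appears."""
    bonus = 10.0 if query.lower() in doc.lower() else 0.0
    return keyword_overlap(query, doc) + bonus


def two_stage_search(query: str, docs: list[str], retriever: Scorer, reranker: Scorer,
                     candidates: int = 3, top_k: int = 1) -> list[str]:
    """Shortlist `candidates` docs with the retriever, then keep the reranker's top_k."""
    shortlist = sorted(docs, key=lambda d: retriever(query, d), reverse=True)[:candidates]
    return sorted(shortlist, key=lambda d: reranker(query, d), reverse=True)[:top_k]


if __name__ == "__main__":
    docs = ["risk factors in the equity market", "market risk overview", "cafeteria menu"]
    print(two_stage_search("market risk", docs, keyword_overlap, phrase_bonus, candidates=2))
```

Because each stage is just a function with the same signature, swapping the toy scorers for real embedding or ranking calls would not change the pipeline's structure. Here the first stage ties the two finance documents, and the reranker promotes "market risk overview" because it contains the exact phrase.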

Common industry use cases for the RAG Engine:

1. Financial services: personalized investment advice and risk assessment:

Problem: Financial advisors need to quickly consolidate large amounts of information (including client profiles, market data, regulatory filings, and internal research) in order to provide tailored investment advice and accurate risk assessments. Manually reviewing all this information is time-consuming and error-prone.

RAG Engine Solution: The RAG Engine ingests and indexes relevant data sources. Financial advisors can then query the system with client-specific information and investment objectives. The RAG Engine provides concise, evidence-based responses drawn from relevant documents, with citations to support its recommendations. This improves advisor efficiency, reduces the risk of human error, and makes advice more personalized. The system can also flag potential conflicts of interest or regulatory violations based on information in the ingested data.

2. Healthcare: accelerated drug discovery and personalized treatment protocols:

Problem: Drug discovery and personalized medicine rely heavily on the analysis of large datasets of clinical trials, research papers, patient records and genetic information. Sifting through this data to identify potential drug targets, predict a patient's response to treatment, or generate personalized treatment regimens is extremely challenging.

RAG Engine Solution: With appropriate privacy and security measures, the RAG Engine can ingest and index large amounts of biomedical literature and patient data. Researchers can then pose complex queries, such as "What are the possible side effects of drug X in a patient with genotype Y?" The engine synthesizes relevant information from a variety of sources, surfacing insights researchers may have missed in manual searches. For clinicians, the engine can help generate personalized treatment plan recommendations based on a patient's unique characteristics and medical history, supported by relevant research.

3. Legal: enhanced due diligence and contract review:

Problem: Legal professionals spend a great deal of time reviewing documents during the due diligence process, contract negotiations and litigation. Finding relevant provisions, identifying potential risks and ensuring compliance with regulations is time-consuming and requires deep expertise.

RAG Engine Solution: The RAG Engine ingests and indexes legal documents, case law, and regulatory information. Legal professionals can query the system to find specific clauses in contracts, identify potential legal risks, and research relevant precedents. The engine highlights inconsistencies, potential liabilities, and relevant case law, which greatly speeds up the review process and improves accuracy. This helps close transactions faster, reduce legal risk, and make more effective use of legal expertise.

Getting Started with the Vertex AI RAG Engine

Google offers a number of resources to help you get started, including:

- Getting Started Notebook:

- Documentation: Comprehensive documentation guides you through the setup and use of the RAG Engine.

- Integration: Examples with Vertex AI Vector Search, Vertex AI Feature Store, Pinecone, and Weaviate:

- https://github.com/GoogleCloudPlatform/generative-ai/blob/main/gemini/rag-engine/rag_engine_vector_search.ipynb

- https://github.com/GoogleCloudPlatform/generative-ai/blob/main/gemini/rag-engine/rag_engine_feature_store.ipynb

- https://github.com/GoogleCloudPlatform/generative-ai/blob/main/gemini/rag-engine/rag_engine_pinecone.ipynb

- https://github.com/GoogleCloudPlatform/generative-ai/blob/main/gemini/rag-engine/rag_engine_weaviate.ipynb

- Evaluation framework: Learn how to evaluate retrieval with the RAG Engine and perform hyperparameter tuning:
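Independently of that framework, the core idea of retrieval evaluation can be sketched briefly. A common metric is hit rate at k: the fraction of labeled test queries whose known relevant document appears among the top k results. The sketch below computes it for a toy keyword retriever and sweeps `top_k`, one of the hyperparameters such tuning typically covers. The corpus, labeled queries, retriever, and function names are all hypothetical.

```python
import re
from typing import Callable

# Illustrative sketch only. Hypothetical corpus and labeled evaluation set
# (each query is paired with the id of its relevant document).
CORPUS = {
    "d1": "expense reports are due within 30 days",
    "d2": "travel reimbursement limit is 500 dollars",
    "d3": "remote work requires manager approval",
}
LABELED = [
    ("when are expense reports due", "d1"),
    ("what is the reimbursement limit for travel", "d2"),
    ("who approves working from home", "d3"),
]


def words(text: str) -> set[str]:
    """Lowercased set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def overlap_retriever(query: str, k: int) -> list[str]:
    """Toy retriever: rank document ids by number of words shared with the query."""
    q = words(query)
    ranked = sorted(CORPUS, key=lambda doc_id: len(q & words(CORPUS[doc_id])), reverse=True)
    return ranked[:k]


def hit_rate_at_k(retriever: Callable[[str, int], list[str]],
                  labeled: list[tuple[str, str]], k: int) -> float:
    """Fraction of queries whose relevant document id appears in the top-k results."""
    hits = sum(1 for query, relevant in labeled if relevant in retriever(query, k))
    return hits / len(labeled)


if __name__ == "__main__":
    for k in (1, 2, 3):
        print(f"top_k={k}: hit rate = {hit_rate_at_k(overlap_retriever, LABELED, k):.2f}")
```

This prints hit rates of 0.67, 0.67, and 1.00. The third query only succeeds at `top_k=3` because "working from home" shares no words with "remote work"; vocabulary mismatches like this are one reason RAG systems typically rely on semantic embeddings rather than keyword overlap.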

Building fact-based generative AI

Vertex AI RAG Engine and Google Cloud's range of grounding solutions enable developers to build more reliable, accurate, and insightful generative AI applications. By leveraging these tools, you can unlock the full potential of LLMs and overcome the challenges of hallucination and limited knowledge, paving the way for wider adoption of generative AI in the enterprise. Choose the solution that best fits your needs and start building the next generation of intelligent applications.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
