GPUStack: Manage GPU clusters to run large language models and quickly integrate common LLM inference services.

General Introduction

GPUStack is an open-source GPU cluster management tool designed for running large language models (LLMs). It supports a wide range of hardware, including Apple MacBooks, Windows PCs, and Linux servers, making it easy to add GPUs and nodes as computing demands grow. GPUStack provides distributed inference and serving on single-node multi-GPU and multi-node setups, an OpenAI-compatible API, simplified user and API key management, and real-time monitoring of GPU performance and utilization. It ships as a lightweight Python package with minimal dependencies and low operational overhead, which makes it well suited to developers and researchers.

Feature List

- Broad hardware support: compatible with Apple Metal, NVIDIA CUDA, Ascend CANN, Moore Threads MUSA, and more.

- Distributed inference: supports single-node multi-GPU and multi-node inference and serving.

- Multiple inference backends: supports llama-box (llama.cpp) and vLLM.

- Lightweight Python package: minimal dependencies and operational overhead.

- OpenAI-compatible API: serves APIs that follow the OpenAI standard.

- User and API key management: simplifies managing users and API keys.

- GPU performance monitoring: monitors GPU performance and utilization in real time.

- Token usage and rate monitoring: tracks token usage and manages rate limits.

How to Use

Installation

Linux or macOS

- Open the terminal.

- Run the following command to install GPUStack:

curl -sfL https://get.gpustack.ai | sh -s -

- After installation, GPUStack runs as a service managed by systemd (Linux) or launchd (macOS).

Windows

- Run PowerShell as an administrator (avoid using PowerShell ISE).

- Run the following command to install GPUStack:

Invoke-Expression (Invoke-WebRequest -Uri "https://get.gpustack.ai" -UseBasicParsing).Content

Usage Guidelines

Initial Setup

- Access the GPUStack UI: open http://myserver in a browser.

- Log in with the default username admin and the initial password.

- To get the initial password on Linux or macOS, run:

cat /var/lib/gpustack/initial_admin_password

- To get the initial password on Windows, run:

Get-Content -Path "$env:APPDATA\gpustack\initial_admin_password" -Raw
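If you would rather fetch the initial password from a script than by hand, the lookup above can be sketched in Python. This is a minimal sketch that assumes a default installation using exactly the two file locations listed above. The helper names `initial_password_path` and `read_initial_password` are hypothetical and not part of GPUStack. Depending on file permissions, reading the Linux/macOS file may require elevated privileges.

```python
import os
import sys
from pathlib import Path
from typing import Optional


def initial_password_path(platform: str = sys.platform,
                          appdata: Optional[str] = None) -> Path:
    """Return the initial admin password file for a default install.

    Assumption: only the two default locations from the guide are handled;
    a custom data directory would place the file elsewhere.
    """
    if platform.startswith("win"):
        # Windows: %APPDATA%\gpustack\initial_admin_password
        base = appdata if appdata is not None else os.environ["APPDATA"]
        return Path(base) / "gpustack" / "initial_admin_password"
    # Linux and macOS: /var/lib/gpustack/initial_admin_password
    return Path("/var/lib/gpustack/initial_admin_password")


def read_initial_password(path: Optional[Path] = None) -> str:
    """Read the password file and strip any trailing newline."""
    path = path if path is not None else initial_password_path()
    return path.read_text(encoding="utf-8").strip()
```

On the GPUStack host, `print(read_initial_password())` prints the password you use together with the admin username.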

Creating API Keys

- After logging into the GPUStack UI, click on "API Keys" in the navigation menu.

- Click the "New API Key" button, enter a name, and save.

- Copy the generated API key and store it securely; it is shown only once, at creation time.

Using the API

- Set the API key as an environment variable:

export GPUSTACK_API_KEY=myapikey

- Use curl to call the OpenAI-compatible API:

curl http://myserver/v1-openai/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $GPUSTACK_API_KEY" \
  -d '{
    "model": "llama3.2",
    "messages": [
      {"role": "system", "content": "You are a helpful assistant."},
      {"role": "user", "content": "Hello!"}
    ],
    "stream": true
  }'

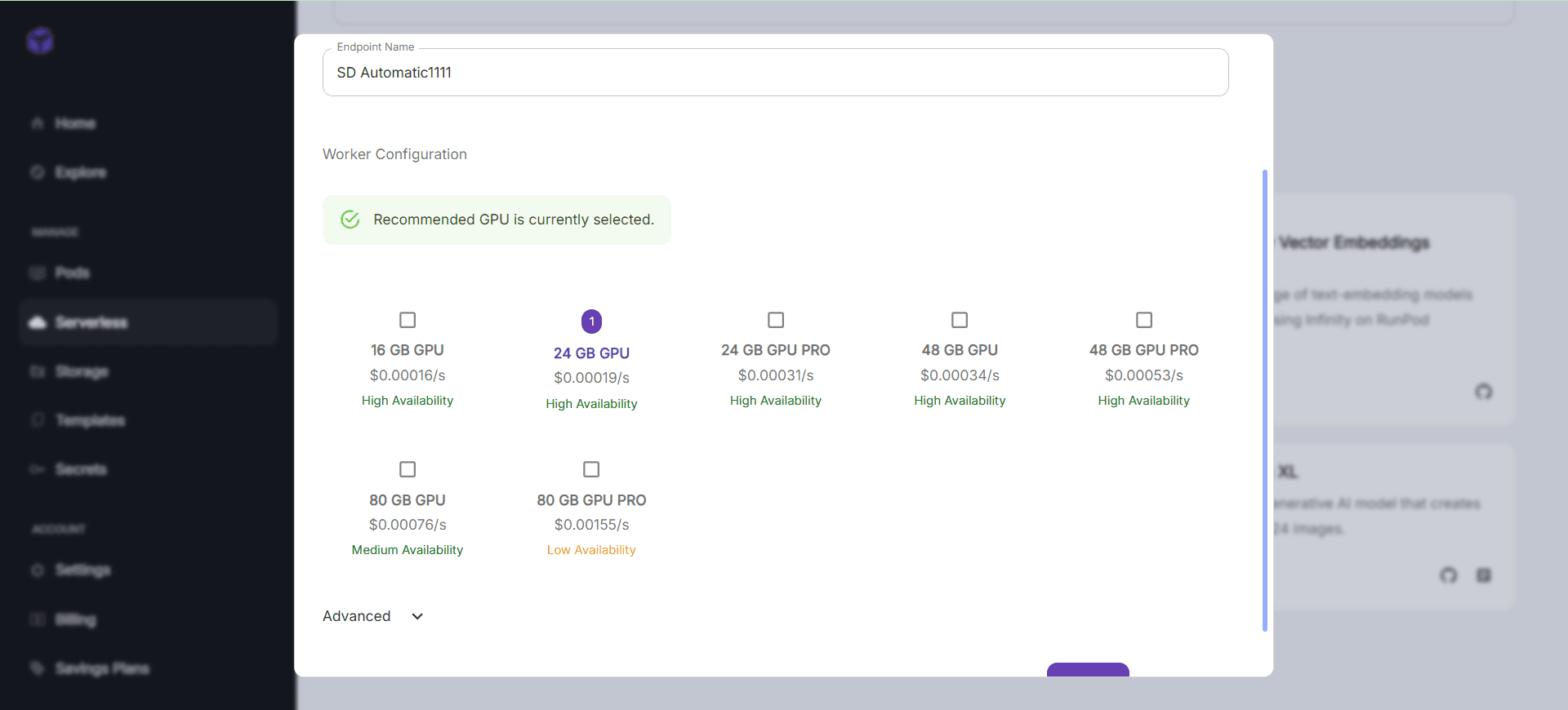
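The same request can be sent from Python using only the standard library. This sketch assumes that the `/v1-openai` endpoint streams its reply in the usual OpenAI server-sent-events format (`data: {...}` lines, terminated by `data: [DONE]`). `http://myserver` and `llama3.2` are the placeholders from the curl example. The function names `build_chat_request`, `iter_stream_deltas`, and `stream_chat` are hypothetical.

```python
import json
import os
import urllib.request
from typing import Dict, Iterable, Iterator, List


def build_chat_request(base_url: str, api_key: str, model: str,
                       messages: List[Dict[str, str]],
                       stream: bool = True) -> urllib.request.Request:
    """Build the same POST request that the curl example sends."""
    body = json.dumps({"model": model, "messages": messages, "stream": stream})
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1-openai/chat/completions",
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def iter_stream_deltas(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent events (assumed format)."""
    for raw in lines:
        line = raw.decode("utf-8").strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            # The first chunk usually carries only the role, not content
            content = choice.get("delta", {}).get("content")
            if content:
                yield content


def stream_chat(base_url: str, model: str, prompt: str) -> Iterator[str]:
    """Send one user message and yield the reply as it streams in."""
    api_key = os.environ["GPUSTACK_API_KEY"]  # exported in the earlier step
    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": prompt},
    ]
    request = build_chat_request(base_url, api_key, model, messages)
    with urllib.request.urlopen(request) as response:
        # Iterating an HTTP response yields it line by line, matching SSE framing
        yield from iter_stream_deltas(response)
```

For example, `for piece in stream_chat("http://myserver", "llama3.2", "Hello!"): print(piece, end="", flush=True)` prints the reply incrementally, much like the streamed curl output.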

Run and Chat

- Run the following command in a terminal to chat with the llama3.2 model:

gpustack chat llama3.2 "tell me a joke."

- Alternatively, click "Playground" in the GPUStack UI to chat interactively.

Monitoring and Management

- Monitor GPU performance and utilization in real time.

- Manage users and API keys, and track token usage and rates.
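GPUStack tracks token usage on the server side. If you also want a per-client tally, the sketch below sums the `usage` object (`prompt_tokens`, `completion_tokens`) that OpenAI-style non-streaming responses carry, and derives a rough completion-tokens-per-second rate from wall-clock time measured by the caller. `UsageTracker` and `UsageTotals` are hypothetical helpers, not GPUStack APIs. The sketch assumes each response includes a `usage` field; streamed responses may omit it.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict


@dataclass
class UsageTotals:
    """Running totals for one model (client-side only)."""
    prompt_tokens: int = 0
    completion_tokens: int = 0
    requests: int = 0
    seconds: float = 0.0

    @property
    def total_tokens(self) -> int:
        return self.prompt_tokens + self.completion_tokens

    @property
    def completion_tokens_per_second(self) -> float:
        # Rough rate: generated tokens over caller-measured wall-clock time
        return self.completion_tokens / self.seconds if self.seconds > 0 else 0.0


class UsageTracker:
    """Tally the "usage" block of OpenAI-style responses, keyed by model name."""

    def __init__(self) -> None:
        self.by_model: Dict[str, UsageTotals] = defaultdict(UsageTotals)

    def record(self, response: dict, elapsed_seconds: float) -> None:
        usage = response.get("usage") or {}  # may be missing, e.g. when streaming
        totals = self.by_model[response.get("model", "unknown")]
        totals.prompt_tokens += usage.get("prompt_tokens", 0)
        totals.completion_tokens += usage.get("completion_tokens", 0)
        totals.requests += 1
        totals.seconds += elapsed_seconds
```

Call `record` once per response with the time you measured around the request; the per-model totals then give a quick local cross-check against the usage figures shown in the GPUStack UI.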

Supported models and platforms

- Supported models: LLaMA, Mistral 7B, Mixtral MoE, Falcon, Baichuan, Yi, DeepSeek, Qwen, Phi, Grok-1, and others.

- Supported multimodal models: Llama3.2-Vision, Pixtral, Qwen2-VL, LLaVA, InternVL2, and others.

- Supported platforms: macOS, Linux, Windows.

- Supported accelerators: Apple Metal, NVIDIA CUDA, Ascend CANN, and Moore Threads MUSA, with planned future support for AMD ROCm, Intel oneAPI, and Qualcomm AI Engine.

Documentation and Community

- Official documentation: visit the GPUStack Documentation for the full guide and API reference.

- Contribution guide: read the Contribution Guidelines to learn how you can contribute to GPUStack.

© Copyright Notice

All rights to this article belong to AI Sharing Circle; please do not reproduce it without permission.
