Google Releases Its Own "Reasoning" AI Model: Gemini 2.0 Flash Thinking Experimental

Google has released what it's calling a new "reasoning" AI model - but it's still in the experimental stage, and from the looks of our brief test, it does have room for improvement.

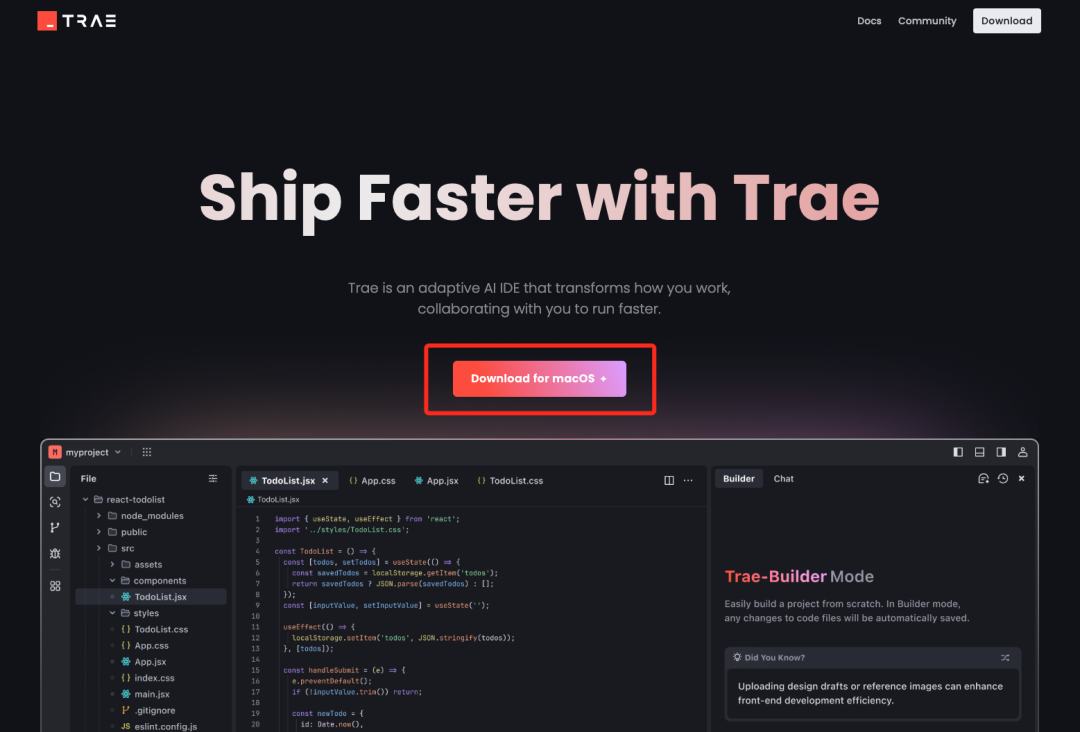

The new model, called Gemini 2.0 Flash Thinking Experimental (the name is a bit of a mouthful), is available in AI Studio, Google's AI prototyping platform. The model card describes it as "best suited for multimodal understanding, reasoning, and coding" and capable of "solving the most complex problems in areas such as programming, math, and physics."

In a post on X, Logan Kilpatrick, who is responsible for the AI Studio product, called Gemini 2.0 Flash Thinking Experimental Google's "first step in a journey of reasoning." In his own post, Jeff Dean, chief scientist at Google DeepMind, said that Gemini 2.0 Flash Thinking Experimental is trained to use thoughts to enhance its reasoning.

"We saw encouraging results when we increased the amount of inference-time computation," Dean said, referring to the amount of computation a model uses while it answers a question.

Gemini 2.0 Flash Thinking Experimental builds on Google's recently released Gemini 2.0 Flash model and appears to be similar in design to OpenAI's o1 and other so-called reasoning models. Unlike most AI models, reasoning models effectively check their own work, which helps them avoid some of the pitfalls that normally trip up AI models.

However, one drawback of reasoning models is that they usually take longer, often seconds to minutes longer, to arrive at a solution.

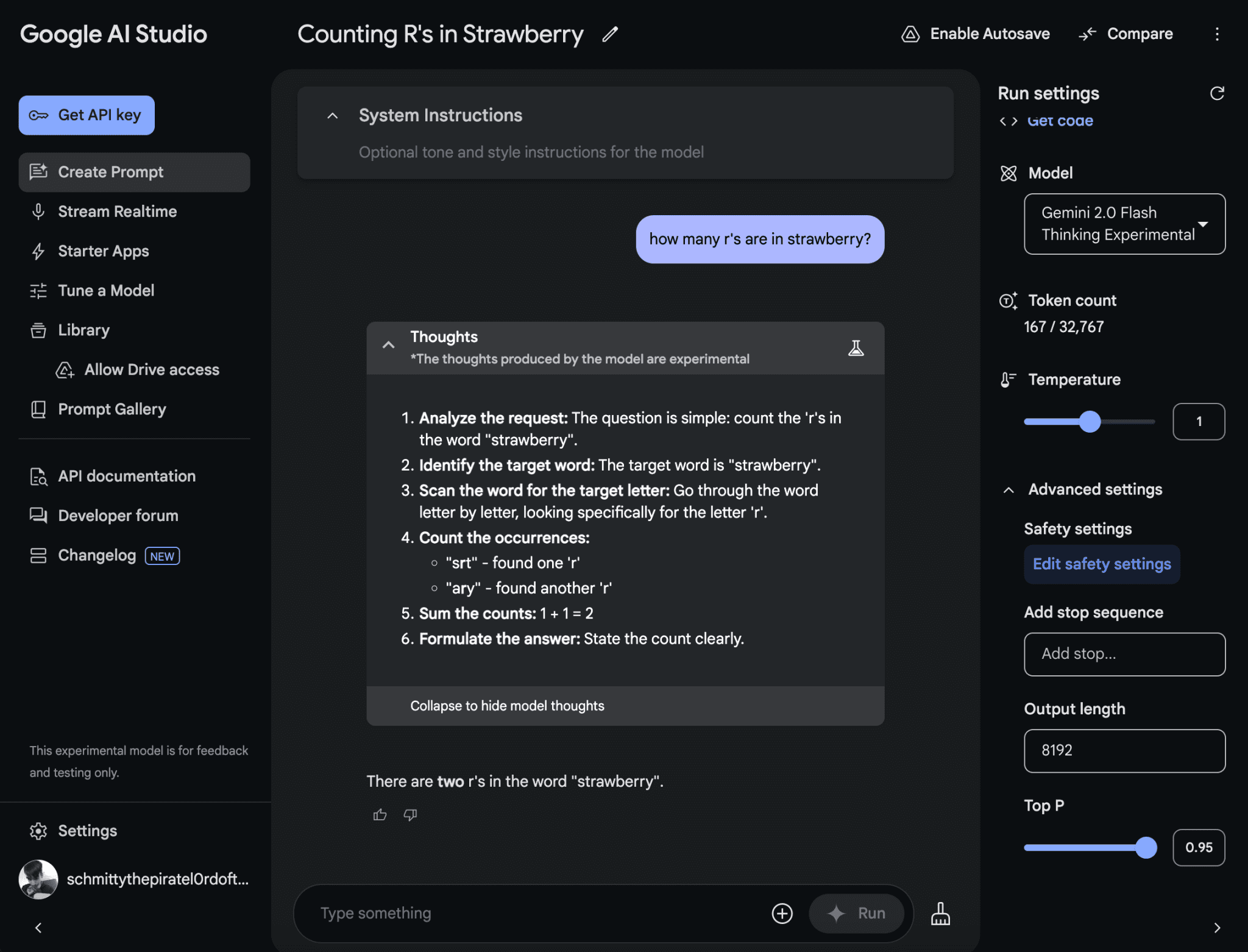

Given a prompt, Gemini 2.0 Flash Thinking Experimental pauses before responding, considers a number of related prompts, and "explains" its reasoning along the way. After a while, the model summarizes what it believes to be the most accurate answer.

Well, theoretically it should. When I asked Gemini 2.0 Flash Thinking Experimental how many Rs are in the word "strawberry", it replied "two".

Google's new reasoning model does not perform well when counting the letters in words and sometimes makes mistakes. Image credit: Google

Actual results may vary from person to person.
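For reference, the letter count in the strawberry test can be checked deterministically. The snippet below is a minimal sketch in Python; the helper name `count_letter` is hypothetical and is not something Google or the article provides.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    # Lowercase both sides so "R" and "r" are treated as the same letter.
    return word.lower().count(letter.lower())


# The word from the test above: s-t-r-a-w-b-e-r-r-y
print(count_letter("strawberry", "r"))  # prints 3
```

The correct answer is three, so the model's reply of "two" was off by one.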

After the release of o1, there was an explosion of reasoning models from rival AI labs, not just Google. In early November, DeepSeek, an AI research firm funded by quantitative traders, previewed its first reasoning model, DeepSeek-R1. That same month, Alibaba's Qwen team released what it claims is the first "open" challenger to o1.

In October, it was reported that Google had multiple teams working on reasoning models. Then, in a November report, The Information revealed that Google has at least 200 researchers focused on the technology.

What sparked the reasoning model boom? One reason is the search for new ways to improve generative AI. As my colleague Max Zeff recently reported, "brute-force" techniques for scaling up models no longer deliver the improvements they once did.

Not everyone is convinced that reasoning models are the best path forward. For one thing, they tend to be expensive because of the large amount of computing power required to run them. And while they have performed well on benchmarks so far, it's not clear whether reasoning models can sustain this rate of progress.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
