GLM-TTS - Zhipu AI's Open-Source, Industrial-Grade Speech Synthesis System

What is GLM-TTS

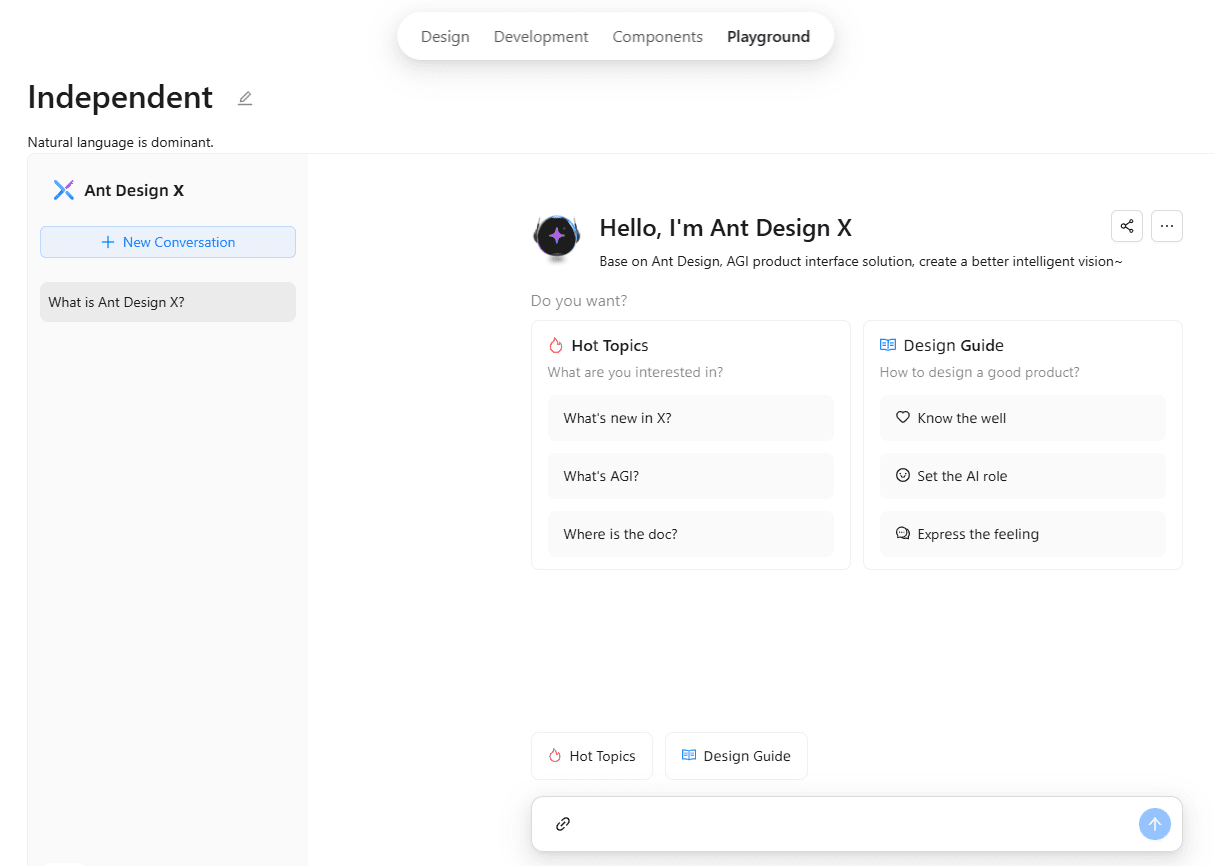

GLM-TTS is an open-source, industrial-grade speech synthesis system. It uses a two-stage generation architecture: the first stage converts text into a sequence of speech tokens, and the second stage converts those tokens into high-quality audio. The system can clone a voice from a speech sample only 3 seconds long, and it uses multi-reward reinforcement learning to improve the emotional expressiveness and naturalness of the generated speech. GLM-TTS ranks among the best open-source models in pronunciation accuracy, timbre similarity, and emotional expression: on the seed-tts-eval test set, for example, its character error rate (CER) is as low as 0.89% and its timbre similarity (Sim) is 76.4%.

GLM-TTS supports a variety of application scenarios, including dialect cloning, multi-emotion expression, and fine-grained pronunciation control for educational assessment. It also supports streaming inference for real-time interactive applications. Users can try it online at audio.z.ai or in the Zhipu Qingyan app, or access it as a service through the open platform API. The model weights, inference scripts, and other resources of GLM-TTS are open-sourced on GitHub, Hugging Face, and the ModelScope community, making it easy for developers to deploy the model and build on it.
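To make the two-stage, streaming design concrete, here is a minimal Python sketch of the data flow. The functions `text_to_tokens`, `tokens_to_audio`, `synthesize_streaming`, and `synthesize` are hypothetical stand-ins, not GLM-TTS's actual API: stage 1 is stubbed to emit one token per character, and stage 2 is stubbed to emit a fixed number of samples per token, so only the hand-off between the stages is meaningful.

```python
from typing import Iterator, List


def text_to_tokens(text: str) -> Iterator[int]:
    """Stage 1 (hypothetical stub): emit one discrete speech token per character."""
    for ch in text:
        yield ord(ch) % 1024  # assumed 1024-entry token vocabulary


def tokens_to_audio(tokens: List[int], samples_per_token: int = 4) -> List[float]:
    """Stage 2 (hypothetical stub): turn each token into a fixed number of samples."""
    audio: List[float] = []
    for t in tokens:
        audio.extend([t / 1024.0] * samples_per_token)
    return audio


def synthesize_streaming(text: str, chunk_size: int = 3) -> Iterator[List[float]]:
    """Streaming: decode audio chunk by chunk as soon as enough tokens exist."""
    buffer: List[int] = []
    for token in text_to_tokens(text):
        buffer.append(token)
        if len(buffer) == chunk_size:
            yield tokens_to_audio(buffer)
            buffer = []
    if buffer:  # flush the final, partial chunk
        yield tokens_to_audio(buffer)


def synthesize(text: str) -> List[float]:
    """Non-streaming: run stage 1 to completion, then stage 2 once."""
    return tokens_to_audio(list(text_to_tokens(text)))
```

For example, `list(synthesize_streaming("hello world"))` yields four chunks of 12, 12, 12, and 8 samples. Because this stub decodes each token independently, concatenating the chunks reproduces the non-streaming output exactly; a real neural decoder usually needs extra context around chunk boundaries to avoid audible seams, which this sketch does not model.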

Key Features of GLM-TTS

- Zero-Shot Voice Cloning: With only 3 seconds of speech, it replicates the speaker's timbre and speaking habits and quickly generates a personalized voice.

- Multi-Reward Reinforcement Learning: Rewards along several dimensions, including character error rate, timbre similarity, emotional expression, and laughter, significantly improve the naturalness and emotional expressiveness of the speech.

- High-Quality Speech Synthesis: The generated speech is natural and fluent, with accurate pronunciation and sound quality comparable to commercial systems, making it suitable for narration, dubbing, and many other scenarios.

- Multilingual and Emotional Support: It handles mixed Chinese-English text and can automatically match the emotional style to the text content, meeting diverse needs.

- Streaming Inference and Real-Time Interaction: It supports real-time streaming audio generation, making it suitable for online interactive applications such as intelligent customer service and voice assistants.

- Open Source and Flexible Deployment: Model weights, inference scripts, and other resources are open-sourced on GitHub, Hugging Face, and the ModelScope community, so developers can deploy the model quickly and build on it.

- Fine-Grained Pronunciation Control: Hybrid "phoneme + text" input resolves the pronunciation of polyphonic and rare characters, improving pronunciation accuracy.
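The multi-reward idea can be sketched as a weighted sum of per-sample scores. This is an illustration under stated assumptions, not GLM-TTS's published reward design: the `WEIGHTS` values are hypothetical, the ASR transcript and speaker embeddings are assumed to come from external models, and the emotion and laughter scores are taken as precomputed numbers in [0, 1]. CER is computed the standard way (character-level edit distance divided by reference length), and timbre similarity as the cosine similarity of two speaker embeddings, a common choice for Sim-style metrics.

```python
import math
from typing import Dict, List


def edit_distance(ref: str, hyp: str) -> int:
    """Character-level Levenshtein distance (substitutions, deletions, insertions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (free when characters match)
            )
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate = edit distance / number of reference characters."""
    return edit_distance(ref, hyp) / max(len(ref), 1)


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical weights for illustration only.
WEIGHTS: Dict[str, float] = {"cer": 1.0, "sim": 1.0, "emotion": 0.5, "laughter": 0.5}


def combined_reward(ref_text: str, asr_text: str,
                    ref_emb: List[float], gen_emb: List[float],
                    emotion_score: float, laughter_score: float) -> float:
    """Combine several reward signals into one scalar for RL training."""
    rewards = {
        "cer": 1.0 - min(cer(ref_text, asr_text), 1.0),  # lower CER -> higher reward
        "sim": cosine_similarity(ref_emb, gen_emb),
        "emotion": emotion_score,
        "laughter": laughter_score,
    }
    return sum(WEIGHTS[k] * v for k, v in rewards.items())
```

As a sense of scale, a CER of 0.89% means roughly one character error per 112 reference characters. A weighted sum is only the simplest way to merge the signals; how the weights are balanced determines which quality the policy favors.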

Core Benefits of GLM-TTS

- Efficient Voice Cloning: Only 3 seconds of speech are needed to accurately replicate the speaker's timbre and style, quickly generating a personalized voice.

- Rich Emotional Expression: Multi-reward reinforcement learning significantly improves the emotional expressiveness and naturalness of speech, and multiple emotional styles are supported.

- High-Quality Voice Output: The generated voice is natural and fluent, with accurate pronunciation and sound quality comparable to commercial systems, making it suitable for a wide range of professional scenarios.

- Multi-Language Support: It handles mixed Chinese-English text, meeting the needs of international applications.

- Real-Time Interaction: It supports streaming inference, making it suitable for real-time interactive applications such as intelligent customer service and voice assistants.

- Open Source and Ease of Use: Model weights and inference scripts are open source, enabling developers to deploy quickly and build on the model.

- Fine-Grained Pronunciation Control: Phoneme-level input resolves the pronunciation of polyphonic and rare characters, improving pronunciation accuracy.

- Low Training-Data Requirements: Excellent results are achieved with only 100,000 hours of training data, significantly reducing training costs.

- Flexible Voice Customization: LoRA fine-tuning makes it possible to quickly customize high-quality voices while reducing development costs.
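LoRA (low-rank adaptation) keeps a pretrained weight matrix W frozen and trains only a low-rank update, so a layer computes W·x + (alpha/r)·B·(A·x), where A has shape r×d_in and B has shape d_out×r. The pure-Python sketch below shows this generic technique on a single linear layer; the class name `LoRALinear`, the rank, alpha, and initialization scale are illustrative assumptions, not GLM-TTS's actual fine-tuning code.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """Frozen linear layer W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight: Matrix, rank: int = 2, alpha: float = 4.0, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # pretrained weights, kept frozen (d_out x d_in)
        # A starts small and random, B starts at zero, so the adapter is initially a no-op.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.weight, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self) -> int:
        """Only A and B are trained; W is not counted."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

With an 8×8 weight matrix and rank 2, the adapter trains 32 parameters instead of the 64 in W. At the size of a real model's layers the ratio is far smaller, which is what makes customizing a new voice comparatively cheap.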

Official GLM-TTS Links

- GitHub repository: https://github.com/zai-org/GLM-TTS

- Hugging Face model repository: https://huggingface.co/zai-org/GLM-TTS

Who GLM-TTS Is For

- Speech technology developers: Those who need high-quality speech synthesis for application development, such as intelligent voice assistants and voice interaction systems.

- Content creators: Those producing audiobooks, podcasts, and other audio content that requires rapidly generated, personalized speech.

- Education practitioners: Educational software and online courses can use it to provide vivid spoken explanations and personalized voice feedback.

- Customer service: Teams building intelligent customer service systems that offer natural, fluent voice interaction.

- Entertainment industry: Producing voice-overs for animation, games, film, and TV, and quickly generating voice content in multiple styles.

- Dialect and minority-language researchers: Using its dialect cloning capabilities to study and preserve dialects and less widely spoken languages.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
