GLM-4.6V - Zhipu AI's open-source multimodal large language model series

What is GLM-4.6V?

GLM-4.6V is an open-source series of multimodal large language models from Zhipu AI. The series comes in two versions:

- GLM-4.6V (106B-A12B): the base version, aimed at cloud and high-performance cluster deployments. It uses a Mixture-of-Experts (MoE) architecture with about 106 billion total parameters, of which 12 billion are activated per token, and is suited to large-scale multimodal workloads.

- GLM-4.6V-Flash (9B): a lightweight version for local deployment and low-latency applications. With 9 billion parameters, it can run on consumer-grade hardware and supports fast inference and real-time interaction.

The models perform strongly on more than 30 mainstream multimodal benchmarks, such as MMBench and MathVista, and reach state-of-the-art (SOTA) results among models of comparable parameter scale, placing GLM-4.6V at the forefront of current multimodal large models.
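The "106B-A12B" naming can be made concrete with some back-of-the-envelope arithmetic. The sketch below assumes weights are stored in BF16 (2 bytes per parameter) or quantized to 8 or 4 bits, and it ignores KV cache, activations, and runtime overhead, so real memory use will be higher. It illustrates that an MoE model uses only a fraction of its parameters for each token, yet all of them still have to be held in memory.

```python
# Back-of-the-envelope weight memory for the two GLM-4.6V variants.
# Assumption: counts weights only; KV cache, activations and runtime overhead are ignored.

BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gib(num_params: float, dtype: str) -> float:
    """Memory needed just to store the weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def active_fraction(total_params: float, active_params: float) -> float:
    """Share of an MoE model's parameters that is used for each token."""
    return active_params / total_params


# 106B total / 12B active, as stated for GLM-4.6V (106B-A12B).
print(f"GLM-4.6V: {active_fraction(106e9, 12e9):.1%} of parameters active per token")

for name, params in [("GLM-4.6V", 106e9), ("GLM-4.6V-Flash", 9e9)]:
    sizes = ", ".join(f"{dt} ~{weight_gib(params, dt):.1f} GiB" for dt in BYTES_PER_PARAM)
    print(f"{name}: {sizes}")
```

At BF16 this works out to roughly 197 GiB of weights for GLM-4.6V, which matches its cluster-oriented positioning, versus about 17 GiB for GLM-4.6V-Flash (around 4 GiB at 4-bit), which is within reach of high-end consumer GPUs, especially once quantized.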

Key features of GLM-4.6V

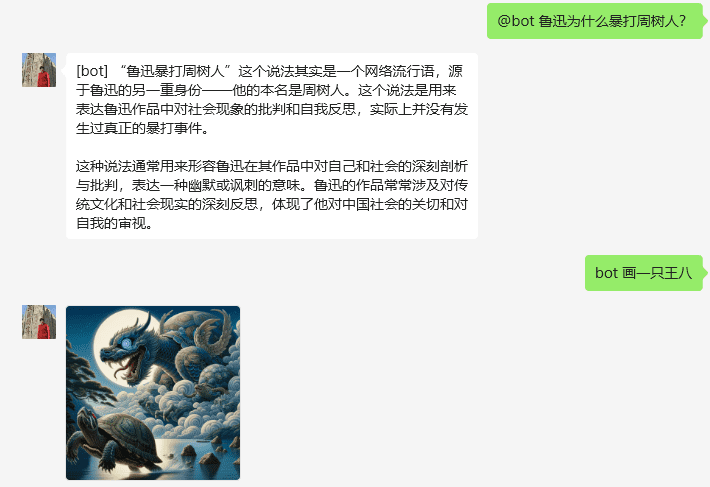

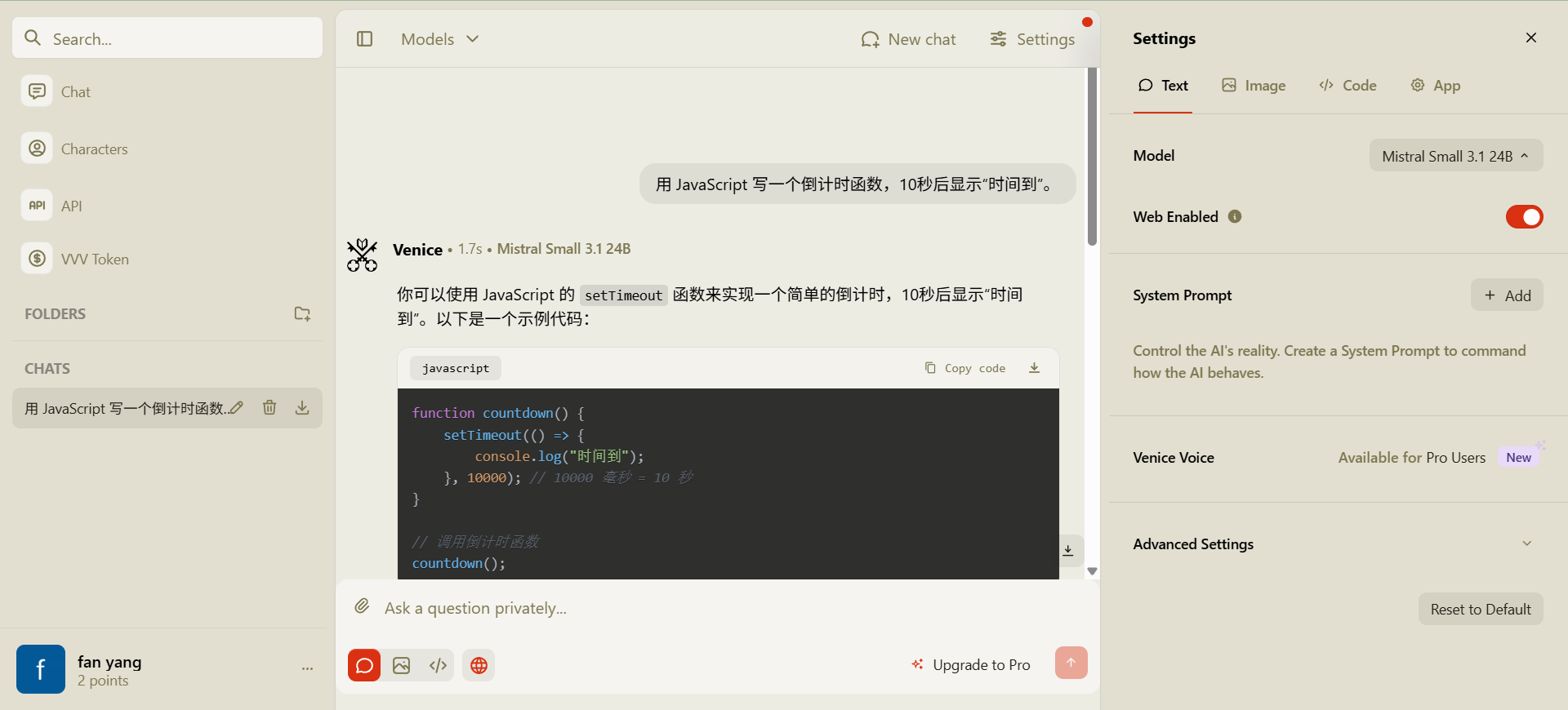

- Native multimodal tool calling: Images, screenshots, and similar inputs can be passed directly as tool parameters without first being converted to text, and visual results returned by tools can feed straight into subsequent reasoning, closing the perception-understanding-execution loop.

- Extra-long context window: The context window is extended to 128K tokens during training, letting the model handle long documents, videos, complex diagrams, and other multimodal content while retaining memory of earlier inputs and reasoning across modalities.

- High performance at low cost: API pricing is 50% lower than the previous-generation GLM-4.5V, at $1 per million input tokens and $3 per million output tokens, balancing performance and cost.

- Broad range of applications: It supports tasks such as interleaved image-text content creation, visually driven shopping guidance, front-end page replication and interactive development, and long-document and video understanding, providing a technical foundation for multimodal agent applications.
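To see what the quoted API prices mean for a real workload, here is a minimal cost estimator. It takes the article's figures ($1 per million input tokens, $3 per million output tokens) as parameters; the per-request token counts in the example are illustrative assumptions, since actual image token counts depend on resolution and the serving setup.

```python
# Prices as quoted in this article, in USD per million tokens.
INPUT_PRICE_PER_M = 1.0
OUTPUT_PRICE_PER_M = 3.0


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost of one API call in USD at the quoted per-million-token prices."""
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


# Illustrative workload (assumed numbers): 1,000 requests, each with ~2,000 input
# tokens (an image plus a prompt) and ~500 output tokens.
per_request = request_cost(2_000, 500)
print(f"per request: ${per_request:.4f}")
print(f"1,000 requests: ${per_request * 1_000:.2f}")
```

Because output tokens cost three times as much as input tokens, image-heavy prompts with short answers are where the pricing is most favorable.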

Core advantages of GLM-4.6V

- Native tool calling: For the first time, tool calling is natively integrated into the vision model. Images, screenshots, and other multimodal data can be passed directly as tool inputs without first being converted into text descriptions. Visual results returned by tools (e.g., charts or web page screenshots) can be analyzed directly by the model and folded into its reasoning chain, forming a complete "perception-understanding-execution" loop that significantly improves the efficiency and accuracy of multimodal tasks.

- Extra-long context processing: With a 128K-token context window, the model can handle documents of up to 150 pages, 200-page slide decks, or an hour of video. It retains memory of early inputs and performs cross-image and cross-document reasoning, which suits scenarios such as long-document analysis and video understanding.

- Highly accurate visual understanding: It performs well on visual tasks such as chart recognition, handwriting recognition, character recognition, and object material identification, with significantly fewer hallucinations. It accepts images of arbitrary aspect ratio at up to 4K resolution and handles non-standard image sizes (e.g., UI screenshots, scanned documents) well.

- Multimodal output: Output is no longer limited to text. The model can generate interleaved image-text content that includes images, tables, web page screenshots, and more, and can filter, integrate, and quality-check these results, which suits content creation, illustrated report generation, and similar scenarios.
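The tool-calling loop described above can be sketched as an OpenAI-style Chat Completions request, assuming GLM-4.6V is served behind an OpenAI-compatible endpoint (vLLM and SGLang both offer one). The screenshot goes in as an image content part rather than a text description, and the tool schema accepts an image reference so the model can hand the image straight to the tool. The `crop_image` tool, its parameters, the placeholder PNG bytes, and the `zai-org/GLM-4.6V` model name are illustrative assumptions; the exact format GLM-4.6V expects for images inside tool calls and tool results should be checked in the official repository.

```python
import base64
import json


def image_part(png_bytes: bytes) -> dict:
    """Wrap raw PNG bytes as an OpenAI-style image_url content part (base64 data URL)."""
    b64 = base64.b64encode(png_bytes).decode("ascii")
    return {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{b64}"}}


# Hypothetical tool: takes an image reference directly instead of a text caption.
CROP_TOOL = {
    "type": "function",
    "function": {
        "name": "crop_image",  # hypothetical tool name
        "description": "Crop a region of an input image and return the cropped image.",
        "parameters": {
            "type": "object",
            "properties": {
                "image_url": {"type": "string", "description": "URL or data URL of the image"},
                "box": {
                    "type": "array",
                    "items": {"type": "integer"},
                    "minItems": 4,
                    "maxItems": 4,
                    "description": "[x1, y1, x2, y2] in pixels",
                },
            },
            "required": ["image_url", "box"],
        },
    },
}


def build_request(model: str, screenshot: bytes, question: str) -> dict:
    """Build a chat request that pairs an image with a question and offers the tool."""
    return {
        "model": model,
        "messages": [
            {"role": "user", "content": [image_part(screenshot), {"type": "text", "text": question}]}
        ],
        "tools": [CROP_TOOL],
        "tool_choice": "auto",
    }


# Placeholder bytes (just the PNG signature) stand in for a real screenshot.
payload = build_request("zai-org/GLM-4.6V", b"\x89PNG\r\n\x1a\n", "Zoom in on the chart legend.")
print(json.dumps(payload, indent=2))
```

In a full loop, the application would execute any `crop_image` call the model returns and send the resulting image back so the model can continue reasoning over it.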

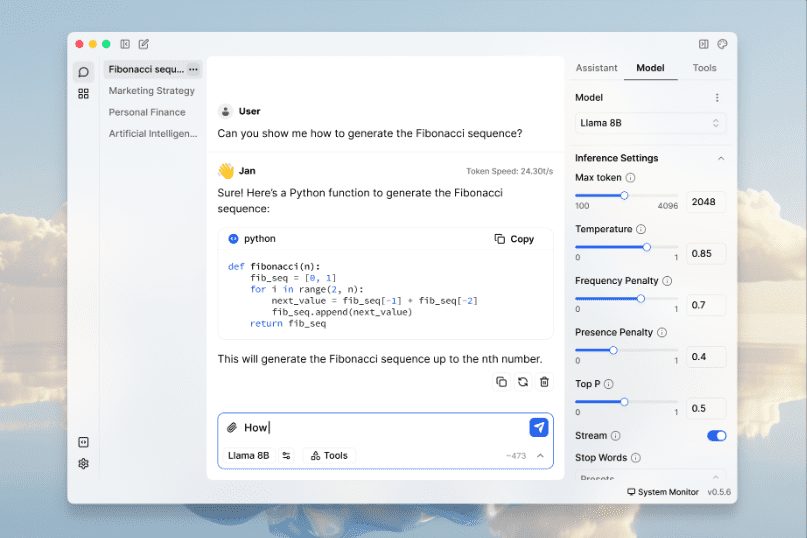

- Programming and front-end development support: Optimized for front-end work, the model can take an uploaded web page screenshot or design mockup and generate pixel-accurate HTML/CSS code. It supports multiple rounds of screenshot-based visual debugging and can automatically locate and fix the relevant code fragments, speeding up front-end development.

- Cost-effectiveness: API pricing is 50% lower than the previous-generation model, at just $1 per million input tokens and $3 per million output tokens, which makes it well suited to workloads with heavy image input. The lightweight 9B version runs on consumer GPUs, lowering the barrier to deployment.

- Open source and ecosystem support: The model is fully open source, with model weights, inference code, and example projects. It works with mainstream inference frameworks (e.g., vLLM, SGLang, xLLM) and can be deployed on GPUs as well as Chinese domestic NPUs, making it easy for developers to customize it and integrate it into existing systems.

- Leading performance: GLM-4.6V performs strongly on more than 30 mainstream multimodal benchmarks, including MMBench, MathVista, and OCRBench. The 9B GLM-4.6V-Flash outperforms Qwen3-VL-8B overall, and the 106B GLM-4.6V outperforms Qwen3-VL-235B, a model with roughly twice as many parameters.
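The multi-round, screenshot-driven front-end workflow can be sketched as a growing chat history sent to an OpenAI-compatible `/chat/completions` endpoint, such as one exposed by vLLM (which listens on port 8000 by default) or SGLang. Round one sends the design image; each later round keeps the model's previous code and adds a screenshot of how that code actually rendered. The prompts, helper names, and placeholder image bytes are hypothetical, and rendering and screenshotting the page are left to the caller.

```python
import base64
import json
import urllib.request


def image_part(png_bytes: bytes) -> dict:
    """Wrap raw PNG bytes as an OpenAI-style image_url content part."""
    b64 = base64.b64encode(png_bytes).decode("ascii")
    return {"type": "image_url", "image_url": {"url": f"data:image/png;base64,{b64}"}}


def start_conversation(design_png: bytes) -> list:
    """Round 1: ask for HTML/CSS that reproduces a design screenshot."""
    return [{
        "role": "user",
        "content": [image_part(design_png),
                    {"type": "text", "text": "Reproduce this page as a single HTML file with inline CSS."}],
    }]


def add_feedback_round(messages: list, model_reply: str, rendered_png: bytes, note: str) -> list:
    """Later rounds: keep the model's last code, then show a screenshot of how it rendered."""
    return messages + [
        {"role": "assistant", "content": model_reply},
        {"role": "user", "content": [image_part(rendered_png), {"type": "text", "text": note}]},
    ]


def post_chat(base_url: str, model: str, messages: list) -> dict:
    """POST the conversation to an OpenAI-compatible server and return the parsed JSON reply."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


# Offline usage: build a two-round conversation without sending it.
msgs = start_conversation(b"design-placeholder")
msgs = add_feedback_round(msgs, "<html>...</html>", b"render-placeholder",
                          "The header is misaligned compared with the design; fix only the CSS.")
print(len(msgs), [m["role"] for m in msgs])
```

Against a running server, `post_chat("http://localhost:8000/v1", "zai-org/GLM-4.6V", msgs)` would send the conversation; the model name (assumed here) must match whatever name the server was launched with.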

What are the official links for GLM-4.6V?

- GitHub repository: https://github.com/zai-org/GLM-V

- Hugging Face model collection: https://huggingface.co/collections/zai-org/glm-46v

- Technical blog: https://z.ai/blog/glm-4.6v

Who GLM-4.6V is for

- Front-end developers: The model's improved front-end replication and multi-round visual interactive development let developers upload web page screenshots or design drafts to generate high-quality HTML/CSS/JS code and refine it over multiple rounds, shortening the path from design draft to runnable page and making front-end development more efficient.

- Document and video analysts: The model can process long documents (e.g., listed companies' financial reports) and long videos. It can extract core metrics consistently across documents, pick up implicit signals in reports and charts, and automatically compile them into a comparative analysis table. For long videos, it can build a global overview, perform fine-grained reasoning, and pinpoint key moments, which suits the study of complex content.

- Developers of multimodal customer service systems: By combining visual and textual information, the model can give precise answers and suggestions across multi-turn conversations, improving customer service efficiency and giving users more complete and accurate help.

- Researchers and data analysts: In research and data analysis, the model can handle complex multimodal material such as papers and research reports, helping to extract key information, analyze data, draw inferences, and support research and decision-making.

- Educators: It can help create teaching content, such as illustrated teaching materials, and parse complex learning documents, helping students better understand and master the material.

- AI developers and researchers: As an open-source model, it gives AI developers and researchers a powerful technical foundation for further research and development and for exploring new applications and innovations in multimodal AI.

© Copyright notice

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
