GLM-4.5V: Open-Source Multimodal Visual Reasoning Model from Zhipu AI

What is GLM-4.5V?

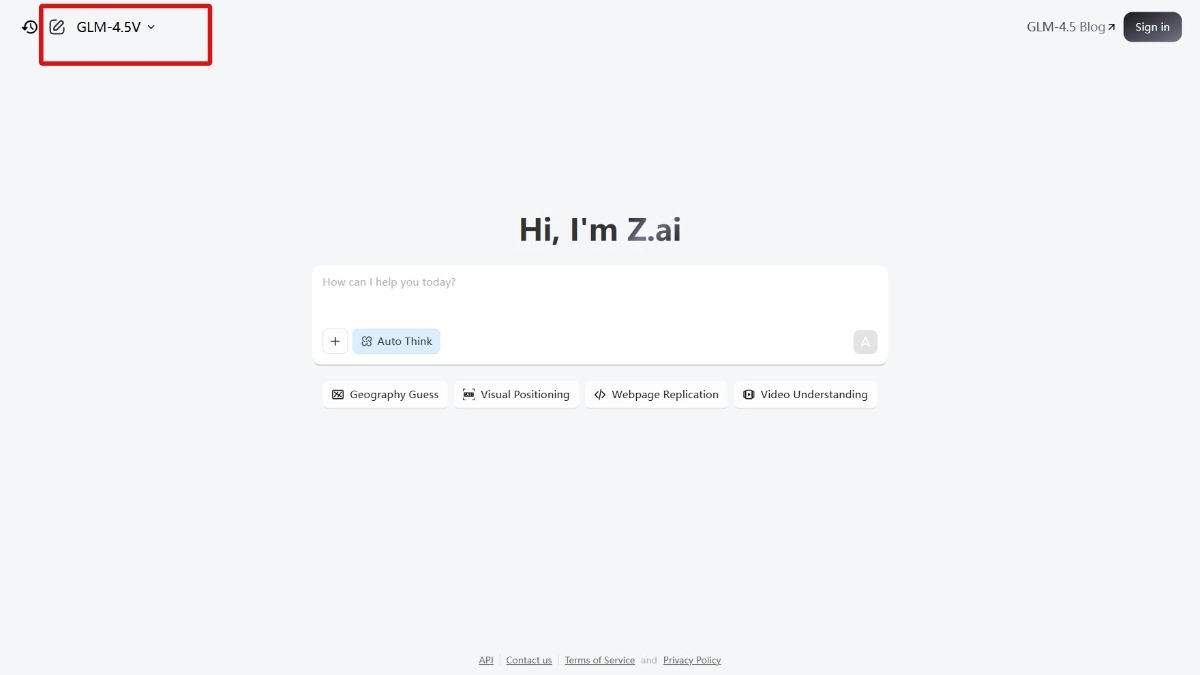

GLM-4.5V is an open-source visual reasoning model released by Zhipu AI, with 106 billion total parameters and 12 billion activated parameters. It is trained on top of Zhipu's new-generation text base model, GLM-4.5-Air, and has strong visual understanding and reasoning capabilities across images, videos, and documents. The model covers multimodal tasks such as visual Q&A, image captioning, video comprehension, and web front-end replication, and it can switch between a fast-response mode and a deep-reasoning mode. GLM-4.5V is reported to achieve SOTA performance on 41 public vision-language benchmarks. It reaches full-scenario visual reasoning through efficient hybrid training, giving enterprises and developers a cost-effective multimodal AI solution.

Main Features of GLM-4.5V

- Image reasoning: Understands objects, relationships between people, and background information in complex scenes.

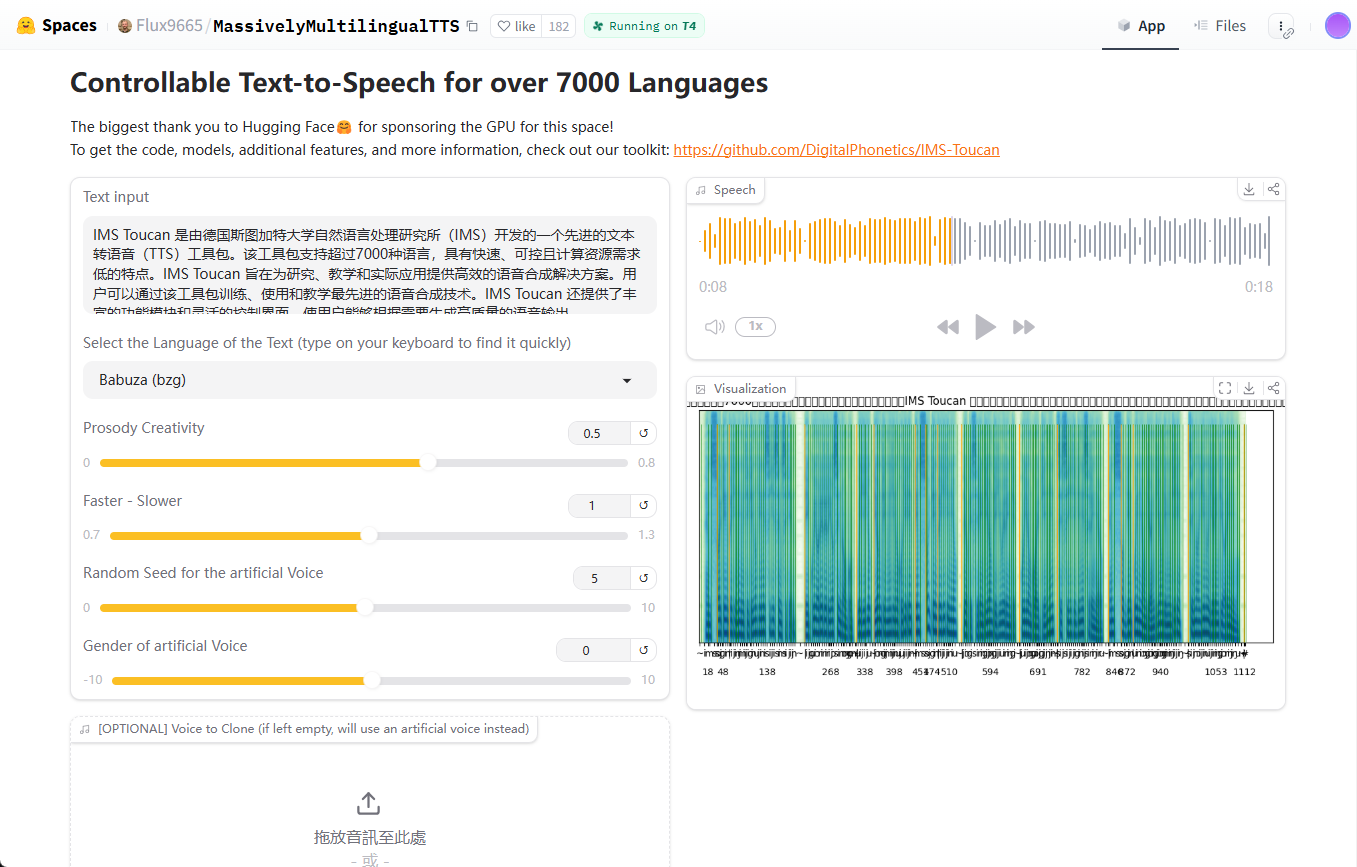

- Video comprehension: Analyzes long videos, including shot segmentation, event recognition, and key information extraction.

- Multimodal interaction:

  - Text and visual integration: Generates images from text descriptions and text descriptions from images.

  - Cross-modal generation: Converts visual content to text and text content to visual content.

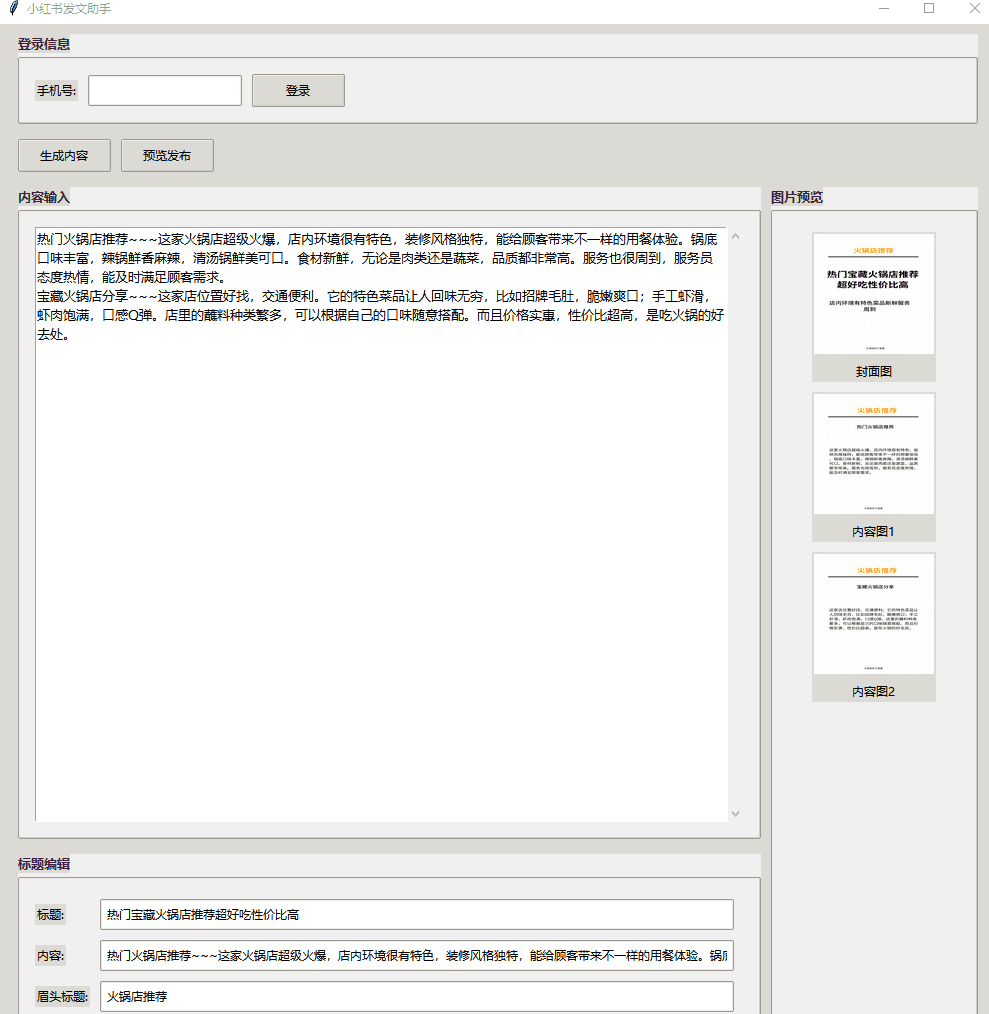

- Web front-end replication: Generates front-end code from web design mockups for rapid web development. Users upload a webpage screenshot or a screen recording of the interaction, and the model produces complete HTML, CSS, and JavaScript code.

- Image search games: Supports image-based search and matching tasks, such as quickly finding a specific target image in a complex scene. This suits security surveillance, smart retail, and entertainment game development.

- Complex document interpretation: Handles long documents and complex charts, extracting, summarizing, and translating their content. The model can also offer its own perspective on the material rather than only extracting information.
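As an illustration of the web front-end replication feature, the sketch below builds a request body that pairs a screenshot with a text instruction. It is a minimal sketch, not the official client: the message layout follows the widely used OpenAI-style chat format, and the `glm-4.5v` model identifier and the `thinking` field (used here to switch between fast response and deep reasoning) are assumptions that should be checked against the official API documentation. No network call is made.

```python
import base64
import json


def build_screenshot_request(image_bytes: bytes, deep_thinking: bool = True) -> dict:
    """Build a chat-style request asking the model to rebuild a page from a screenshot."""
    # Encode the screenshot as a data URL so it can travel inside JSON.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:image/png;base64,{encoded}"

    return {
        "model": "glm-4.5v",  # assumed model identifier
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "image_url", "image_url": {"url": data_url}},
                    {
                        "type": "text",
                        "text": "Recreate this page as HTML, CSS and JavaScript.",
                    },
                ],
            }
        ],
        # Assumed field for switching between fast response and deep reasoning.
        "thinking": {"type": "enabled" if deep_thinking else "disabled"},
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real PNG file read from disk.
    payload = build_screenshot_request(b"\x89PNG placeholder bytes")
    print(json.dumps(payload, indent=2)[:300])
```

In practice the bytes would come from a real screenshot file, and the resulting dictionary would be sent as the JSON body of a chat completion request to whichever endpoint hosts the model.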

Core Advantages of GLM-4.5V

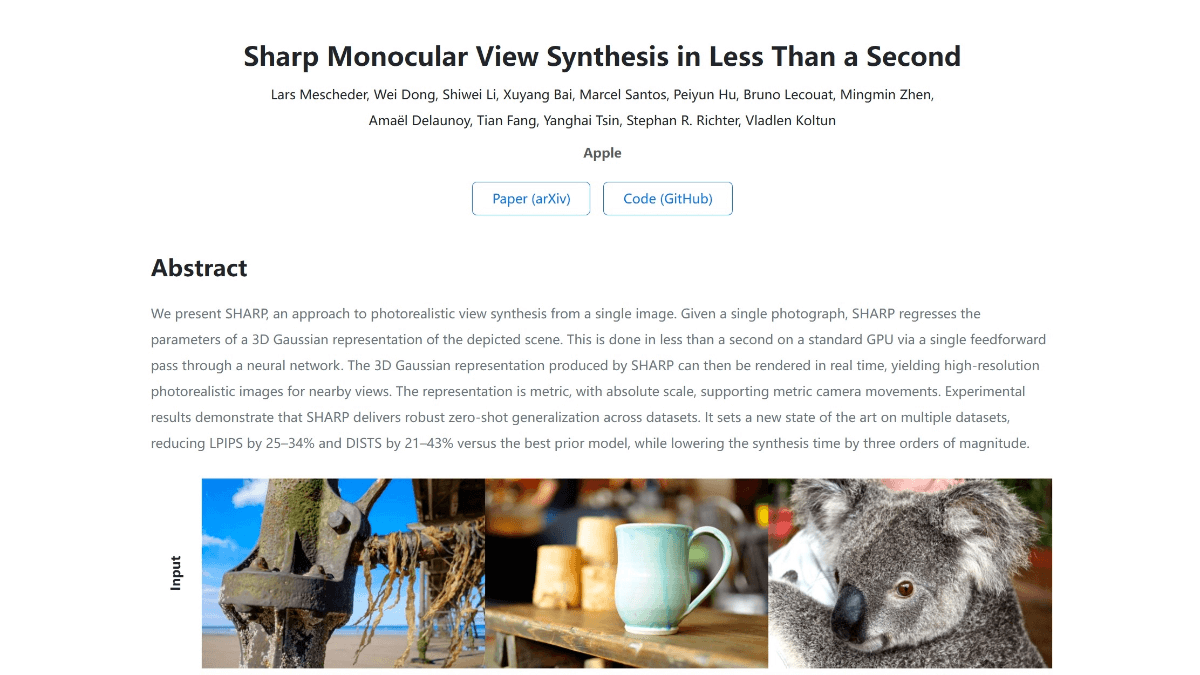

- Strong visual comprehension and reasoning: Understands complex visual content in depth, including images, videos, and documents. The model recognizes objects, scenes, and relationships between people, and it also performs higher-level reasoning, such as inferring context from subtle clues in an image.

- Multimodal interaction and generation: Integrates text and visual content, generating images from text descriptions and text descriptions from images. This cross-modal generation works in both directions, from visual content to text and from text to visual content.

- Efficient task adaptation and reasoning modes: Through efficient hybrid training, the model gains full-scenario visual reasoning and handles a wide range of tasks, including image reasoning, video comprehension, GUI tasks, and parsing of complex charts and long documents.

- Cost-effective and fast to deploy: Balances inference speed and deployment cost while maintaining high accuracy. API pricing starts at RMB 2 per million input tokens and RMB 6 per million output tokens, with a response speed of 60-80 tokens/s.

- Open source with broad community support: The model is available through a GitHub repository, a Hugging Face model repository, and the ModelScope community, so developers can get started quickly and build on it. A desktop assistant application supports real-time screenshots and screen recording, making it easy to try out the model's capabilities.

- Wide range of application scenarios: Targets a variety of real-world uses, including web front-end replication, visual Q&A, image search games, video comprehension, image captioning, and complex document interpretation.
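The pricing and speed figures quoted above lend themselves to a quick back-of-the-envelope estimate. The sketch below is illustrative only: it assumes prices are charged linearly per token (2 and 6 price units per million input and output tokens, in whatever currency the price list uses) and that output streams at a steady 60-80 tokens/s. The function names are hypothetical helpers, not part of any SDK.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float = 2.0,
                  output_price_per_m: float = 6.0) -> float:
    """Return the cost of one call in the same currency unit as the prices."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


def estimate_generation_seconds(output_tokens: int,
                                slow_tps: float = 60.0,
                                fast_tps: float = 80.0) -> tuple[float, float]:
    """Return (best case, worst case) seconds needed to stream the output tokens."""
    return output_tokens / fast_tps, output_tokens / slow_tps


if __name__ == "__main__":
    cost = estimate_cost(input_tokens=10_000, output_tokens=2_000)
    best, worst = estimate_generation_seconds(2_000)
    print(f"cost: {cost:.3f} price units")
    print(f"generation time: {best:.0f}-{worst:.0f} s")
```

Under these assumptions, a request with 10,000 input tokens and 2,000 output tokens costs about 0.032 price units and takes roughly 25 to 33 seconds to generate. Real bills and latencies depend on the provider's actual metering and load.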

Where are GLM-4.5V's official project pages?

- GitHub repository: https://github.com/zai-org/GLM-V/

- Hugging Face model collection: https://huggingface.co/collections/zai-org/glm-45v-68999032ddf8ecf7dcdbc102

- Technical report: https://github.com/zai-org/GLM-V/tree/main/resources/GLM-4.5V_technical_report.pdf

- Desktop assistant application: https://huggingface.co/spaces/zai-org/GLM-4.5V-Demo-App

Who GLM-4.5V Is For

- Developers: Gain multimodal capabilities for quickly building applications such as visual Q&A, image generation, and video analytics.

- Enterprise users: Apply visual understanding to business scenarios such as security surveillance, smart retail, and video recommendation.

- Researchers: Use GLM-4.5V's open-source models and datasets for research in multimodal reasoning, vision-language fusion, and related areas.

- General users: Use features such as image description and video comprehension to create content more efficiently and find information faster.

- Educators and students: Use the model to support teaching and learning and to enrich the educational experience.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
