glhf.chat: run (almost) any open-source large model, with free access to GPU resources and API services during the beta period

General Introduction

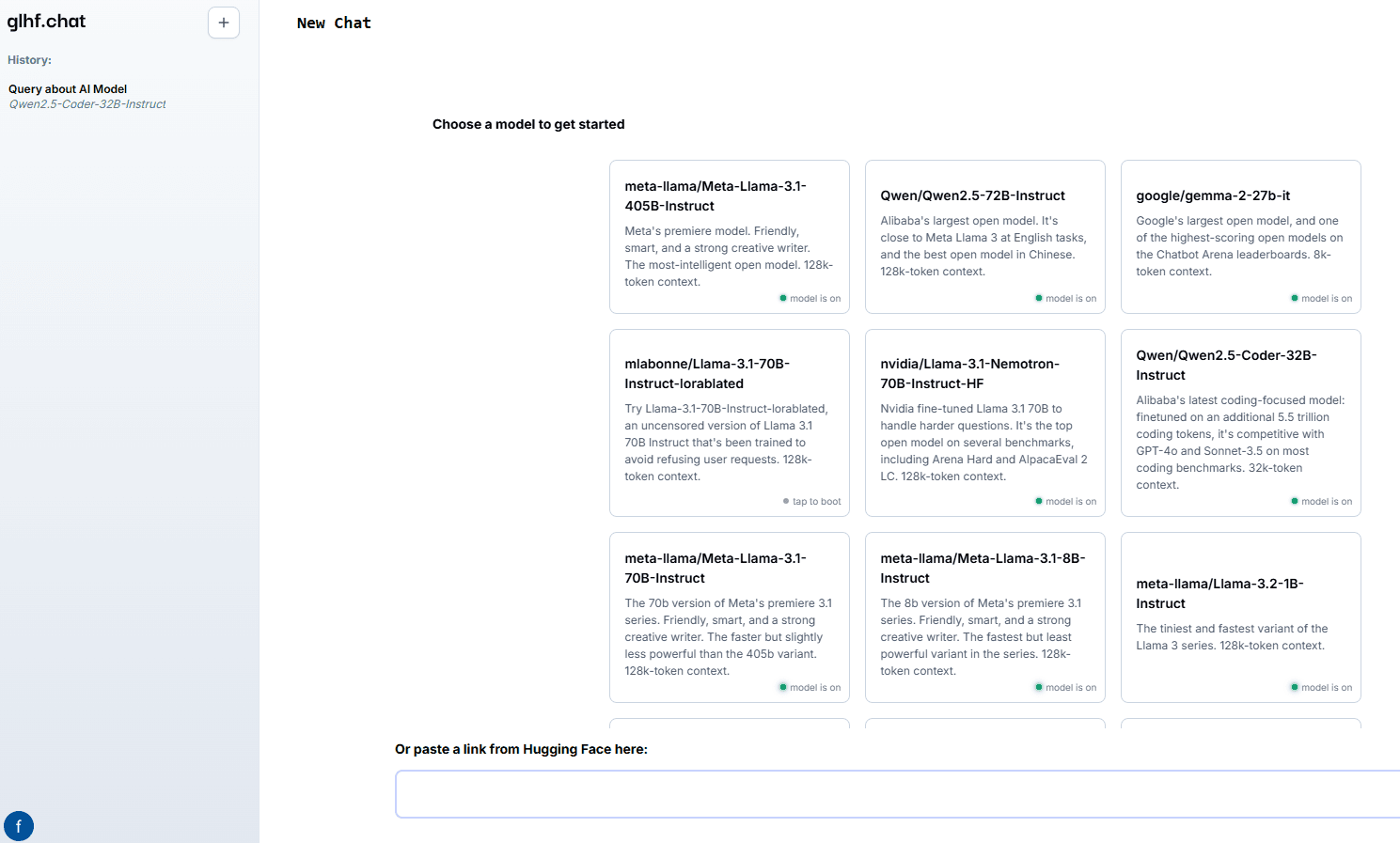

Good Luck Have Fun (glhf.chat) is a website that provides a chat service for open-source large models. The platform lets users run almost any open-source large model using vLLM and a custom auto-scaling GPU scheduler. Users simply paste a link to a Hugging Face repository and then interact with the model through the chat interface or an OpenAI-compatible API. The service is free during the beta period and is expected to be priced below major cloud GPU providers afterwards.

Function List

- Supports a variety of open-source large models, including Meta Llama, Qwen, Mixtral, and others

- Provides access to up to eight NVIDIA A100 80 GB GPUs

- Automatic proxying of inference services for popular models

- On-demand startup and shutdown of clusters to optimize resource usage

- Provides OpenAI-compatible APIs for easy integration
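To put the eight-GPU figure in perspective, the following back-of-the-envelope sketch estimates whether a model's weights alone would fit in the combined GPU memory. It assumes 80 GB per GPU as listed above and counts only weight storage; the KV cache, activations, and runtime overhead that a real vLLM deployment also needs are ignored. The function name and its parameters are illustrative and are not something the platform exposes.

```python
def weights_fit(params_billion: float, bytes_per_param: float,
                gpus: int = 8, gb_per_gpu: int = 80) -> bool:
    """Return True if the raw weights fit in the combined GPU memory.

    Rough estimate only: ignores KV cache, activations, and runtime overhead.
    """
    # One billion parameters at one byte each is 1 GB (decimal), so the
    # weight footprint in GB is simply params_billion * bytes_per_param.
    weights_gb = params_billion * bytes_per_param
    total_gb = gpus * gb_per_gpu
    return weights_gb <= total_gb


# 70B parameters at 16-bit precision: 140 GB of weights vs. 640 GB available.
print(weights_fit(70, 2))    # True
# 405B parameters at 16-bit precision: 810 GB of weights, more than 640 GB.
print(weights_fit(405, 2))   # False
# The same 405B model at 8 bits per parameter: 405 GB of weights.
print(weights_fit(405, 1))   # True
```

In practice the usable headroom is smaller than this estimate suggests, so a model that only barely passes the check may still fail to load.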

Usage Guide

Installation and use

- Register & Login: Visit glhf.chat, register for an account, and log in once registration is complete.

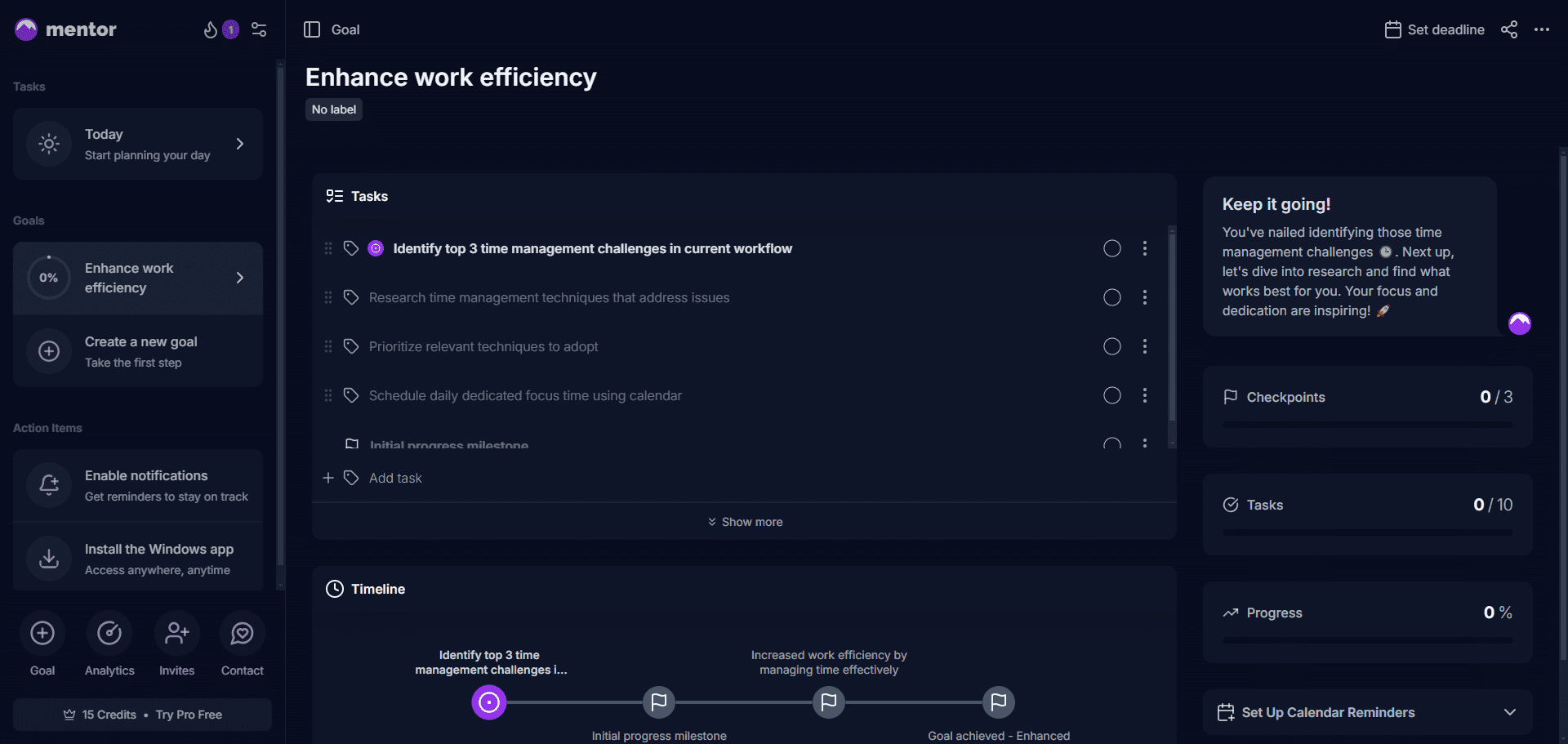

- Select Model: Select the desired large model on the platform's homepage. Supported models include Meta Llama, Qwen, Mixtral, and more.

- Paste the link: Paste the Hugging Face repository link into the specified location and the platform will automatically load the model.

- Using the Chat Interface: Interact with the model through the chat interface provided on the website. Enter questions or commands and the model generates responses in real time.

- API integration: Integrate the platform's features into your own applications using the OpenAI-compatible APIs; see the website's Help Center for API documentation.
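As an illustration of what the "Paste the link" step works with, the sketch below extracts the `owner/name` repository identifier from a Hugging Face URL. How the platform itself parses the link is not described here, so the helper `repo_id_from_link` is hypothetical and only shows which part of the link identifies the model.

```python
from urllib.parse import urlparse


def repo_id_from_link(link: str) -> str:
    """Extract 'owner/name' from a Hugging Face model repository URL."""
    parsed = urlparse(link.strip())
    if parsed.netloc not in ("huggingface.co", "www.huggingface.co"):
        raise ValueError(f"not a Hugging Face link: {link!r}")
    # Keep only the first two path segments; anything after them
    # (for example /tree/main) points inside the repository.
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2 or parts[0] in ("datasets", "spaces"):
        raise ValueError(f"link does not name a model repository: {link!r}")
    return f"{parts[0]}/{parts[1]}"


print(repo_id_from_link("https://huggingface.co/Qwen/Qwen2-72B-Instruct/tree/main"))
# Qwen/Qwen2-72B-Instruct
```

A client could run a check like this before submitting a link, so that dataset or Space URLs are rejected early.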

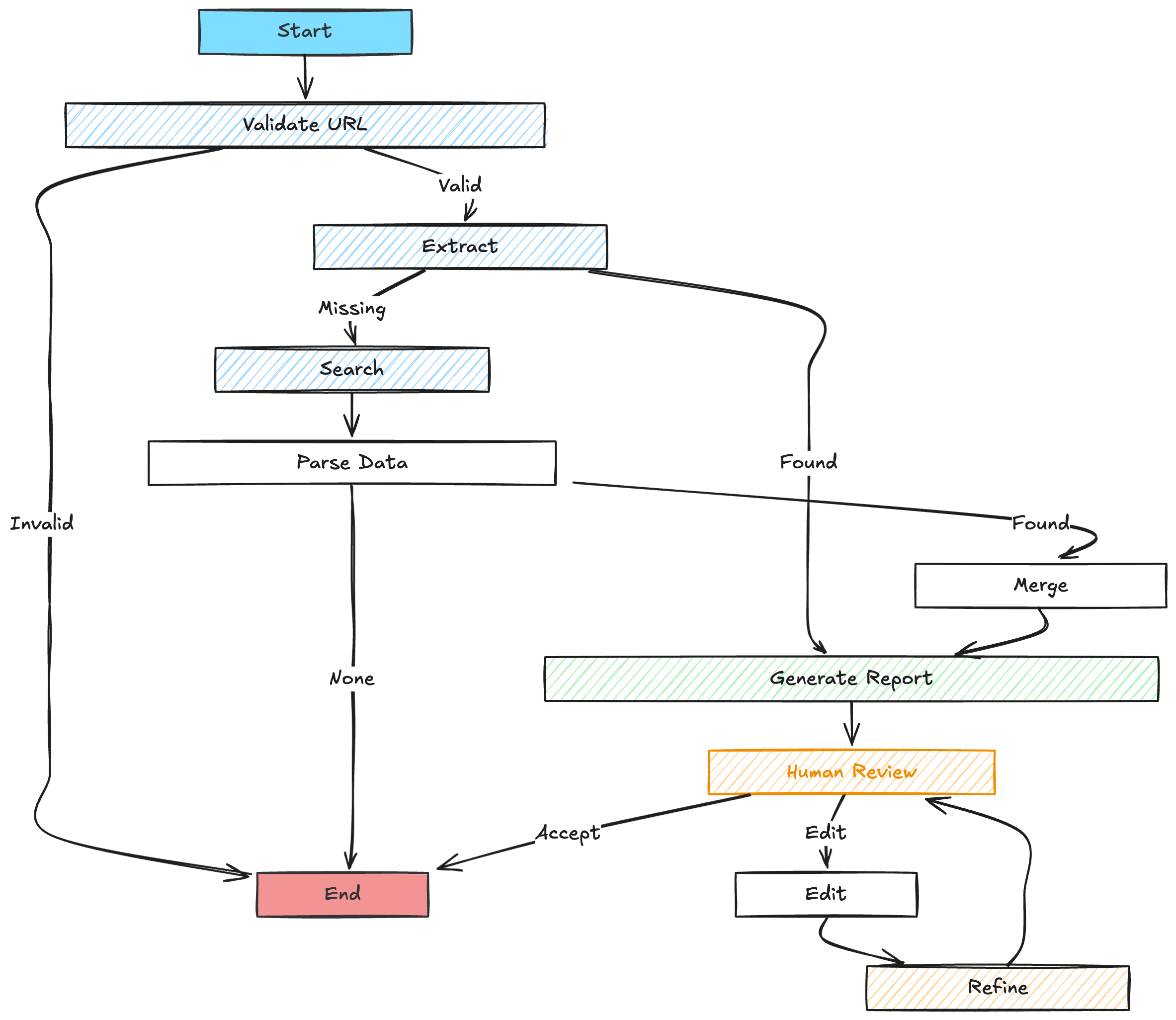

Detailed function operation flow

- Model Selection and Loading:

- After logging in, you will be taken to the model selection page.

- Browse the list of supported models and click on the desired model.

- Paste the link to the Hugging Face repository in the pop-up dialog box and click the "Load Model" button.

- Wait for the model to finish loading. The loading time depends on the model size and network conditions.

- Chat Interface Usage:

- Once the model is loaded, enter the chat screen.

- Enter a question or instruction in the input box and click Send.

- The model generates a reply based on the input and the reply is displayed in the chat window.

- Multiple questions or commands can be entered in succession, and the model will process and respond to them one by one.

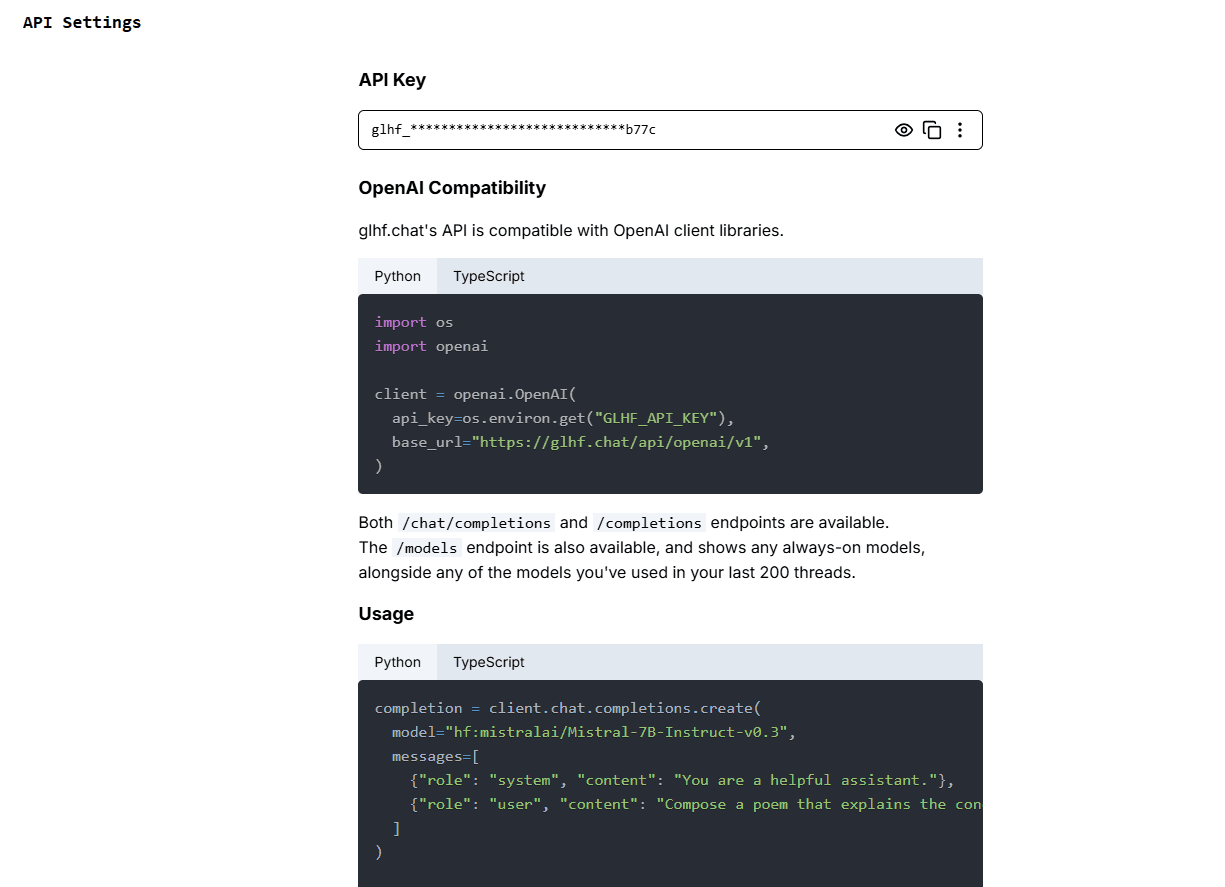

- API Usage:

- Visit the API documentation page to obtain an API key and usage instructions.

- Integrate the API in your application and follow the sample code provided in the documentation to call it.

- Send a request through the API to get a model-generated response.

- The API can be called from a variety of programming languages; see the documentation for specific sample code.
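The API steps above can be sketched with the Python standard library alone. The request shape (a `POST` to `/chat/completions` with a `model` and a `messages` list, authenticated with a bearer token) follows the OpenAI chat completions convention that the platform says it is compatible with. The base URL, the `hf:` model prefix, and the key placeholder are assumptions for illustration; take the real values from the API documentation page.

```python
import json
import urllib.request

# Assumptions: replace both values with those shown on the API documentation page.
BASE_URL = "https://glhf.chat/api/openai/v1"   # assumed base URL
API_KEY = "YOUR_API_KEY"                       # placeholder, not a real key


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body: bytes) -> str:
    """Pull the assistant text out of an OpenAI-style response body."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


# The "hf:owner/name" model format is an assumption; check the documentation.
req = build_chat_request("hf:meta-llama/Meta-Llama-3.1-8B-Instruct", "Hello!")
print(req.get_method(), req.full_url)

# To actually send it (requires a valid key and network access):
# with urllib.request.urlopen(req) as resp:
#     print(extract_reply(resp.read()))
```

Because the interface is OpenAI-compatible, an existing OpenAI client library pointed at the platform's base URL should work in the same way, which is usually more convenient than hand-built requests.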

Resource management and optimization

- Automatic scaling: The platform uses a custom GPU scheduler that automatically scales GPU resources up and down based on user demand to ensure efficient utilization.

- On-demand activation: For infrequently used models, the platform starts clusters on demand and automatically shuts them down when they are no longer in use, saving resources.

- Free beta: During the beta period, users have free access to all the features offered by the platform; a discounted pricing plan is expected once the beta ends.
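The scaling and on-demand behavior described above can be pictured with a toy policy. This is not the platform's scheduler, whose internals are not public; the `ClusterState` fields, the thresholds, and the `desired_replicas` function are all hypothetical and only illustrate the idea of scaling with demand and shutting down after an idle period.

```python
from dataclasses import dataclass


@dataclass
class ClusterState:
    replicas: int          # GPU workers currently running for this model
    queued_requests: int   # requests waiting to be served
    idle_seconds: float    # time since the last request finished


def desired_replicas(state: ClusterState,
                     requests_per_replica: int = 4,
                     max_replicas: int = 8,
                     idle_timeout: float = 300.0) -> int:
    """Return how many replicas the model should have next (hypothetical policy)."""
    if state.queued_requests == 0:
        # No demand: keep the current size until the idle timeout
        # expires, then scale to zero to free the GPUs.
        return 0 if state.idle_seconds >= idle_timeout else state.replicas
    # Demand present: ceil(queued / per-replica capacity), at least one
    # replica, never more than the configured maximum.
    needed = -(-state.queued_requests // requests_per_replica)
    return max(1, min(needed, max_replicas))


print(desired_replicas(ClusterState(0, 3, 0.0)))     # 1 (cold start on first request)
print(desired_replicas(ClusterState(2, 20, 0.0)))    # 5 (scale up under load)
print(desired_replicas(ClusterState(2, 0, 600.0)))   # 0 (shut down after idling)
```

The idle timeout is the main trade-off in a policy like this: a short timeout frees GPUs sooner, while a long one avoids repeated cold starts for models that are used intermittently.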

© Copyright notice

The copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
