FunClip: Intelligent video clipping that makes it easy to extract accurate short clips from longer videos

General Introduction

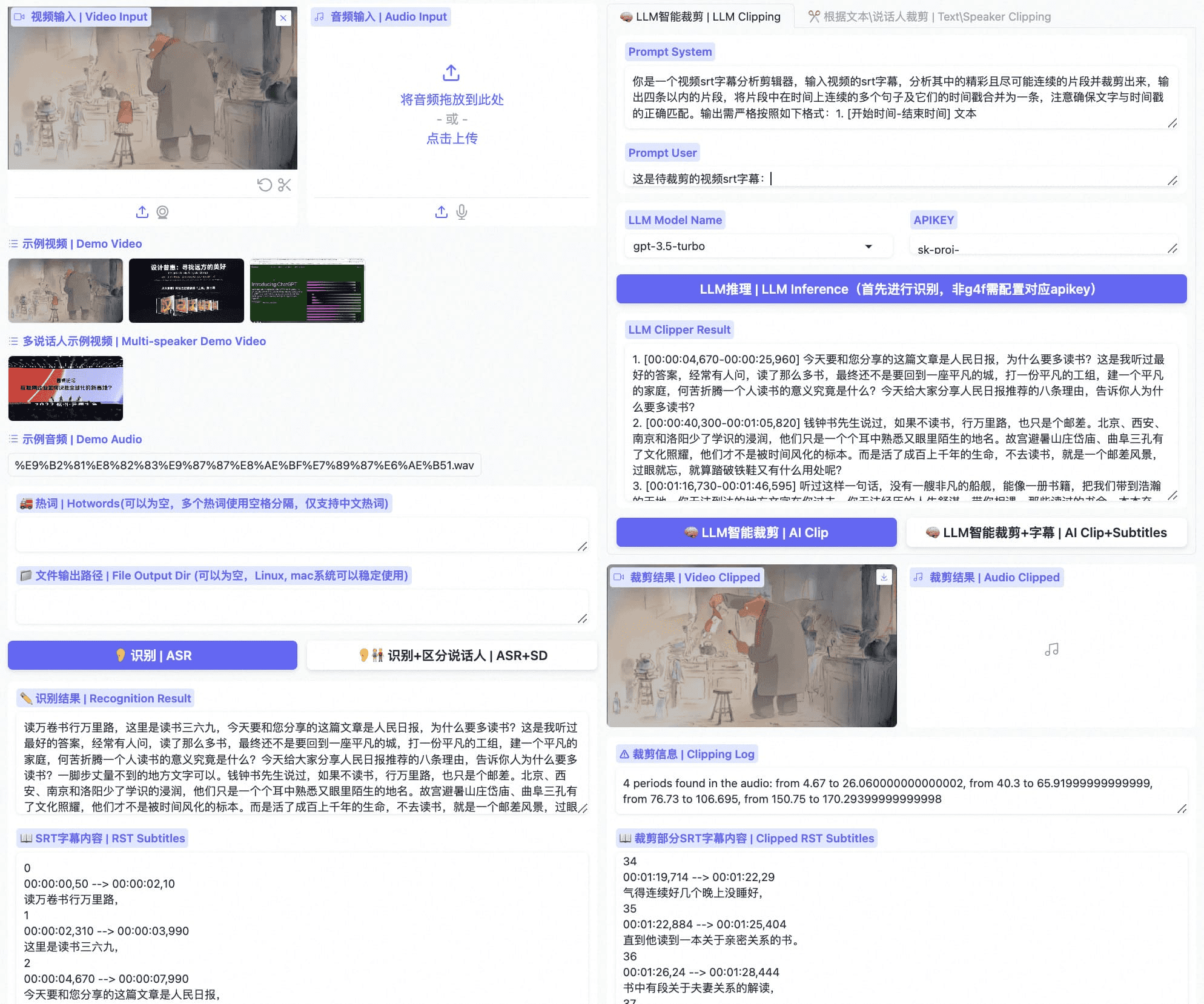

FunClip is a fully open-source, locally deployable automated video clipping tool developed by the Tongyi Speech Lab at Alibaba DAMO Academy. It integrates the industrial-grade Paraformer-Large speech recognition model, which recognizes the speech in a video and converts it to text. FunClip also supports intelligent clipping driven by a Large Language Model (LLM) and integrates speaker recognition, so it can tell different speakers apart. Users select the text segments they are interested in and export the corresponding video clips with one click through a simple interface. The tool supports free selection of multiple segments and automatically generates an SRT subtitle file for the full video as well as subtitles for the target segments. The latest version supports both Chinese and English recognition and provides subtitle embedding and export functions.

FunClip Optimized - Private-ASR

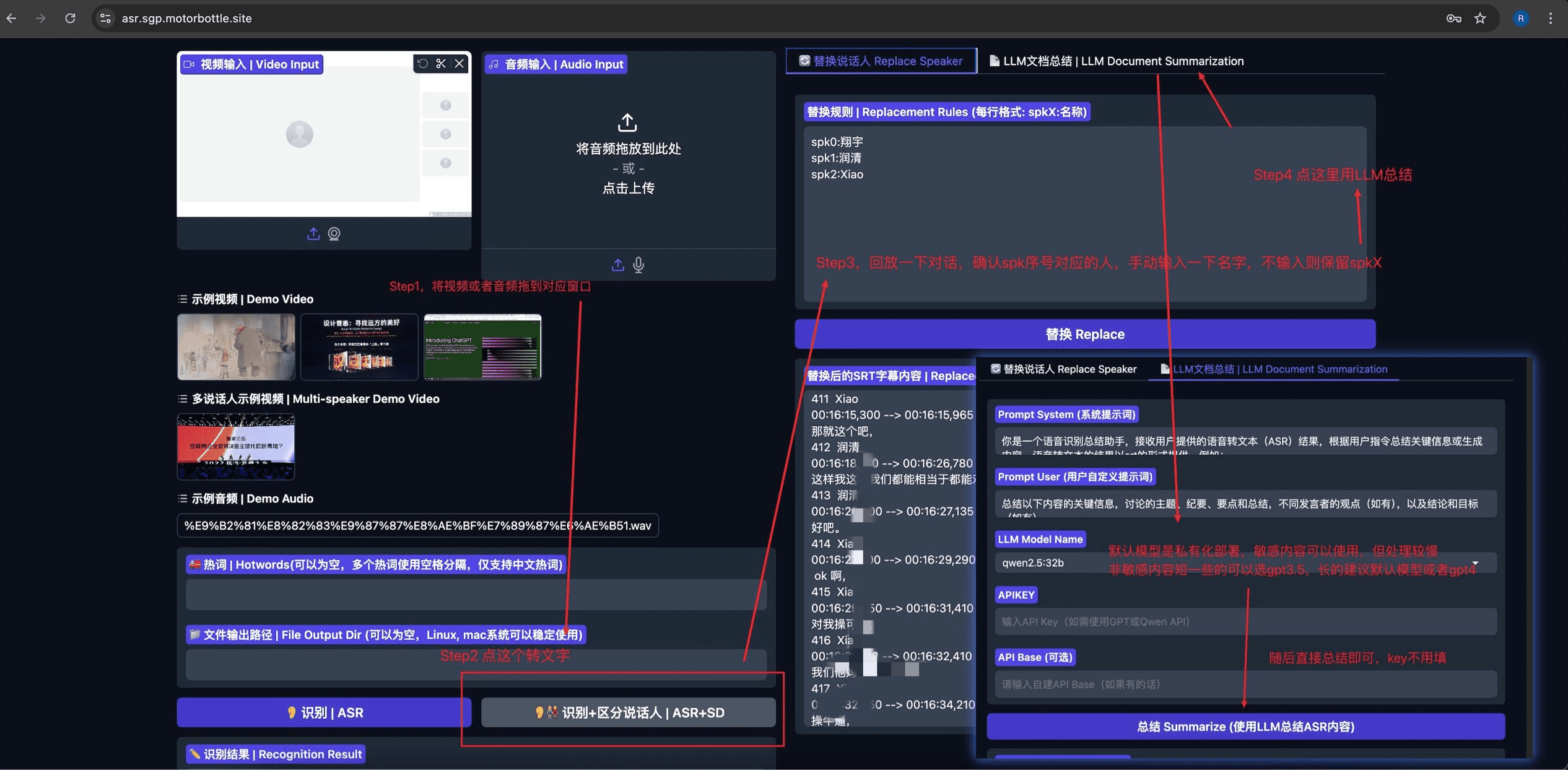

Private-ASR is a modified version of the open-source FunClip project that integrates automatic speech recognition (ASR), speaker separation, SRT subtitle editing, and LLM-based summarization. The project uses Gradio to provide an intuitive, easy-to-use user interface.

Function List

- Accurate Speech Recognition: integrates Alibaba's open-source Paraformer-Large model and supports Chinese and English speech recognition

- LLM Intelligent Clip: uses a Large Language Model (LLM) to analyze the content and determine clip points automatically

- Speaker Recognition: integrates the CAM++ speaker recognition model, which automatically distinguishes different speakers

- Hotword Customization: supports SeACo-Paraformer's hotword customization to improve recognition accuracy for specific words

- Multi-Segment Editing: supports free selection of multiple text segments for batch clipping

- Subtitle Generation: automatically generates SRT subtitles for the full video and for the target clips

- Bilingual Support: supports recognition and clipping of Chinese and English videos

- Local Deployment: completely open source and deployable locally, which keeps data private and secure

- Friendly Interface: built on the Gradio framework, providing a simple and intuitive web interface
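To make the subtitle generation feature more concrete, here is a minimal sketch of how timestamped segments can be rendered in the SRT format (a numbered entry, a `HH:MM:SS,mmm --> HH:MM:SS,mmm` line, then the text). The function names `format_srt_time` and `build_srt` and the sample segments are illustrative assumptions, not FunClip's own code.

```python
def format_srt_time(ms: int) -> str:
    """Convert milliseconds to the SRT timestamp form HH:MM:SS,mmm."""
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1_000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def build_srt(segments):
    """Render (start_ms, end_ms, text) tuples as the text of an SRT file."""
    blocks = []
    for index, (start_ms, end_ms, text) in enumerate(segments, start=1):
        time_line = f"{format_srt_time(start_ms)} --> {format_srt_time(end_ms)}"
        blocks.append(f"{index}\n{time_line}\n{text}\n")
    # Entries are separated by a blank line.
    return "\n".join(blocks)


# Hypothetical segments, for illustration only.
example = build_srt([(0, 1500, "Hello."), (1500, 4200, "Welcome to the demo.")])
print(example)
```

Subtitles for a target clip would follow the same layout, with timestamps measured from the start of the clip rather than the start of the full video.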

Usage Guide

1. Installation and deployment

Basic Environment Installation

- Clone the code repository:

git clone https://github.com/alibaba-damo-academy/FunClip.git

cd FunClip

- Install Python dependencies:

pip install -r ./requirements.txt

Optional feature installation (for embedded subtitles)

To use the subtitle embedding feature, you need to install ffmpeg and imagemagick:

- Ubuntu:

apt-get -y update && apt-get -y install ffmpeg imagemagick

sed -i 's/none/read,write/g' /etc/ImageMagick-6/policy.xml

- macOS (the policy.xml path below contains a version number; adjust it to match your installation):

brew install imagemagick

sed -i '' 's/none/read,write/g' /usr/local/Cellar/imagemagick/7.1.1-8_1/etc/ImageMagick-7/policy.xml

- Windows:

- Download and install imagemagick from the official website: https://imagemagick.org/script/download.php#windows

- Find your Python installation path and, in the file site-packages\moviepy\config_defaults.py, set IMAGEMAGICK_BINARY to the installation path of imagemagick

- Download the font file:

wget https://isv-data.oss-cn-hangzhou.aliyuncs.com/ics/MaaS/ClipVideo/STHeitiMedium.ttc -O font/STHeitiMedium.ttc
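Before trying the subtitle embedding feature, it can help to confirm that the optional tools are actually reachable. The following is a small sketch, assuming the executables are named `ffmpeg`, `magick` (ImageMagick 7) and `convert` (ImageMagick 6); the helper name `find_tools` is hypothetical.

```python
import shutil


def find_tools(names=("ffmpeg", "magick", "convert")):
    """Map each executable name to its full path on PATH, or None if missing."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    # Note: on Windows, a system utility also named convert.exe exists,
    # so a hit for "convert" there does not prove ImageMagick is installed.
    for name, path in find_tools().items():
        print(f"{name}: {path or 'not found on PATH'}")
```

On Windows, the path reported for `magick` is a reasonable candidate for the IMAGEMAGICK_BINARY setting mentioned above.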

2. Methods of use

A. Local Gradio Service Usage

- Start the service:

python funclip/launch.py

# Use -l en to enable English recognition

# Use -p xxx to set the port number

# Use -s True to enable public access

- Open localhost:7860 in a browser and follow the steps below:

- Step 1: Upload Video File

- Step 2: Copy the desired text fragment to the "Text to Clip" area.

- Step 3: Adjust subtitle settings as needed

- Step 4: Click "Clip" or "Clip and Generate Subtitles" to edit.
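Step 2 works because the recognized text carries timestamps, so a pasted fragment can be mapped back to a time range in the video. The sketch below illustrates that idea only; it is not FunClip's implementation. The `(token, start_ms, end_ms)` structure, the function name `find_clip_range`, and the exact-match rule are all assumptions.

```python
def find_clip_range(words, target):
    """Return (start_ms, end_ms) of the first run of words matching target.

    words  -- list of (token, start_ms, end_ms) from a hypothetical recognizer
    target -- the text fragment the user pasted, whitespace-separated
    """
    tokens = target.split()
    if not tokens:
        return None
    n = len(tokens)
    for i in range(len(words) - n + 1):
        window = [w[0] for w in words[i:i + n]]
        if window == tokens:
            # Start of the first matched word, end of the last matched word.
            return words[i][1], words[i + n - 1][2]
    return None


# Hypothetical transcript, for illustration only.
transcript = [
    ("welcome", 0, 400), ("to", 400, 550), ("the", 550, 700),
    ("demo", 700, 1200), ("of", 1200, 1350), ("funclip", 1350, 2000),
]
print(find_clip_range(transcript, "the demo"))  # (550, 1200)
```

Selecting several fragments would simply repeat this lookup once per fragment and clip each resulting range.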

B. LLM Smart Clips

- After recognition is complete, select a large language model and configure its API key

- Click the "LLM Inference" button and the system will automatically combine the video subtitles with the preset prompt.

- Click the "AI Clip" button to automatically extract timestamps for clipping based on the output of the large language model.

- The output of the large language model can be improved by modifying the prompt
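The "AI Clip" step depends on pulling timestamps out of free-form model output. As a rough sketch of that idea, the code below assumes the model answers with lines such as `1. [00:00:01,500-00:00:05,000] text`; that format, the regular expression, and the names `to_ms` and `extract_ranges` are assumptions for illustration, and the format actually requested by FunClip's preset prompt may differ.

```python
import re

# Assumed line format: "1. [00:00:01,500-00:00:05,000] some text"
RANGE_RE = re.compile(
    r"\[(\d{2}):(\d{2}):(\d{2}),(\d{3})\s*-\s*(\d{2}):(\d{2}):(\d{2}),(\d{3})\]"
)


def to_ms(h, m, s, ms):
    """Convert hour, minute, second, millisecond strings to milliseconds."""
    return ((int(h) * 60 + int(m)) * 60 + int(s)) * 1000 + int(ms)


def extract_ranges(llm_output):
    """Return a list of (start_ms, end_ms) pairs found in the LLM's reply."""
    ranges = []
    for match in RANGE_RE.finditer(llm_output):
        g = match.groups()
        start, end = to_ms(*g[:4]), to_ms(*g[4:])
        if end > start:  # skip reversed or empty ranges
            ranges.append((start, end))
    return ranges


# Hypothetical model reply, for illustration only.
reply = """1. [00:00:01,500-00:00:05,000] Opening remarks
2. [00:01:10,000-00:01:25,250] Key announcement"""
print(extract_ranges(reply))  # [(1500, 5000), (70000, 85250)]
```

Filtering out reversed ranges is a defensive choice: model output is not guaranteed to be well formed, so it is safer to drop a bad range than to pass it to the clipper.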

C. Command-line use

- Speech Recognition:

python funclip/videoclipper.py --stage 1 \
    --file examples/video.mp4 \
    --output_dir ./output

- Video Clip:

python funclip/videoclipper.py --stage 2 \
    --file examples/video.mp4 \
    --output_dir ./output \
    --dest_text 'text to clip' \
    --start_ost 0 \
    --end_ost 100 \
    --output_file './output/res.mp4'
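The two command-line stages above can also be chained from a script. The following is a minimal sketch that uses only the flags shown in those commands; the helper names `stage_command` and `run_pipeline` are hypothetical, and the script is assumed to run from the root of the cloned repository.

```python
import subprocess
import sys


def stage_command(stage, video, output_dir, dest_text=None,
                  start_ost=0, end_ost=0, output_file=None):
    """Build the argument list for one videoclipper.py stage."""
    cmd = [sys.executable, "funclip/videoclipper.py",
           "--stage", str(stage), "--file", video, "--output_dir", output_dir]
    if stage == 2:
        if dest_text is None or output_file is None:
            raise ValueError("stage 2 needs dest_text and output_file")
        cmd += ["--dest_text", dest_text,
                "--start_ost", str(start_ost),
                "--end_ost", str(end_ost),
                "--output_file", output_file]
    return cmd


def run_pipeline(video, output_dir, dest_text, output_file):
    """Run recognition, then clipping; check=True stops on the first failure."""
    subprocess.run(stage_command(1, video, output_dir), check=True)
    subprocess.run(stage_command(2, video, output_dir, dest_text,
                                 0, 100, output_file), check=True)


# Show the stage 1 arguments without running anything.
print(stage_command(1, "examples/video.mp4", "./output")[1:])
```

Passing the arguments as a list avoids shell quoting problems when the text to clip contains spaces or quotation marks.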

Additionally, users can experience FunClip's features through the following online platforms:

- ModelScope Space: FunClip@Modelscope Space

- HuggingFace Space: FunClip@HuggingFace Space

If you run into problems, you can get community support through the DingTalk group or WeChat group provided by the project.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
