FunASR: Open-Source Speech Recognition Toolkit with Speaker Diarization and Multi-Talker Speech Recognition

General Introduction

FunASR is an open-source speech recognition toolkit developed by Alibaba's DAMO Academy to bridge academic research and industrial applications. It supports a wide range of speech tasks, including automatic speech recognition (ASR), voice activity detection (VAD), punctuation restoration, language modeling, speaker verification, speaker diarization, and multi-talker conversational speech recognition. FunASR provides convenient scripts and tutorials for inference and fine-tuning of pre-trained models, helping users quickly build efficient speech recognition services.

It accepts many audio and video formats as input, can transcribe audio and video dozens of hours long into punctuated text, handles hundreds of concurrent transcription requests, and supports Chinese, English, Japanese, Cantonese, and Korean.

Online demo: https://www.funasr.com/

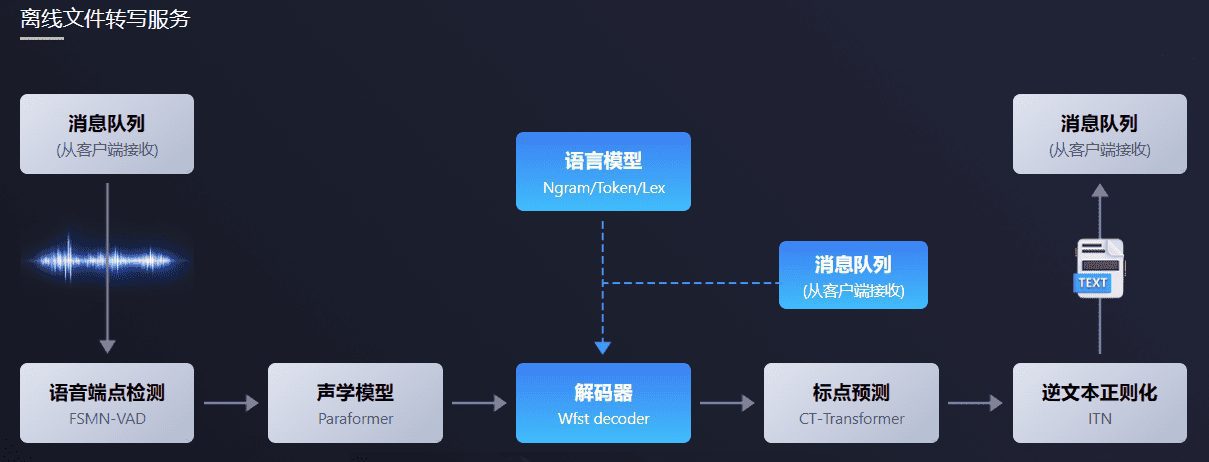

The FunASR offline file transcription package provides a full-featured offline transcription service. Its complete speech recognition pipeline combines voice activity detection, speech recognition, punctuation, and other models to turn audio and video dozens of hours long into punctuated text, and it can serve hundreds of transcription requests simultaneously. The output is punctuated text with word-level timestamps, and the service supports inverse text normalization (ITN) and user-defined hotwords. Because ffmpeg is integrated on the server side, many audio and video formats are accepted as input. The package ships clients in HTML, Python, C++, Java, C#, and other languages, which users can use directly or build on.
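Since the offline service returns punctuated text with timestamps, a common next step is producing subtitles. The following is a minimal sketch of that step, converting sentence-level segments into SRT cues. It assumes each segment is a dict with `text`, `start`, and `end` keys in milliseconds; that layout and the function names are illustrative assumptions, not the package's documented output format.

```python
from typing import Dict, List


def format_srt_time(ms: int) -> str:
    """Format a time in milliseconds as an SRT timestamp (HH:MM:SS,mmm)."""
    hours, rem = divmod(int(ms), 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1_000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def to_srt(segments: List[Dict]) -> str:
    """Build SRT subtitles from segments shaped like
    {"text": str, "start": ms, "end": ms} (an assumed layout)."""
    blocks = []
    for index, seg in enumerate(segments, start=1):
        timing = f"{format_srt_time(seg['start'])} --> {format_srt_time(seg['end'])}"
        blocks.append(f"{index}\n{timing}\n{seg['text']}\n")
    # SRT separates cue blocks with a blank line.
    return "\n".join(blocks)


if __name__ == "__main__":
    demo = [
        {"text": "Hello everyone.", "start": 0, "end": 1850},
        {"text": "Let's begin.", "start": 2100, "end": 3400},
    ]
    print(to_srt(demo))
```

If your results carry word-level rather than sentence-level timestamps, you would first group words into sentences before calling `to_srt`.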

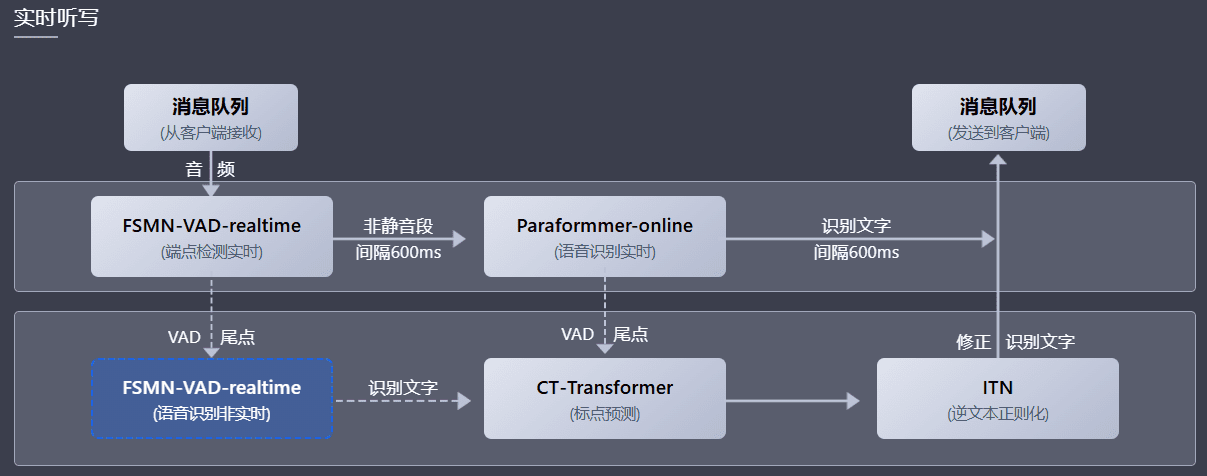

The FunASR real-time dictation package integrates real-time versions of the voice activity detection, speech recognition, and punctuation prediction models. Through multi-model collaboration, it transcribes speech to text in real time and, at the end of each sentence, corrects the output with a high-accuracy transcription. The output text is punctuated, and multiple concurrent requests are supported. Depending on the use case, it offers three service modes: real-time dictation (online), non-real-time sentence transcription (offline), and combined real-time and non-real-time operation (2pass). The package ships clients in HTML, Python, C++, Java, C#, and other languages, which users can use directly or build on.
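In 2pass mode, a client shows fast provisional text while the user speaks, then swaps in the corrected sentence once it arrives. A minimal client-side sketch of that merge logic is shown below. The message shape (`mode` equal to `"online"` or `"offline"`, plus `text`) and the class name are hypothetical, chosen only to illustrate the idea; they are not FunASR's wire protocol.

```python
from typing import List


class TwoPassTranscript:
    """Merge fast partial results with end-of-sentence corrections.

    Hypothetical message format: {"mode": "online" | "offline", "text": str}.
    "online" messages carry incremental text for the sentence in progress;
    an "offline" message carries the corrected text for the whole sentence.
    """

    def __init__(self) -> None:
        self.final_sentences: List[str] = []  # corrected, committed sentences
        self.partial = ""  # provisional text of the current sentence

    def update(self, message: dict) -> str:
        if message["mode"] == "online":
            # Append the new fragment to the provisional sentence.
            self.partial += message["text"]
        elif message["mode"] == "offline":
            # Replace the provisional text with the corrected sentence.
            self.final_sentences.append(message["text"])
            self.partial = ""
        else:
            raise ValueError(f"unknown mode: {message['mode']!r}")
        return self.display()

    def display(self) -> str:
        """Text to show the user: committed sentences plus the partial one."""
        return "".join(self.final_sentences) + self.partial
```

Keeping committed and provisional text separate means a correction only ever rewrites the current sentence, never text the user has already seen finalized.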

Feature List

- Speech Recognition (ASR): Supports both offline and real-time speech recognition.

- Voice Activity Detection (VAD): Detects where speech starts and ends in an audio signal.

- Punctuation Restoration: Automatically adds punctuation to improve readability.

- Language Models: Supports integrating multiple language models.

- Speaker Verification: Verifies a speaker's identity.

- Speaker Diarization: Distinguishes the speech of different speakers.

- Multi-Talker Speech Recognition: Recognizes speech in conversations with multiple speakers.

- Model Inference and Fine-Tuning: Provides inference and fine-tuning for pre-trained models.

How to Use

Installation

- Environment preparation:

- Make sure Python 3.7 or later is installed.

- Install FunASR from PyPI, plus the ModelScope and Hugging Face hub clients used to fetch pre-trained models:

pip install -U funasr
pip install -U modelscope huggingface_hub

- Alternatively, install FunASR from source:

git clone https://github.com/modelscope/FunASR.git
cd FunASR
pip install -e ./

- Download models:

- Pre-trained models are hosted on ModelScope and Hugging Face; AutoModel downloads the models it needs automatically the first time they are used.

- Configure the environment:

- Optionally, set an environment variable pointing to your local model directory:

export MODEL_DIR=/path/to/your/model
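One way your own scripts could honor the MODEL_DIR variable is sketched below: use the local directory when it exists, otherwise fall back to a model name that can be downloaded. The helper name `resolve_model` and the `paraformer-zh` fallback are illustrative assumptions; it is this helper, not FunASR itself, that reads MODEL_DIR here, and passing a local directory as `AutoModel(model=...)` is assumed to be supported.

```python
import os


def resolve_model(default: str = "paraformer-zh") -> str:
    """Return the model location to pass to AutoModel(model=...).

    Uses $MODEL_DIR when it points to an existing directory; otherwise
    returns `default`, a model name to be fetched from the model hub.
    """
    model_dir = os.environ.get("MODEL_DIR")
    if model_dir and os.path.isdir(model_dir):
        return model_dir
    return default


if __name__ == "__main__":
    print(resolve_model())
```

Falling back to a hub model name keeps the same script working on machines that have no local copy.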

Usage

- Speech recognition:

- Use the command line for speech recognition:

funasr ++model=paraformer-zh ++vad_model="fsmn-vad" ++punc_model="ct-punc" ++input=your_audio.wav

- Speech recognition using Python code:

from funasr import AutoModel
model = AutoModel(model="paraformer-zh", vad_model="fsmn-vad", punc_model="ct-punc")  # VAD and punctuation models are optional
result = model.generate(input="your_audio.wav")  # list of dicts, each with a "text" field
print(result)

- Voice activity detection (VAD):

- Use the command line for voice activity detection:

funasr ++model=fsmn-vad ++input=your_audio.wav

- Voice activity detection using Python code:

from funasr import AutoModel
vad_model = AutoModel(model="fsmn-vad")
vad_result = vad_model.generate(input="your_audio.wav")  # speech segments as [[start_ms, end_ms], ...]
print(vad_result)
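The FSMN-VAD model reports speech as `[start, end]` pairs in milliseconds. A minimal sketch of using those segments to cut the speech regions out of a waveform (a sequence of samples at a known sample rate) follows; the function names and the 16 kHz default are assumptions for illustration.

```python
from typing import List, Sequence


def ms_to_sample(ms: int, sample_rate: int) -> int:
    """Convert a time in milliseconds to a sample index."""
    return ms * sample_rate // 1000


def extract_speech(
    waveform: Sequence[float],
    segments: List[List[int]],
    sample_rate: int = 16000,
) -> List[Sequence[float]]:
    """Slice the waveform at each [start_ms, end_ms] VAD segment."""
    chunks = []
    for start_ms, end_ms in segments:
        start = ms_to_sample(start_ms, sample_rate)
        end = min(ms_to_sample(end_ms, sample_rate), len(waveform))
        if start < end:  # skip empty or out-of-range segments
            chunks.append(waveform[start:end])
    return chunks


def speech_duration_ms(segments: List[List[int]]) -> int:
    """Total speech time covered by the VAD segments, in milliseconds."""
    return sum(end - start for start, end in segments)
```

Feeding only the extracted chunks to a recognizer is what lets a long recording be processed piece by piece instead of all at once.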

- Punctuation restoration:

- Use the command line for punctuation restoration:

funasr ++model=ct-punc ++input=your_text.txt

- Punctuation restoration using Python code:

from funasr import AutoModel
punc_model = AutoModel(model="ct-punc")
punc_result = punc_model.generate(input="那今天的会就到这里吧 happy new year 明年见")  # raw, unpunctuated text
print(punc_result)
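Once punctuation has been restored, the text can be split into sentences, for example to display them one at a time. The sketch below splits on Chinese and ASCII sentence-final marks with a regular expression. The set of marks is an assumption, and the rule is naive: it will also split decimals such as "3.14".

```python
import re
from typing import List

# Split after a sentence-final mark (Chinese full-width 。？！ or ASCII . ? !)
# unless another such mark follows, and swallow any whitespace after it.
_SENTENCE_END = re.compile(r"(?<=[。？！.?!])(?![。？！.?!])\s*")


def split_sentences(text: str) -> List[str]:
    """Split punctuated text into sentences, keeping the end marks."""
    return [s for s in _SENTENCE_END.split(text.strip()) if s]
```

The negative lookahead keeps runs such as "..." or "?!" attached to their sentence instead of producing fragments.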

- Speaker verification:

- To verify a speaker, extract speaker embeddings from an enrollment recording and a test recording with the CAM++ model, then compare them.

- Use the command line for speaker verification:

funasr ++model=cam++ ++input=your_audio.wav

- Speaker verification using Python code:

from funasr import AutoModel
spk_model = AutoModel(model="cam++")
spk_result = spk_model.generate(input="your_audio.wav")  # expected to hold a speaker embedding for the audio
print(spk_result)
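Speaker verification typically compares two speaker embeddings and accepts the pair as the same speaker when their similarity reaches a threshold. A minimal sketch of that decision with cosine similarity follows. Embeddings are plain lists here, and the 0.5 threshold is a placeholder to calibrate on your own data, not a value taken from FunASR.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def same_speaker(a: Sequence[float], b: Sequence[float], threshold: float = 0.5) -> bool:
    """Accept the pair as one speaker if similarity reaches the threshold.

    The default threshold is a placeholder; calibrate it on held-out data.
    """
    return cosine_similarity(a, b) >= threshold
```

Raising the threshold reduces false accepts at the cost of more false rejects, so the right value depends on which error is more costly in your application.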

- Multi-talker speech recognition:

- Speech recognition for multi-person conversations using the command line (adding a speaker model to the recognition pipeline):

funasr ++model=paraformer-zh ++vad_model="fsmn-vad" ++punc_model="ct-punc" ++spk_model="cam++" ++input=your_audio.wav

- Speech recognition for multi-person conversations using Python code:

from funasr import AutoModel
model = AutoModel(model="paraformer-zh", vad_model="fsmn-vad", punc_model="ct-punc", spk_model="cam++")
result = model.generate(input="your_audio.wav")  # spk_model adds speaker labels to sentence-level results
print(result)
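Speaker-labeled output is easier to read as a dialogue. The sketch below merges consecutive sentences from the same speaker into turns. It assumes each sentence is a dict with `spk` (a 0-based speaker index), `text`, `start`, and `end` (milliseconds); that shape, the function names, and the "Speaker N" labels are illustrative assumptions.

```python
from typing import Dict, List


def to_turns(sentences: List[Dict], sep: str = " ") -> List[Dict]:
    """Merge consecutive same-speaker sentences into dialogue turns.

    Use sep="" for languages such as Chinese that do not put spaces
    between sentences.
    """
    turns: List[Dict] = []
    for sent in sentences:
        if turns and turns[-1]["spk"] == sent["spk"]:
            # Same speaker keeps talking: extend the current turn.
            turns[-1]["text"] += sep + sent["text"]
            turns[-1]["end"] = sent["end"]
        else:
            # New speaker: copy the fields so the input is not mutated.
            turns.append({"spk": sent["spk"], "text": sent["text"],
                          "start": sent["start"], "end": sent["end"]})
    return turns


def format_dialogue(turns: List[Dict]) -> str:
    """Render turns as 'Speaker N: text' lines (1-based speaker labels)."""
    return "\n".join(f"Speaker {t['spk'] + 1}: {t['text']}" for t in turns)
```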

© Copyright notice

This article is copyright AI Sharing Circle. Please do not reproduce it without permission.
