FramePack: An Open Source Project for Fast Long-Video Generation with 6GB of Video Memory

General Introduction

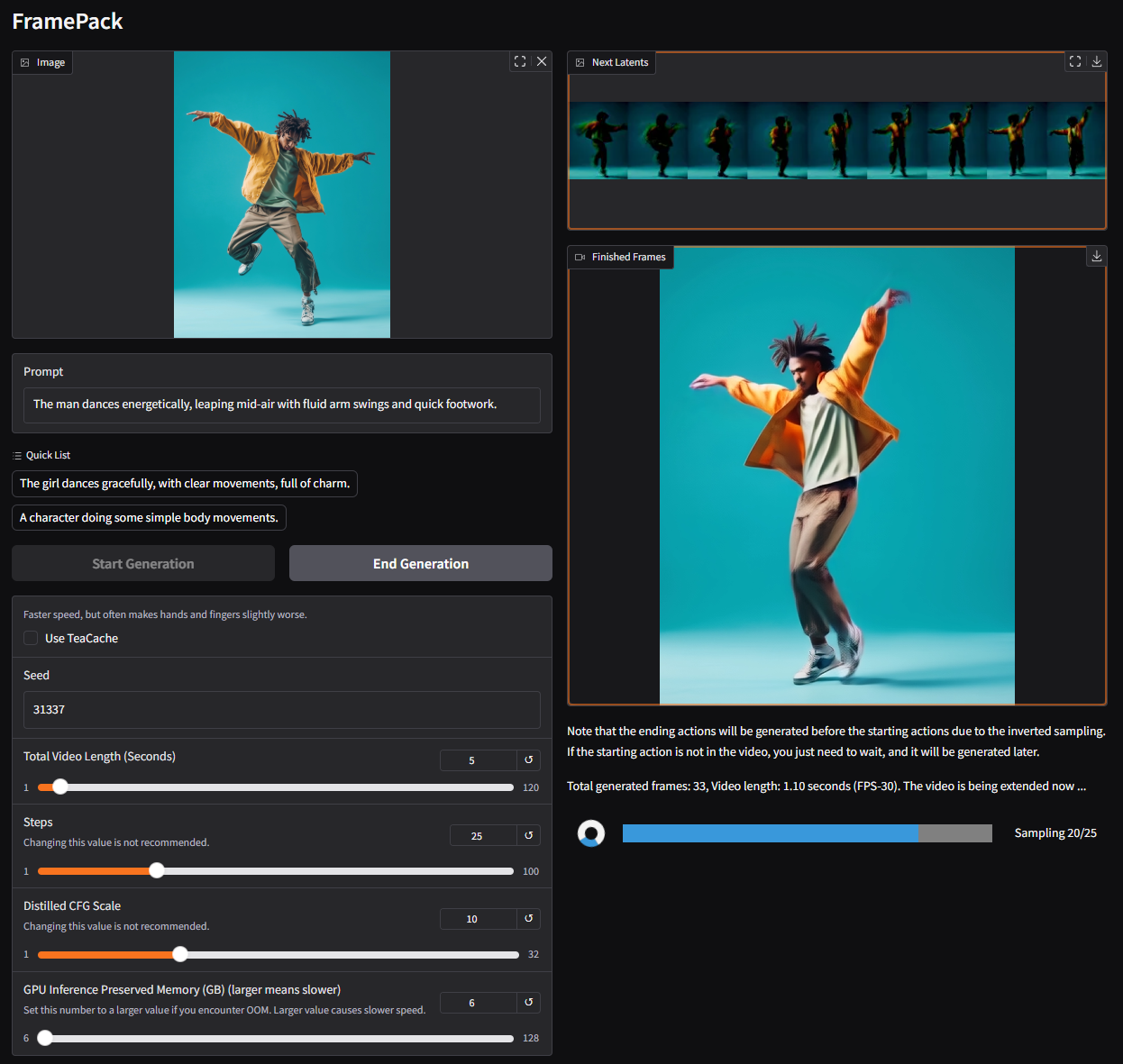

FramePack is an open source video generation tool focused on making video diffusion more practical. It uses a next-frame prediction neural network that compresses the input frame context to a fixed length, which decouples the generation workload from the length of the video. As a result, video memory requirements do not grow significantly even when generating long videos: FramePack can generate thousands of frames at 30fps with as little as 6GB of video memory, which puts it within reach of ordinary consumer GPUs. Developed by Lvmin Zhang, the project builds on the HunyuanVideo model (13 billion parameters) and is optimized to run it efficiently on modest hardware. FramePack provides an easy-to-use Gradio interface for image-to-video generation and, with optimizations enabled, reaches about 1.5 seconds per frame on an NVIDIA RTX 4090. It is suitable for content creators, developers and general users interested in video generation.

Feature List

- Image to Video Generation: Generate dynamic video from a single image with support for long video extensions.

- Low video memory optimization: Generate 60 seconds of 30fps video with as little as 6GB of video memory.

- Next-frame prediction: Compresses the context so that the generation workload is independent of video length.

- Gradio User Interface: Provides intuitive features for uploading images, entering prompts and previewing generated videos.

- Multiple attention backends: PyTorch attention, xformers, flash-attn and sage-attention are supported.

- Cross-platform compatibility: Supports Linux and Windows, compatible with NVIDIA RTX 30XX/40XX/50XX series GPUs.

- Optimized generation speed: With teacache enabled, generation reaches about 1.5 seconds per frame.

- Batch training support: Supports very large training batch sizes, similar to image diffusion training.

User Guide

Installation process

Installing FramePack is fairly technical and is best suited to users with some experience in configuring Python and GPU environments. Below are detailed installation steps for Windows and Linux, based on the official GitHub page and other web resources.

Environment requirements

- Operating system: Linux or Windows.

- GPU: NVIDIA RTX 30XX/40XX/50XX series with fp16 and bf16 support (GTX 10XX/20XX not tested).

- Video memory: Minimum 6GB (enough to generate 60 seconds of 30fps video).

- Python version: Python 3.10 is recommended (note: the Python version needs to match exactly, otherwise library incompatibilities may result).

- CUDA: A CUDA version compatible with your GPU (e.g. CUDA 12.6) must be installed.
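The requirements above can be checked with a short script once PyTorch is installed. This is a minimal sketch, not part of FramePack: the function names and the 5% tolerance on reported memory are assumptions made for illustration, and the GPU check simply returns None when PyTorch or CUDA is unavailable.

```python
import sys

MIN_VRAM_GB = 6              # minimum video memory listed in the requirements
RECOMMENDED_PYTHON = (3, 10)  # Python version recommended in the requirements


def python_matches(version_info=sys.version_info, wanted=RECOMMENDED_PYTHON):
    """Return True when the interpreter's major.minor equals the recommended version."""
    return tuple(version_info[:2]) == tuple(wanted)


def gpu_report():
    """Collect basic GPU facts via PyTorch; return None if torch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    props = torch.cuda.get_device_properties(0)
    vram_gb = props.total_memory / 1024**3
    return {
        "name": props.name,
        "vram_gb": round(vram_gb, 1),
        "bf16": torch.cuda.is_bf16_supported(),
        "cuda_build": torch.version.cuda,
        # Assumption: allow 5% slack, since drivers often report slightly
        # less than the nominal capacity of the card.
        "enough_vram": vram_gb >= MIN_VRAM_GB * 0.95,
    }


if __name__ == "__main__":
    print("Python 3.10:", python_matches())
    print("GPU:", gpu_report() or "PyTorch with CUDA not available")
```

Running it inside the virtual environment used for FramePack gives a quick indication of whether the interpreter and GPU meet the listed requirements.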

Installation steps

- Clone the FramePack repository

Open a terminal or command prompt and run the following commands to clone the project:

git clone https://github.com/lllyasviel/FramePack.git

cd FramePack

- Create a virtual environment

To avoid dependency conflicts, it is recommended that you create a Python virtual environment:

python -m venv venv

Activate the virtual environment:

- Windows:

venv\Scripts\activate.bat

- Linux:

source venv/bin/activate

- Install PyTorch and dependencies

Install the PyTorch build that matches your CUDA version (CUDA 12.6 in this example):

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu126

Install the project dependencies:

pip install -r requirements.txt

- (Optional) Install Sage Attention or Flash Attention

To improve performance, you can install Sage Attention. Choose the wheel file that matches your CUDA, PyTorch and Python versions. The example below is the Windows build for CUDA 12.6, PyTorch 2.6.0 and Python 3.12 (cp312), so it will not install on Python 3.10 without picking a different wheel:

pip install https://github.com/woct0rdho/SageAttention/releases/download/v2.1.1-windows/sageattention-2.1.1+cu126torch2.6.0-cp312-cp312-win_amd64.whl

Note: Sage Attention may slightly affect generation quality, so first-time users can skip it.

- Launch the Gradio interface

After the installation is complete, run the following command to launch the interface:

python demo_gradio.py

On startup, the terminal will display a URL similar to:

http://127.0.0.1:7860/

Open this URL in your browser to access the FramePack Gradio interface.
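For the optional Sage Attention step, the wheel filename itself says which Python version it targets. The sketch below parses the standard wheel filename layout (name, version, Python tag, ABI tag, platform tag) and compares the Python tag with an interpreter version. The helper names are hypothetical, and the parser is deliberately simplified: it ignores optional build tags and compressed tag sets such as cp310.cp311.

```python
import re
import sys

# Simplified wheel filename layout: name-version-pytag-abitag-platform.whl
WHEEL_RE = re.compile(
    r"^(?P<name>[^-]+)-(?P<version>[^-]+)-(?P<py>[^-]+)-(?P<abi>[^-]+)-(?P<plat>[^-]+)\.whl$"
)


def parse_wheel(filename):
    """Split a wheel filename into name, version, Python tag, ABI tag and platform tag."""
    match = WHEEL_RE.match(filename)
    if match is None:
        raise ValueError(f"not a wheel filename: {filename}")
    return match.groupdict()


def wheel_matches_python(filename, version_info=sys.version_info):
    """True when the wheel's CPython tag (e.g. cp312) equals the given interpreter version."""
    tag = parse_wheel(filename)["py"]
    return tag == f"cp{version_info[0]}{version_info[1]}"


wheel = "sageattention-2.1.1+cu126torch2.6.0-cp312-cp312-win_amd64.whl"
info = parse_wheel(wheel)
print(info["version"])                       # 2.1.1+cu126torch2.6.0
print(info["plat"])                          # win_amd64
print(wheel_matches_python(wheel, (3, 12)))  # True
print(wheel_matches_python(wheel, (3, 10)))  # False
```

Calling wheel_matches_python(wheel) without a second argument checks the wheel against the interpreter that runs the script, which is a quick way to catch a Python 3.10 versus 3.12 mismatch before running pip.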

Common Installation Problems

- Python version mismatch: If the dependency installation fails, check that the Python version is 3.10. Use the following command to view the current version:

python --version

- Dependency conflict: If the versions of av, numpy or scipy specified in requirements.txt cause conflicts, reinstall the latest versions of these libraries:

pip install av numpy scipy

- Insufficient video memory: If the video memory is less than 6GB, the generation may fail. It is recommended to use a GPU with more memory or to shorten the video length.
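When diagnosing the problems above, it helps to see which versions are actually installed in the active environment. The following is a small sketch using only the standard library; the function name is an assumption, and the package list simply mirrors the libraries mentioned in this section.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each package name to its installed version, or None when it is not installed."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(["torch", "av", "numpy", "scipy"]).items():
        print(f"{name}: {version or 'not installed'}")
```

Comparing this output with the versions listed in requirements.txt shows quickly whether a conflict comes from a stale or missing package.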

Usage

The core function of FramePack is to generate video from a single image, with the video content controlled by a text prompt. The detailed procedure is described below.

1. Access the Gradio interface

After you launch demo_gradio.py, the browser displays the FramePack user interface. The interface is divided into two parts:

- Left: Upload an image and enter a prompt.

- Right: Displays the generated video and a latent preview.

2. Upload an image

Click the image upload area on the left and select a local image (recommended resolution 544x704 or similar). FramePack will generate video content based on this image.

3. Enter a prompt

Enter a short sentence describing the motion in the video in the prompt text box. Concise, action-oriented prompts are recommended, for example:

The girl dances gracefully, with clear movements, full of charm.

A robot jumps energetically, spinning in a futuristic city.

The FramePack project recommends generating motion prompts with GPT, using an instruction such as:

You are an assistant that writes short, motion-focused prompts for animating images. When the user sends an image, respond with a single, concise prompt describing visual motion.

4. Video generation

Click the Generate button in the interface and FramePack will start processing. Generation takes place in segments: each segment produces about 1 second of video, and the segments are progressively stitched together into the complete video. Progress is shown by a progress bar and the latent preview. The generated file is saved in the ./outputs/ folder.

5. Review and adjust

Early in the generation process you may only get a short video (e.g. 1 second). This is normal; wait for more segments to be generated to obtain the full video. If you are not satisfied with the result, adjust the prompt or change the input image and generate again.
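Because the output grows segment by segment, it can be handy to locate the newest file in the ./outputs/ folder from a script. This is a minimal sketch under two assumptions that the article does not state: that the results are written as .mp4 files, and that the most recently modified file is the most complete one. The function name is hypothetical.

```python
from pathlib import Path


def latest_output(folder="outputs", pattern="*.mp4"):
    """Return the most recently modified file matching pattern, or None if there is none."""
    files = list(Path(folder).glob(pattern))
    if not files:
        return None
    return max(files, key=lambda path: path.stat().st_mtime)


if __name__ == "__main__":
    newest = latest_output()
    print(newest if newest is not None else "no output files found yet")
```

Run it from the FramePack directory while a generation is in progress to see which file currently holds the longest result.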

Optimize generation speed

- Enable teacache: Enabling teacache in the generation settings improves the speed from about 2.5 to about 1.5 seconds per frame.

- Use a high-performance GPU: A card such as the NVIDIA RTX 4090 generates faster.

- Disable sage-attention: If sage-attention is installed, disable it for the first test to make sure generation quality is not affected.
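The per-frame speeds translate directly into total generation time. The sketch below works through that arithmetic using the figures quoted in this article for an RTX 4090 (2.5 seconds per frame unoptimized, 1.5 with teacache, 30fps output). The function names are illustrative, and real runs will vary with hardware and settings.

```python
import math


def frame_count(seconds, fps=30):
    """Number of frames needed for a video of the given length."""
    return math.ceil(seconds * fps)


def estimated_minutes(seconds, sec_per_frame, fps=30):
    """Rough generation time in minutes: frames multiplied by seconds per frame."""
    return frame_count(seconds, fps) * sec_per_frame / 60


for label, sec_per_frame in [("teacache", 1.5), ("unoptimized", 2.5)]:
    short = estimated_minutes(5, sec_per_frame)
    long = estimated_minutes(60, sec_per_frame)
    print(f"{label}: 5 s video ~ {short} min, 60 s video ~ {long} min")
# teacache: 5 s video ~ 3.75 min, 60 s video ~ 45.0 min
# unoptimized: 5 s video ~ 6.25 min, 60 s video ~ 75.0 min
```

A 5-second clip is 150 frames, so the roughly 3 to 4 minutes quoted for it corresponds to the teacache speed; a full 60-second video (1800 frames) takes on the order of 45 minutes at that rate.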

Key Features in Practice

Low Memory Generation

FramePack's core strength is its low video memory requirements. By compressing the input context, only 6GB of video memory is required to generate 60 seconds of 30fps video. No additional configuration is required and the system automatically optimizes the graphics memory allocation.
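To see why compressing the context keeps memory roughly constant, consider a toy model. The article does not give the actual compression schedule, so the sketch below assumes a geometric one: the frame i steps back in time is compressed by a factor of rate**i. The values base_tokens=1536 and rate=2.0 are illustrative numbers, not taken from the project.

```python
def context_tokens(num_frames, base_tokens=1536, rate=2.0):
    """Total context size when the frame i steps back is compressed by rate**i.

    Closed form of the geometric series
    base_tokens * (1 + 1/rate + ... + 1/rate**(num_frames - 1)).
    """
    return base_tokens * (1 - rate ** -num_frames) / (1 - 1 / rate)


def uncompressed_tokens(num_frames, base_tokens=1536):
    """Total context size when every frame keeps its full token count."""
    return num_frames * base_tokens


for n in (1, 10, 100, 1800):
    print(n, round(context_tokens(n)), uncompressed_tokens(n))
```

Under these assumptions the compressed context never exceeds base_tokens * rate / (rate - 1), which is 3072 tokens here, no matter how many frames have been generated, while the uncompressed context grows linearly with video length. That bounded context is what lets the memory requirement stay fixed for long videos.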

Next frame prediction

FramePack generates video progressively using a next-frame prediction neural network. Users can watch the latent preview of each generated segment in real time in the Gradio interface to check that the video content is as expected.

Gradio Interface

The Gradio interface is simple and intuitive, and supports quick uploading and previewing. Use the --share parameter to expose the interface on the public network, or the --port and --server parameters to set a custom port and server address:

python demo_gradio.py --share --port 7861 --server 0.0.0.0
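If you launch the interface from another script, the same flags can be assembled programmatically. This is a small sketch rather than anything shipped with FramePack: the helper name is hypothetical, and it only uses the --share, --port and --server flags shown in the command above.

```python
import subprocess
import sys


def build_launch_command(share=False, port=None, server=None, script="demo_gradio.py"):
    """Build the argument list for launching the Gradio demo with the current interpreter."""
    command = [sys.executable, script]
    if share:
        command.append("--share")
    if port is not None:
        command += ["--port", str(port)]
    if server is not None:
        command += ["--server", server]
    return command


if __name__ == "__main__":
    command = build_launch_command(share=True, port=7861, server="0.0.0.0")
    print(" ".join(command))
    # Uncomment to actually start the interface from the FramePack directory:
    # subprocess.run(command, check=True)
```

Using sys.executable ensures the demo runs with the same virtual environment interpreter as the calling script.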

Application Scenarios

- Content creation

FramePack is suitable for video creators who want to generate short dynamic videos, for example a dance video generated from a character image for social media content. The low video memory requirement makes it easy for the average user to get started.

- Game development

Developers can use FramePack to generate dynamic scene animations such as character movement or environment changes, saving time on manual modeling. Generation runs at about 1.5 to 2.5 seconds per frame on an RTX 4090, so this suits pre-rendered assets rather than real-time rendering.

- Education and demonstration

Teachers or trainers can generate instructional videos from still images to demonstrate dynamic processes (e.g., science experiment simulations). The Gradio interface is easy to use and suitable for non-technical users.

- Edge computing

FramePack's low video memory requirement (6GB for its 13-billion-parameter model) makes it a candidate for local video generation on edge hardware such as laptop GPUs or embedded systems with a supported GPU.

Q&A

- What GPUs does FramePack support?

NVIDIA RTX 30XX, 40XX and 50XX series GPUs with at least 6GB of video memory. GTX 10XX/20XX cards have not been tested and may not be compatible.

- How long does it take to generate a video?

On an NVIDIA RTX 4090, generation runs at 2.5 seconds per frame unoptimized and 1.5 seconds per frame with teacache enabled. With teacache, 5 seconds of video (150 frames at 30fps) takes about 3-4 minutes.

- How can the quality of generation be improved?

Use high-quality input images, write clear motion prompts, and disable sage-attention and teacache for the final high-quality generation.

- Is it possible to generate extra-long videos?

Yes, FramePack supports generating thousands of frames (e.g. 60 seconds at 30fps). The video memory requirement is fixed and does not increase with video length.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
