Analyst Alberto Romero speculates that OpenAI's GPT-5 is actually long overdue

Let's start the new year in an exciting way!

- May be generated by GPT-5

What if I told you that the GPT-5 is real. Not only is it real, but it's already shaping the world in ways you can't see. Here's a hypothetical: OpenAI has developed GPT-5 but is keeping it in-house because the ROI is much higher than opening it up to millions of people. ChatGPT users. What's more, the ROI they get from the Not money. Rather, it's something else. As you can see, the idea is simple enough; the challenge is stringing together the clues that point to it. This article delves into why I think those clues eventually connect.

Disclaimer in advance: this is pure speculation. The evidence is all public, but there are no leaks or internal rumors to confirm that I am correct. In fact, I am building this theory through this article, not just sharing it. I have no privileged information - and even if I did, I am bound by a confidentiality agreement. This hypothesis is compelling because it logical . Honestly, what else do I need to start this rumor machine?

Whether you believe it or not is up to you. Even if I'm wrong - and we'll eventually know the answer - I think it's a fun detective game. I invite you to engage in speculation in the comments section, but please keep it constructive and thoughtful. Please be sure to read through the entire article first. Other than that, all debate is welcome.

I. Mysterious disappearance of Opus 3.5

Before exploring GPT-5, we have to mention its equally missing distant cousin: Anthropic's Claude Opus 3.5.

As you know, the three major AI labs - OpenAI, Google DeepMind, and Anthropic - all offer model portfolios that cover the price/latency vs. performance spectrum. openAI has GPT-4o, GPT-4o mini, as well as o1 and o1-mini; Google DeepMind offers Gemini Ultra, Pro, and Flash; Anthropic, Claude Opus, Sonnet, and Haiku. The goal is clear: cover as many customer profiles as possible. Some are looking for top performance regardless of cost, while others need an affordable, adequate solution. It all makes sense.

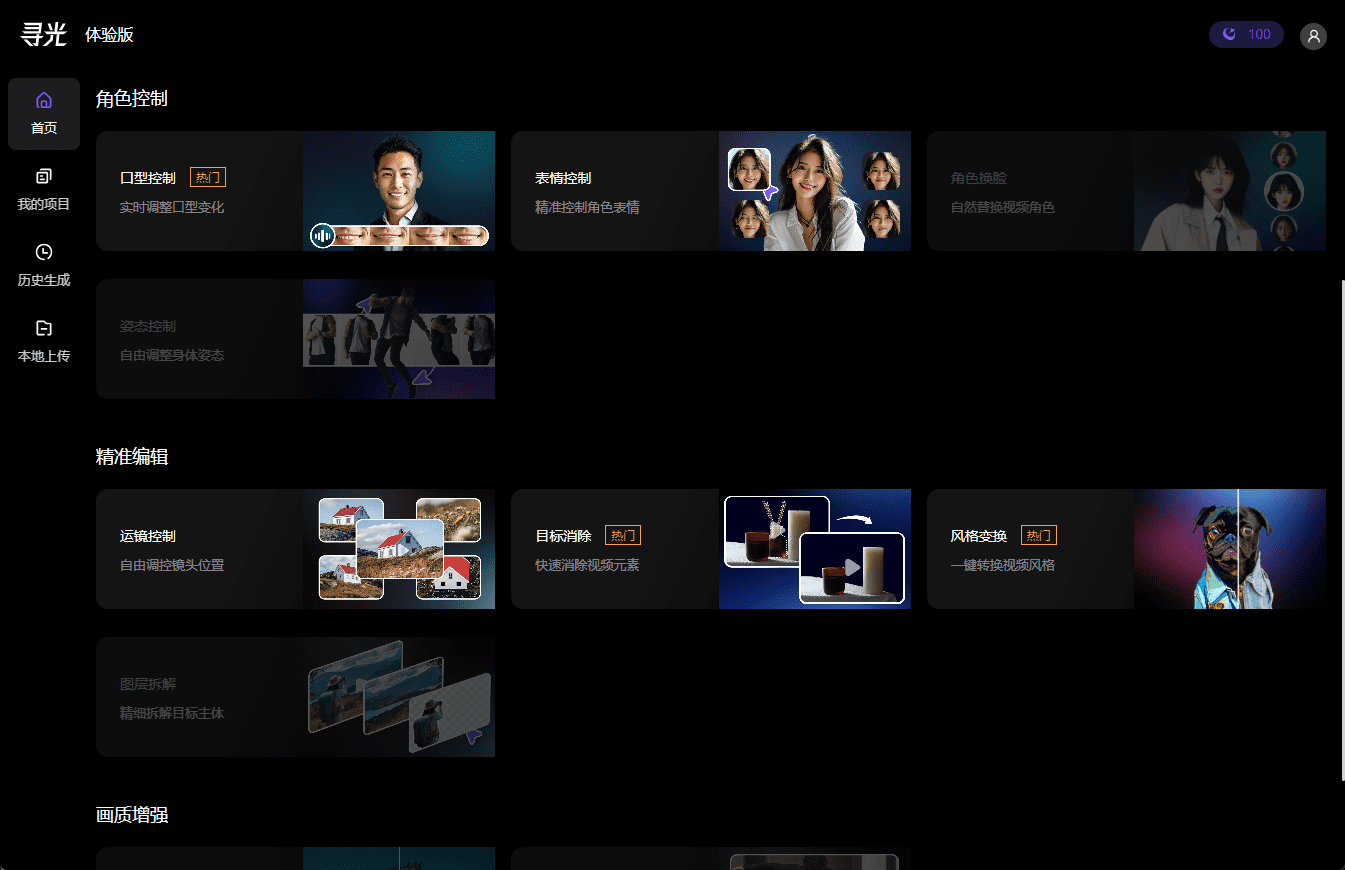

But a strange thing happened in October 2024. While everyone was expecting Anthropic When they released Claude Opus 3.5 as a response to GPT-4o (which was released in May 2024), they instead released an updated version of Claude Sonnet 3.5 (which they started calling Sonnet 3.6) on October 22nd. Opus 3.5 disappeared, leaving Anthropic without a product in direct competition with GPT-4o. GPT-4o. Strange, isn't it? Here's a quick look at the Opus 3.5 timeline:

- On October 28, I wrote in my weekly review article, "[There are] rumors that Sonnet 3.6 is... . an intermediate checkpoint generated during the highly anticipated Opus 3.5 training failure." On the same day, a post appeared in the r/ClaudeAI subforum, "Claude 3.5 Opus has been deprecated," with a link to the Anthropic models page - still no sign of Opus 3.5. Some have speculated that this move was made to maintain investor confidence ahead of a new round of funding.

- On November 11th, Anthropic CEO Dario Amodei dropped Opus 3.5 on the Lex Fridman podcast: "Although we can't give an exact date, we are still planning to release Claude 3.5 Opus." The wording is cautiously ambiguous, but effective.

- On November 13, Bloomberg confirmed early rumors that "After training was completed, Anthropic found that 3.5 Opus outperformed the older version in evaluations, but the boost fell short of expectations given the size of the model and the cost of building the run." Dario did not give a date, seemingly because the results were unsatisfactory despite Opus 3.5 training not failing. Note the focus on Cost to Performance Ratio Not just performance.

- On December 11, semiconductor expert Dylan Patel and his Semianalysis team brought the final twist, offering the explanation that "Anthropic completed the Claude 3.5 Opus training and performed well... ... but did not release it. Because they switched to Generating Synthesis Data with Claude 3.5 Opus that significantly enhances Claude 3.5 Sonnet through bonus modeling."

In short, Anthropic did train Claude Opus 3.5. they dropped the name because the results were not good enough. dario avoided specific dates because he believed that the results could be improved with a different training process. Bloomberg confirms that it outperforms existing models but the cost of inference (the cost to the user of using the model) is unaffordable. dylan's team reveals the connection between Sonnet 3.6 and the missing Opus 3.5: the latter was used internally to generate the synthetic data that boosted the performance of the former.

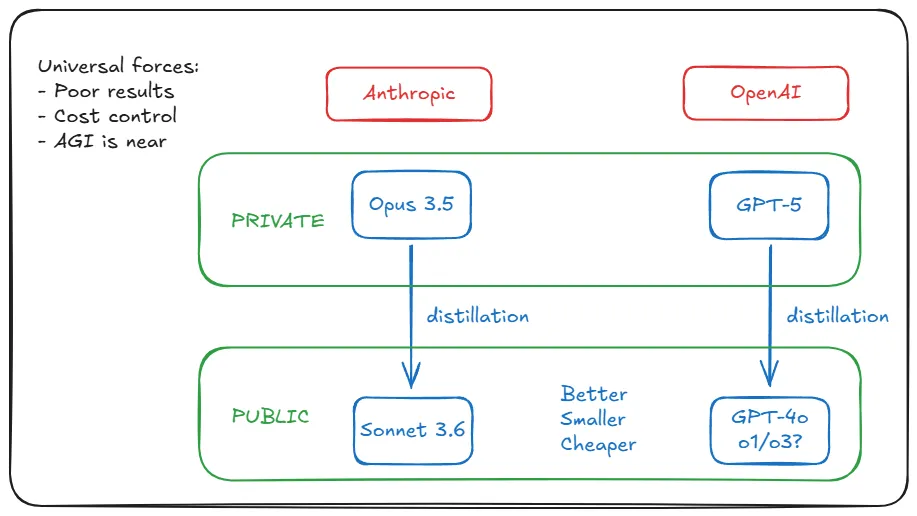

The whole process can be diagrammed as follows:

II. Better and smaller and cheaper?

The process of using a powerful and expensive model to generate data to augment a slightly weaker but more economical model is called distillation. This is common practice. The technique allows AI labs to push past the limitations of pre-training alone and boost the performance of smaller models.

There are different methods of distillation, but we won't go into that. The key thing to remember is that a strong model as a "teacher" can move the "student" model from [small, cheap and fast] + (following a decimal or fraction) slightly less than Transformed into [small, cheap, fast] + large . Distillation turns strong models into gold mines.Dylan explains why this makes sense for Anthropic's Opus 3.5-Sonnet 3.6 combination:

(The inference cost (of the new Sonnet over the old one) has not changed significantly, but the model performance has improved. Why bother releasing 3.5 Opus from a cost perspective when you can get 3.5 Sonnet by post-training with 3.5 Opus?

Back to the cost issue: distillation controls inference expenses while improving performance. This directly addresses the core problem reported by Bloomberg, and Anthropic chose not to release Opus 3.5 not only because of its lackluster results, but also because of its higher internal value. (Dylan points out that this is why the open source community is rapidly catching up to GPT-4 - they're taking gold directly from the OpenAI goldmine.)

The most amazing revelation?Sonnet 3.6 is not only excellent - it reaches the industry leader . Beyond GPT-4o. Anthropic's mid-range model beats OpenAI's flagship by distilling through Opus 3.5 (and probably for other reasons; five months is long enough in AI). Suddenly, the perception of high cost as synonymous with high performance began to crumble.

What happened to the era of "bigger is better", which OpenAI CEO Sam Altman warns is over? I wrote about it. When the top labs became secretive, they stopped sharing parameter numbers. Parameter sizes were no longer reliable, and we wisely shifted to focusing on benchmark performance. the last publicly available parameter size from OpenAI was 175 billion for GPT-3 in 2020. rumors in June 2023 suggested that GPT-4 was a hybrid expert model with ~1.8 trillion parameters. a subsequent detailed evaluation by Semianalysis confirmed that GPT-4 had 1.76 trillion parameters, in July 2023. Semianalysis follow-up detailed evaluation confirmed that GPT-4 had 1.76 trillion parameters in July 2023.

Until December 2024 - a year and a half from now - Ege Erdil, a researcher at EpochAI, an organization that focuses on the future impact of AI, estimates that the parameter scales of frontier models, including GPT-4o and Sonnet 3.6, are significantly smaller than GPT-4 (although both benchmarks outperform GPT-4):

... Current frontier models such as the first-generation GPT-4o and Claude 3.5 Sonnet may be an order of magnitude smaller than GPT-4, with 4o around 200 billion parameters and 3.5 Sonnet around 400 billion ... Although the roughness of the estimates may lead to errors of up to a factor of two.

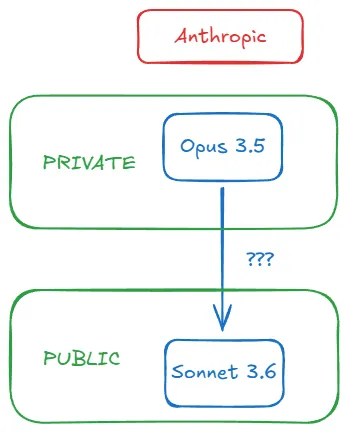

He explains in depth how he arrived at that number without the lab releasing architectural details, but that's not important to us. The point is that the fog is clearing: Anthropic and OpenAI seem to be following similar trajectories. Their latest models are not only better, they're also smaller and cheaper than their predecessors. We know that Anthropic did this by distilling Opus 3.5. But what did OpenAI do?

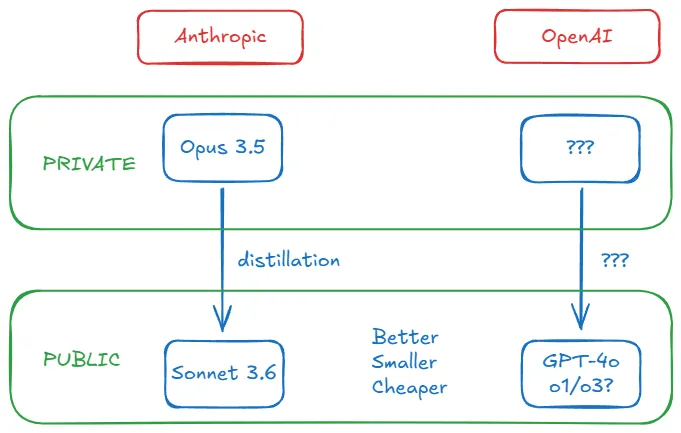

III. AI labs are driven by universalism

One might think that Anthropic's distillation strategy stems from a unique situation - namely, poor Opus 3.5 training results. But the reality is that Anthropic's situation is not unique, and the latest training results from Google DeepMind and OpenAI are equally unsatisfactory. (Note that poor results are not the same as The model is worse. ) The reasons do not matter to us: diminishing returns due to insufficient data, inherent limitations of the Transformer architecture, plateauing of the pre-training scaling law, etc. In any case, Anthropic's particular context is actually universal.

But remember what Bloomberg reports: performance metrics are only as good as the cost. Is this another shared factor? Yes, and Ege explains why: the surge in demand following the ChatGPT/GPT-4 boom. Generative AI is spreading at a rate that makes it difficult for labs to sustain the losses of continued expansion. This forced them to reduce inference costs (training is only once, inference costs grow with user volume and usage). If 300 million users are using the product every week, operational expenses could suddenly be fatal.

The factors that drove Anthropic to enhance Sonnet 3.6 with distillation are affecting OpenAI with exponential intensity. distillation is effective because it turns both of these pervasive challenges into advantages: solving the inference cost problem by providing small models while not releasing big models to avoid public backlash against mediocre performance.

Ege thinks OpenAI might choose the alternative: overtraining. That is, training more data with smaller models in a non-computationally optimal state: "When inference is a major part of the model's expense, it's best to... train more tokens with smaller models." But overtraining is no longer feasible. When inference is a major part of the model's expense, it's better to train more tokens with smaller models." But overtraining is no longer feasible, and AI Labs has run out of high-quality pre-training data, as both Elon Musk and Ilya Sutskever have recently acknowledged.

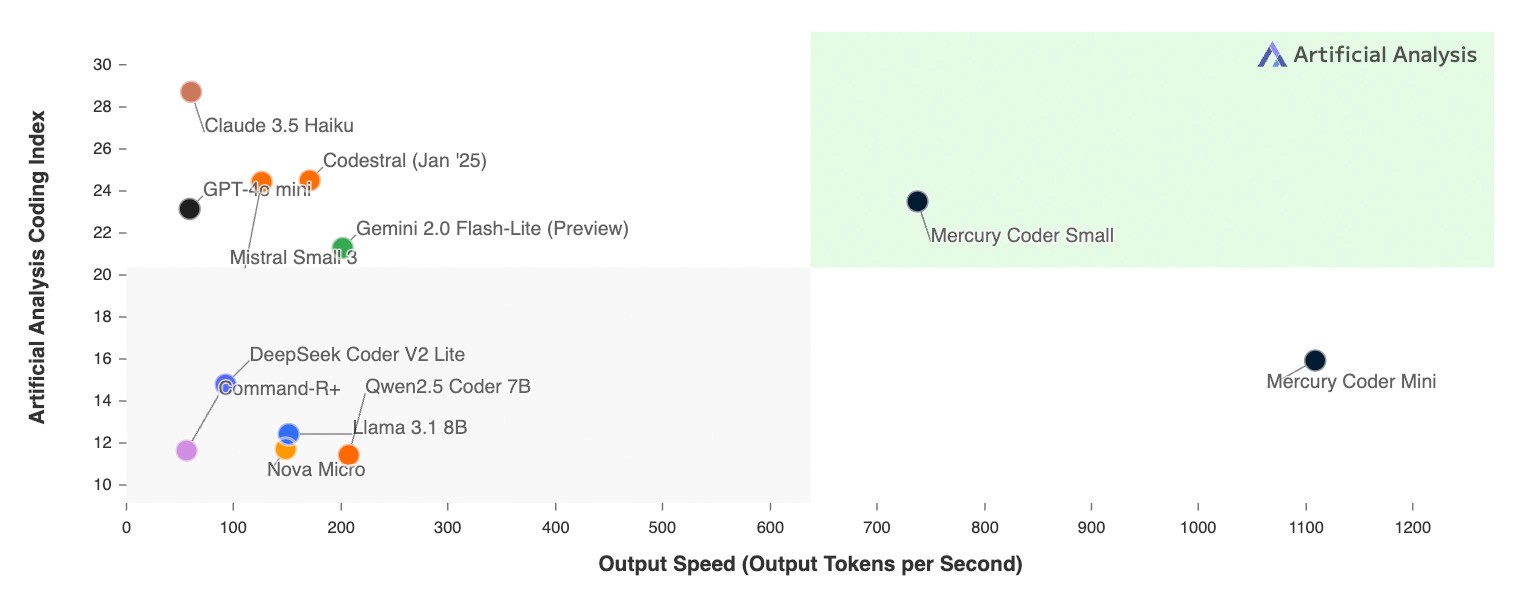

Returning to distillation, Ege concludes, "I think it's likely that both the GPT-4o and the Claude 3.5 Sonnet were distilled from larger models."

All clues up to this point indicate that OpenAI is doing what Anthropic did with Opus 3.5 (train and hide) in the same way (distillation) and for the same reasons (poor results/cost control). This is a discovery. But here's the thing: Opus 3.5 yet Where are the OpenAI counterparts hidden? Is it hidden in the company's basement? Dare you guess its name...

IV. Pioneers must clear the way

I open the analysis by examining Anthropic's Opus 3.5 event for its more transparent information. I then bridge the concept of distillation to OpenAI, explaining that the same underlying forces that drive Anthropic also act on OpenAI. but our theory runs into a new obstacle: as a pioneer, OpenAI may face obstacles that Anthropic has yet to encounter.

For example, the hardware requirements for training GPT-5. Sonnet 3.6 is comparable to GPT-4o, but it was released five months later. We should assume that GPT-5 is at a higher level: more powerful and larger. Not only the reasoning cost, but also the training cost is higher. Five hundred million dollars in training costs may be involved. Is this possible with existing hardware?

Ege is once again unraveling: it's possible. It is not realistic to offer such a behemoth to 300 million users, but training is not a problem:

In principle, existing hardware is sufficient to support models much larger than GPT-4: e.g., a 100 trillion parameter model that is 50 times larger than GPT-4, with an inference cost of about $3,000/million output tokens, and an output rate of 10-20 tokens/second. But for this to be feasible, large models must create significant economic value for customers.

But even Microsoft, Google, or Amazon (the moneymakers of OpenAI, DeepMind, and Anthropic, respectively) can't afford this kind of inference. The solution is simple: if they plan to make trillions of parametric models available to the public, they would have to "create significant economic value". So they don't.

They trained the model. Found "better performance than existing products". But have to accept that "it's not improved enough to justify the huge cost of keeping it running". (Does that sound familiar? The Wall Street Journal reported on the GPT-5 a month ago in terms strikingly similar to Bloomberg's report on Opus 3.5.)

They report mediocre results (with flexibility to adjust the narrative). Retain them internally as teacher models to distill student models. Then release the latter. We get Sonnet 3.6 and GPT-4o, o1, etc. and rejoice in their cheap quality. Expectations for Opus 3.5 and GPT-5 remain intact, even as we grow more impatient. Their goldmine continues to shine.

V. Of course, you have more reasons, Mr. Altman!

V. Of course, you have more reasons, Mr. Altman!

When I got this far in my investigation, I still wasn't completely convinced. It's true that all the evidence suggests that it's entirely plausible for OpenAI, but there's still a gap between "plausible" or even "plausible" and "real". I'm not going to fill in the gap for you - it's just speculation, after all. But I can further strengthen the argument.

Is there more evidence that OpenAI is operating in this manner? Are there more reasons for them to delay the release of GPT-5 other than poor performance and mounting losses, and what information can we extract from the public statements of OpenAI executives about GPT-5? Aren't they risking damage to their reputation by repeatedly delaying the release of the model? After all, OpenAI is the face of the AI revolution, and Anthropic operates in its shadow.Anthropic can afford these maneuvers, but what about OpenAI? Perhaps not without a price.

Speaking of money, let's dig into some relevant details about OpenAI's partnership with Microsoft. First, the well-known fact: the AGI Articles. In OpenAI's blog post about their structure, they have five governance clauses that define how they operate, their relationship with non-profit organizations, their relationship with the Board of Directors, and their relationship with Microsoft. The fifth clause defines AGI as "a highly autonomous system capable of outperforming humans in most economically valuable endeavors" and establishes that once the OpenAI Board of Directors declares that AGI has been realized, "the system will be excluded from the intellectual property licensing and other commercial terms with Microsoft, which are subject only to the terms of the Microsoft license and other commercial terms. other commercial terms with Microsoft, which apply only to technology prior to AGI."

Needless to say, neither company wants the partnership to break down. openAI sets the terms, but does everything it can to avoid having to abide by it. One way to do this is to delay the release of systems that might be labeled as AGI. "But GPT-5 is certainly not AGI," you'll say. And here's a second fact that almost no one knows: OpenAI and Microsoft have a secret definition of AGI that, while irrelevant for scientific purposes, legally defines their partnership: AGI is an "AI system capable of generating at least $100 billion in profits". AGI is an AI system "capable of generating at least $100 billion in profits.

If OpenAI hypothetically delayed the release on the pretext that GPT-5 wasn't ready, they'd accomplish something else besides controlling costs and preventing a public backlash: they'd avoid announcing whether or not it met the threshold for being classified as AGI. While $100 billion in profits is a staggering figure, there's nothing stopping ambitious customers from making that much profit on top of it. On the other hand, let's be clear: if OpenAI predicts that GPT-5 will generate $100 billion in annual recurring revenue, they won't mind triggering the AGI clause and parting ways with Microsoft.

Most of the public reaction to OpenAI not releasing GPT-5 was based on the assumption that they weren't releasing it because it wasn't good enough. Even if this were true, no skeptic stops to think that OpenAI might have a better internal use case than external use. There is a huge difference between creating a great model and creating a great model that can serve 300 million people cheaply. If you can't do it, you won't do it. But again, if you unnecessary Do it and you won't do it. They used to provide us with their best models because they needed our data. It's no longer so necessary. And they're no longer chasing our money. That's Microsoft's business, not theirs. They want AGI, then ASI. they want to leave a legacy.

VI. Why this changes everything

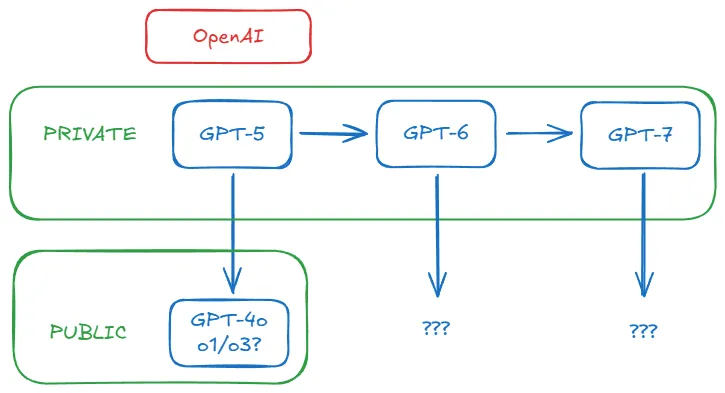

We're nearing the end. I believe I've made enough arguments to build a solid thesis: it's very likely that OpenAI already has GPT-5 in-house, just as Anthropic has Opus 3.5. It's even possible that OpenAI will never release GPT-5. the public now measures performance in terms of o1/o3, not just GPT-4o or Claude Sonnet 3.6. with OpenAI exploring the law of scaling in testing, the bar that needs to be crossed for GPT-5 keeps going up. How can they possibly release a GPT-5 that truly outperforms o1, o3, and the upcoming o-series models, especially when they are rolling them out at such a rapid pace? Besides, they don't need our money or data anymore.

Training new base models - GPT-5, GPT-6 and later - always makes sense internally for OpenAI, but not necessarily as a product. That's probably over. The only important goal for them now is to continue to generate better data for the next generation of models. From now on, the base model may operate in the background, empowering other models to achieve feats they couldn't achieve on their own - like a reclusive old man passing on wisdom from a secret cave, only this cave is a giant data center. Whether we see him or not, we will experience the consequences of his wisdom.

Even if GPT-5 is eventually released, this fact suddenly seems almost irrelevant. If OpenAI and Anthropic do get off the ground Recursive self-improvement operations (though still with human involvement), then it will no longer matter what they give us publicly. They will get further and further ahead - just as the universe is expanding so fast that light from distant galaxies can no longer reach us.

Maybe that's why OpenAI jumped from o1 to o3 in just three months. It's also why they're jumping to o4 and o5. That's probably why they've been so excited on social media lately. Because they've implemented a new and improved mode of operation.

Do you really think being close to AGI means you will be able to use increasingly powerful AI? Will they release every advancement for us to use? Of course you won't believe this. They meant it when they first said their models would push them so far that no one else would be able to catch up. Each new generation of models is an engine of escape velocity. From the stratosphere, they have waved goodbye.

It remains to be seen if they will return.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...