FastDeploy - Baidu's high-performance large model inference and deployment tool

What is FastDeploy?

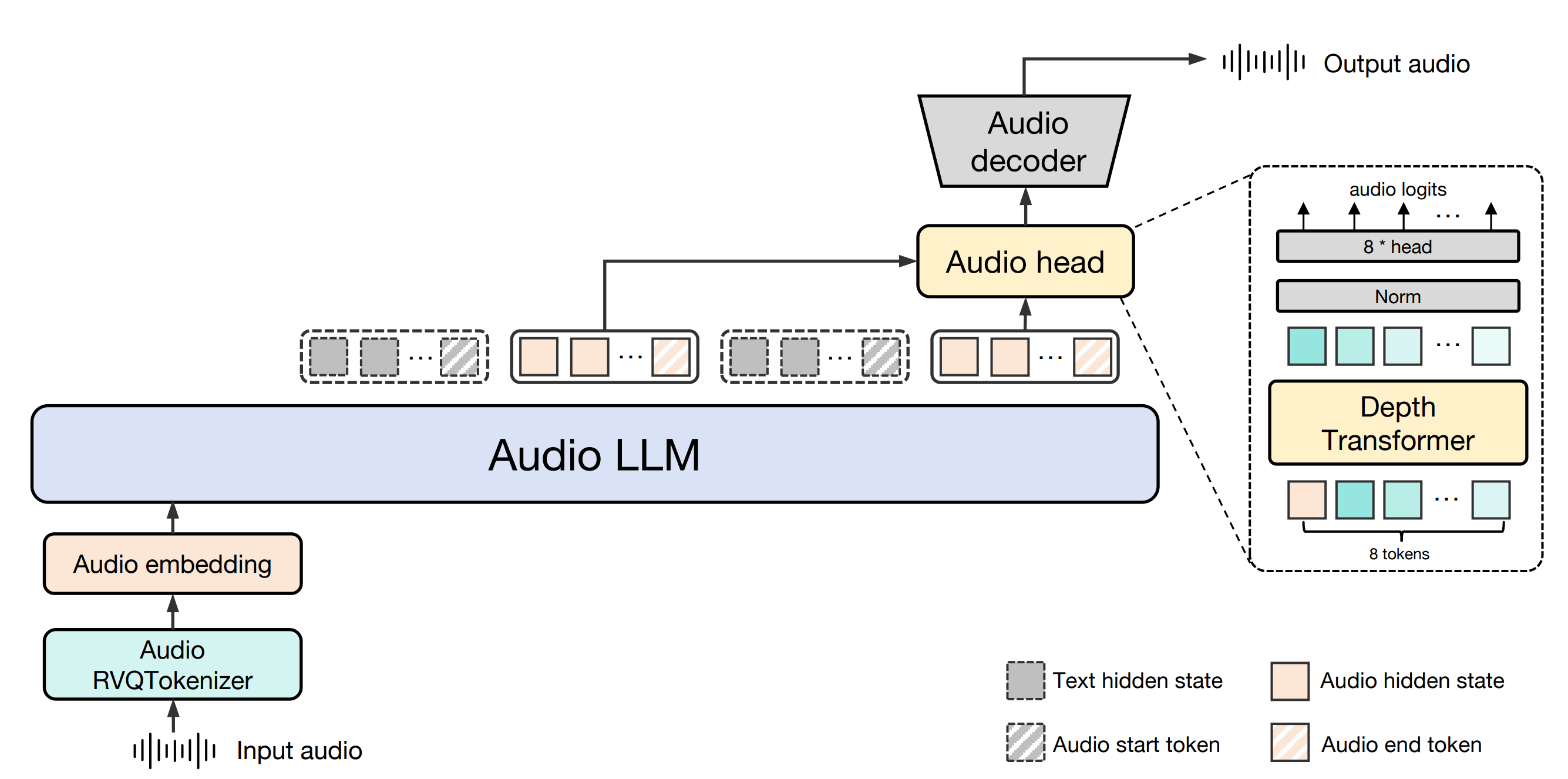

FastDeploy is a high-performance inference and deployment tool from Baidu, designed for Large Language Models (LLMs) and Visual Language Models (VLMs). It is built on the PaddlePaddle framework and supports multiple hardware platforms (e.g., NVIDIA GPUs and Kunlunxin XPUs). Features such as load balancing, quantization, and distributed inference improve model inference performance and reduce hardware costs. FastDeploy is compatible with the OpenAI API and vLLM interfaces and supports both local and service-based inference, which simplifies the deployment of large models. The latest version, FastDeploy 2.0, introduces 2-bit quantization to further optimize performance and support efficient deployment of larger models.

Main features of FastDeploy

- Efficient inference deployment: Supports a variety of hardware platforms, such as NVIDIA GPUs and Kunlunxin XPUs, and provides one-click deployment to simplify the inference deployment of large models.

- Performance optimization: Speeds up model inference with quantization (e.g., 2-bit quantization), CUDA Graph optimization, and speculative decoding.

- Distributed inference: Supports large-scale distributed inference and optimizes communication efficiency to improve the inference efficiency of large models.

- Load balancing and scheduling: Uses Redis-based real-time load sensing and distributed load-balancing scheduling to optimize cluster performance and keep the system stable under high load.

- Ease of use: Provides a clean Python interface and detailed documentation so that users can get started quickly.

- 2-bit quantization: Greatly reduces memory footprint and hardware resource requirements, supporting the deployment of models with hundreds of billions of parameters on a single card.

- Compatibility: Compatible with the OpenAI API and vLLM interfaces, and supports both local and service-based inference. Local inference takes 4 lines of code, and the service starts with a single command.
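
Since the service is described as OpenAI API compatible, a client should be able to talk to it with a standard chat-completions request. The following is a minimal sketch using only the Python standard library. The base URL `http://localhost:8080`, the `/v1/chat/completions` path (taken from the OpenAI API convention), and the model name `default` are assumptions for illustration and should be checked against the FastDeploy documentation.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (nothing is sent yet)."""
    payload = {
        "model": model,  # assumed model name, depends on how the server was started
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",  # path assumed from the OpenAI API convention
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 30.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("http://localhost:8080", "default", "Hello!")
    print(req.full_url)
    # Uncomment once a server is actually running at the assumed address:
    # print(send_chat_request(req))
```

Building the request separately from sending it keeps the payload easy to inspect before any server is available.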

FastDeploy's official website

- Project website: https://paddlepaddle.github.io/FastDeploy/

- GitHub repository: https://github.com/PaddlePaddle/FastDeploy

How to use FastDeploy

- Install dependencies: Install the PaddlePaddle framework and FastDeploy:

pip install paddlepaddle fastdeploy

- Prepare the model: Download and prepare the model files (e.g., a pre-trained or converted model).

- Local inference: Run inference locally through the Python interface:

from fastdeploy import inference

# Load the model

model = inference.Model("path/to/model")

# Prepare the input data (a batch containing one sequence of three tokens)

input_data = {"input_ids": [[1, 2, 3]], "attention_mask": [[1, 1, 1]]}

# Run inference

result = model.predict(input_data)

print(result)

- Service-based deployment: Start an inference service:

fastdeploy serve --model path/to/model --port 8080

- Performance optimization: Optimize the model with quantization:

from fastdeploy import quantization

quantized_model = quantization.quantize_model("path/to/model", "path/to/quantized_model", quantization_type="2-bit")

FastDeploy's Core Benefits

- High-performance inference: Uses quantization, CUDA Graph, and other techniques to speed up inference, and supports multiple hardware platforms to make full use of hardware performance.

- Efficient deployment: Provides a clean Python interface and command-line tools, supports local and service-based inference, and simplifies the deployment process.

- Resource optimization: 2-bit quantization greatly reduces GPU memory footprint and supports single-card deployment of very large models, while load balancing improves cluster resource utilization.

- Ease of use: The interface is simple and well documented, is compatible with the OpenAI API and vLLM interfaces, and supports starting services quickly.

- Multi-scenario application: Applies to natural language processing, multimodal applications, industrial-grade deployments, academic research, and enterprise applications.
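
To see why lower-bit quantization reduces memory footprint, it helps to estimate the storage needed for the weights alone: number of parameters times bits per weight, divided by 8 to get bytes. The sketch below does this arithmetic for a hypothetical 300-billion-parameter model. The parameter count is an illustrative assumption, and the estimate ignores quantization metadata (such as scales), any layers kept at higher precision, the KV cache, and activations, so real usage will be higher.

```python
def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


if __name__ == "__main__":
    num_params = 300e9  # hypothetical 300-billion-parameter model
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4), ("2-bit", 2)]:
        print(f"{label:>6}: {weight_memory_gib(num_params, bits):8.1f} GiB")
```

Under these assumptions, the weights shrink from roughly 559 GiB at 16 bits to about 70 GiB at 2 bits, an 8x reduction, which is what brings models of this size within reach of a single high-memory accelerator.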

Who FastDeploy is for

- Enterprise developers: Deploy large models quickly and optimize resources to reduce costs and improve the efficiency of enterprise services.

- Data scientists and researchers: Run high-performance inference experiments and multimodal studies, with support for efficient model optimization and experimentation.

- System architects: Design large-scale distributed inference systems and tune load balancing to keep the system stable.

- AI application developers: Build natural language processing and multimodal applications with better performance and user experience.

- Academic researchers: Study model optimization and multimodal techniques and run experiments efficiently.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
