Fast-Agent: Declarative Syntax and MCP Integration for Rapidly Building Multi-Agent Workflows

General Introduction

Fast-Agent is an open-source tool maintained by evalstate on GitHub, designed to help developers quickly define, test, and build multi-agent workflows. Built on a simple declarative syntax, it integrates with MCP (Model Context Protocol) servers, so users can focus on designing prompts and agent logic instead of tedious configuration. Fast-Agent offers multiple workflow modes (e.g., chain, parallel, evaluator-optimizer), a built-in command-line interface (CLI), and interactive chat, and is suitable for development scenarios ranging from prototyping to production deployment. The project is licensed under Apache 2.0, accepts community contributions, and emphasizes ease of use and flexibility.

Feature List

- Agent definition: Define agents quickly with simple decorators, with support for custom instructions and MCP server calls.

- Workflow construction: Supports multiple workflow modes such as Chain, Parallel, Evaluator-Optimizer, Router and Orchestrator.

- Model selection: Easily switch between different models (e.g. o3-mini, sonnet) and test the interaction between the model and the MCP server.

- Interactive chat: Supports real-time conversations with individual agents or workflow components for easy debugging and optimization.

- Test support: Built-in testing capabilities validate the behavior of agents and workflows, and are suitable for integration into continuous integration (CI) pipelines.

- CLI operation: Provides command-line tools to simplify installation, running and debugging.

- Human input: Agents may request human input to obtain additional context for a task.

- Rapid prototyping: Going from a simple configuration file to a running agent application takes only a few minutes.

Usage Guide

The core goal of Fast-Agent is to lower the barrier to multi-agent development. Below is a detailed installation and usage guide to help users get up and running quickly.

Installation process

Fast-Agent requires a Python environment, and the uv package manager is recommended. The installation steps are as follows:

- Install Python and uv

Ensure that Python 3.9 or later is installed on your system, and then install uv:

pip install uv

Verify the installation:

uv --version

- Install Fast-Agent

Install from PyPI using uv:

uv pip install fast-agent-mcp

For full feature support (e.g., file system or Docker MCP server), run:

uv pip install fast-agent-mcp[full]

- Initialize the configuration

After the installation is complete, generate sample configuration files and agents:

fast-agent setup

This generates agent.py, fastagent.config.yaml, and related files.

- Verify Installation

Check the version:

fast-agent --version

If the version number is returned, the installation was successful.
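The installation checks above can also be scripted. The following is a minimal sketch using only the Python standard library; the helper name check_environment is hypothetical, and the 3.9 minimum is simply the figure quoted in this article.

```python
import shutil
import sys


def check_environment(min_version=(3, 9), tools=("uv", "fast-agent")):
    """Return a dict saying whether each prerequisite appears to be available."""
    # Compare only (major, minor) against the required minimum.
    report = {"python": sys.version_info[:2] >= tuple(min_version)}
    for tool in tools:
        # shutil.which returns the executable path, or None if it is not on PATH.
        report[tool] = shutil.which(tool) is not None
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'ok' if ok else 'missing'}")
```

A "missing" entry for uv or fast-agent only means the executable is not on PATH in the current shell; it may still be installed inside a virtual environment that is not activated.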

Usage

Fast-Agent supports running agents and workflows from the command line or from code, as described below.

Creating and running a basic agent

- Define the agent

Edit agent.py and add a simple agent:

import asyncio
from mcp_agent.core.fastagent import FastAgent

# Create the application
fast = FastAgent("Simple Agent")

# Define the agent through a decorator
@fast.agent(instruction="Given an object, respond with its estimated size.")
async def main():
    async with fast.run() as agent:
        # Start an interactive chat session with the agent
        await agent()

if __name__ == "__main__":
    asyncio.run(main())
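For readers unfamiliar with the decorator style used here, the sketch below shows the general pattern of registering a definition through a decorator. It is a toy illustration, not Fast-Agent's internals: the class MiniRegistry and the agent name "sizer" are hypothetical.

```python
import asyncio


class MiniRegistry:
    """Toy stand-in that records agent definitions made through a decorator."""

    def __init__(self, name):
        self.name = name
        self.agents = {}

    def agent(self, name="default", instruction=""):
        def decorator(func):
            # Record the definition, then return the function unchanged
            # so that several decorators can be stacked on one function.
            self.agents[name] = instruction
            return func
        return decorator


registry = MiniRegistry("Simple Agent")


@registry.agent(name="sizer", instruction="Given an object, respond with its estimated size.")
async def main():
    # The decorated function can look up whatever was registered.
    return sorted(registry.agents)


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Because the decorator returns the function unchanged, stacking several decorators on one function registers several agents, which is the shape the workflow examples in this article rely on.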

- Run the agent

Run it from the command line:

uv run agent.py

This starts an interactive chat mode; enter an object name (e.g. "the moon") and the agent returns its estimated size.

- Specify the model

Use the --model parameter to select a model:

uv run agent.py --model=o3-mini.low

Creating Workflows

- Generate workflow templates

Use the bootstrap command to generate examples:

fast-agent bootstrap workflow

This creates a directory of chained workflow examples showing how to build effective agents.

- Running a workflow

Go to the generated workflow directory and run it:

uv run chaining.py

The system fetches the content from the specified URL and generates a social media post.
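Conceptually, a chain passes the output of one agent to the next. The following is a minimal sketch of that idea in plain asyncio; it does not call Fast-Agent or any model, and the stub functions and the run_chain helper are hypothetical stand-ins.

```python
import asyncio


async def url_fetcher(url):
    # Stand-in for an agent that would fetch and summarize a page.
    return f"summary of {url}"


async def social_media(text):
    # Stand-in for an agent that writes a post; truncate to 280 characters.
    return f"Post: {text}"[:280]


async def run_chain(steps, message):
    """Feed the message through each step in order."""
    for step in steps:
        message = await step(message)
    return message


if __name__ == "__main__":
    print(asyncio.run(run_chain([url_fetcher, social_media], "http://example.com")))
```

Each step only sees the previous step's output, so the order of the list determines the behavior of the chain.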

Working with Key Features

- Chain workflow (Chain)

Edit chaining.py and define a chained workflow:

@fast.agent("url_fetcher", "Given a URL, provide a summary", servers=["fetch"])
@fast.agent("social_media", "Write a 280 character post for the text.")
async def main():
    async with fast.run() as agent:
        result = await agent.social_media(await agent.url_fetcher("http://example.com"))
        print(result)

After configuring the MCP server in fastagent.config.yaml, run:

uv run chaining.py

- Parallel workflow (Parallel)

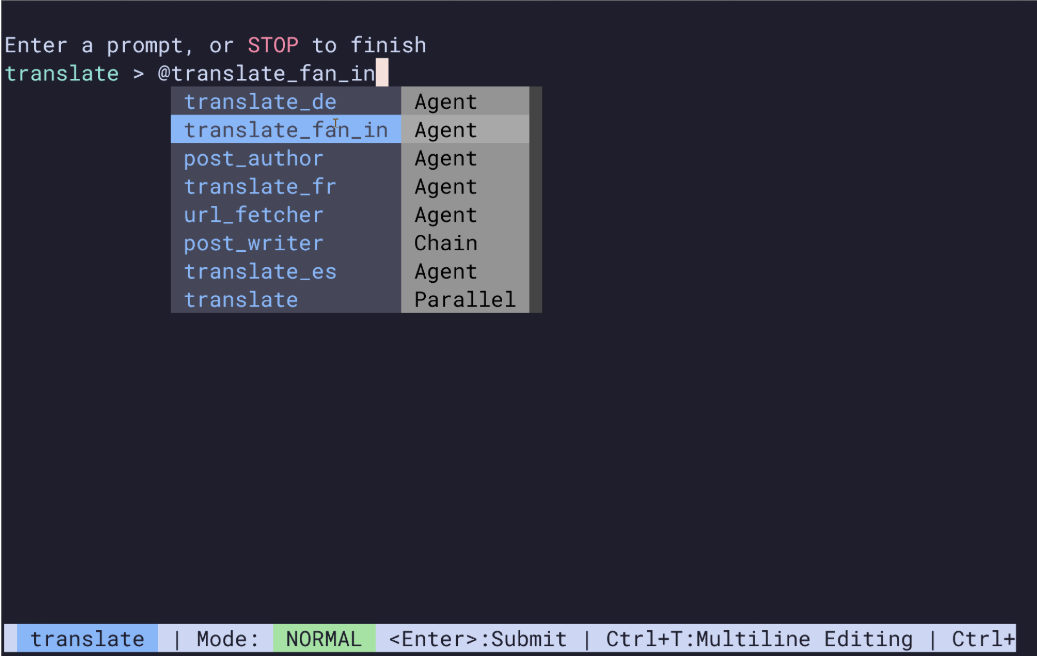

Define a multilingual translation workflow:

@fast.agent("translate_fr", "Translate to French")
@fast.agent("translate_de", "Translate to German")
@fast.parallel(name="translate", fan_out=["translate_fr", "translate_de"])
async def main():
    async with fast.run() as agent:
        await agent.translate.send("Hello, world!")

Once run, the text is translated into both French and German.
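The fan-out idea can be illustrated without Fast-Agent: the same message is sent to several agents concurrently and the replies are collected. This is a minimal sketch in plain asyncio; the stub translators and the fan_out helper are hypothetical and perform no real translation.

```python
import asyncio


async def translate_fr(text):
    # Stand-in for a French translation agent.
    return f"[fr] {text}"


async def translate_de(text):
    # Stand-in for a German translation agent.
    return f"[de] {text}"


async def fan_out(agents, message):
    """Send the same message to every agent concurrently; return replies by name."""
    # asyncio.gather preserves the order of its arguments in the result list.
    replies = await asyncio.gather(*(agent(message) for agent in agents.values()))
    return dict(zip(agents, replies))


if __name__ == "__main__":
    agents = {"translate_fr": translate_fr, "translate_de": translate_de}
    print(asyncio.run(fan_out(agents, "Hello, world!")))
```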

- Human input

Define an agent that can request human input:

@fast.agent(instruction="Assist with tasks, request human input if needed.", human_input=True)
async def main():
    async with fast.run() as agent:
        await agent.send("print the next number in the sequence")

At runtime, the user is prompted for more information if the agent needs it.
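The interaction described above, where an agent pauses to ask a person for missing context, can be sketched as a callback. This is an illustration only, not how Fast-Agent implements human input; the function next_number_agent and the ask_human parameter are hypothetical, and the arithmetic-sequence rule is an assumption made for the example.

```python
import asyncio


async def next_number_agent(task, ask_human):
    """Toy agent: asks a human for the sequence before answering."""
    # ask_human is any callable that takes a prompt and returns a string;
    # in a terminal session it could simply be the built-in input().
    raw = ask_human(f"{task}: which sequence? Enter comma-separated numbers: ")
    numbers = [int(part) for part in raw.split(",")]
    # Assume an arithmetic sequence and extend it by the last step size.
    step = numbers[-1] - numbers[-2]
    return numbers[-1] + step


if __name__ == "__main__":
    # A canned reply stands in for a person typing at the prompt.
    canned = lambda prompt: "2, 4, 6"
    print(asyncio.run(next_number_agent("print the next number in the sequence", canned)))
```

Passing the input source as a parameter keeps the agent testable, since a canned reply can replace the interactive prompt.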

- Evaluator-Optimizer

Generate a research workflow:

fast-agent bootstrap researcher

Edit the configuration file and run it; the agent generates content and refines it until the result is satisfactory.
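The generate-then-refine loop behind this mode can be sketched in a few lines. The example below is a toy in plain asyncio with no model calls; the generator and evaluator stubs, the scoring rule, and the refine helper with its min_score and max_rounds parameters are all hypothetical.

```python
import asyncio


async def generator(draft, feedback):
    # Stand-in for a generating agent: any feedback triggers one revision.
    if feedback:
        return draft + " (revised)"
    return draft


async def evaluator(draft):
    # Stand-in for an evaluating agent: score the draft by its revision count.
    score = draft.count("(revised)")
    return score, "needs more detail"


async def refine(initial, min_score=2, max_rounds=5):
    """Alternate generation and evaluation until the score is high enough."""
    draft, feedback, score = initial, "", 0
    for _ in range(max_rounds):
        draft = await generator(draft, feedback)
        score, feedback = await evaluator(draft)
        if score >= min_score:
            break  # Good enough: stop refining.
    return draft, score


if __name__ == "__main__":
    print(asyncio.run(refine("report")))
```

The max_rounds cap matters in practice: without it, an evaluator that is never satisfied would keep the loop running indefinitely.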

Configuring the MCP Server

Edit fastagent.config.yaml and add the server:

servers:
  fetch:
    type: "fetch"
    endpoint: "https://api.example.com"

At runtime, the agent calls this server to obtain data.
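An agent's servers=["fetch"] list refers to entries in this configuration by name. The sketch below shows that lookup using a plain dictionary that mirrors the YAML shown in this article; the helper resolve_servers is hypothetical, and the layout is not guaranteed to match the schema of any particular Fast-Agent release.

```python
def resolve_servers(config, requested):
    """Return the settings for each requested server, or raise if one is missing."""
    servers = config.get("servers", {})
    missing = [name for name in requested if name not in servers]
    if missing:
        raise KeyError(f"servers not configured: {', '.join(missing)}")
    return {name: servers[name] for name in requested}


# Dictionary form of the YAML example from this article (an assumed layout).
config = {
    "servers": {
        "fetch": {"type": "fetch", "endpoint": "https://api.example.com"},
    }
}

if __name__ == "__main__":
    print(resolve_servers(config, ["fetch"]))
```

Failing early on an unknown server name surfaces configuration typos before any agent runs.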

Example of operation flow

Suppose you need to generate social media posts from URLs:

- Run fast-agent bootstrap workflow to generate templates.

- Edit chaining.py to set the URL and the MCP server.

- Run uv run chaining.py to view the generated 280-character post.

- Use the --quiet parameter to return only the result: uv run chaining.py --quiet

Notes

- Windows users: The file system and Docker MCP server configuration need to be adjusted, as described in the notes in the generated configuration file.

- Debugging: If a run fails, add --verbose to view detailed logs: uv run agent.py --verbose

With these steps, users can quickly install Fast-Agent and use it to build and test multi-agent workflows for research, development and production scenarios.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
