Wujie-Emu3.5: an open-source multimodal world model from the Beijing Academy of Artificial Intelligence (BAAI)

What is Wujie-Emu3.5?

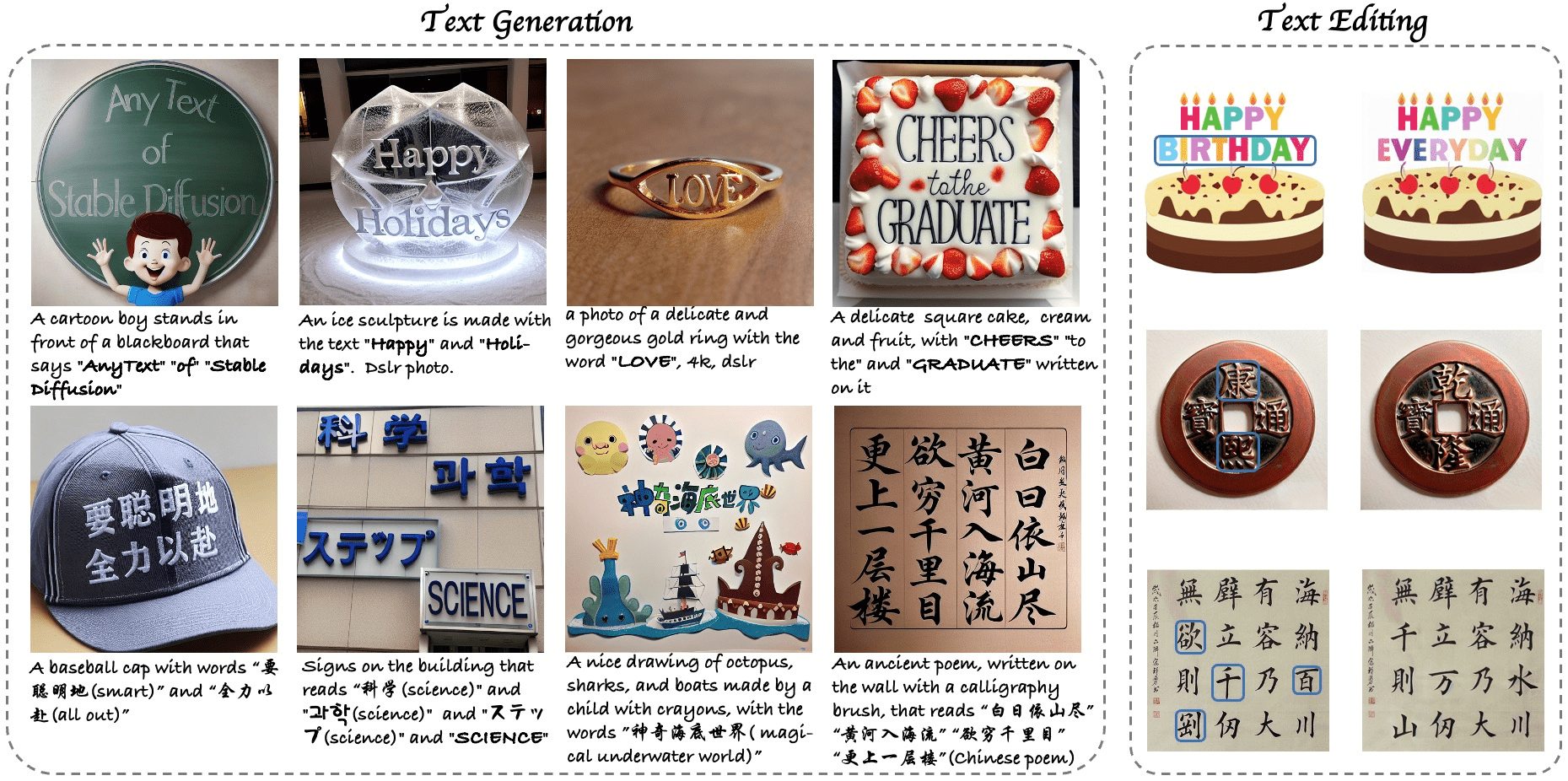

Wujie-Emu3.5 is an open-source multimodal world model from the Beijing Academy of Artificial Intelligence (BAAI, also known as the Zhiyuan Institute). It has 34 billion parameters and native world-modeling capability. Trained on 10 trillion multimodal tokens (including about 790 years of video data), it can simulate physical laws and handle tasks such as interleaved text-image generation, visual guidance, and world exploration. Its "Discrete Diffusion Adaptation" (DiDA) technique speeds up image generation by about 20 times, and the model is reported to surpass the Nano Banana model in performance. The model has been open-sourced and is applicable to fields such as embodied intelligence and virtual scene construction.
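
To put the quoted figures in perspective, the short calculation below converts them into more familiar units. It is only a back-of-the-envelope sketch: the 790 years, 34 billion parameters, 10 trillion tokens, and 20x speedup come from the article, while the average year length and the 120-second baseline latency are assumptions chosen purely for illustration.

```python
# Back-of-the-envelope scale figures based on the numbers quoted in the article.
VIDEO_YEARS = 790          # video duration quoted in the article
PARAMETERS = 34e9          # 34 billion parameters
TRAINING_TOKENS = 10e12    # 10 trillion multimodal tokens
SPEEDUP = 20               # quoted image-generation speedup

HOURS_PER_YEAR = 365.25 * 24  # assumption: average calendar year

video_hours = VIDEO_YEARS * HOURS_PER_YEAR
tokens_per_parameter = TRAINING_TOKENS / PARAMETERS


def accelerated_seconds(baseline_seconds: float, speedup: float = SPEEDUP) -> float:
    """Latency per image after the speedup, given a hypothetical baseline latency."""
    return baseline_seconds / speedup


print(f"{video_hours:,.0f} hours of video")
print(f"{tokens_per_parameter:.0f} training tokens per parameter")
# The 120 s baseline is a made-up example value, not a measured figure.
print(f"{accelerated_seconds(120.0):.1f} s per image from a 120 s baseline")
```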

Functional features of Wujie-Emu3.5

- Multimodal generation capability: Generates high-quality text, image, and video content that seamlessly blends multiple modalities.

- World modeling and dynamic prediction: Trained on large-scale video data, the model understands and predicts the physical dynamics and spatiotemporal continuity of the real world.

- Visual narrative and guidance: Generates coherent illustrated stories and step-by-step visual tutorials, providing an immersive narrative experience and intuitive how-to instructions.

- Efficient inference acceleration: Discrete Diffusion Adaptation (DiDA) substantially increases image generation speed while maintaining generation quality.

- Decomposition of complex tasks: Break down complex robot manipulation tasks into multiple subtasks, providing detailed step-by-step instructions and keyframe images.

- Strong generalization: Performs well on multiple out-of-distribution tasks and adapts to different application scenarios and task requirements.
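
The task-decomposition feature above describes an output made of ordered subtasks, each with an instruction and a keyframe image. A minimal sketch of how an application might hold such a plan is shown below. The class names, field names, file paths, and the example task are all hypothetical and are not taken from the Emu3.5 code base or its output format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SubTask:
    """One step of a decomposed task (hypothetical structure)."""
    index: int
    instruction: str
    keyframe_path: str  # path to the generated keyframe image (illustrative)


@dataclass
class TaskPlan:
    """An ordered list of subtasks for a single high-level goal."""
    goal: str
    steps: List[SubTask] = field(default_factory=list)

    def add_step(self, instruction: str, keyframe_path: str) -> SubTask:
        # Steps are numbered from 1 in the order they are added.
        step = SubTask(len(self.steps) + 1, instruction, keyframe_path)
        self.steps.append(step)
        return step

    def render(self) -> str:
        # Produce a plain-text summary of the plan, one line per step.
        lines = [f"Goal: {self.goal}"]
        for step in self.steps:
            lines.append(f"{step.index}. {step.instruction} [keyframe: {step.keyframe_path}]")
        return "\n".join(lines)


# Made-up example task; a real plan would come from the model's output.
plan = TaskPlan(goal="Place the cup on the shelf")
plan.add_step("Move the gripper above the cup", "frames/step1.png")
plan.add_step("Grasp the cup and lift it", "frames/step2.png")
plan.add_step("Move to the shelf and release the cup", "frames/step3.png")
print(plan.render())
```

Keeping the instruction and keyframe together per step makes it straightforward to hand each subtask to a downstream controller or to display the plan as a visual tutorial.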

Core advantages of Wujie-Emu3.5

- Native multimodal fusion: Built on the unified objective of "next state prediction", the model deeply fuses the text, image, and video modalities, breaks down the boundaries between them, and provides a more natural and coherent multimodal interaction experience.

- Efficient inference acceleration: Discrete Diffusion Adaptation (DiDA) significantly speeds up image generation, reaching inference efficiency comparable to top diffusion models while maintaining high generation quality.

- Powerful world modeling capabilities: By pre-training on large-scale video data, the model is able to internalize real-world physical dynamics and causal laws to support complex spatio-temporal reasoning and world exploration tasks.

- Rich application scenarios: The model suits content creation, education and training, virtual reality, robot control, and many other fields, offering technical support and new solutions across industries.

- Openness and extensibility: BAAI has open-sourced Emu3.5 to give the global AI research community a strong base model for further research and development and to advance multimodal intelligence technologies.
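
The inference-acceleration point above rests on a general idea: predicting many tokens per forward pass instead of one. The toy comparison below counts forward passes for token-by-token decoding versus a generic masked parallel-decoding schedule. It is not the DiDA algorithm, whose details are not described in this article; `toy_predict`, the token count, and the number of rounds are all made up for illustration, and the ratio of passes should not be read as a wall-clock speedup.

```python
import math
from typing import List, Optional, Tuple

MASK = None  # placeholder for a position that has not been decoded yet


def toy_predict(position: int) -> int:
    """Stand-in for a model prediction: a deterministic fake token id."""
    return (position * 7 + 3) % 100


def decode_sequential(num_tokens: int) -> Tuple[List[int], int]:
    """Token-by-token decoding: each forward pass yields exactly one token."""
    tokens: List[int] = []
    passes = 0
    for position in range(num_tokens):
        passes += 1
        tokens.append(toy_predict(position))
    return tokens, passes


def decode_parallel(num_tokens: int, rounds: int) -> Tuple[List[Optional[int]], int]:
    """Generic masked parallel decoding: each pass fills a batch of masked positions."""
    if rounds < 1:
        raise ValueError("rounds must be at least 1")
    tokens: List[Optional[int]] = [MASK] * num_tokens
    per_round = math.ceil(num_tokens / rounds)
    passes = 0
    while any(token is MASK for token in tokens):
        passes += 1
        masked = [i for i, token in enumerate(tokens) if token is MASK]
        for i in masked[:per_round]:
            tokens[i] = toy_predict(i)
    return tokens, passes


# Hypothetical sizes: 1024 image tokens, 8 parallel rounds.
seq_tokens, seq_passes = decode_sequential(1024)
par_tokens, par_passes = decode_parallel(1024, rounds=8)
print(f"sequential passes: {seq_passes}, parallel passes: {par_passes}")
```

In this toy setup both decoders produce the same token sequence. With a real model, a parallel pass generally costs more than a single-token pass and its predictions can differ, so measured speedups are smaller than the raw pass-count ratio suggests.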

What is the official website of Wujie-Emu3.5?

- Project website: https://zh.emu.world

- GitHub repository: https://github.com/baaivision/emu3.5

- Hugging Face model collection: https://huggingface.co/collections/BAAI/emu35

- Technical report: https://zh.emu.world/Emu35_tech_report.pdf

Who is Wujie-Emu3.5 suitable for?

- Content creators: Advertising designers, film and TV producers, game developers, and others can use the model's multimodal generation capabilities to create high-quality text-image and video content.

- Educators: Teachers, trainers, and others can enrich their teaching with generated illustrated stories and step-by-step tutorials.

- Scientific and technical researchers: Researchers working in the fields of artificial intelligence, robotics, and virtual reality can leverage the model's native multimodal fusion and world modeling capabilities to drive technological innovation.

- Companies and brands: Enterprises that need efficient content production, targeted marketing, and user experience optimization can use the model to generate creative content that strengthens brand image and market competitiveness.

- Developers and engineers: Developers who want to build and deploy multimodal applications efficiently can extend the open-source model to cover their own application scenarios.

- Students and learners: Students interested in multimodal learning, artificial intelligence, etc. can understand complex concepts and knowledge more intuitively through model-generated learning materials.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
