EchoMimic: Audio-driven talking videos from portrait photos (EchoMimicV2 accelerated installer)

General Introduction

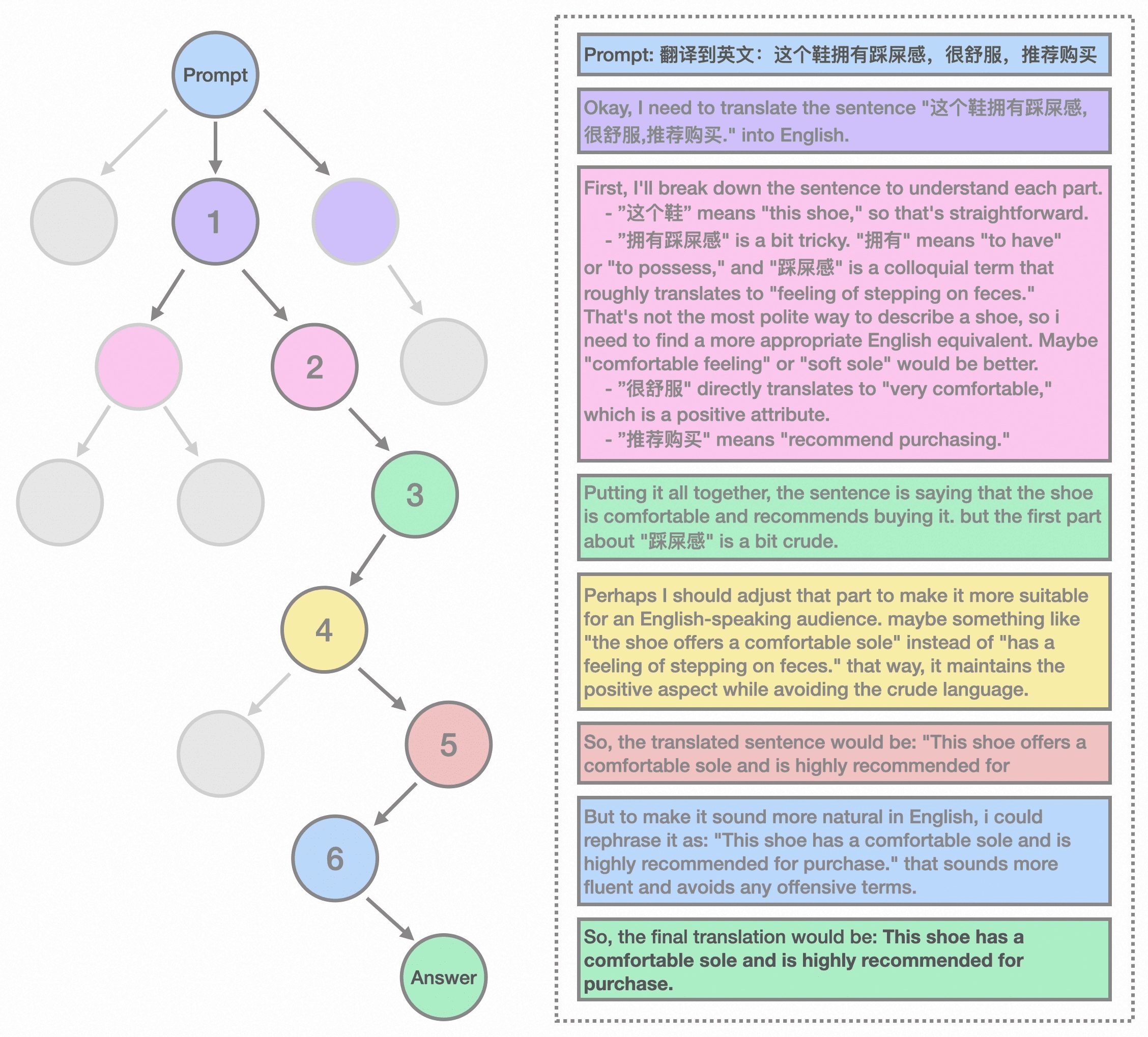

EchoMimic is an open-source project that generates lifelike portrait animations driven by audio. Developed by Ant Group's Terminal Technology division, it uses editable landmark conditions, combining audio with facial landmarks, to generate dynamic portrait videos. The authors compared EchoMimic with other methods across multiple public and in-house datasets and report superior performance in both quantitative and qualitative evaluations.

EchoMimicV2 speeds up inference and adds gesture motion; it is the recommended version.

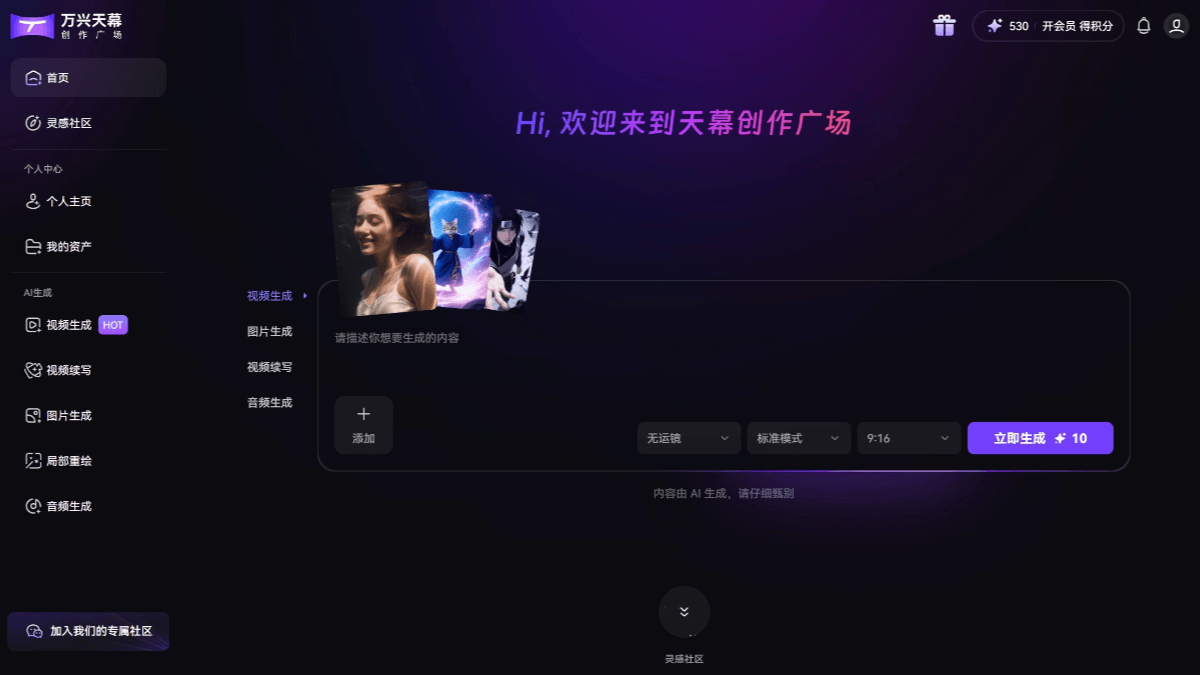

Demo: https://www.modelscope.cn/studios/BadToBest/BadToBest

V2 demo: https://huggingface.co/spaces/fffiloni/echomimic-v2

Function List

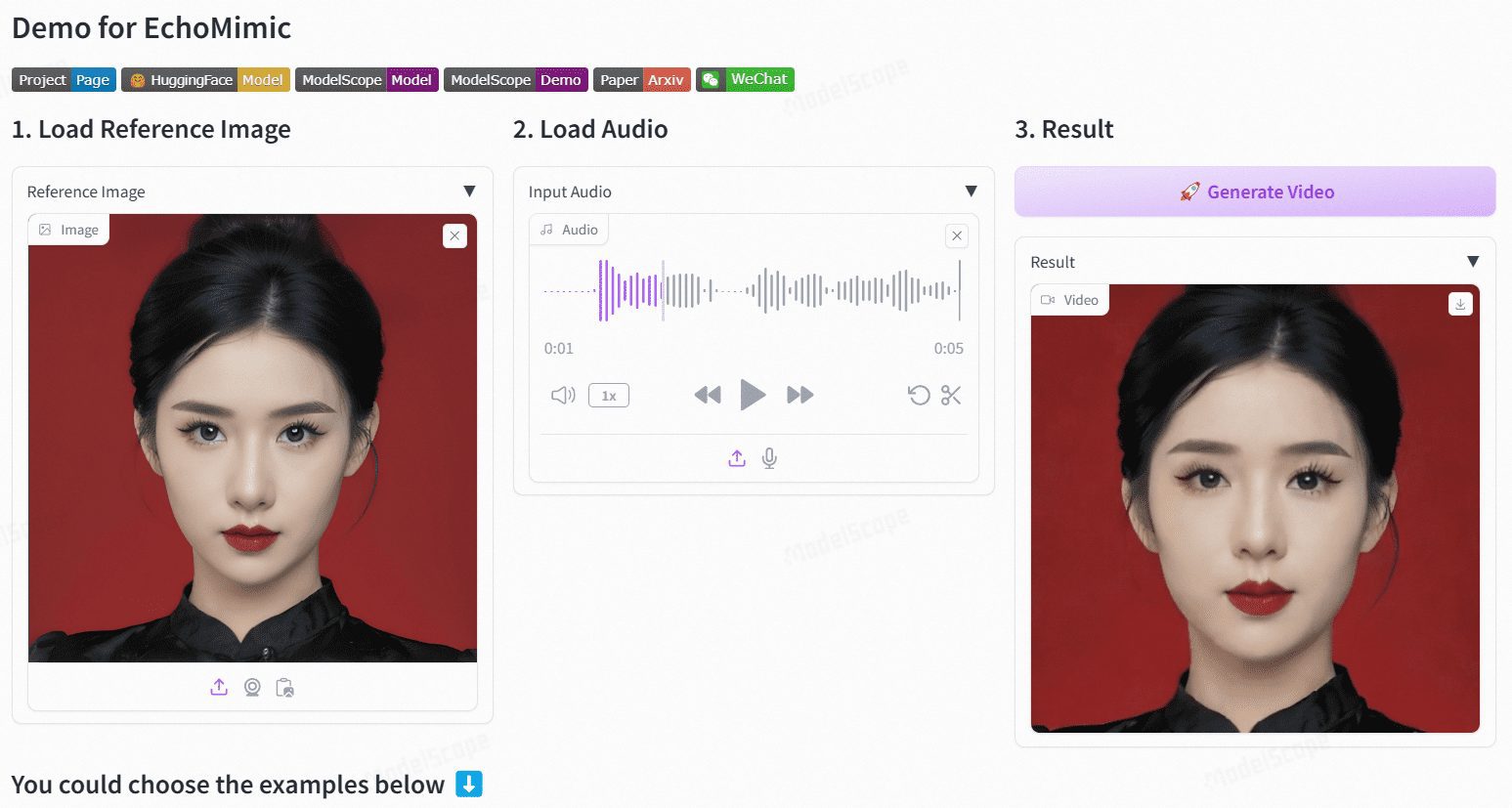

- Audio-driven animation: Generate realistic portrait animations from audio input.

- Landmark-driven animation: Generate stable portrait animations from facial landmarks.

- Audio + landmark-driven animation: Combine audio with selected facial landmarks to generate more natural portrait animations.

- Multi-language support: Accepts audio input in Chinese, English, and other languages.

- Efficient inference: Optimized models and pipelines significantly improve inference speed.

How to Use

Installation process

- Download the code:

`git clone https://github.com/BadToBest/EchoMimic`

`cd EchoMimic`

- Set up the Python environment:

- It is recommended to use conda to create a virtual environment:

`conda create -n echomimic python=3.8`

`conda activate echomimic`

- Install the dependency packages:

`pip install -r requirements.txt`


- Download and unzip ffmpeg-static:

- Download ffmpeg-static, unzip it, and set the environment variable:

`export FFMPEG_PATH=/path/to/ffmpeg-4.4-amd64-static`


- Download the pre-trained weights:

- Download the pre-trained model weights as described in the project's README.
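The `FFMPEG_PATH` step only helps if the ffmpeg build is actually found at run time. Below is a minimal sketch of a check a launcher script could run before inference, assuming the tools locate ffmpeg through `PATH`; the helper name `ensure_ffmpeg_on_path` is hypothetical and the project's own scripts may handle this differently.

```python
import os
import shutil


def ensure_ffmpeg_on_path(env=None):
    """Prepend the directory named by FFMPEG_PATH to PATH, if not already present.

    Hypothetical helper. `env` defaults to os.environ; a plain dict can be
    passed for testing. Returns the resulting PATH string, or None when
    FFMPEG_PATH is unset.
    """
    env = os.environ if env is None else env
    ffmpeg_dir = env.get("FFMPEG_PATH")
    if not ffmpeg_dir:
        return None
    path = env.get("PATH", "")
    entries = path.split(os.pathsep) if path else []
    if ffmpeg_dir not in entries:
        # Put the static ffmpeg build first so it takes precedence over a system ffmpeg.
        entries.insert(0, ffmpeg_dir)
        env["PATH"] = os.pathsep.join(entries)
    return env["PATH"]


if __name__ == "__main__":
    new_path = ensure_ffmpeg_on_path()
    if new_path is None:
        print("FFMPEG_PATH is not set; export it as described above.")
    else:
        # shutil.which searches the given PATH string for an executable named "ffmpeg".
        print("ffmpeg resolved to:", shutil.which("ffmpeg", path=new_path))
```

The helper is idempotent, so calling it from several entry points does not keep growing `PATH`.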

Usage Process

- Run the web interface:

- Launch the web interface:

`python webgui.py`

- Open the local server address in a browser to view the interface, then upload an audio file to generate an animation.


- Command-line inference:

- Use the following command for audio-driven portrait animation:

`python infer_audio2vid.py --audio_path /path/to/audio --output_path /path/to/output`

- Inference combined with facial landmarks (audio + landmarks):

`python infer_audio2vid_pose.py --audio_path /path/to/audio --landmark_path /path/to/landmark --output_path /path/to/output`


- Model acceleration:

- The optimized model and pipeline significantly improve inference speed: on a V100 GPU, generating 240 frames drops from about 7 minutes to about 50 seconds.
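The speed-up figure is easier to interpret as a per-frame cost. A small worked calculation, assuming the benchmark means 240 generated frames (a frame count, not a frame rate) taking about 7 minutes before and about 50 seconds after optimization on a V100; the function names are illustrative only.

```python
FRAMES = 240        # generated frames in the reported benchmark
BEFORE_S = 7 * 60   # about 7 minutes before optimization (V100)
AFTER_S = 50        # about 50 seconds after optimization (V100)


def seconds_per_frame(total_s, frames):
    """Average wall-clock time spent generating one frame."""
    return total_s / frames


def frames_per_second(total_s, frames):
    """Generation throughput in frames per second."""
    return frames / total_s


def speedup(before_s, after_s):
    """How many times faster the optimized pipeline is."""
    return before_s / after_s


if __name__ == "__main__":
    print(f"before: {seconds_per_frame(BEFORE_S, FRAMES):.2f} s/frame")           # 1.75
    print(f"after:  {seconds_per_frame(AFTER_S, FRAMES):.3f} s/frame")            # 0.208
    print(f"after throughput: {frames_per_second(AFTER_S, FRAMES):.1f} frames/s") # 4.8
    print(f"speedup: {speedup(BEFORE_S, AFTER_S):.1f}x")                          # 8.4
```

Even after acceleration, generation runs at roughly 4.8 frames per second, so it is far from real time; the gain is an approximately 8.4x reduction in wall-clock time.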

Caveats

- Make sure your Python and CUDA versions match the project's requirements.

- If you run into problems, consult the project's README or open an issue on GitHub.
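The first caveat (matching Python and CUDA versions) can be checked from inside the activated conda environment. A minimal sketch, assuming the project's dependencies include PyTorch; the function names are hypothetical, and the required CUDA version itself still has to be compared by hand against the README.

```python
import sys

# The conda step creates the environment with python=3.8.
RECOMMENDED_PYTHON = (3, 8)


def python_matches(version_info=sys.version_info, expected=RECOMMENDED_PYTHON):
    """Return True if major.minor of `version_info` equals `expected`."""
    return tuple(version_info[:2]) == tuple(expected)


def cuda_report():
    """Describe the PyTorch/CUDA setup, or say that PyTorch is missing.

    Assumes PyTorch is among the project's dependencies; torch.version.cuda
    is None for CPU-only builds.
    """
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed in this environment"
    return (
        f"torch {torch.__version__}, built for CUDA {torch.version.cuda}, "
        f"GPU available: {torch.cuda.is_available()}"
    )


if __name__ == "__main__":
    ok = python_matches()
    print(f"Python {sys.version_info.major}.{sys.version_info.minor}",
          "(matches 3.8)" if ok else "(differs from the recommended 3.8)")
    print(cuda_report())
```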

Windows One-Click Installer

Link: https://pan.quark.cn/s/cc973b142d41

Extraction code: 5T57

EchoMimicV2 Accelerated Download

Quark: https://pan.quark.cn/s/12acd147a758

Baidu: https://pan.baidu.com/s/1z8tiuGtN29luQ7Cg2zHJ8Q?pwd=9e8x

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
