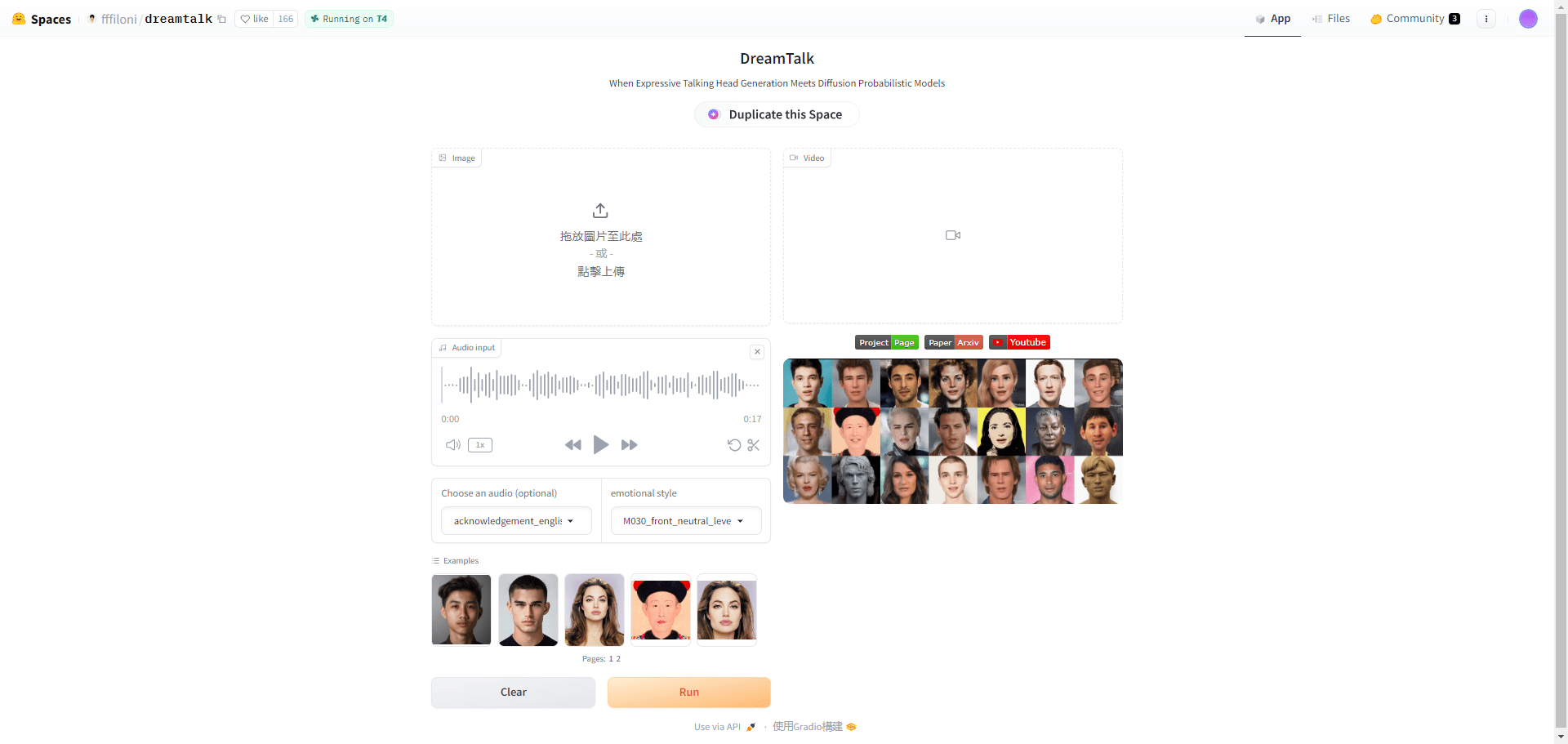

DreamTalk: Generate expressive talking videos from a single avatar image!

DreamTalk General Introduction

DreamTalk is a diffusion-based framework for expressive talking head generation, jointly developed by Tsinghua University, Alibaba Group, and Huazhong University of Science and Technology. It consists of three main components: a denoising network, a style-aware lip expert, and a style predictor. Driven by audio input, it generates diverse and realistic talking heads. The framework handles both multilingual and noisy audio, and produces high-quality facial motion with accurate lip synchronization.

DreamTalk Feature List

Generates realistic talking head videos from audio

Supports voice input in multiple languages

Supports multiple speaking styles and expressions in the output

Supports custom character avatars and style references

Provides an online demo and downloadable code

DreamTalk Help

Visit the project homepage for more information and demo videos

Read the paper for technical details and experimental results

Visit the GitHub repository to download the code and pre-trained models

Follow the installation guide to configure the environment and dependencies

Run inference_for_demo_video.py to perform inference and generate the video

Adjust the input and output options according to the parameter descriptions
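
The last two steps can be sketched as a small Python wrapper that assembles the command line for inference_for_demo_video.py. This is a minimal sketch, not the project's own tooling: the flag names (`--wav_path`, `--style_clip_path`, `--pose_path`, `--image_path`, `--cfg_scale`, `--max_gen_len`, `--output_name`) are assumptions and should be checked against the repository's parameter descriptions, and all file paths are hypothetical placeholders.

```python
import subprocess
import sys


def build_command(wav_path, style_clip_path, pose_path, image_path,
                  output_name, cfg_scale=1.0, max_gen_len=30):
    """Assemble the argument list for inference_for_demo_video.py.

    All flag names below are ASSUMPTIONS; verify them against the
    parameter descriptions in the repository before running.
    """
    return [
        sys.executable, "inference_for_demo_video.py",
        "--wav_path", str(wav_path),                # driving audio
        "--style_clip_path", str(style_clip_path),  # speaking-style reference
        "--pose_path", str(pose_path),              # head-pose reference
        "--image_path", str(image_path),            # avatar image
        "--cfg_scale", str(cfg_scale),              # assumed style-strength option
        "--max_gen_len", str(max_gen_len),          # assumed length limit option
        "--output_name", str(output_name),          # name of the output video
    ]


def run_inference(cmd, repo_dir):
    """Run the assembled command from inside a checkout of the repository."""
    subprocess.run(cmd, cwd=repo_dir, check=True)


if __name__ == "__main__":
    # Hypothetical input files; replace with files that exist locally.
    cmd = build_command(
        wav_path="my_audio.wav",
        style_clip_path="my_style.mat",
        pose_path="my_pose.mat",
        image_path="my_avatar.png",
        output_name="my_first_video",
    )
    # Print the command for review instead of running it right away.
    print(" ".join(cmd))
```

Once the flags have been confirmed, call `run_inference(cmd, repo_dir)` with the path of the cloned repository to generate the video.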

DreamTalk Online Demo Address

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
