dots.vlm1 - An open-source large multimodal model from Xiaohongshu (RedNote) hi lab

What is dots.vlm1?

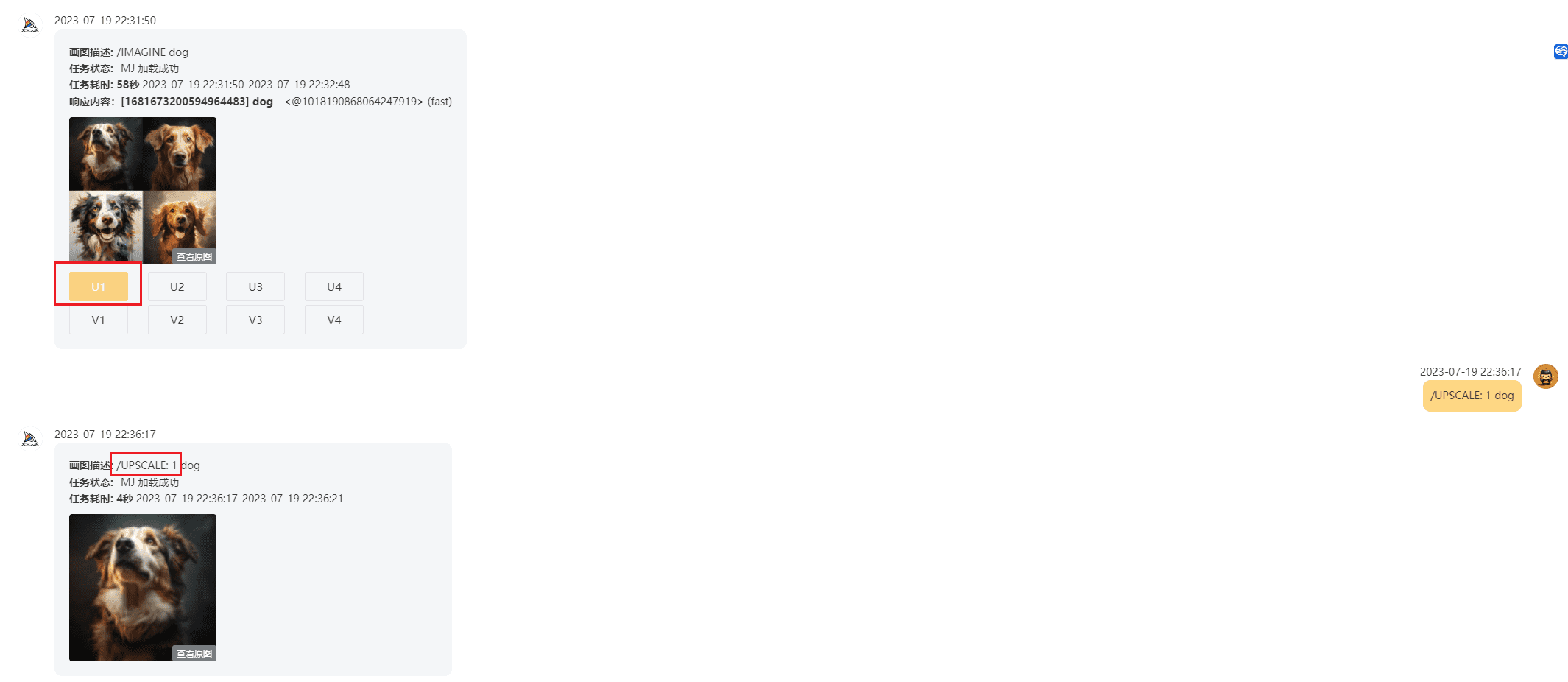

dots.vlm1 is the first large multimodal model open-sourced by Xiaohongshu (RedNote) hi lab. It pairs NaViT, a 1.2-billion-parameter vision encoder trained from scratch, with the DeepSeek V3 large language model (LLM), which gives it both strong visual perception and strong text reasoning. The model performs well on visual understanding and reasoning tasks, approaching the level of closed-source state-of-the-art (SOTA) models, while remaining competitive on text-only tasks. NaViT is trained entirely from scratch, natively supports dynamic resolution, and adds pure visual supervision on top of text supervision to strengthen perception. On the data side, the team introduces several synthetic-data strategies to cover diverse image types and their descriptions, improving overall data quality.
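The description above says NaViT natively supports dynamic resolution, but the article does not explain how variable-sized images are batched. The sketch below is a minimal illustration, assuming a hypothetical 14-pixel patch size and borrowing the sequence-packing ("Patch n' Pack") idea from the original NaViT paper by Google; it is not dots.vlm1's code. It shows that the number of visual tokens grows with resolution and aspect ratio, and how several images can share one fixed-length sequence, with per-token segment IDs keeping attention inside each image.

```python
import math

PATCH = 14  # hypothetical patch size; the article does not state dots.vlm1's value


def patch_grid(height: int, width: int, patch: int = PATCH) -> tuple[int, int]:
    """Rows x cols of patches for an image kept near native size (edges padded up)."""
    return math.ceil(height / patch), math.ceil(width / patch)


def pack_images(sizes: list[tuple[int, int]], max_len: int) -> list[list[int]]:
    """Greedy first-fit packing of variable-length patch sequences into packs of <= max_len tokens."""
    packs: list[list[int]] = []  # image indices in each packed sequence
    used: list[int] = []         # tokens already used in each packed sequence
    for idx, (h, w) in enumerate(sizes):
        rows, cols = patch_grid(h, w)
        n = rows * cols
        if n > max_len:
            raise ValueError(f"image {idx} needs {n} patches > max_len={max_len}")
        for p in range(len(packs)):
            if used[p] + n <= max_len:  # first pack with enough room wins
                packs[p].append(idx)
                used[p] += n
                break
        else:  # no existing pack fits: open a new one
            packs.append([idx])
            used.append(n)
    return packs


def segment_ids(sizes: list[tuple[int, int]], pack: list[int]) -> list[int]:
    """Per-token image id inside one pack; attention would be allowed only where ids match."""
    ids: list[int] = []
    for idx in pack:
        rows, cols = patch_grid(*sizes[idx])
        ids.extend([idx] * (rows * cols))
    return ids


sizes = [(224, 224), (448, 224), (140, 700)]
print([patch_grid(h, w) for h, w in sizes])  # [(16, 16), (32, 16), (10, 50)]
print(pack_images(sizes, max_len=1024))      # [[0, 1], [2]]
```

The segment IDs would be expanded into a block-diagonal attention mask so that patches from different images in the same pack never attend to each other.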

Main features of dots.vlm1

- Strong visual understanding: Accurately recognizes and understands image content, including complex charts, tables, documents, and graphs, and supports dynamic resolution for a wide range of visual tasks.

- Efficient text generation and reasoning: Built on the DeepSeek V3 LLM, it generates high-quality text descriptions and performs well on text reasoning tasks such as math and code.

- Multimodal data processing: It handles interleaved image-text input and combines visual and textual information for joint reasoning, which suits multimodal application scenarios.

- Flexible adaptation and extension: An MLP adapter connects the vision encoder to the language model, allowing flexible adaptation and extension across different tasks.

- Open source: The complete code and models are released to support developers' research and application development and to advance multimodal technology.

Project links for dots.vlm1

- GitHub repository: https://github.com/rednote-hilab/dots.vlm1

- Hugging Face model: https://huggingface.co/rednote-hilab/dots.vlm1.inst

- Online demo: https://huggingface.co/spaces/rednote-hilab/dots-vlm1-demo

Technical principles of dots.vlm1

- NaViT vision encoder: dots.vlm1 uses NaViT, a 1.2-billion-parameter vision encoder trained from scratch rather than fine-tuned from an existing mature model. It natively supports dynamic resolution, so it can process image inputs at different resolutions, and its training adds pure visual supervision on top of text supervision to improve the model's image perception.

- Multimodal training data: The model is trained on diverse multimodal data covering ordinary images, complex charts, tables, documents, and graphs, paired with corresponding text descriptions (e.g., alt text, dense captions, and grounding annotations). The team also introduces synthetic-data strategies and interleaved image-text data such as web pages and PDFs, rewriting and cleaning them to raise data quality and strengthen the model's multimodal understanding.

- Vision-language fusion: dots.vlm1 connects the vision encoder to the DeepSeek V3 LLM through a lightweight MLP adapter, fusing visual and linguistic information to support multimodal tasks.

- Three-stage training: Training proceeds in three stages: vision encoder pre-training, VLM pre-training, and VLM post-training. Gradually increasing the image resolution and introducing diverse training data strengthen the model's generalization and its ability to handle multimodal tasks.
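Interleaved image-text data such as web pages and PDFs has to be flattened into a single sequence before the LLM can consume it. One common approach, sketched here under stated assumptions, reserves a run of placeholder tokens for each image, sized to its visual token count, and records where that run sits so the adapter's outputs can be written into those positions. The marker strings `<img_start>`, `<img_pad>`, and `<img_end>`, the 14-pixel patch size, and the whitespace "tokenizer" are hypothetical stand-ins, not dots.vlm1's actual vocabulary or preprocessing.

```python
import math
from dataclasses import dataclass

PATCH = 14  # hypothetical patch size (not given in the article)


@dataclass
class Image:
    height: int
    width: int


def visual_token_count(img: Image, patch: int = PATCH) -> int:
    """Patches needed for an image kept near native resolution."""
    return math.ceil(img.height / patch) * math.ceil(img.width / patch)


def build_sequence(doc: list) -> tuple[list[str], list[tuple[int, int]]]:
    """Flatten an interleaved document into tokens plus [start, end) spans for image features.

    Text items are split on whitespace as a stand-in for a real tokenizer.
    """
    tokens: list[str] = []
    spans: list[tuple[int, int]] = []
    for item in doc:
        if isinstance(item, Image):
            tokens.append("<img_start>")
            start = len(tokens)
            tokens.extend(["<img_pad>"] * visual_token_count(item))
            spans.append((start, len(tokens)))  # adapter outputs would overwrite these rows
            tokens.append("<img_end>")
        else:
            tokens.extend(item.split())
    return tokens, spans


doc = ["Quarterly revenue chart:", Image(28, 42), "Revenue rose in Q3."]
tokens, spans = build_sequence(doc)
print(spans)        # [(4, 10)]
print(len(tokens))  # 15
```

Recording explicit spans keeps text and images in their original reading order, which is what lets the model reason over a chart and the sentences around it together.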

Core strengths of dots.vlm1

- Vision encoder trained from scratch: NaViT is trained entirely from scratch, with native dynamic-resolution support and pure visual supervision that raise the ceiling on visual perception.

- Multimodal data innovation: A range of synthetic-data strategies covers diverse image types and their descriptions, and a large multimodal model is used to rewrite web-page data, significantly improving training-data quality.

- Near-SOTA performance: It approaches closed-source SOTA models in visual perception and reasoning, setting a new high for open-source vision-language models.

- Strong text capabilities: It performs well on text reasoning, with some math and coding ability, while remaining competitive on text-only tasks.

- Flexible architecture: The MLP adapter connects the vision encoder to the language model, allowing flexible adaptation and extension across different tasks.

Who dots.vlm1 is for

- AI researchers: Those interested in large multimodal models who want to explore their applications and improvements in vision and language processing.

- Developers and engineers: Those who need to integrate multimodal capabilities such as image recognition, text generation, and visual reasoning into their projects.

- Educators: The model can supplement instruction and help students understand and analyze complex charts, documents, and other content.

- Content creators: Those who need to produce high-quality image-and-text content, or to make content recommendations and personalized creations.

- Business users: In scenarios that require processing multimodal data, such as intelligent customer service, content recommendation, and data analytics, the model can improve efficiency and effectiveness.

© Copyright notes

Article copyright © AI Sharing Circle. Please do not reproduce without permission.
