Dolphin: Speech Recognition and Language Identification for Asian Languages

General Introduction

Dolphin is an open-source model developed by DataoceanAI in collaboration with Tsinghua University, focusing on speech recognition and language identification for Asian languages. It supports 40 languages from East Asia, South Asia, Southeast Asia, and the Middle East, as well as 22 Chinese dialects. The model is trained on over 210,000 hours of audio data, combining proprietary and publicly available datasets. Dolphin can convert speech to text, detect which parts of the audio contain speech (voice activity detection, VAD), segment audio, and identify the spoken language (LID). It has a simple design, and the code and some of the models are freely available on GitHub for developers.

Function List

- Supports speech-to-text in 40 Asian languages and 22 Chinese dialects.

- Provides Voice Activity Detection (VAD) to find speech segments in the audio.

- Supports audio segmentation, cutting long audio into short segments for processing.

- Implements Language Identification (LID) to determine the language or dialect of the audio.

- Provides open-source code and models that users can modify and customize.

- Two models, base and small, are available to suit different needs.

- Uses a two-level tagging system that distinguishes between languages and regions (e.g. <zh><CN>).

User Guide

The installation and usage process of Dolphin is simple and suitable for users with basic programming skills. Below are the detailed steps.

Installation process

- Preparing the environment

Requires Python 3.8 or above, and FFmpeg to process the audio.

  - Checking Python: In the terminal, type the following and confirm the version:

    python --version

    If Python is not installed, download it from python.org.

  - Installing FFmpeg: Run the command for your system:

    - Ubuntu/Debian:

      sudo apt update && sudo apt install ffmpeg

    - macOS:

      brew install ffmpeg

    - Windows:

      choco install ffmpeg

    If no package manager is installed, download FFmpeg from the FFmpeg website.


- Installing Dolphin

There are two ways:

  - Install with pip

    Enter the following in the terminal:

    pip install -U dataoceanai-dolphin

    This will install the latest stable version.

  - Install from source code

    To use the latest development version, get it from GitHub:

    - Clone the repository:

      git clone https://github.com/DataoceanAI/Dolphin.git

    - Go to the directory:

      cd Dolphin

    - Install:

      pip install .


- Download model

Dolphin has 4 models; currently base (140M parameters) and small (372M parameters) are available for free download.

  - Get the model files from Hugging Face.

  - Save them to a specified path, e.g. /data/models/dolphin/.

  - The base model is faster and the small model is more accurate.
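Before moving on, it can help to confirm that the setup steps above took effect. The following is a minimal sketch, not part of Dolphin itself: the function name `check_environment` is hypothetical, the model path is the example path from this article, and the check for a `dolphin` executable assumes the pip package places that command on the PATH, as the usage section suggests.

```python
import shutil
import sys
from pathlib import Path


def check_environment(model_dir="/data/models/dolphin/", min_python=(3, 8)):
    """Return simple pass/fail checks for the installation steps (hypothetical helper)."""
    return {
        # The article asks for Python 3.8 or above.
        "python_ok": sys.version_info[:2] >= min_python,
        # FFmpeg must be discoverable on PATH to process audio.
        "ffmpeg_found": shutil.which("ffmpeg") is not None,
        # Assumption: installing the package exposes a `dolphin` command.
        "dolphin_cli_found": shutil.which("dolphin") is not None,
        # Example path from the article; adjust to where the models were saved.
        "model_dir_exists": Path(model_dir).is_dir(),
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name}: {'OK' if ok else 'MISSING'}")
```

Any entry reported as MISSING points back to the corresponding step above.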

Usage

Command line and Python operations are supported.

Command-line operation

- Speech-to-text

Prepare the audio file (e.g. audio.wav) and enter:

dolphin audio.wav

The system automatically downloads the default model and outputs the text. The audio should be in WAV format and can be converted with FFmpeg:

ffmpeg -i input.mp3 output.wav

- Specifying models and paths

Use the small model:

dolphin audio.wav --model small --model_dir /data/models/dolphin/

- Specify language and region

Recognize Mandarin Chinese using the two-level tags:

dolphin audio.wav --model small --model_dir /data/models/dolphin/ --lang_sym "zh" --region_sym "CN"

lang_sym is the language code, such as "zh" (Chinese). region_sym is the region code, e.g. "CN" (Mainland China).

For a complete list of languages, see languages.md.

- Padding short audio

When the audio is shorter than 30 seconds, add --padding_speech true to pad it:

dolphin audio.wav --model small --model_dir /data/models/dolphin/ --lang_sym "zh" --region_sym "CN" --padding_speech true
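The command-line steps above can also be driven from a script, for example when processing many files. The sketch below only assembles the commands shown in this section and uses no flags beyond them. The helper names (`build_ffmpeg_cmd`, `build_dolphin_cmd`, `transcribe`) are hypothetical, and the assumption that the recognized text arrives on standard output has not been verified against the tool.

```python
import subprocess


def build_ffmpeg_cmd(src, dst):
    """Command that converts an audio file to WAV, as shown in the article."""
    return ["ffmpeg", "-i", src, dst]


def build_dolphin_cmd(audio, model=None, model_dir=None,
                      lang_sym=None, region_sym=None, padding_speech=False):
    """Assemble a `dolphin` command using only the flags shown above."""
    cmd = ["dolphin", audio]
    if model:
        cmd += ["--model", model]
    if model_dir:
        cmd += ["--model_dir", model_dir]
    if lang_sym:
        cmd += ["--lang_sym", lang_sym]
    if region_sym:
        cmd += ["--region_sym", region_sym]
    if padding_speech:
        cmd += ["--padding_speech", "true"]
    return cmd


def transcribe(audio, **options):
    """Run the CLI and return its stdout (assumption: the text is printed there)."""
    completed = subprocess.run(build_dolphin_cmd(audio, **options),
                               capture_output=True, text=True, check=True)
    return completed.stdout
```

Passing the arguments as a list, rather than a single shell string, avoids quoting problems with file names that contain spaces.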

Python operation

- Loading audio and models

Run in Python:

import dolphin

waveform = dolphin.load_audio("audio.wav")  # load the audio

model = dolphin.load_model("small", "/data/models/dolphin/", "cuda")  # load the model

"cuda" uses the GPU; without a GPU, change it to "cpu".

- Running recognition

Process the audio and print the result:

result = model(waveform)  # convert speech to text

print(result.text)  # display the result

- Specifying language and region

Add parameters:

result = model(waveform, lang_sym="zh", region_sym="CN")

print(result.text)
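The Python steps above can be wrapped in a small helper that picks the device automatically instead of hard-coding "cuda". This is a sketch under stated assumptions: `pick_device` and `transcribe_file` are hypothetical names, and the device check assumes PyTorch is installed in the same environment. The `dolphin` calls are exactly those shown above.

```python
def pick_device():
    """Return "cuda" if PyTorch reports a usable GPU, otherwise "cpu"."""
    try:
        import torch  # assumption: PyTorch is installed alongside Dolphin
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


def transcribe_file(path, model_name="small", model_dir="/data/models/dolphin/",
                    lang_sym=None, region_sym=None):
    """Load audio and model as in the article, then return the recognized text."""
    import dolphin  # imported lazily so pick_device() works without it

    waveform = dolphin.load_audio(path)
    model = dolphin.load_model(model_name, model_dir, pick_device())
    if lang_sym and region_sym:
        # Language and region are passed together, as in the example above.
        result = model(waveform, lang_sym=lang_sym, region_sym=region_sym)
    else:
        result = model(waveform)
    return result.text
```

When transcribing many files, it would be more efficient to load the model once and reuse it rather than reloading it on every call as this sketch does.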

Featured Function Operation

- Voice Activity Detection (VAD)

Automatically detects speech segments and labels them with time, for example:0.0-2.5s: 你好 3.0-4.5s: 今天天气很好 - Language Identification (LID)

Determine the audio language, for example:dolphin audio.wav --model small --model_dir /data/models/dolphin/output as

<zh>(Chinese) or<ja>(Japanese). - bilingual markup

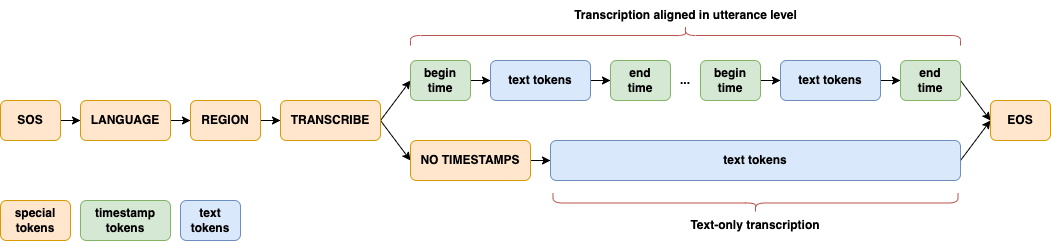

Distinguish between languages and regions with two-level markers, such as<zh><CN>(Mandarin Chinese),<zh><TW>(Taiwanese Mandarin) to improve Asian language processing skills. - model architecture

The CTC-Attention architecture, with E-Branchformer for encoder and Transformer for decoder, is optimized for Asian languages.
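For downstream processing, the tags and timestamped segments described above can be turned into structured values. The sketch below parses the formats exactly as they are written in this article (`<zh><CN>` and `0.0-2.5s: text`). Whether Dolphin emits precisely these strings is an assumption, and the names `parse_tags` and `parse_segment` are hypothetical.

```python
import re

# Two adjacent angle-bracket tags: language first, region second.
TAG_PAIR = re.compile(r"<([A-Za-z0-9_-]+)><([A-Za-z0-9_-]+)>")
# A line such as "0.0-2.5s: some text" (format taken from the article's example).
SEGMENT = re.compile(r"^\s*(\d+(?:\.\d+)?)-(\d+(?:\.\d+)?)s:\s*(.*)$")


def parse_tags(text):
    """Return (language, region) from the first two-level tag, or None."""
    match = TAG_PAIR.search(text)
    return (match.group(1), match.group(2)) if match else None


def parse_segment(line):
    """Return (start_seconds, end_seconds, text) for one segment line, or None."""
    match = SEGMENT.match(line)
    if match is None:
        return None
    return float(match.group(1)), float(match.group(2)), match.group(3)


if __name__ == "__main__":
    print(parse_tags("<zh><CN>"))
    print(parse_segment("0.0-2.5s: 你好"))
```

Returning None for unrecognized input lets the caller decide how to handle lines that do not follow the assumed format.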

Application scenarios

- Meeting transcription

Converts recordings of multilingual Asian meetings into text, suitable for international or local meetings.

- Dialect research

Analyzes the phonological features of 22 Chinese dialects and generates research data.

- Smart device development

Integrates into smart devices for voice control in Asian languages.

FAQ

- What languages are supported?

40 Asian languages and 22 Chinese dialects are supported; see languages.md.

- Is a GPU required?

No. The model runs on a CPU, but a GPU (with CUDA support) is faster.

- What is the difference between the base and small models?

The base model is smaller (140M parameters), with an error rate of 33.3%; the small model is larger (372M parameters), with an error rate of 25.2%.

© Copyright notes

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
