DiffPortrait360: Generate 360-degree head views from a single portrait

General Introduction

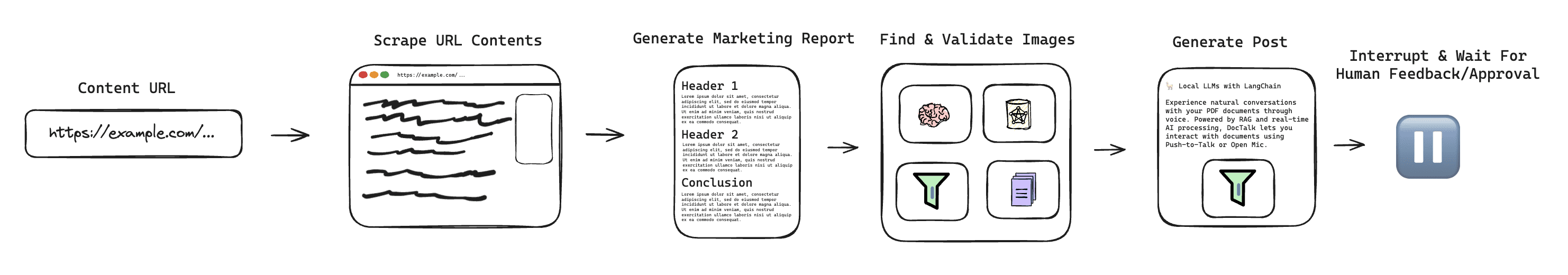

DiffPortrait360 is the open-source project accompanying the CVPR 2025 paper "DiffPortrait360: Consistent Portrait Diffusion for 360 View Synthesis". It generates consistent 360-degree head views from a single portrait photo. It supports real humans, stylized images, and anthropomorphic characters, and preserves details such as glasses and hats. The project is built on a latent diffusion model (LDM), combined with ControlNet and a Dual Appearance module, to generate high-quality Neural Radiance Fields (NeRFs) that support real-time free-view rendering. It suits immersive telepresence and personalized content creation, and has already drawn attention from academia and the developer community.

Function List

- Generate a 360-degree head view from a single portrait photo.

- Supports real humans, stylized images, and anthropomorphic characters.

- Uses ControlNet to generate back-of-head details, keeping rear views realistic.

- Outputs high-quality NeRF models that support free-view rendering.

- Keeps front and rear views consistent with the Dual Appearance module.

- Provides open-source inference code and pre-trained models that developers can use and modify.

- Provides test data collected from the Internet, including Pexels images and 1,000 real portraits.

Usage Guide

DiffPortrait360 is aimed at developers and researchers and requires some technical background. Detailed installation and usage instructions follow.

Installation process

- Prepare the hardware and operating system

You will need an NVIDIA GPU with CUDA support and at least 30GB of GPU memory (to generate 32 frames of video); 80GB is recommended (e.g. A6000). The operating system must be Linux.

- Check the CUDA version; 12.2 is recommended:

nvcc --version
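To script this check, the text printed by nvcc --version can be parsed. The Python sketch below assumes nvcc prints a line such as "Cuda compilation tools, release 12.2, V12.2.140"; the function names are hypothetical helpers, not part of DiffPortrait360.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

# The article recommends CUDA 12.2; treated here as a soft target, not a hard requirement.
RECOMMENDED_CUDA = (12, 2)


def parse_nvcc_release(output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from nvcc text such as 'release 12.2, V12.2.140'."""
    match = re.search(r"release (\d+)\.(\d+)", output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def local_cuda_version() -> Optional[Tuple[int, int]]:
    """Run nvcc when it is on PATH; return None if it is missing or unparsable."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True, check=False)
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    version = local_cuda_version()
    if version is None:
        print("nvcc not found or its output was not recognized")
    elif version != RECOMMENDED_CUDA:
        print(f"CUDA {version[0]}.{version[1]} found; 12.2 is recommended")
    else:
        print("CUDA 12.2 found")
```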


- Create the environment

Create a Python 3.9 environment with Conda:

conda create -n diffportrait360 python=3.9

conda activate diffportrait360

- Clone the code

Download the project code locally:

git clone https://github.com/FreedomGu/DiffPortrait360.git

cd DiffPortrait360/diffportrait360_release

- Install dependencies

The project provides a requirements.txt; install it with:

pip install -r requirements.txt

- If you run into a dependency conflict, upgrade pip and try again:

pip install --upgrade pip
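After installing, it can help to confirm which requirements are still missing from the active environment. This is a minimal sketch using only the standard library's importlib.metadata; it handles simple "name==version" style lines and skips pip options and URL requirements, so treat it as a rough check rather than a full requirements parser.

```python
import re
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path
from typing import List


def requirement_names(path: str) -> List[str]:
    """Return bare package names from a pip requirements file (simple cases only)."""
    names = []
    for raw in Path(path).read_text().splitlines():
        line = raw.split("#", 1)[0].strip()
        # Skip blanks, pip options (-r, -e, --extra-index-url) and URL/VCS requirements.
        if not line or line.startswith("-") or "://" in line:
            continue
        # Cut at the first version specifier, extras bracket, or environment marker.
        name = re.split(r"[\s<>=!~;\[@]", line, maxsplit=1)[0]
        if name:
            names.append(name)
    return names


def missing_requirements(path: str) -> List[str]:
    """List requirement names that are not installed in the current environment."""
    missing = []
    for name in requirement_names(path):
        try:
            version(name)
        except PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    if Path("requirements.txt").exists():
        gaps = missing_requirements("requirements.txt")
        print("All requirements installed" if not gaps else f"Missing: {', '.join(gaps)}")
```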

- Download pre-trained model

Download the model from Hugging Face:

- Visit the HF link.

- Download PANO_HEAD_MODEL, Head_Back_MODEL and Diff360_MODEL.

- Place the models in a local path and modify the corresponding paths in inference.sh, for example:

PANO_HEAD_MODEL=/path/to/pano_head_model
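If you prefer to set the three model paths programmatically, a small helper can rewrite the assignment lines. This sketch assumes inference.sh assigns each path on its own line in the NAME=value form shown above; lines written as "export NAME=..." are not matched. set_shell_vars and missing_paths are hypothetical helpers, not part of the project.

```python
import re
from pathlib import Path
from typing import Dict, List

# Variable names taken from the article; the real script may define more.
MODEL_VARS = ("PANO_HEAD_MODEL", "Head_Back_MODEL", "Diff360_MODEL")


def set_shell_vars(script_text: str, values: Dict[str, str]) -> str:
    """Replace the value on each NAME=value line; raise KeyError if a name is never assigned."""
    for name, value in values.items():
        pattern = re.compile(rf"^([ \t]*{re.escape(name)}=).*$", re.MULTILINE)
        # A function replacement keeps re from treating backslashes in paths as escapes.
        script_text, count = pattern.subn(lambda m: m.group(1) + value, script_text)
        if count == 0:
            raise KeyError(f"{name} is not assigned in the script")
    return script_text


def missing_paths(values: Dict[str, str]) -> List[str]:
    """Return the variables whose target path does not exist on this machine."""
    return [name for name, path in values.items() if not Path(path).exists()]
```

Typical use: read inference.sh, pass a dict keyed by MODEL_VARS to set_shell_vars, confirm missing_paths returns an empty list, then write the text back.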

- Verify the environment

Check if the GPU is available:

python -c "import torch; print(torch.cuda.is_available())"

An output of True means the environment is working.
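The one-liner above only confirms that CUDA is visible. The sketch below also reports each GPU's memory so it can be compared with the 30GB minimum mentioned earlier. It uses standard PyTorch calls (torch.cuda.device_count, torch.cuda.get_device_properties) and returns an empty report when torch is not installed; memory is measured in GiB, so cards are listed slightly below their marketed size.

```python
from typing import Any, Dict

# Minimum quoted in the article for generating 32 frames; compared against GiB below.
MIN_GPU_MEMORY_GB = 30


def gpu_report() -> Dict[str, Any]:
    """Summarize PyTorch and CUDA availability; works even if torch is not installed."""
    report: Dict[str, Any] = {"torch": None, "cuda": False, "cuda_version": None, "gpus": []}
    try:
        import torch
    except ImportError:
        return report
    report["torch"] = torch.__version__
    report["cuda_version"] = torch.version.cuda  # None for CPU-only builds
    report["cuda"] = torch.cuda.is_available()
    if report["cuda"]:
        for index in range(torch.cuda.device_count()):
            props = torch.cuda.get_device_properties(index)
            report["gpus"].append({"name": props.name, "memory_gb": props.total_memory / 1024**3})
    return report


def meets_memory_minimum(report: Dict[str, Any], minimum_gb: float = MIN_GPU_MEMORY_GB) -> bool:
    """True if at least one visible GPU has minimum_gb GiB of memory or more."""
    return any(gpu["memory_gb"] >= minimum_gb for gpu in report["gpus"])


if __name__ == "__main__":
    info = gpu_report()
    print(info)
    print("Enough GPU memory:", meets_memory_minimum(info))
```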

Using the main functions

Generate 360-degree head views

- Prepare the input data

- Prepare a frontal portrait photo (JPEG or PNG), ideally 512x512 or higher.

- Place the photo in the input_image/ folder (create the folder manually if it does not exist).

- Obtain dataset.json (camera information); refer to the PanoHead cropping guide to process your own photos.
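Because low-resolution inputs degrade results, it is worth checking image sizes before running inference. This standard-library sketch reads the width and height from each PNG's IHDR header without decoding the image; it covers PNG only, so JPEG files would need a library such as Pillow. The helper names are hypothetical.

```python
import struct
from pathlib import Path
from typing import List, Tuple

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
MIN_SIDE = 512  # resolution the article recommends


def png_size(path: str) -> Tuple[int, int]:
    """Read (width, height) from the PNG IHDR chunk without decoding pixel data."""
    with open(path, "rb") as handle:
        header = handle.read(24)
    # Layout: 8-byte signature, 4-byte chunk length, b"IHDR", 4-byte width, 4-byte height.
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a PNG file")
    width, height = struct.unpack(">II", header[16:24])
    return width, height


def undersized_pngs(folder: str, min_side: int = MIN_SIDE) -> List[str]:
    """List PNG files in folder whose shorter side is below min_side pixels."""
    small = []
    for path in sorted(Path(folder).glob("*.png")):
        width, height = png_size(str(path))
        if min(width, height) < min_side:
            small.append(path.name)
    return small


if __name__ == "__main__":
    if Path("input_image").is_dir():
        print("Below recommended resolution:", undersized_pngs("input_image"))
```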

- Run the inference script

- Go to the code directory (from the repository root):

cd diffportrait360_release/code

- Run inference:

bash inference.sh

- The output is saved to the specified folder (output/ by default).
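When inference is part of a larger pipeline, a thin Python wrapper around the same bash inference.sh call can run it and list what was produced. This sketch assumes the output/ folder is created relative to the code directory; run_inference is a hypothetical helper, not a project API.

```python
import subprocess
from pathlib import Path
from typing import List


def run_inference(code_dir: str, output_subdir: str = "output") -> List[str]:
    """Run `bash inference.sh` inside code_dir and return produced files relative to output_subdir."""
    code_path = Path(code_dir)
    # check=True raises CalledProcessError if the script exits with a non-zero status.
    subprocess.run(["bash", "inference.sh"], cwd=code_path, check=True)
    output_path = code_path / output_subdir
    if not output_path.is_dir():
        return []
    return sorted(str(p.relative_to(output_path)) for p in output_path.rglob("*") if p.is_file())
```

For example, run_inference("diffportrait360_release/code") from the repository root runs the script and returns the generated file names.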

- View Results

- The output consists of multi-angle view images and NeRF model files (.nerf format).

- Load the .nerf file with a NeRF rendering tool such as NeRFStudio and adjust the viewing angle to see the 360-degree effect.

Optimizing the back of the head with ControlNet

- In inference.sh, enable the back-of-head generation module by adding the parameter:

--use_controlnet

- With this enabled, back-of-head details are more realistic in complex scenes.

Inference on custom data

- Place your own photos in input_image/.

- Generate dataset.json and make sure the camera information is correct.

- Run:

bash inference.sh
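Incorrect or missing camera entries are an easy mistake with custom data. The sketch below cross-checks input_image/ against dataset.json under a loudly stated assumption: that the file follows the EG3D/PanoHead layout {"labels": [[filename, [25 numbers]], ...]}, with 16 flattened camera-to-world values plus 9 intrinsics. If DiffPortrait360's file differs (for example, keys with subfolder prefixes), adjust LABEL_LENGTH and the lookup accordingly. dataset_problems is a hypothetical helper.

```python
import json
from pathlib import Path
from typing import List

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}
LABEL_LENGTH = 25  # assumed: 16 flattened cam2world values + 9 intrinsics (EG3D/PanoHead style)


def dataset_problems(image_dir: str, dataset_json: str) -> List[str]:
    """Report images that lack a camera entry or whose entry has an unexpected length."""
    with open(dataset_json) as handle:
        # Assumed layout: {"labels": [[filename, [numbers...]], ...]}
        labels = dict(json.load(handle)["labels"])
    problems = []
    images = sorted(p.name for p in Path(image_dir).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES)
    for name in images:
        if name not in labels:
            problems.append(f"{name}: no camera entry")
        elif len(labels[name]) != LABEL_LENGTH:
            problems.append(f"{name}: expected {LABEL_LENGTH} values, got {len(labels[name])}")
    return problems


if __name__ == "__main__":
    if Path("input_image").is_dir() and Path("dataset.json").is_file():
        for problem in dataset_problems("input_image", "dataset.json"):
            print(problem)
```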

Caveats

- Insufficient GPU memory may cause inference to fail; a graphics card with plenty of memory is recommended.

- The project provides only inference code, not training code. Follow the GitHub repository for updates.

- Test data, including Pexels images and 1,000 real portraits, can be downloaded from Hugging Face.

Application scenarios

- Immersive teleconferencing

Users can generate a 360-degree head view from a single photo to make virtual meetings more realistic.

- Game character design

Developers generate 3D head models from concept art to speed up game development.

- Digital art creation

Artists use it to generate stylized avatars for NFTs or social media.

QA

- What are the minimum hardware requirements?

An NVIDIA GPU with CUDA support and at least 30GB of GPU memory; 80GB is recommended.

- Does it support low-resolution photos?

Not recommended. When the input resolution is below 512x512, details may be lost and results degrade.

- Can it generate video?

The current version generates static view sequences, which can be converted to video with an external tool; it does not output dynamic video directly.
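One way to turn a static view sequence into a turntable video is ffmpeg, swapped in here as an example tool since the article does not name one. The sketch builds a standard ffmpeg command (-framerate, -pattern_type glob, libx264, yuv420p); glob input is unavailable in Windows builds of ffmpeg, and frames should use zero-padded names so alphabetical order matches view order. The frame folder and output name are placeholders.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List


def ffmpeg_command(frames_dir: str, output: str, fps: int = 24) -> List[str]:
    """Build an ffmpeg call that encodes every PNG in frames_dir into an H.264 video."""
    pattern = str(Path(frames_dir) / "*.png")
    return [
        "ffmpeg", "-y",  # -y overwrites an existing output file
        "-framerate", str(fps),
        "-pattern_type", "glob", "-i", pattern,
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",  # widely supported pixel format for players
        output,
    ]


def frames_to_video(frames_dir: str, output: str, fps: int = 24) -> None:
    """Run the command built above; requires ffmpeg on PATH."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg is not on PATH")
    subprocess.run(ffmpeg_command(frames_dir, output, fps), check=True)
```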

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
