DiaMoE-TTS - Tsinghua University and Giant Network's open-source multi-dialect speech synthesis framework

What is DiaMoE-TTS?

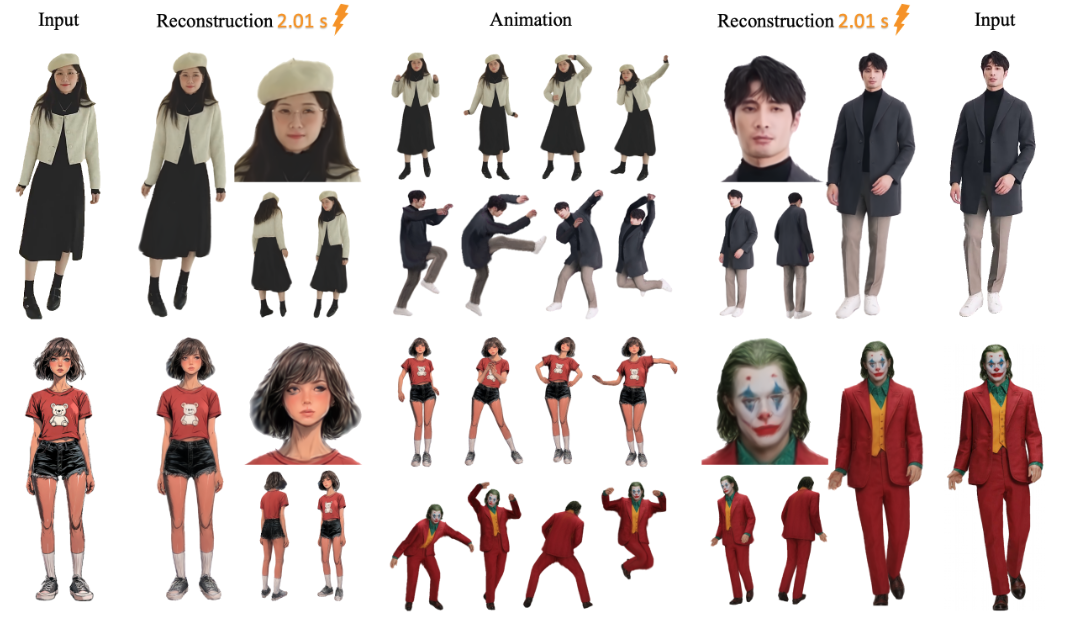

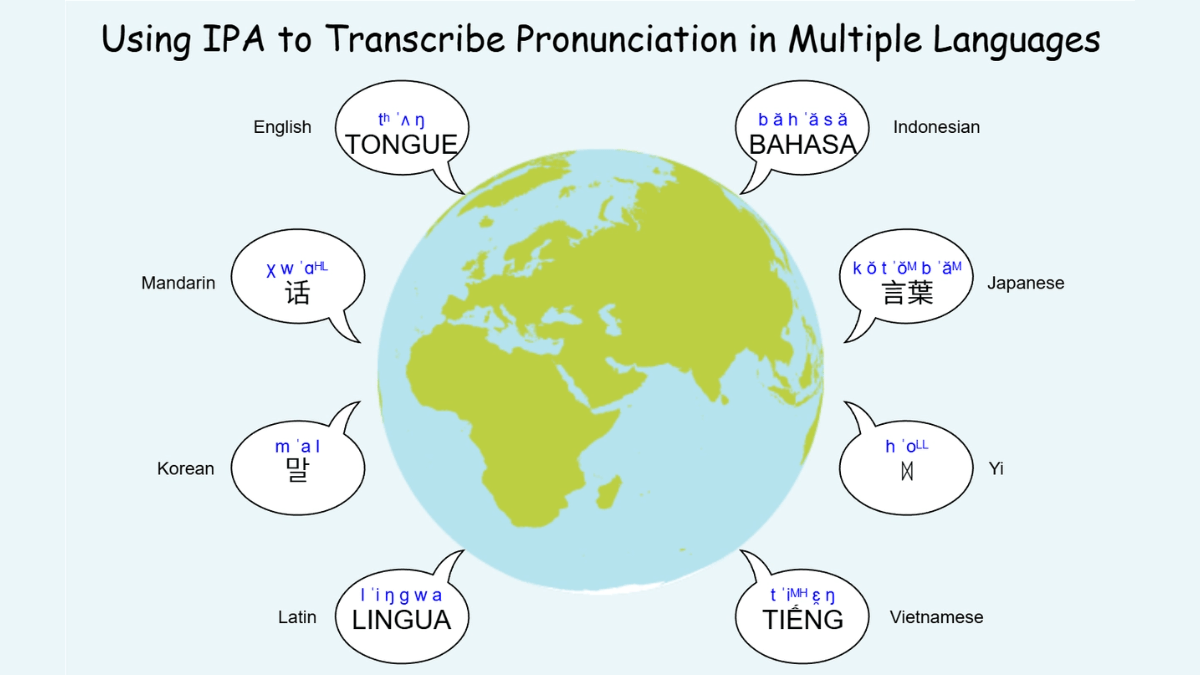

DiaMoE-TTS is a multi-dialect speech synthesis framework jointly open-sourced by Tsinghua University and Giant Network. Built on the International Phonetic Alphabet (IPA), it addresses three problems in dialect TTS: scarce data, inconsistent orthography, and complex phonological variation. A unified IPA front end normalizes phoneme representations to remove cross-dialect differences. A dialect-aware Mixture-of-Experts (MoE) architecture lets different expert networks specialize in different dialects, preserving each dialect's distinctive timbre and rhythm. The framework is built on F5-TTS and adds low-rank adapters (LoRA) and conditional adapters for parameter-efficient dialect transfer, so a new dialect can be added by fine-tuning only a small number of parameters. Training relies entirely on open-source data, which removes the need for expensive manually labeled speech and lowers the barrier to entry. Experiments show that DiaMoE-TTS produces natural, expressive speech and achieves zero-shot performance on unseen dialects and specialized domains (e.g., Peking Opera) with only a few hours of data. DiaMoE-TTS supports 11 dialects plus Mandarin and can be extended to European languages.
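The paragraph above says DiaMoE-TTS adds LoRA adapters to F5-TTS so that only a small number of parameters need fine-tuning. The sketch below illustrates the general LoRA technique in dependency-free Python. A frozen weight `W` is combined with a trainable low-rank product, giving `y = W x + (alpha / r) * B (A x)`. The class name `LoRALinear`, the dimensions, and the initialization scale are hypothetical choices for illustration, not DiaMoE-TTS's actual code.

```python
import random


class LoRALinear:
    """Frozen linear layer plus a trainable low-rank update: y = W x + (alpha / r) * B (A x).

    Hypothetical illustration of the generic LoRA idea; not DiaMoE-TTS's implementation.
    """

    def __init__(self, d_in: int, d_out: int, rank: int, alpha: float = 1.0, seed: int = 0):
        rng = random.Random(seed)
        # Frozen "pretrained" weight W (d_out x d_in); stands in for a backbone layer.
        self.W = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(d_out)]
        # Trainable factors: A (rank x d_in) is random, B (d_out x rank) starts at zero,
        # so the adapter is a no-op before fine-tuning.
        self.A = [[rng.gauss(0, 0.02) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    @staticmethod
    def _matvec(M, x):
        # Plain matrix-vector product over nested lists.
        return [sum(m * v for m, v in zip(row, x)) for row in M]

    def forward(self, x):
        base = self._matvec(self.W, x)                       # frozen path
        delta = self._matvec(self.B, self._matvec(self.A, x))  # low-rank trainable path
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self) -> int:
        # rank * d_in (A) + d_out * rank (B)
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def frozen_params(self) -> int:
        return len(self.W) * len(self.W[0])


if __name__ == "__main__":
    layer = LoRALinear(d_in=512, d_out=512, rank=8)
    t, f = layer.trainable_params(), layer.frozen_params()
    print(f"trainable={t}, frozen={f}, ratio={t / f:.2%}")
```

For a 512x512 layer with rank 8, the adapter has 2 * 8 * 512 = 8,192 trainable values, compared with 262,144 frozen ones. This ratio is why LoRA-style transfer needs so few tuned parameters.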

Key Features of DiaMoE-TTS

- Unified IPA Front End: Uses the International Phonetic Alphabet (IPA) as the input representation and builds a highly extensible phoneme inventory. This inventory supports phoneme annotation across multiple dialects and languages, removes cross-dialect inconsistencies, and keeps modeling consistent and generalizable.

- Dialect-Aware MoE Architecture: Introduces a dialect-aware Mixture-of-Experts architecture in which different expert networks specialize in the features of different dialects. A dynamic gating mechanism automatically routes each input to the most suitable experts, preserving the distinctive timbre and rhythm of each dialect.

- Low-Resource Dialect Adaptation: Uses a parameter-efficient transfer strategy, so that a new low-resource dialect can be added by fine-tuning only a small number of parameters. The backbone and MoE modules stay frozen to avoid forgetting existing knowledge.

- Multi-Stage Training: Training proceeds through four phases: IPA transfer initialization, multi-dialect joint training, dialect-expert enhancement, and fast low-resource adaptation. These phases progressively improve performance and accommodate dialect diversity.

- Open-Data Driven: Trained entirely on open-source ASR data, which removes the need for expensive manual speech labeling, lowers the technical barrier, and supports scalable speech synthesis built on open data.

- Strong Generalization: Maintains high pronunciation accuracy on low-resource dialects (e.g., 91.7% for Hakka) and achieves zero-shot performance on unseen dialects and specialized domains such as Peking Opera.

- Rich Application Scenarios: Supports synthesis of various Chinese dialects and Mandarin, and can be extended to European languages. It applies to dialect preservation, culture, and entertainment, and provides technical support for passing on dialects and developing cultural industries.

- Complete Toolchain: Provides training and inference scripts, pre-trained models, and IPA front ends for open-source datasets, enabling quick onboarding and accelerating research and development.
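The features above describe a dialect-aware MoE layer whose dynamic gate picks the most suitable experts. The sketch below shows one common way to implement such gating: a softmax gate, top-k selection, and renormalized weights. Several details are assumptions, since the article does not specify how DiaMoE-TTS's gate is built. The gate input is assumed to be the hidden state concatenated with a dialect embedding, `k` is a free choice, and the experts are random toy maps. All function names are hypothetical.

```python
import math
import random
from typing import Callable, Dict, List, Sequence

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(logits: Sequence[float], k: int) -> Dict[int, float]:
    """Keep the k most probable experts and renormalize their weights to sum to 1."""
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def make_expert(dim: int, seed: int) -> Expert:
    """Hypothetical toy expert: a random dim x dim linear map followed by tanh."""
    rng = random.Random(seed)
    W = [[rng.gauss(0, 0.3) for _ in range(dim)] for _ in range(dim)]
    return lambda h: [math.tanh(sum(w * x for w, x in zip(row, h))) for row in W]


def dialect_moe(h: Vector, dialect_emb: Vector, gate_W: List[Vector],
                experts: List[Expert], k: int = 2):
    """Route h to the top-k experts; the gate sees both content and dialect features."""
    gate_in = h + dialect_emb  # list concatenation: [content features | dialect features]
    logits = [sum(w * x for w, x in zip(row, gate_in)) for row in gate_W]
    weights = top_k_gate(logits, k)
    out = [0.0] * len(h)
    for idx, w in weights.items():
        y = experts[idx](h)
        out = [o + w * yi for o, yi in zip(out, y)]  # weighted sum of selected experts
    return out, weights


if __name__ == "__main__":
    dim, n_experts, n_dialects = 8, 4, 3
    rng = random.Random(0)
    experts = [make_expert(dim, seed=i) for i in range(n_experts)]
    gate_W = [[rng.gauss(0, 1) for _ in range(dim + n_dialects)] for _ in range(n_experts)]
    h = [rng.gauss(0, 1) for _ in range(dim)]
    for d in range(n_dialects):
        one_hot = [1.0 if j == d else 0.0 for j in range(n_dialects)]
        _, weights = dialect_moe(h, one_hot, gate_W, experts, k=2)
        print(f"dialect {d}: experts {sorted(weights)}")
```

Because the dialect embedding feeds the gate, the same acoustic content can be routed to different experts depending on the target dialect. This is one plausible way for experts to specialize per dialect.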

Core Benefits of DiaMoE-TTS

- Data-Driven and Open Source: Trained entirely on open-source data, which removes the need for expensive manually labeled speech and lowers technical barriers and costs.

- Strong Generalization: Maintains high pronunciation accuracy on low-resource dialects and achieves zero-shot performance on unseen dialects and specialized domains (e.g., Peking Opera).

- Dialect Preservation and Expansion: Supports a wide range of Chinese dialects and Mandarin, and can be extended to European languages, providing strong support for dialect preservation and linguistic diversity.

- Fast Adaptation and Transfer: The parameter-efficient transfer strategy allows a new dialect to be added by fine-tuning only a few parameters, enabling fast adaptation.

- Natural Speech Synthesis: The generated speech is natural and expressive, and experimental results show strong speech quality and expressiveness.
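The fast-adaptation stage described earlier keeps the backbone and MoE modules frozen and fine-tunes only a small set of adapter parameters. The sketch below shows that freezing rule applied to named parameter groups. It also reports the fraction of parameters that remain trainable. The prefixes `backbone.`, `moe.`, `lora.`, and `cond_adapter.`, the `ParamGroup` type, and all sizes are hypothetical stand-ins, not DiaMoE-TTS's real module names or parameter counts.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical parameter-group prefixes; the real module names in DiaMoE-TTS may differ.
FROZEN_PREFIXES = ("backbone.", "moe.")
TRAINABLE_PREFIXES = ("lora.", "cond_adapter.")


@dataclass
class ParamGroup:
    name: str
    numel: int
    trainable: bool = True


def configure_low_resource_adaptation(groups: List[ParamGroup]) -> float:
    """Freeze backbone and MoE groups, unfreeze adapters; return the trainable fraction."""
    for g in groups:
        if g.name.startswith(TRAINABLE_PREFIXES):
            g.trainable = True
        elif g.name.startswith(FROZEN_PREFIXES):
            g.trainable = False
        else:
            # Fail loudly rather than silently training or freezing an unknown module.
            raise ValueError(f"unassigned parameter group: {g.name}")
    trainable = sum(g.numel for g in groups if g.trainable)
    total = sum(g.numel for g in groups)
    return trainable / total


if __name__ == "__main__":
    # Illustrative sizes only, not DiaMoE-TTS's real parameter counts.
    groups = [
        ParamGroup("backbone.transformer", 300_000_000),
        ParamGroup("moe.experts", 20_000_000),
        ParamGroup("lora.attention", 2_000_000),
        ParamGroup("cond_adapter.dialect", 500_000),
    ]
    frac = configure_low_resource_adaptation(groups)
    for g in groups:
        print(f"{g.name:<24} {'train' if g.trainable else 'frozen'}")
    print(f"trainable fraction: {frac:.2%}")
```

In a PyTorch codebase, the same rule would typically loop over `model.named_parameters()` and set each parameter's `requires_grad` flag based on its name prefix.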

Where can I find DiaMoE-TTS?

- GitHub repository: https://github.com/GiantAILab/DiaMoE-TTS

- Hugging Face model repository: https://huggingface.co/RICHARD12369/DiaMoE_TTS

- arXiv technical paper: https://www.arxiv.org/pdf/2509.22727

Who DiaMoE-TTS Is For

- Dialect Researchers: Provides efficient tools for studying the phonological features and phonological evolution of Chinese dialects and other languages, supporting linguistic research.

- Speech Synthesis Developers: Provides an open-source framework and pre-trained models that help developers quickly build and optimize multi-dialect speech synthesis systems.

- Dialect Preservationists: Supports dialect preservation efforts by recording and passing on endangered dialects through speech synthesis, promoting linguistic diversity.

- Cultural and Entertainment Practitioners: In film, television, broadcasting, and games, it can be used to produce voice content with local character and enrich cultural expression.

- Educators: Can be used to develop dialect teaching materials that help students learn and understand different dialects and support language education.

- Technology Enthusiasts: Anyone interested in speech synthesis and artificial intelligence can learn and explore through the open-source code and documentation.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
