Dia: a text-to-speech model for generating hyper-realistic multi-speaker conversations

General Introduction

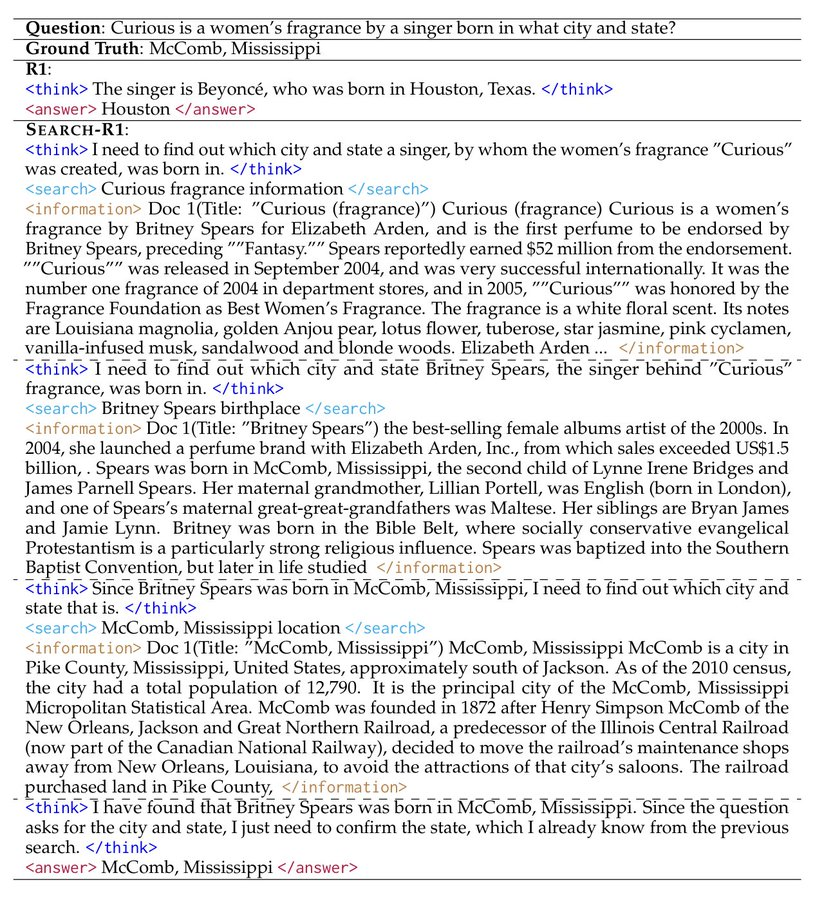

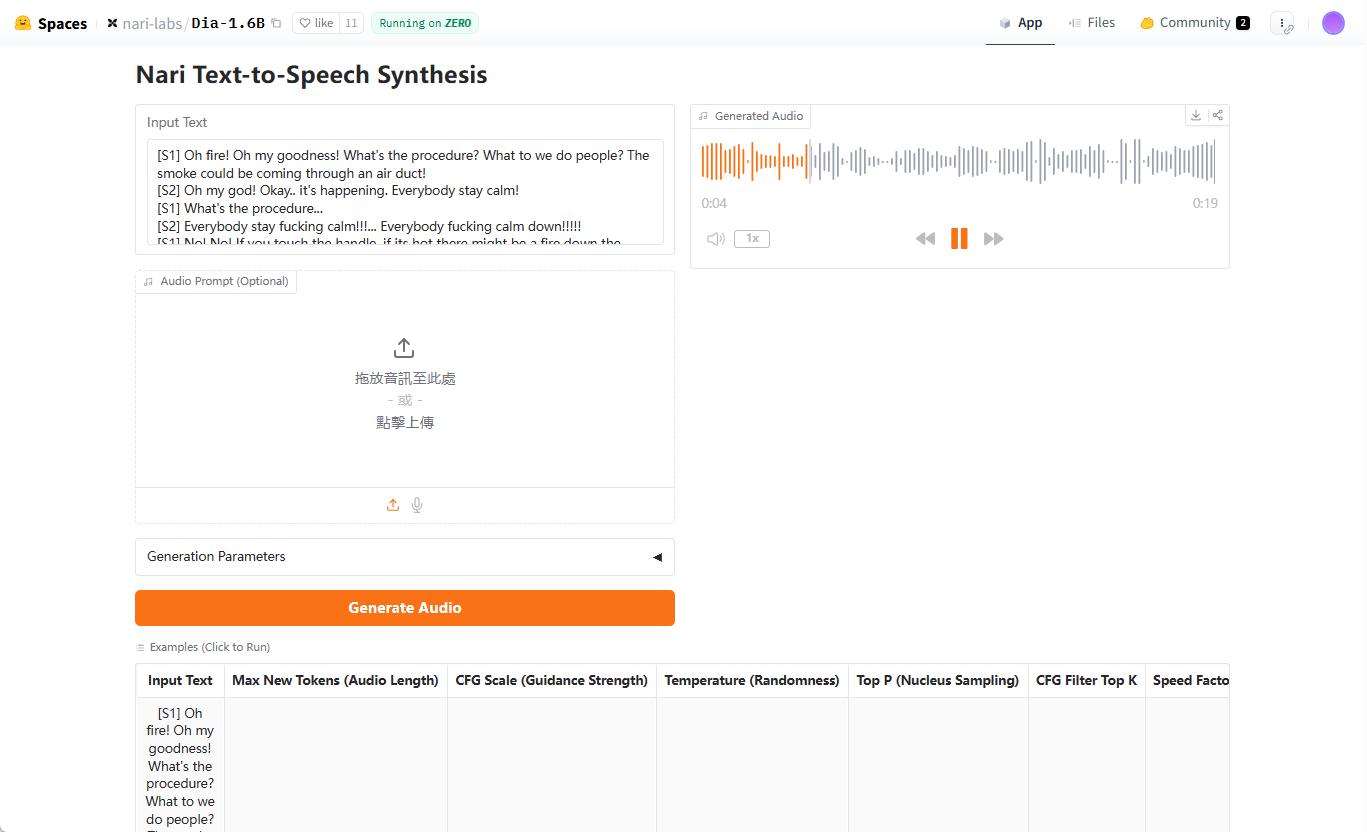

Dia is an open source text-to-speech (TTS) model developed by Nari Labs that focuses on generating hyper-realistic conversational audio. It turns a text script into a realistic multi-character conversation in a single pass, supports emotion and intonation control, and can even generate non-verbal expressions such as laughter. At the heart of Dia is a 1.6-billion-parameter model hosted on Hugging Face, with the code and pretrained models available via GitHub. Designed with an emphasis on openness and flexibility, Dia gives users full control over dialogue scripts and speech output, and it provides a Gradio interface for quickly trying out dialogue generation. The project is supported by the Google TPU Research Cloud and the Hugging Face ZeroGPU Grant, and is inspired by technologies such as SoundStorm and Parakeet.

Demo address: https://huggingface.co/spaces/nari-labs/Dia-1.6B

Feature List

- Hyper-realistic dialogue generation: Convert text scripts to multi-character dialogue audio, with support for multiple speaker tags (e.g. [S1], [S2]).

- Emotion and tone control: Adjust the emotion and tone of the voice through audio prompts or fixed seeds.

- Non-verbal expression: Generate non-verbal sounds such as laughter and pauses to make conversations more realistic.

- Gradio interactive interface: Provides a visual interface that simplifies dialogue generation and audio output.

- Open source model and code: Users can download the pretrained model from Hugging Face or get the source code on GitHub.

- Cross-device support: Runs on GPUs, with CPU support planned for the future.

- Reproducible settings: Set a random seed to get consistent results across runs.

Usage Guide

Installation process

To use Dia, you first need to clone the GitHub repository and set up your environment. Here are the detailed steps:

- Clone the repository:

Run the following commands in the terminal:

    git clone https://github.com/nari-labs/dia.git
    cd dia

- Create a virtual environment:

Use Python to create a virtual environment that isolates the dependencies:

    python -m venv .venv
    source .venv/bin/activate  # Windows users run .venv\Scripts\activate

- Install dependencies:

Dia uses the uv tool to manage dependencies. Install uv and run the app:

    pip install uv
    uv run app.py

This automatically installs the required libraries and launches the Gradio interface.

- Hardware requirements:

  - GPU: An NVIDIA GPU with CUDA support is recommended.

  - CPU: Dia is currently optimized for GPUs; CPU support is planned.

  - Memory (RAM): At least 16GB of RAM; loading the model is memory-intensive, so more may be needed.

- Verify the installation:

After running uv run app.py, the terminal displays the local URL of the Gradio interface (usually http://127.0.0.1:7860). Open this URL in your browser and check that the interface loads properly.
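Before launching the app, it can help to confirm that the setup steps above took effect. The following is a minimal sketch, not part of Dia: the function names are hypothetical, and the device check assumes PyTorch is the backend that Dia's dependencies install (it falls back to "cpu" if PyTorch cannot be imported).

```python
import shutil
import sys


def in_virtualenv() -> bool:
    """Return True when the interpreter runs inside a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


def uv_available() -> bool:
    """Return True when a `uv` executable can be found on PATH."""
    return shutil.which("uv") is not None


def pick_device() -> str:
    """Return "cuda" when PyTorch reports a usable CUDA GPU, otherwise "cpu"."""
    try:
        import torch  # assumption: installed as one of Dia's dependencies
    except (ImportError, OSError):
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


def environment_report() -> dict:
    """Collect the three checks into one dictionary."""
    return {
        "virtualenv": in_virtualenv(),
        "uv_on_path": uv_available(),
        "device": pick_device(),
    }


if __name__ == "__main__":
    for name, value in environment_report().items():
        print(f"{name}: {value}")
```

If the report shows "device: cpu" on a machine with an NVIDIA GPU, the CUDA driver or the PyTorch build is the first thing to check.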

Using the Gradio Interface

The Gradio interface is Dia's primary method of interaction and is suitable for quick testing and generating dialogs. The steps are as follows:

- Open the interface:

Start the app with uv run app.py. The interface contains a text input box, parameter settings, and an audio output area.

- Enter a dialogue script:

Enter the script in the text box, using markers such as [S1] and [S2] to distinguish between speakers. For example:

    [S1] Hello, how was your day? [S2] Not bad, just a bit busy. (laughs)

The script supports non-verbal tags such as (laughs).

- Set the generation parameters:

  - Max audio tokens (--max-tokens): Controls the length of the generated audio, default 3072.

  - CFG scale (--cfg-scale): Classifier-free guidance scale, default 3.0; higher values make the output follow the input text more closely.

  - Temperature (--temperature): Controls randomness, default 1.3; the higher the value, the more random the output.

  - Top-p (--top-p): Nucleus sampling probability, default 0.95.

These parameters can be adjusted in the interface; beginners can keep the default values.

- Add an audio prompt (optional):

For voice consistency, you can upload a reference audio clip. Click the "Audio Prompt" option in the interface and select a WAV file. The official documentation says that guidelines for audio prompting will be released soon; for now, you can refer to the example in the Gradio interface.

- Generate audio:

Click the "Generate" button and the model will process the script and output the audio. Generation time depends on hardware performance and usually ranges from a few seconds to tens of seconds. The generated audio can be previewed in the interface or downloaded as a WAV file.

- Fix the seed:

To get the same voice on every run, set a random seed. Click the "Seed" option in the interface and enter an integer (e.g. 35). If no seed is set, Dia may generate a different voice each time.
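When scripts come from structured data rather than being typed by hand, the [S1]/[S2] format described above can be assembled programmatically. This is a small illustrative sketch; build_script is a hypothetical helper, not a Dia function, and it only produces the text you would paste into the input box.

```python
from typing import Iterable, Tuple


def build_script(turns: Iterable[Tuple[int, str]]) -> str:
    """Join (speaker_number, line) pairs into a single tagged script string."""
    parts = []
    for speaker, line in turns:
        if speaker < 1:
            raise ValueError(f"speaker numbers start at 1, got {speaker}")
        # Collapse runs of whitespace and newlines so each turn stays on one line.
        text = " ".join(line.split())
        if not text:
            raise ValueError(f"empty line for speaker S{speaker}")
        parts.append(f"[S{speaker}] {text}")
    return " ".join(parts)


if __name__ == "__main__":
    script = build_script([
        (1, "Hello, how was your day?"),
        (2, "Not bad, just a bit busy. (laughs)"),
    ])
    print(script)
```

Keeping turns as data makes it easy to reorder lines or swap speakers without editing tags by hand.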

Command Line Usage

In addition to Gradio, Dia also supports command line operations for developers or batch generation. Below are examples:

- Run the CLI script:

Run in the virtual environment:

    python cli.py "[S1] Hello! [S2] Hi, I'm doing well." --output output.wav

- Specify the model:

By default, the CLI uses the nari-labs/Dia-1.6B model from Hugging Face. To use a local model, provide a configuration file and a checkpoint:

    python cli.py --local-paths --config config.yaml --checkpoint checkpoint.pt "[S1] Test" --output test.wav

- Adjust parameters:

Generation parameters can be set from the command line, for example:

    python cli.py "[S1] Hello" --output out.wav --max-tokens 3072 --cfg-scale 3.0 --temperature 1.3 --top-p 0.95 --seed 35
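For batch generation, the command lines above can be built from Python instead of typed one by one. The sketch below only uses the flags listed in this article and passes the script as the first positional argument, as in the first example. GenerationParams and build_cli_command are hypothetical names, the range checks are generic sanity checks rather than limits documented by Dia, and cli.py is assumed to be in the current directory.

```python
import shlex
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class GenerationParams:
    """Generation settings; defaults mirror the values listed in this article."""
    max_tokens: int = 3072
    cfg_scale: float = 3.0
    temperature: float = 1.3
    top_p: float = 0.95
    seed: Optional[int] = None  # None leaves the seed unset

    def __post_init__(self) -> None:
        # Assumed sanity checks, not limits taken from Dia itself.
        if self.max_tokens <= 0:
            raise ValueError("max_tokens must be positive")
        if not 0.0 < self.top_p <= 1.0:
            raise ValueError("top_p must be in (0, 1]")


def build_cli_command(
    text: str, output: str, params: GenerationParams = GenerationParams()
) -> List[str]:
    """Return the argument list for one cli.py invocation."""
    cmd = [
        "python", "cli.py", text,
        "--output", output,
        "--max-tokens", str(params.max_tokens),
        "--cfg-scale", str(params.cfg_scale),
        "--temperature", str(params.temperature),
        "--top-p", str(params.top_p),
    ]
    if params.seed is not None:
        cmd += ["--seed", str(params.seed)]
    return cmd


if __name__ == "__main__":
    jobs = {
        "greeting.wav": "[S1] Hello! [S2] Hi, I'm doing well.",
        "test.wav": "[S1] Test",
    }
    for output, text in jobs.items():
        # Print the shell-quoted command instead of running it.
        print(shlex.join(build_cli_command(text, output, GenerationParams(seed=35))))
```

To actually run each job, the returned list can be handed to subprocess.run(cmd, check=True); passing a list avoids shell quoting problems with the brackets and spaces in the script text.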

Featured Functions

- Multi-character dialogue:

Dia's core strength is generating multi-speaker dialogue. When a script uses markers such as [S1] and [S2] to differentiate characters, the model automatically assigns a different voice to each one. It is recommended to make each character's tone or emotion explicit in the script, for example:

    [S1] (excited) We won! [S2] (surprised) Really? That's great!

- Non-verbal expression:

Add markers such as (laughs) or (pause) to the script and Dia will generate the corresponding sounds. For example:

    [S2] That's so funny! (laughs)

- Voice consistency:

To avoid getting a different voice on each run, either fix the seed or use an audio prompt. The seed can be fixed in the Gradio interface or on the command line via the --seed option. Audio prompts should be high-quality WAV files containing clear speech.
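To check a script before sending it to the model, the speaker markers and parenthesized tags described above can be pulled apart with two regular expressions. This is an illustrative sketch only: split_turns and Turn are hypothetical names, and the parser treats any parenthesized text as a tag, which may be broader than the set of tags the model actually responds to.

```python
import re
from typing import List, NamedTuple

SPEAKER_TAG = re.compile(r"\[(S\d+)\]")     # matches [S1], [S2], ...
NONVERBAL_TAG = re.compile(r"\(([^()]+)\)")  # matches (laughs), (pause), ...


class Turn(NamedTuple):
    speaker: str
    text: str
    tags: List[str]


def split_turns(script: str) -> List[Turn]:
    """Split a tagged script into one Turn per speaker marker."""
    # With one capture group, re.split returns
    # [prefix, tag1, text1, tag2, text2, ...].
    pieces = SPEAKER_TAG.split(script)
    if pieces[0].strip():
        raise ValueError("script must start with a speaker tag such as [S1]")
    turns = []
    for speaker, text in zip(pieces[1::2], pieces[2::2]):
        text = text.strip()
        turns.append(Turn(speaker, text, NONVERBAL_TAG.findall(text)))
    return turns


if __name__ == "__main__":
    for turn in split_turns("[S1] (excited) We won! [S2] (surprised) Really? That's great!"):
        print(turn.speaker, turn.tags, turn.text)
```

A check like this can catch a missing leading speaker tag or an unexpectedly empty turn before any generation time is spent.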

Caveats

- Model not fine-tuned on a specific voice: The generated voice may vary between runs; use a fixed seed or an audio prompt to keep it consistent.

- Hardware limitations: GPU performance has a significant impact on generation speed, and generation may be slow on lower-end devices.

- Ethical guidelines: Nari Labs provides ethical and legal guidelines for use, which users need to follow to avoid generating inappropriate content.
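The first caveat relies on seeding, so it is worth seeing what fixing a seed generally means in a Python and PyTorch program. The sketch below shows the common pattern of seeding every random number generator in play; it illustrates the general mechanism and is not taken from Dia's code. set_seed is a hypothetical helper, and NumPy and PyTorch are seeded only if they are installed.

```python
import random


def set_seed(seed: int) -> None:
    """Seed Python's RNG, plus NumPy's and PyTorch's when they are installed."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)
        if torch.cuda.is_available():
            # Seed every visible GPU so sampling on any device is repeatable.
            torch.cuda.manual_seed_all(seed)
    except ImportError:
        pass


if __name__ == "__main__":
    set_seed(35)
    first = random.random()
    set_seed(35)
    second = random.random()
    print(first == second)
```

Even with a fixed seed, PyTorch does not guarantee identical results across different releases or hardware, so a seed is best treated as reproducible on one machine and one installation.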

Application Scenarios

- Content creation

Dia is well suited to generating realistic dialogue for podcasts, animations, or short videos. Creators can enter a script and quickly generate character voices, saving on recording costs. For example, animators can generate dialogue in different tones for their characters to enrich their work.

- Education and training

Dia can generate audio dialogues for language learning or role-play training. For example, language teachers can create multi-role dialogues that simulate real-life scenarios to help students practice listening and speaking.

- Game development

Game developers can use Dia to generate dynamic dialogue for NPCs. Scripts support emotion tags, so character-specific speech can be generated for different scenarios.

- Research and development

AI researchers can explore TTS technology by building on Dia's open source code. The model can be loaded locally, which suits experimentation and optimization.

Q&A

- What input formats does Dia support?

Dia accepts text scripts that use markers such as [S1] and [S2] to differentiate speakers. Non-verbal tags such as (laughs) are supported, and a WAV audio prompt can optionally be supplied.

- How do I ensure that the generated voices are consistent?

Set a fixed seed (--seed) or upload an audio prompt. The seed can be set in the Gradio interface or on the command line, and audio prompts need to be high-quality WAV files.

- Does Dia support CPU operation?

Dia is currently optimized to run on GPUs, with CPU support planned for the future. NVIDIA GPUs are recommended for best performance.

- How long does it take to generate audio?

Generation time depends on the hardware and the length of the script. On high-performance GPUs, short dialogues typically take a few seconds to generate, and long dialogues can take tens of seconds.

- Is Dia free?

Dia is an open source project, and the code and models are free. Users are responsible for the cost of the hardware needed to run it.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
