DeepSeek Releases Unified Multimodal Understanding and Generative Models: from JanusFlow to Janus-Pro

JanusFlow Speed Reading

The DeepSeek team has released another new model: Janus-Pro, an innovative multimodal framework launched in the early hours of the 28th. It is a unified model that handles both multimodal understanding and generation tasks. Built on DeepSeek-LLM-1.5b-base/DeepSeek-LLM-7b-base, the model supports 384 x 384 image input and uses a dedicated tokenizer for image generation. Its most important feature is that visual encoding is split into separate pathways while a single transformer architecture is kept for processing.

This design not only resolves the conflict between the two roles that a single visual encoder must play in traditional models, but also makes the whole system more flexible. In practice, Janus-Pro outperforms previous unified models and even rivals task-specific models on certain tasks. It beats OpenAI's DALL-E 3 and Stable Diffusion on the GenEval and DPG-Bench benchmarks.

The Janus model line, which began with JanusFlow, aims to build a unified framework for multimodal understanding and generation. The core idea is to combine an autoregressive language model (LLM) with a Rectified Flow generation model, so that a single model achieves both strong visual understanding and high-quality image generation. Janus-Pro, as an advanced version of Janus, further improves performance through comprehensive optimizations in training strategy, data size, and model size, and has made significant progress on several benchmarks. This article systematically reviews the evolution of the Janus models from JanusFlow to Janus-Pro, focusing on their features, parameter characteristics, and key improvements.

1. JanusFlow: the cornerstone of unified architecture

Paper: https://arxiv.org/pdf/2411.07975

JanusFlow's core innovation is its minimalist unified architecture, which integrates a rectified flow generation model into the autoregressive LLM framework without requiring complex modifications to the LLM structure. The core features of this architecture include:

- Rectified flow image generation: JanusFlow uses a rectified flow model for image generation. Starting from Gaussian noise, it iteratively predicts velocity vectors to update the latent representation of the image, and a decoder then produces the final high-quality image. This approach avoids the limitation of traditional methods in which the LLM only acts as a conditional generator and lacks direct generation capability.

- Decoupled visual encoders: To optimize the performance of the unified model, JanusFlow uses a decoupled encoder strategy, in which separate visual encoders handle the understanding task and the generation task:

  - Understanding encoder (f_enc): Uses the pre-trained SigLIP-Large-Patch/16 model, which extracts semantic features from images to enhance multimodal understanding.

  - Generation encoder (g_enc) and decoder (g_dec): ConvNeXt modules trained from scratch and dedicated to the image generation task, to optimize generation quality.

- Representation alignment mechanism: During unified training, JanusFlow introduces a representation alignment mechanism that aligns the intermediate representations of the generation and understanding modules, thus enhancing semantic coherence and consistency in the generation process.

- Three-stage training strategy: JanusFlow uses a fine-grained three-stage training program:

  - Stage 1: Adaptation of randomly initialized components - The linear layers, generation encoder, and generation decoder are trained to work with the pre-trained LLM and SigLIP encoder, serving as an initialization phase for subsequent training.

  - Stage 2: Unified pre-training - The entire model except for the visual encoder is trained on a mix of multimodal understanding, image generation, and plain text data to establish the model's initial unified capabilities.

  - Stage 3: Supervised fine-tuning - Instruction fine-tuning data is used to further train the model and improve its responses to user instructions. The parameters of the SigLIP encoder are unfrozen at this stage.
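
The rectified flow sampling loop described above (start from Gaussian noise, repeatedly predict a velocity, update the latent) can be sketched with plain Euler integration. This is a minimal illustration, not JanusFlow's implementation: `euler_sample`, `toy_velocity`, and `TARGET` are hypothetical names, and the "network" is replaced by a toy oracle that always points straight at a fixed target latent.

```python
import random


def euler_sample(velocity_fn, z0, num_steps=30):
    """Integrate dz/dt = v(z, t) from t=0 (noise) to t=1 (data) with Euler steps."""
    z = list(z0)
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt
        v = velocity_fn(z, t)
        z = [zi + vi * dt for zi, vi in zip(z, v)]
    return z


# Hypothetical stand-in for the learned model: a velocity field that points
# straight from the current latent toward a fixed target latent.
TARGET = [0.5, -1.0, 2.0]


def toy_velocity(z, t):
    # t never reaches 1.0 inside euler_sample, so the division is safe.
    return [(xi - zi) / (1.0 - t) for xi, zi in zip(TARGET, z)]


rng = random.Random(0)
z0 = [rng.gauss(0.0, 1.0) for _ in TARGET]  # start from Gaussian noise
latent = euler_sample(toy_velocity, z0)
print(latent)
```

In the real model the velocity would come from the LLM conditioned on the text prompt, and the final latent would be passed to the image decoder; neither step is modeled here.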

Parameter characteristics:

- Foundation LLM: Lightweight LLM architecture with 1.3B parameters.

- Visual encoders: SigLIP-Large-Patch/16 (understanding); ConvNeXt (generation encoder and decoder).

- Image Resolution: 384 × 384 pixels.

Performance: JanusFlow achieves strong performance on both text-to-image generation and multimodal understanding tasks, outperforming many specialized models and demonstrating the effectiveness of its unified architecture.

2. Janus-Pro: a comprehensive upgrade of data, models and strategies

Paper: https://github.com/deepseek-ai/Janus/blob/main/janus_pro_tech_report.pdf

As an improved version of Janus, Janus-Pro upgrades JanusFlow in the following three main areas:

- Optimized training strategies: Janus-Pro fine-tunes JanusFlow's three-stage training strategy, aiming to address training efficiency and performance bottlenecks:

  - Stage 1: Extended ImageNet training - Increasing the number of training steps on the ImageNet dataset allows the model to learn pixel dependencies more fully and improves its basic image generation capability.

  - Stage 2: Focus on text-to-image data - In Stage 2 training, the ImageNet data is removed and the regular text-to-image dataset is used directly, allowing the model to learn more efficiently to generate high-quality images from dense text descriptions.

  - Stage 3: Adjusting the data ratio - In the supervised fine-tuning stage, the ratio of multimodal understanding data, plain text data, and text-to-image data is adjusted from 7:3:10 to 5:1:4, to further improve multimodal understanding performance while preserving visual generation capability.

- Extended training data: Janus-Pro dramatically scales the size and diversity of the training data to improve the generalization ability and generation quality of the model:

  - Multimodal understanding data: In Stage 2 pre-training, about 90 million new samples were added, covering a wider range of image captioning data (e.g., YFCC) as well as table, chart, and document understanding data (e.g., Docmatix). In Stage 3 fine-tuning, additional datasets from DeepSeek-VL2 are introduced, such as meme understanding and Chinese dialogue data, which significantly improves the model's dialogue and multitasking abilities.

  - Visual generation data: To improve the aesthetic quality and stability of the generated images, Janus-Pro introduces about 72 million high-quality synthetic aesthetic samples and adjusts the ratio of real data to synthetic data to 1:1. Experiments show that adding synthetic data accelerates model convergence and significantly improves the aesthetic quality and stability of the generated images.

- Extended model size: Janus-Pro not only retains the 1.5B-parameter model size of its predecessor, but also scales up to 7B parameters, so the Janus-Pro series is available in 1.5B and 7B sizes. Experimental results show that a larger LLM significantly improves model performance and accelerates convergence, verifying the scalability of the Janus architecture.

Parameter characteristics:

- Model Size: Available in 1.5B and 7B model sizes.

- Architecture: Follows JanusFlow's decoupled visual encoder architecture.

- Training data: Significantly expanded and optimized training data for multimodal understanding and vision generation.

- Image Resolution: The image resolution used in the experiments remained at 384 × 384 pixels.

Performance: Janus-Pro demonstrates the effectiveness of the data, model, and strategy upgrades by achieving significant gains across benchmarks. On the multimodal understanding benchmark MMBench and the text-to-image generation benchmarks GenEval and DPG-Bench in particular, it outperforms JanusFlow and other advanced unified and specialized models.

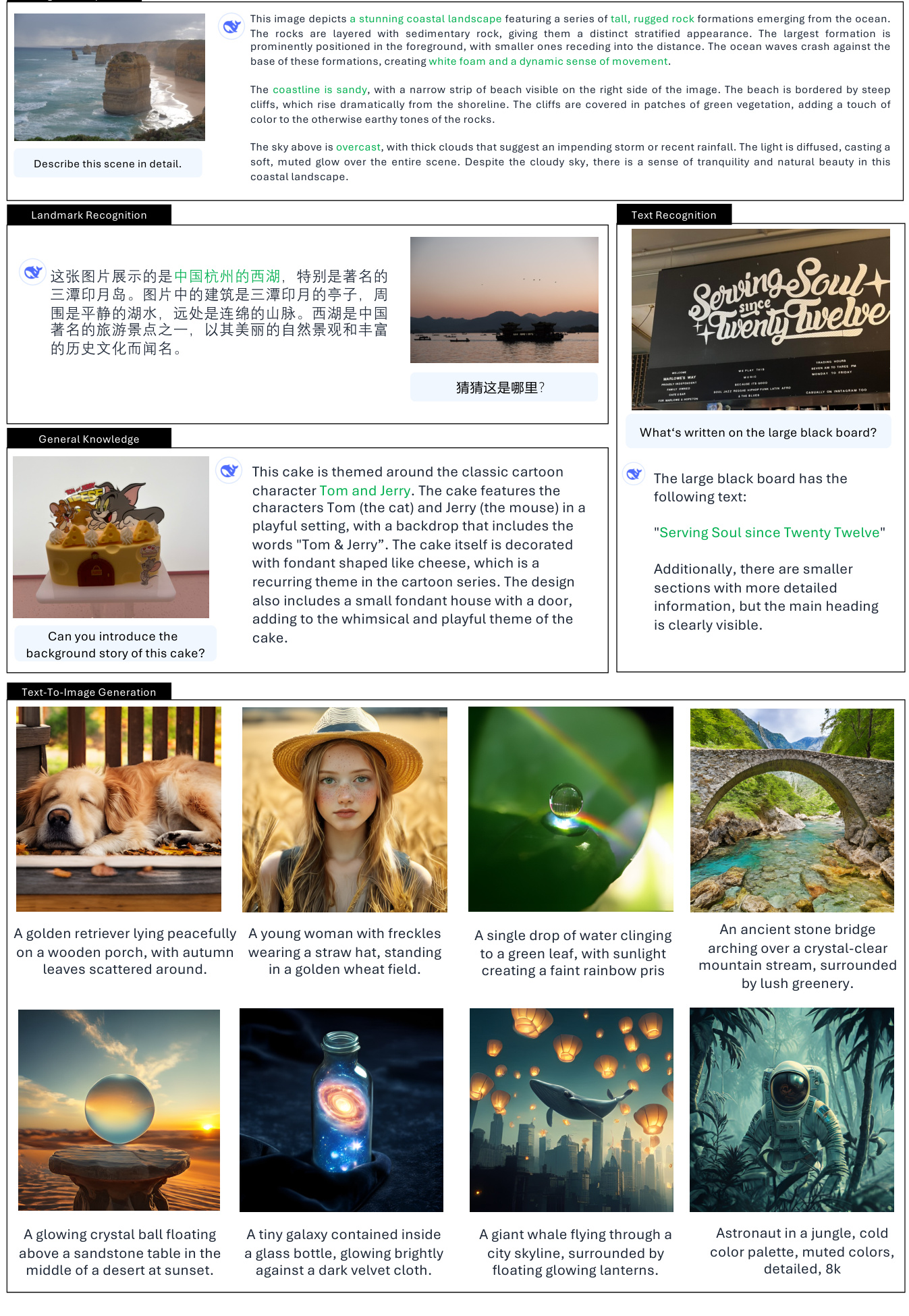

3. Janus real-world use scenarios

Visual comprehension functions:

- Image Description/Captioning.

- Detailed Scene Description: Generate detailed text descriptions based on the image content, including scene elements, objects, environment, etc. (Example: describing the Three Pools of the West Lake, the seaside landscape, etc.)

- Graphical Description: Be able to understand and describe information on graphs and charts, such as bar graphs to represent data and analyze trends. (Example: Interpreting the "Kid's Favourite Fruits" bar graph)

- Object Recognition/Classification.

- Identify types of objects in an image: Be able to recognize and list categories of objects that appear in an image. (Example: Identify the type of fruit in the image)

- Object Counting.

- Accurately count the number of objects in an image: Ability to accurately count the number of specific objects in an image. (Example: count the number of penguins in the image)

- Landmark Recognition.

- Recognize famous landmarks in images: Be able to recognize landmarks or locations that appear in images. (Example: Recognize the Three Pools of the West Lake)

- Text Recognition/OCR.

- Recognize text content in images: Be able to recognize text appearing in an image and extract text information. (Example: Recognize "Serving Soul since Twenty Twelve" on the board)

- Visual Question Answering.

- Answer questions based on image content: Be able to understand the content of an image and give a reasonable answer based on the user's question. (Example: Ask "What fruit is in the picture?" in response to an image)

- Visual Reasoning/Knowledge Integration.

- Understand the meanings and associations behind images: Be able to engage in deeper visual reasoning and contextualize knowledge. (Examples: explaining the humor in the Mona Lisa dog picture, understanding the cartoon themed context of the cake)

- Code Generation (Python for Plotting).

- Generate code based on user commands: Understand user requirements for charting and generate Python code accordingly. (Example: Generate Python code to draw a bar chart)

Text-to-image generation function:

- Text-Guided Image Generation.

- Generate images based on text descriptions: Can generate semantically related images based on text prompts entered by the user.

- Creative Image Generation: Ability to understand abstract and imaginative text prompts to generate creative and artistic images. (Examples: flying whale, cosmic nebula corgi, etc.)

- Stylized Image Generation: Generate images with specific artistic styles according to the style description in the text prompts. (Examples: Renaissance style church, Chinese ink painting style mountain village, etc.)

- Simple Text Generation on Images: Ability to generate images with simple text elements. (Example: writing "Hello" on a blackboard)

Examples of practical application scenarios:

- Intelligent Assistant: Acts as a multimodal intelligent assistant that understands user uploaded images and conducts Q&A, descriptions, analysis, etc.

- Content Creation: Assist content creators in quickly generating high-quality image materials, such as social media graphics, article illustrations, and more.

- Educational applications: Used in image recognition teaching, chart interpretation teaching, etc. to help students understand visual information.

- Information Retrieval: Provides richer retrieval results through image search combined with text comprehension and generation capabilities.

- Artistic Creation: As a creative tool, it assists artists to create images and explore new forms of visual expression.

Parameter characteristics and limitations to note:

- Image Resolution Limitations: The current model training and testing is mainly based on 384x384 resolution images, which may have limitations for scenarios that require higher resolution.

- Detail finesse: Although the image is semantically rich, there may still be room for improvement in detail finesse due to the resolution and reconstruction loss of the vision tokenizer, e.g., small-sized face areas may not be fine enough.

4. Summary and outlook

The Janus family of models, from JanusFlow to Janus-Pro, demonstrates the potential for continued breakthroughs in unified multimodal understanding and generation. JanusFlow laid the foundation for a unified architecture, while Janus-Pro achieved a leap in performance through training strategy optimization, data scale-up, and model size upgrades. The success of Janus-Pro shows that scaling data and model size is key to improving the performance of unified models. The evolution of the Janus model family not only advances multimodal models, but also lays a solid foundation for building more general and intelligent AI systems.

Full paper: "Janus-Pro: Unified Multimodal Understanding and Generation with Data and Model Scaling"

Authors: Xiaokang Chen, Zhiyu Wu, Xingchao Liu, Zizheng Pan, Wen Liu, Zhenda Xie, Xingkai Yu, Chong Ruan

Project page: https://github.com/deepseek-ai/Janus

Abstract

In this work, we introduce Janus-Pro, an enhanced version of the previous Janus model. Specifically, Janus-Pro incorporates (1) an optimized training strategy, (2) expanded training data, and (3) scaling to a larger model size. With these improvements, Janus-Pro achieves significant advances in both multimodal understanding and text-to-image instruction-following capabilities, while also enhancing the stability of text-to-image generation. We hope that this work will inspire further exploration in this area. The code and models are publicly available.

1. Introduction

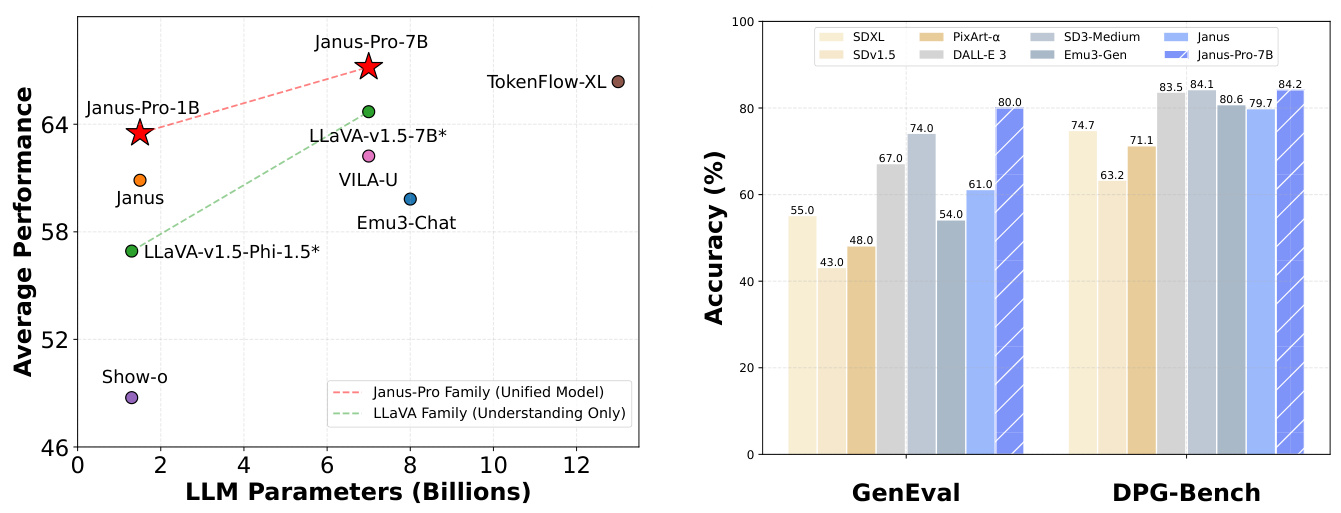

(a) Average performance on four multimodal understanding benchmarks. (b) Performance on text-to-image instruction-following benchmarks.

Figure 1 | Multimodal understanding and visual generation results for Janus-Pro. For multimodal understanding, we average the accuracies of POPE, MME-Perception, GQA, and MMMU. The MME-Perception score is divided by 20 to scale it to the range [0, 100]. For visual generation, we evaluate performance on two instruction-following benchmarks, GenEval and DPG-Bench. Overall, Janus-Pro outperforms previous state-of-the-art unified multimodal models as well as some task-specific models. Best viewed on screen.
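
The averaging rule in the Figure 1 caption is simple arithmetic and can be written out as below. This is only a sketch: the function name is hypothetical, and the input numbers are made-up placeholders chosen to keep the arithmetic easy to follow, not reported results. Dividing by 20 to reach [0, 100] implies that the raw MME-Perception score has a maximum of 2000.

```python
def average_understanding_score(pope, mme_perception, gqa, mmmu):
    """Average four benchmark scores after rescaling MME-Perception by 1/20."""
    scaled_mme = mme_perception / 20.0  # maps the raw score onto [0, 100]
    return (pope + scaled_mme + gqa + mmmu) / 4.0


# Placeholder inputs, NOT actual Janus-Pro scores.
example = average_understanding_score(
    pope=80.0, mme_perception=1600.0, gqa=60.0, mmmu=40.0
)
print(example)  # 65.0
```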

1. a simple photo of an orange tangerine 2. a clean blackboard with a green surface and the word "Hello" written precisely and clearly in white chalk 3. a close-up of a sunflower symbolizing prosperity, with green stems and leaves, petals in full bloom, and a bee resting on it, its wings glistening in the sun.

Figure 2 | Comparison of text-to-image generation between Janus-Pro and its predecessor, Janus. Janus-Pro delivers more stable outputs for short prompts, with improved visual quality, richer details, and the ability to generate simple text. The image resolution is 384x384. Best viewed on screen.

Significant progress has recently been made in unified multimodal understanding and generation models [30, 40, 45, 46, 48, 50, 54, 55]. These approaches have been shown to enhance instruction following in visual generation tasks while reducing model redundancy. Most of them use the same visual encoder to process inputs for both multimodal understanding and generation tasks. Because the two tasks require different representations, this typically results in suboptimal multimodal understanding performance. To address this problem, Janus [46] proposed decoupled visual encoding, which alleviates the conflict between the multimodal understanding and generation tasks and achieves excellent performance on both.

As a pioneering model, Janus was validated at the 1B parameter scale. However, due to limited training data and relatively small model capacity, it exhibits certain shortcomings, such as suboptimal performance on short-prompt image generation and unstable text-to-image generation quality. In this paper, we present Janus-Pro, an enhanced version of Janus that improves on three aspects: training strategy, data, and model size. The Janus-Pro family comes in two model sizes, 1B and 7B, demonstrating the scalability of the visual encoding-decoding method.

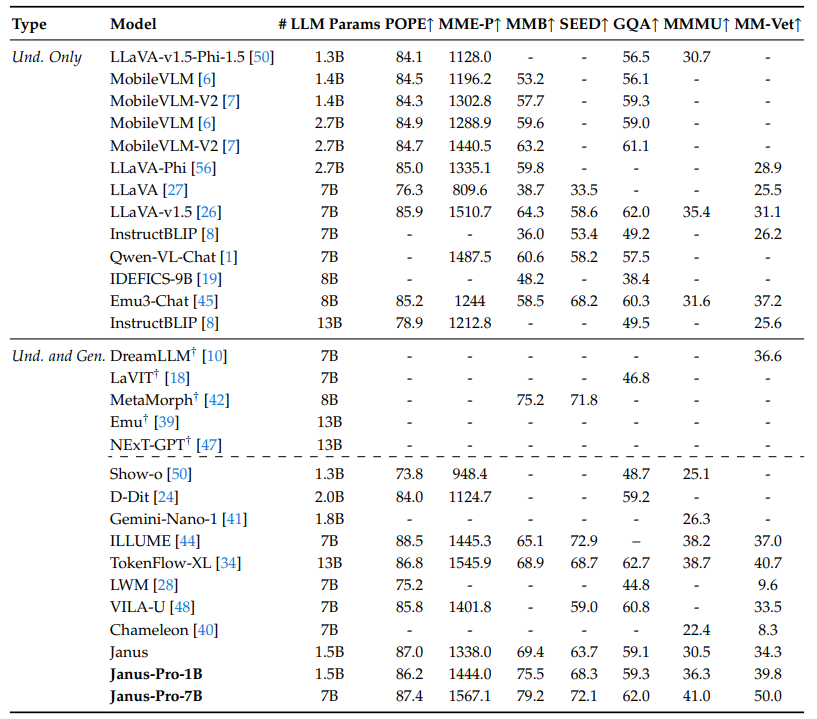

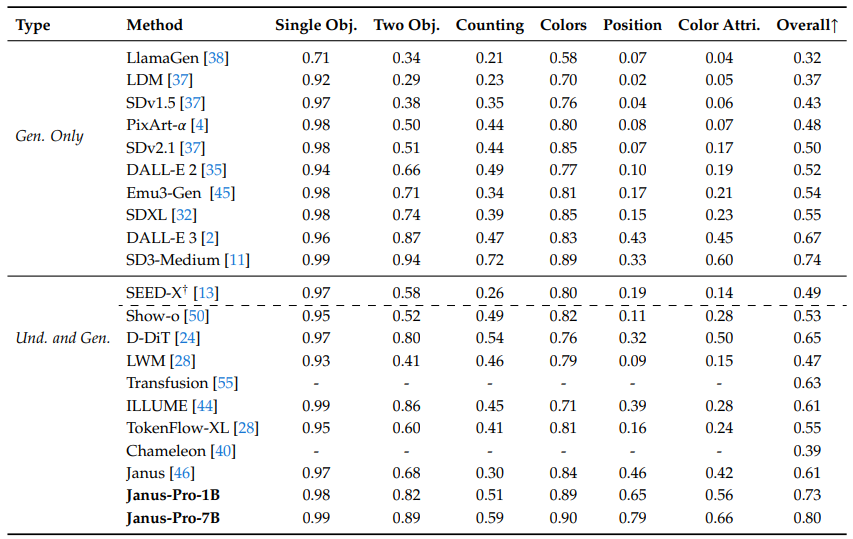

We evaluate Janus-Pro on multiple benchmarks, and the results show superior multimodal understanding and significantly improved text-to-image instruction-following performance. Specifically, Janus-Pro-7B scores 79.2 on the multimodal understanding benchmark MMBench [29], surpassing previous state-of-the-art unified multimodal models such as Janus [46] (69.4), TokenFlow [34] (68.9), and MetaMorph [42] (75.2). In addition, Janus-Pro-7B scores 0.80 on the text-to-image instruction-following leaderboard GenEval [14], outperforming Janus [46] (0.61), DALL-E 3 (0.67), and Stable Diffusion 3 Medium [11] (0.74).

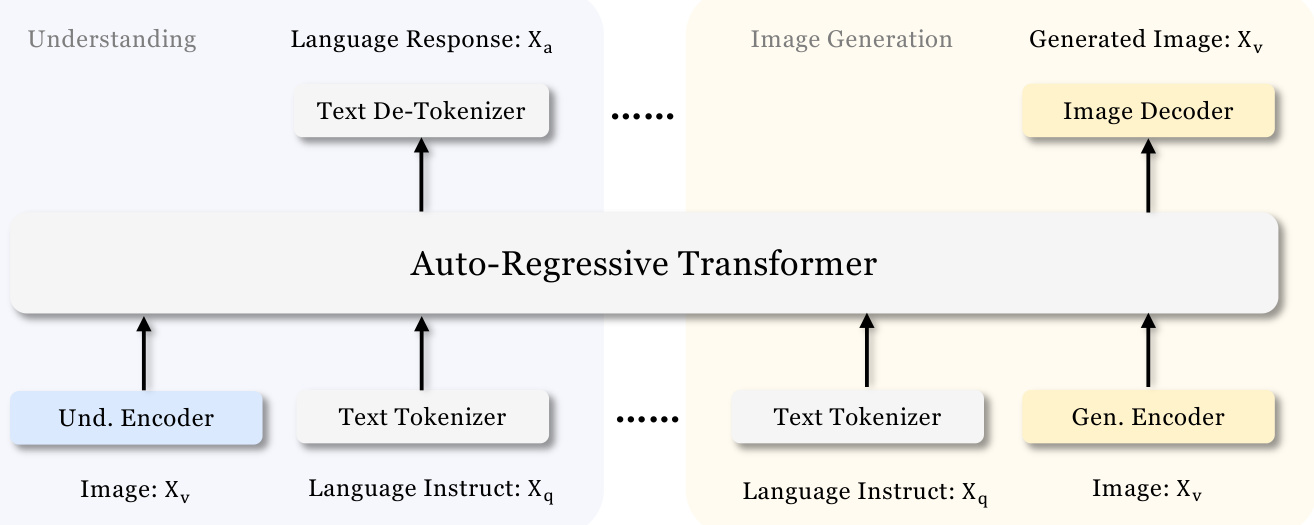

Figure 3 | Architecture of Janus-Pro. We decouple visual encoding for multimodal understanding and visual generation. "Und. Encoder" and "Gen. Encoder" are abbreviations for "Understanding Encoder" and "Generation Encoder" respectively. Best viewed on screen.

2. Methodology

2.1 Architecture

The architecture of Janus-Pro is shown in Figure 3 and is the same as that of Janus [46]. The core design principle of the overall architecture is to decouple visual encoding for multimodal understanding and generation. We apply independent encoding methods to convert raw inputs into features, which are then processed by a unified autoregressive transformer. For multimodal understanding, we use the SigLIP [53] encoder to extract high-dimensional semantic features from images. These features are flattened from a 2-D grid into a 1-D sequence, and an understanding adapter maps them into the input space of the LLM. For visual generation, we use the VQ tokenizer from [38] to convert images into discrete IDs. After the ID sequence is flattened to 1-D, a generation adapter maps the codebook embedding corresponding to each ID into the input space of the LLM. We then concatenate these feature sequences to form a multimodal feature sequence, which is fed into the LLM for processing. In addition to the LLM's built-in prediction head, we use a randomly initialized prediction head for image prediction in visual generation tasks. The entire model follows an autoregressive framework.
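
The data flow in this paragraph (two separate visual paths, each flattened to 1-D, mapped by its own adapter, then concatenated for the LLM) can be traced with a toy example. Everything here is an illustrative assumption: the dimensions are tiny, the features are random, each adapter is a single linear layer rather than a two-layer MLP, and the concatenation order is arbitrary.

```python
import random


def flatten_grid(grid):
    """Row-major flatten: grid[row][col] -> 1-D list of items."""
    return [item for row in grid for item in row]


def make_linear(in_dim, out_dim, rng):
    """Toy adapter weights: one random linear layer (out_dim x in_dim)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]


def apply_linear(weight, vec):
    return [sum(w * x for w, x in zip(row, vec)) for row in weight]


rng = random.Random(0)
LLM_DIM, UND_DIM, CODE_DIM, CODEBOOK_SIZE = 8, 6, 4, 16  # toy sizes (hypothetical)

# Understanding path: a 2x2 grid of continuous features (stand-in for SigLIP output).
und_grid = [[[rng.random() for _ in range(UND_DIM)] for _ in range(2)] for _ in range(2)]
und_adapter = make_linear(UND_DIM, LLM_DIM, rng)
und_tokens = [apply_linear(und_adapter, v) for v in flatten_grid(und_grid)]

# Generation path: a 2x2 grid of discrete IDs (stand-in for VQ tokenizer output).
id_grid = [[3, 7], [7, 12]]
codebook = [[rng.random() for _ in range(CODE_DIM)] for _ in range(CODEBOOK_SIZE)]
gen_adapter = make_linear(CODE_DIM, LLM_DIM, rng)
gen_tokens = [apply_linear(gen_adapter, codebook[i]) for i in flatten_grid(id_grid)]

# Placeholder text embeddings, already in the LLM input space.
text_tokens = [[0.0] * LLM_DIM for _ in range(3)]

# One multimodal sequence in the LLM input space.
sequence = text_tokens + und_tokens + gen_tokens
print(len(sequence), len(sequence[0]))  # 11 8
```

Note that the two occurrences of ID 7 map to identical vectors, since the generation path is a codebook lookup followed by the same adapter, whereas the understanding path carries continuous features.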

2.2 Optimized training strategies

The previous version of Janus uses a three-stage training process. The first stage focuses on training the adapters and the image head. The second stage performs unified pre-training, during which all components except the understanding encoder and the generation encoder have their parameters updated. The third stage is supervised fine-tuning, which builds on the second stage by additionally unlocking the parameters of the understanding encoder during training. This training strategy has some issues. In the second stage, Janus follows PixArt [4] and splits the training of text-to-image capability into two parts. The first part trains on ImageNet [9] data, using image category names as prompts for text-to-image generation, with the goal of modeling pixel dependencies. The second part trains on regular text-to-image data. In the implementation, 66.67% of the text-to-image training steps in the second stage are allocated to the first part. However, through further experiments, we found this strategy to be suboptimal, leading to significant computational inefficiency.
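
The three-stage schedule above amounts to a table of which components are trainable in each stage. A minimal sketch follows; the component names are hypothetical labels chosen for illustration, not identifiers from the Janus codebase.

```python
# Hypothetical component labels for the parts named in the text.
COMPONENTS = (
    "understanding_encoder",
    "generation_encoder",
    "understanding_adapter",
    "generation_adapter",
    "image_head",
    "llm",
)

# Trainable components per stage, as described for the original Janus schedule.
TRAINABLE = {
    1: {"understanding_adapter", "generation_adapter", "image_head"},
    2: {"understanding_adapter", "generation_adapter", "image_head", "llm"},
    3: {
        "understanding_adapter",
        "generation_adapter",
        "image_head",
        "llm",
        "understanding_encoder",
    },
}


def frozen_components(stage):
    """Return the components whose parameters stay fixed in the given stage."""
    return [name for name in COMPONENTS if name not in TRAINABLE[stage]]


for stage in (1, 2, 3):
    print(stage, frozen_components(stage))
```

Under this reading, the generation encoder stays frozen in all three stages, and the LLM is only frozen in the first.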

We made two changes to address this issue.

- Longer training in Stage I: We increase the number of training steps in the first stage, allowing sufficient training on the ImageNet dataset. Our findings show that even with the LLM parameters fixed, the model can effectively model pixel dependencies and generate reasonable images from category names.

- Focused training in Stage II: In the second stage, we drop the ImageNet data and directly use regular text-to-image data to train the model to generate images from dense descriptions. This redesigned approach allows the second stage to use text-to-image data more efficiently, thereby improving training efficiency and overall performance.

We also adjust the data ratio across the different types of datasets in the third-stage supervised fine-tuning, changing the proportion of multimodal understanding data, pure text data, and text-to-image data from 7:3:10 to 5:1:4. By slightly reducing the proportion of text-to-image data, we observe that this adjustment improves multimodal understanding performance while maintaining strong visual generation capability.
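
To make the ratio change concrete, the snippet below converts each ratio into the share of the training mix that each data type receives. It is a worked calculation only; the helper name and the labels are hypothetical.

```python
from fractions import Fraction


def mixture_shares(ratio):
    """Convert a ratio such as (7, 3, 10) into exact per-type shares."""
    total = sum(ratio)
    return [Fraction(part, total) for part in ratio]


NAMES = ("multimodal understanding", "text-only", "text-to-image")
old = mixture_shares((7, 3, 10))
new = mixture_shares((5, 1, 4))

for name, before, after in zip(NAMES, old, new):
    print(f"{name}: {float(before):.0%} -> {float(after):.0%}")
```

The shares move from 35%, 15%, and 50% to 50%, 10%, and 40%, so text-to-image data drops from half of the mix to two fifths while multimodal understanding data rises to half.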

2.3 Data extensions

We scale up the training data used by Janus for both multimodal understanding and visual generation.

- Multimodal understanding: For the second-stage pre-training data, we follow DeepSeek-VL2 [49] and add about 90 million samples. These include image captioning datasets (e.g., YFCC [31]), as well as data for table, chart, and document understanding (e.g., Docmatix [20]). For the third-stage supervised fine-tuning data, we also add further datasets from DeepSeek-VL2, such as meme understanding, Chinese dialogue data, and datasets designed to enhance the dialogue experience. These additions significantly expand the model's capabilities, enriching its ability to handle a variety of tasks while improving the overall dialogue experience.

- Visual generation: We observe that the real-world data used in the previous version of Janus lacks quality and contains significant noise, which often leads to instability in text-to-image generation and aesthetically poor outputs. In Janus-Pro, we add approximately 72 million synthetic aesthetic data samples, bringing the ratio of real to synthetic data to 1:1 in the unified pre-training stage. The prompts for these synthetic data samples are publicly available, such as those in [43]. Experiments show that the model converges faster when trained on synthetic data, and the resulting text-to-image outputs are not only more stable but also of significantly better aesthetic quality.

2.4 Model extensions

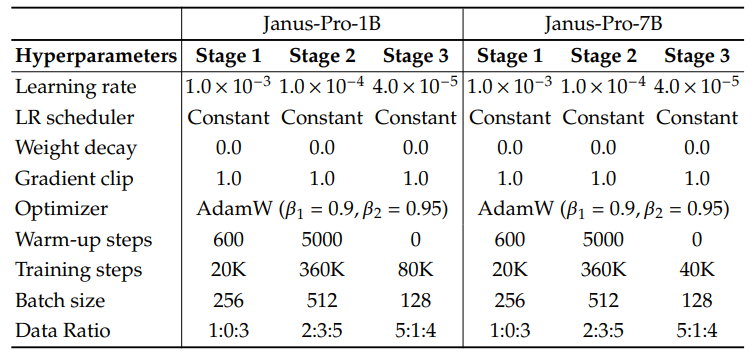

The previous version of Janus validated the effectiveness of decoupled visual encoding using a 1.5B LLM. In Janus-Pro, we scale the model up to 7B; the hyperparameters of the 1.5B and 7B LLMs are detailed in Table 1. We observe that with the larger LLM, the losses for both multimodal understanding and visual generation converge significantly faster than with the smaller model. This finding further validates the strong scalability of this approach.

Table 1 | Architecture configuration of Janus-Pro. We list the hyperparameters of the architecture.

| | Janus-Pro-1B | Janus-Pro-7B |
| --- | --- | --- |
| Vocabulary size | 100K | 100K |
| Embedding size | 2048 | 4096 |
| Context window | 4096 | 4096 |
| Attention heads | 16 | 32 |
| Layers | 24 | 30 |
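
As a quick consistency check on Table 1, the rows can be written as a small config object, reading the last two rows as the number of attention heads and the number of layers. This is a sketch under assumptions: the class and field names are hypothetical, the vocabulary size is entered as 100,000 because the table only gives "100K", and `head_dim` assumes the embedding is split evenly across heads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LLMConfig:
    vocab_size: int       # Table 1 lists "100K"; the exact value is not given there
    embed_size: int
    context_window: int
    num_heads: int
    num_layers: int

    @property
    def head_dim(self):
        # Assumes standard multi-head attention with an even split across heads.
        return self.embed_size // self.num_heads


JANUS_PRO_1B = LLMConfig(100_000, 2048, 4096, 16, 24)
JANUS_PRO_7B = LLMConfig(100_000, 4096, 4096, 32, 30)

print(JANUS_PRO_1B.head_dim, JANUS_PRO_7B.head_dim)  # 128 128
```

Under that assumption both sizes use the same per-head dimension, so the 7B model widens the embedding by doubling the head count rather than enlarging each head.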

Table 2 | Detailed hyperparameters for training Janus-Pro. The data ratio refers to the ratio of multimodal understanding data, pure text data, and visual generation data.

3. Experiments

3.1 Implementation details

In our experiments, we use DeepSeek-LLM (1.5B and 7B) [3] as the base language model, with a maximum supported sequence length of 4096. For the vision encoder used in understanding tasks, we choose SigLIP-Large-Patch16-384 [53]. The generation encoder has a codebook of size 16,384 and downsamples images by a factor of 16. Both the understanding adapter and the generation adapter are two-layer MLPs. Detailed hyperparameters for each stage are provided in Table 2. All images are resized to 384x384 pixels. For multimodal understanding data, we resize the long side of the image to 384 and pad the short side with the background color (RGB: 127, 127, 127) to reach 384. For visual generation data, the short side is resized to 384 and the long side is cropped to 384. We use sequence packing during training to improve training efficiency, and we mix all data types according to the specified ratios in a single training step. Janus-Pro is trained and evaluated using HAI-LLM [15], a lightweight and efficient distributed training framework built on PyTorch. The entire training process took approximately 7/14 days for the 1.5B/7B model on a cluster of 16/32 nodes, each equipped with 8 Nvidia A100 (40GB) GPUs.
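
The two resizing rules above differ only in which side is matched to 384, and the geometry can be worked out without any image library. The sketch below computes the resized dimensions plus the padding or crop box. The function names are hypothetical, and centering the padding and the crop is an assumption, since the text does not say where they are applied.

```python
PAD_COLOR = (127, 127, 127)  # background color given in the text


def understanding_layout(width, height, size=384):
    """Resize the long side to `size`, then pad the short side up to `size`."""
    scale = size / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    pad_w, pad_h = size - new_w, size - new_h
    # Assumption: padding is split evenly so the image is centered.
    left, top = pad_w // 2, pad_h // 2
    return {
        "resized": (new_w, new_h),
        "pad": (left, top, pad_w - left, pad_h - top),  # left, top, right, bottom
    }


def generation_layout(width, height, size=384):
    """Resize the short side to `size`, then crop the long side down to `size`."""
    scale = size / min(width, height)
    new_w = max(size, round(width * scale))
    new_h = max(size, round(height * scale))
    # Assumption: the crop is centered.
    left, top = (new_w - size) // 2, (new_h - size) // 2
    return {
        "resized": (new_w, new_h),
        "crop_box": (left, top, left + size, top + size),
    }


print(understanding_layout(768, 512))
print(generation_layout(768, 512))
```

For a 768x512 input, the understanding path keeps the whole image at 384x256 and adds 64 rows of gray above and below, while the generation path resizes to 576x384 and discards 96 columns on each side.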

3.2 Evaluation setup

Multimodal Understanding: To assess multimodal understanding capabilities, we evaluate our model on widely recognized image-based vision-language benchmarks, including GQA [17], POPE [23], MME [12], SEED [21], MMB [29], MM-Vet [51], and MMMU [52].

Table 3 | Comparison with state-of-the-art methods on multimodal understanding benchmarks. "Und." and "Gen." denote "Understanding" and "Generation" respectively. Models that use external pre-trained diffusion models are marked with †.

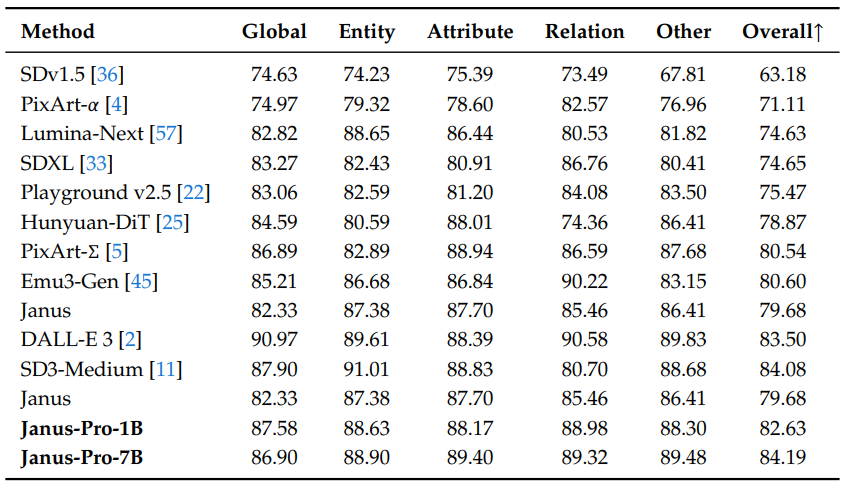

Visual Generation: To evaluate visual generation capabilities, we use GenEval [14] and DPG-Bench [16]. GenEval is a challenging text-to-image generation benchmark designed to reflect the comprehensive generative abilities of visual generation models through a detailed instance-level analysis of their compositional capabilities. DPG-Bench (Dense Prompt Graph Benchmark) is a comprehensive dataset of 1065 lengthy, dense prompts, designed to assess the intricate semantic alignment capabilities of text-to-image models.

3.3 Comparison with state-of-the-art

Multimodal Understanding Performance: We compare the proposed approach with state-of-the-art unified models and understanding-only models in Table 3. Janus-Pro achieves the overall best results. This can be attributed to decoupling the visual encoding for multimodal understanding and generation, which mitigates the conflict between these two tasks. Janus-Pro remains highly competitive even when compared to much larger models. For example, Janus-Pro-7B outperforms TokenFlow-XL (13B) on all benchmarks except GQA.

Table 4 | Evaluation of text-to-image generation ability on the GenEval benchmark. "Und." and "Gen." denote "Understanding" and "Generation" respectively. Models that use external pre-trained diffusion models are marked with †.

Table 5 | Performance on DPG-Bench. Except for Janus and Janus-Pro, all methods in this table are generation-only models.

Visual Generation Performance: We report visual generation performance on GenEval and DPG-Bench. As shown in Table 4, Janus-Pro-7B obtains an overall accuracy of 80% on GenEval, outperforming all other unified and generation-only methods, e.g., Transfusion [55] (63%), SD3-Medium (74%), and DALL-E 3 (67%). This shows that our method has better instruction-following capability. As shown in Table 5, Janus-Pro achieves a score of 84.19 on DPG-Bench, surpassing all other methods. This shows that Janus-Pro excels at following dense instructions for text-to-image generation.

3.4 Qualitative results

We show multimodal understanding results in Figure 4. Janus-Pro exhibits impressive comprehension when handling inputs from various contexts, showcasing its powerful capabilities. We also show some text-to-image generation results in the lower part of Figure 4. The images generated by Janus-Pro-7B are highly realistic and contain rich detail even though the resolution is only $384\times384$. For imaginative and creative scenes, Janus-Pro-7B accurately captures the semantic information in the prompts and generates reasonable, coherent images.

Figure 4 | Qualitative results of multimodal understanding and visual generation capabilities. The model is Janus-Pro-7B, and the generated images have a resolution of $384\times384$. Best viewed on screen.

4. Conclusion

In this paper, we improve Janus in three aspects: training strategy, data, and model size. These enhancements lead to significant advances in both multimodal understanding and text-to-image instruction following. However, Janus-Pro still has certain limitations. For multimodal understanding, the input resolution is limited to $384\times384$, which affects its performance on fine-grained tasks such as OCR. For text-to-image generation, the low resolution, combined with the reconstruction loss introduced by the vision tokenizer, results in images that are semantically rich but still lack fine detail. For example, small facial regions that occupy limited image space may appear under-detailed. Increasing the image resolution could mitigate these issues.

References

[1] J. Bai, S. Bai, S. Yang, S. Wang, S. Tan, P. Wang, J. Lin, C. Zhou, and J. Zhou. Qwen-VL: A frontier large vision-language model with versatile abilities. arXiv preprint arXiv:2308.12966, 2023.

[2] J. Betker, G. Goh, L. Jing, T. Brooks, J. Wang, L. Li, L. Ouyang, J. Zhuang, J. Lee, Y. Guo, et al. Improving image generation with better captions. Computer Science. https://cdn.openai.com/papers/dall-e-3.pdf, 2(3):8, 2023.

[3] X. Bi, D. Chen, G. Chen, S. Chen, D. Dai, C. Deng, H. Ding, K. Dong, Q. Du, Z. Fu, et al. DeepSeek LLM: Scaling open-source language models with longtermism. arXiv preprint arXiv:2401.02954, 2024.

[4] J. Chen, J. Yu, C. Ge, L. Yao, E. Xie, Y. Wu, Z. Wang, J. Kwok, P. Luo, H. Lu, et al. PixArt-α: Fast training of diffusion transformer for photorealistic text-to-image synthesis. arXiv preprint arXiv:2310.00426, 2023.

[5] J. Chen, C. Ge, E. Xie, Y. Wu, L. Yao, X. Ren, Z. Wang, P. Luo, H. Lu, and Z. Li. PixArt-Sigma: Weak-to-strong training of diffusion transformer for 4K text-to-image generation. arXiv preprint arXiv:2403.04692, 2024.

[6] X. Chu, L. Qiao, X. Lin, S. Xu, Y. Yang, Y. Hu, F. Wei, X. Zhang, B. Zhang, X. Wei, et al. MobileVLM: A fast, reproducible and strong vision language assistant for mobile devices. arXiv preprint arXiv:2312.16886, 2023.

[7] X. Chu, L. Qiao, X. Zhang, S. Xu, F. Wei, Y. Yang, X. Sun, Y. Hu, X. Lin, B. Zhang, et al. MobileVLM V2: Faster and stronger baseline for vision language model. arXiv preprint arXiv:2402.03766, 2024.

[8] W. Dai, J. Li, D. Li, A. M. H. Tiong, J. Zhao, W. Wang, B. Li, P. Fung, and S. Hoi. InstructBLIP: Towards general-purpose vision-language models with instruction tuning, 2023.

[9] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, pages 248-255. IEEE, 2009.

[10] R. Dong, C. Han, Y. Peng, Z. Qi, Z. Ge, J. Yang, L. Zhao, J. Sun, H. Zhou, H. Wei, et al. DreamLLM: Synergistic multimodal comprehension and creation. arXiv preprint arXiv:2309.11499, 2023.

[11] P. Esser, S. Kulal, A. Blattmann, R. Entezari, J. Müller, H. Saini, Y. Levi, D. Lorenz, A. Sauer, F. Boesel, D. Podell, T. Dockhorn, Z. English, K. Lacey, A. Goodwin, Y. Marek, and R. Rombach. Scaling rectified flow transformers for high-resolution image synthesis, 2024. URL https://arxiv.org/abs/2403.03206.

[12] C. Fu, P. Chen, Y. Shen, Y. Qin, M. Zhang, X. Lin, J. Yang, X. Zheng, K. Li, X. Sun, et al. MME: a comprehensive evaluation benchmark for multimodal large language models. arXiv preprint arXiv:2306.13394, 2023.

[13] Y. Ge, S. Zhao, J. Zhu, Y. Ge, K. Yi, L. Song, C. Li, X. Ding, and Y. Shan. SEED-X: Multimodal models with unified multi-granularity comprehension and generation. arXiv preprint arXiv:2404.14396, 2024.

[14] D. Ghosh, H. Hajishirzi, and L. Schmidt. GenEval: An object-focused framework for evaluating text-to-image alignment. Advances in Neural Information Processing Systems, 36, 2024.

[15] High-flyer. HAI-LLM: An Efficient and Lightweight Large Model Training Tool, 2023. URL https://www.high-flyer.cn/en/blog/hai-llm.

[16] X. Hu, R. Wang, Y. Fang, B. Fu, P. Cheng, and G. Yu. ELLA: Equip diffusion models with LLM for enhanced semantic alignment. arXiv preprint arXiv:2403.05135, 2024.

[17] D. A. Hudson and C. D. Manning. GQA: A new dataset for real-world visual reasoning and compositional question answering. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 6700-6709, 2019.

[18] Y. Jin, K. Xu, L. Chen, C. Liao, J. Tan, B. Chen, C. Lei, A. Liu, C. Song, X. Lei, et al. Unified language-vision pretraining in LLM with dynamic discrete visual tokenization. arXiv preprint arXiv:2309.04669, 2023.

[19] H. Laurençon, D. van Strien, S. Bekman, L. Tronchon, L. Saulnier, T. Wang, S. Karamcheti, A. Singh, G. Pistilli, Y. Jernite, et al. Introducing IDEFICS: An open reproduction of state-of-the-art visual language model, 2023. URL https://huggingface.co/blog/idefics.

[20] H. Laurençon, A. Marafioti, V. Sanh, and L. Tronchon. Building and better understanding vision-language models: Insights and future directions, 2024.

[21] B. Li, R. Wang, G. Wang, Y. Ge, Y. Ge, and Y. Shan. SEED-Bench: Benchmarking multimodal LLMs with generative comprehension. arXiv preprint arXiv:2307.16125, 2023.

[22] D. Li, A. Kamko, E. Akhgari, A. Sabet, L. Xu, and S. Doshi. Playground v2.5: Three insights towards enhancing aesthetic quality in text-to-image generation. arXiv preprint arXiv:2402.17245, 2024.

[23] Y. Li, Y. Du, K. Zhou, J. Wang, W. X. Zhao, and J.-R. Wen. Evaluating object hallucination in large vision-language models. arXiv preprint arXiv:2305.10355, 2023.

[24] Z. Li, H. Li, Y. Shi, A. B. Farimani, Y. Kluger, L. Yang, and P. Wang. Dual diffusion for unified image generation and understanding. arXiv preprint arXiv:2501.00289, 2024.

[25] Z. Li, J. Zhang, Q. Lin, J. Xiong, Y. Long, X. Deng, Y. Zhang, X. Liu, M. Huang, Z. Xiao, et al. Hunyuan-DiT: A powerful multi-resolution diffusion transformer with fine-grained Chinese understanding. arXiv preprint arXiv:2405.08748, 2024.

[26] H. Liu, C. Li, Y. Li, and Y. J. Lee. Improved baselines with visual instruction tuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 26296-26306, 2024.

[27] H. Liu, C. Li, Q. Wu, and Y. J. Lee. Visual instruction tuning. Advances in Neural Information Processing Systems, 36, 2024.

[28] H. Liu, W. Yan, M. Zaharia, and P. Abbeel. World model on million-length video and language with RingAttention. arXiv preprint arXiv:2402.08268, 2024.

[29] Y. Liu, H. Duan, Y. Zhang, B. Li, S. Zhang, W. Zhao, Y. Yuan, J. Wang, C. He, Z. Liu, et al. MMBench: Is your multi-modal model an all-around player? arXiv preprint arXiv:2307.06281, 2023.

[30] Y. Ma, X. Liu, X. Chen, W. Liu, C. Wu, Z. Wu, Z. Pan, Z. Xie, H. Zhang, X. Yu, L. Zhao, Y. Wang, J. Liu, and C. Ruan. JanusFlow: Harmonizing autoregression and rectified flow for unified multimodal understanding and generation, 2024.

[31] mehdidc. yfcc-huggingface. https://huggingface.co/datasets/mehdidc/yfcc15m, 2024.

[32] D. Podell, Z. English, K. Lacey, A. Blattmann, T. Dockhorn, J. Müller, J. Penna, and R. Rombach. SDXL: Improving latent diffusion models for high-resolution image synthesis. arXiv preprint arXiv:2307.01952, 2023.

[33] D. Podell, Z. English, K. Lacey, A. Blattmann, T. Dockhorn, J. Müller, J. Penna, and R. Rombach. SDXL: Improving latent diffusion models for high-resolution image synthesis. 2024.

[34] L. Qu, H. Zhang, Y. Liu, X. Wang, Y. Jiang, Y. Gao, H. Ye, D. K. Du, Z. Yuan, and X. Wu. TokenFlow: Unified image tokenizer for multimodal understanding and generation. arXiv preprint arXiv:2412.03069, 2024.

[35] A. Ramesh, P. Dhariwal, A. Nichol, C. Chu, and M. Chen. Hierarchical text-conditional image generation with CLIP latents. arXiv preprint arXiv:2204.06125, 1(2):3, 2022.

[36] R. Rombach, A. Blattmann, D. Lorenz, P. Esser, and B. Ommer. High-resolution image synthesis using latent diffusion models. 2022.

[37] R. Rombach, A. Blattmann, D. Lorenz, P. Esser, and B. Ommer. High-resolution image synthesis using latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 10684-10695, 2022.

[38] P. Sun, Y. Jiang, S. Chen, S. Zhang, B. Peng, P. Luo, and Z. Yuan. Autoregressive model beats diffusion: Llama for scalable image generation. arXiv preprint arXiv:2406.06525, 2024.

[39] Q. Sun, Q. Yu, Y. Cui, F. Zhang, X. Zhang, Y. Wang, H. Gao, J. Liu, T. Huang, and X. Wang. Generative pretraining in multimodality. arXiv preprint arXiv:2307.05222, 2023.

[40] C. Team. Chameleon: Mixed-modal early-fusion foundation models. arXiv preprint arXiv:2405.09818, 2024.

[41] G. Team, R. Anil, S. Borgeaud, Y. Wu, J.-B. Alayrac, J. Yu, R. Soricut, J. Schalkwyk, A. M. Dai, A. Hauth, et al. Gemini: A family of highly capable multimodal models. arXiv preprint arXiv:2312.11805, 2023.

[42] S. Tong, D. Fan, J. Zhu, Y. Xiong, X. Chen, K. Sinha, M. Rabbat, Y. LeCun, S. Xie, and Z. Liu. MetaMorph: Multimodal understanding and generation via instruction tuning. arXiv preprint arXiv:2412.14164, 2024.

[43] Vivym. Midjourney prompts dataset. https://huggingface.co/datasets/vivym/midjourney-prompts, 2023.

[44] C. Wang, G. Lu, J. Yang, R. Huang, J. Han, L. Hou, W. Zhang, and H. Xu. ILLUME: Illuminating your LLMs to see, draw, and self-enhance. arXiv preprint arXiv:2412.06673, 2024.

[45] X. Wang, X. Zhang, Z. Luo, Q. Sun, Y. Cui, J. Wang, F. Zhang, Y. Wang, Z. Li, Q. Yu, et al. Emu3: Next-token prediction is all you need. arXiv preprint arXiv:2409.18869, 2024.

[46] C. Wu, X. Chen, Z. Wu, Y. Ma, X. Liu, Z. Pan, W. Liu, Z. Xie, X. Yu, C. Ruan, et al. Janus: Decoupling visual encoding for unified multimodal understanding and generation. arXiv preprint arXiv:2410.13848, 2024.

[47] S. Wu, H. Fei, L. Qu, W. Ji, and T.-S. Chua. NExT-GPT: Any-to-any multimodal LLM. arXiv preprint arXiv:2309.05519, 2023.

[48] Y. Wu, Z. Zhang, J. Chen, H. Tang, D. Li, Y. Fang, L. Zhu, E. Xie, H. Yin, L. Yi, et al. VILA-U: A unified foundation model integrating visual understanding and generation. arXiv preprint arXiv:2409.04429, 2024.

[49] Z. Wu, X. Chen, Z. Pan, X. Liu, W. Liu, D. Dai, H. Gao, Y. Ma, C. Wu, B. Wang, et al. DeepSeek-VL2: Mixture-of-experts vision-language models for advanced multimodal understanding. arXiv preprint arXiv:2412.10302, 2024.

[50] J. Xie, W. Mao, Z. Bai, D. J. Zhang, W. Wang, K. Q. Lin, Y. Gu, Z. Chen, Z. Yang, and M. Z. Shou. Show-o: One single transformer to unify multimodal understanding and generation. arXiv preprint arXiv:2408.12528, 2024.

[51] W. Yu, Z. Yang, L. Li, J. Wang, K. Lin, Z. Liu, X. Wang, and L. Wang. MM-Vet: Evaluating large multimodal models for integrated capabilities. arXiv preprint arXiv:2308.02490, 2023.

[52] X. Yue, Y. Ni, K. Zhang, T. Zheng, R. Liu, G. Zhang, S. Stevens, D. Jiang, W. Ren, Y. Sun, et al. MMMU: A massive multi-discipline multimodal understanding and reasoning benchmark for expert AGI. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9556-9567, 2024.

[53] X. Zhai, B. Mustafa, A. Kolesnikov, and L. Beyer. Sigmoid loss for language image pre-training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 11975-11986, 2023.

[54] C. Zhao, Y. Song, W. Wang, H. Feng, E. Ding, Y. Sun, X. Xiao, and J. Wang. MonoFormer: One transformer for both diffusion and autoregression. arXiv preprint arXiv:2409.16280, 2024.

[55] C. Zhou, L. Yu, A. Babu, K. Tirumala, M. Yasunaga, L. Shamis, J. Kahn, X. Ma, L. Zettlemoyer, and O. Levy. Transfusion: Predict the next token and diffuse images with one multi-modal model. arXiv preprint arXiv:2408.11039, 2024.

[56] Y. Zhu, M. Zhu, N. Liu, Z. Ou, X. Mou, and J. Tang. LLaVA-Phi: Efficient multi-modal assistant with small language model. arXiv preprint arXiv:2401.02330, 2024.

[57] L. Zhuo, R. Du, H. Xiao, Y. Li, D. Liu, R. Huang, W. Liu, L. Zhao, F.-Y. Wang, Z. Ma, et al. Lumina-Next: Making Lumina-T2X stronger and faster with Next-DiT. arXiv preprint arXiv:2406.18583, 2024.
