DeepSeek-V3/R1 Inference System Overview (DeepSeek Open Source Week Day 6)

System design principles

The optimization objectives of the DeepSeek-V3/R1 inference service are higher throughput and lower latency.

To optimize these two goals, DeepSeek uses a solution called cross-node expert parallelism (EP).

- First, EP significantly scales the batch size, which improves GPU matrix computation efficiency and increases throughput.

- Second, EP reduces latency by distributing experts across multiple GPUs, with each GPU processing only a small fraction of the experts (reducing memory access requirements).

However, EP increases system complexity in two main ways:

- EP introduces cross-node communication. To optimize throughput, appropriate computational workflows must be designed to overlap communication with computation.

- EP involves multiple nodes, so it inherently requires data parallelism (DP) and load balancing between the different DP instances.

This article focuses on how DeepSeek addresses these challenges by:

- Using EP to scale the batch size,

- Hiding communication latency behind computation, and

- Performing load balancing.

Large-scale cross-node expert parallelism (EP)

DeepSeek-V3/R1 has a large number of experts, and only 8 of the 256 experts in each layer are activated. This high sparsity requires a very large total batch size so that each expert still receives a sufficient batch, which yields higher throughput and lower latency. Large-scale cross-node EP is therefore crucial.
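The relationship between total batch size and per-expert batch size can be illustrated with a little arithmetic. The sketch below assumes perfectly uniform routing, which real traffic does not guarantee; the function name and the sample batch sizes are illustrative only.

```python
def avg_tokens_per_expert(total_batch_tokens: int,
                          num_experts: int = 256,
                          top_k: int = 8) -> float:
    """Average number of tokens each routed expert sees in one MoE layer.

    Assumes every token activates `top_k` of `num_experts` experts and that
    routing is perfectly uniform (an idealization).
    """
    return total_batch_tokens * top_k / num_experts


if __name__ == "__main__":
    # Hypothetical batch sizes, chosen only to show the scaling.
    for batch in (256, 4096, 32768):
        print(f"{batch:>6} tokens in batch -> "
              f"{avg_tokens_per_expert(batch):.0f} tokens per expert")
```

With only 8 of 256 experts active per token, each expert sees on average 1/32 of the batch, which is why the total batch has to be large before the per-expert matrix multiplications become efficient.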

Since DeepSeek employs a prefill-decode disaggregated architecture, the prefill and decoding phases use different degrees of parallelism:

- Prefill phase [Routed Expert EP32, MLA/Shared Expert DP32]: Each deployment unit spans 4 nodes and has 32 redundant routed experts, with each GPU handling 9 routed experts and 1 shared expert.

- Decoding phase [Routed Expert EP144, MLA/Shared Expert DP144]: Each deployment unit spans 18 nodes and has 32 redundant routed experts, with each GPU managing 2 routed experts and 1 shared expert.
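The per-GPU expert counts follow directly from the node counts. The check below is a small sketch that assumes 8 GPUs per node (the figure given for H800 nodes in the statistics section) and 256 routed experts per layer; the class and field names are illustrative.

```python
from dataclasses import dataclass

GPUS_PER_NODE = 8      # assumption: taken from the H800 node description
ROUTED_EXPERTS = 256   # routed experts per layer, as stated in the text


@dataclass(frozen=True)
class DeploymentUnit:
    name: str
    nodes: int
    redundant_experts: int

    @property
    def gpus(self) -> int:
        return self.nodes * GPUS_PER_NODE

    @property
    def routed_experts_per_gpu(self) -> int:
        total_slots = ROUTED_EXPERTS + self.redundant_experts
        # The layout only works if the slots divide evenly across GPUs.
        assert total_slots % self.gpus == 0
        return total_slots // self.gpus


if __name__ == "__main__":
    for unit in (DeploymentUnit("prefill", nodes=4, redundant_experts=32),
                 DeploymentUnit("decode", nodes=18, redundant_experts=32)):
        print(f"{unit.name}: EP{unit.gpus}, "
              f"{unit.routed_experts_per_gpu} routed experts per GPU")
```

In both phases there are 256 + 32 = 288 routed-expert slots; spreading them over 32 GPUs gives 9 per GPU, and over 144 GPUs gives 2 per GPU.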

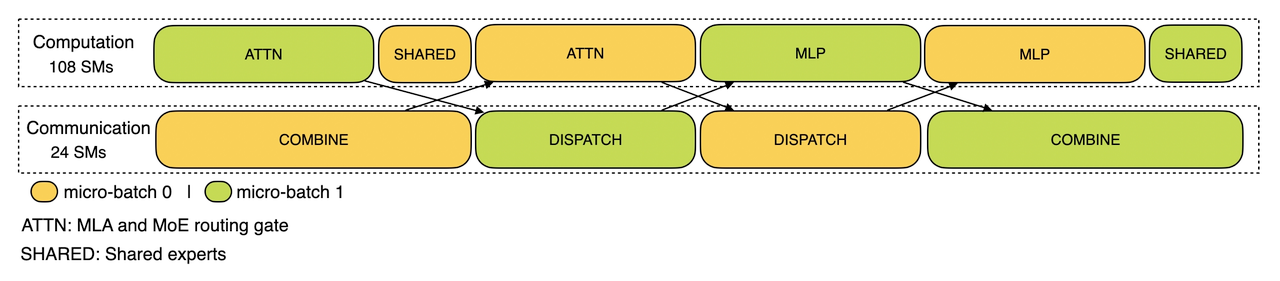

Computation-communication overlap

Large-scale cross-node EP introduces significant communication overhead. To mitigate this, DeepSeek employs a dual-batch overlap strategy: a batch of requests is split into two micro-batches to hide the communication cost and improve overall throughput. During the prefill phase, the two micro-batches execute alternately, so the communication cost of one micro-batch is hidden behind the computation of the other.
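The benefit of alternating two micro-batches can be seen in a toy timeline model. This is a simplified sketch, not DeepSeek's scheduler: it assumes one compute resource and one network resource per GPU, identical per-layer costs, and that splitting the batch halves both compute and communication time. All names and numbers are hypothetical.

```python
def sequential_time(layers: int, compute: float, comm: float) -> float:
    """One un-split batch: twice the per-micro-batch work, no overlap."""
    return layers * (2 * compute + 2 * comm)


def overlapped_time(layers: int, compute: float, comm: float) -> float:
    """Two micro-batches alternate; one communicates while the other computes."""
    compute_free = 0.0      # time at which the compute resource is next free
    network_free = 0.0      # time at which the network is next free
    ready = [0.0, 0.0]      # time at which each micro-batch can start its next layer
    for _ in range(layers):
        for mb in (0, 1):
            start = max(ready[mb], compute_free)
            compute_free = start + compute
            comm_start = max(compute_free, network_free)
            network_free = comm_start + comm
            ready[mb] = network_free
    return max(ready)


if __name__ == "__main__":
    layers, compute, comm = 2, 3.0, 2.0   # arbitrary time units
    print("no overlap:", sequential_time(layers, compute, comm))
    print("dual micro-batch overlap:", overlapped_time(layers, compute, comm))
```

In this model, once the pipeline is full, each step costs only the larger of the compute and communication times rather than their sum, which is the effect the dual-batch strategy aims for.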

Computation-communication overlap in the prefill phase

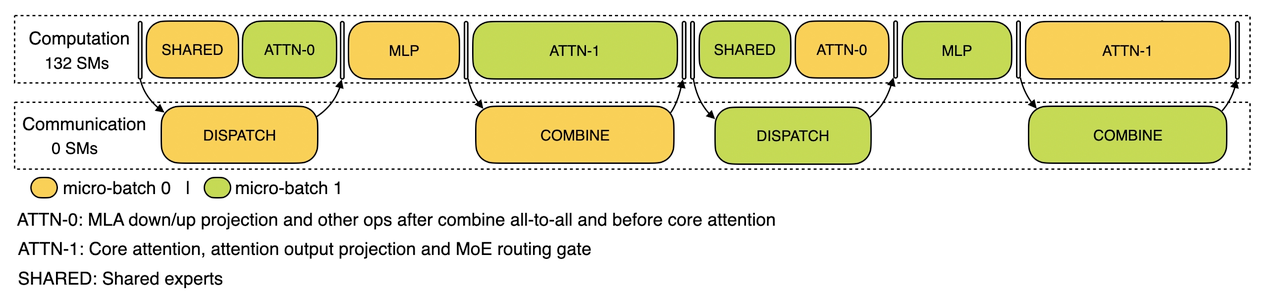

In the decoding phase, the execution times of the different stages are uneven. DeepSeek therefore subdivides the attention layer into two steps and uses a 5-stage pipeline to achieve seamless computation-communication overlap.

Computation-communication overlap in the decoding phase

For more detailed information on DeepSeek's computation-communication overlap mechanism, please visit https://github.com/deepseek-ai/profile-data

Achieving optimal load balancing

Massive parallelism (including DP and EP) poses a key challenge: if a single GPU is overloaded with computation or communication, it becomes a performance bottleneck, slowing down the entire system while the other GPUs are idle. To maximize resource utilization, DeepSeek strives to balance compute and communication loads across all GPUs.
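A minimal sketch of why one overloaded GPU hurts the whole system: in a synchronized step, every GPU waits for the slowest one, so the step time is the maximum load and the rest of the capacity is wasted. The load values below are made up for illustration.

```python
def step_time(loads: list[float]) -> float:
    """A synchronized step finishes only when the most loaded GPU finishes."""
    return max(loads)


def utilization(loads: list[float]) -> float:
    """Fraction of total GPU time spent on useful work during one step."""
    return sum(loads) / (len(loads) * max(loads))


if __name__ == "__main__":
    imbalanced = [10.0, 10.0, 10.0, 30.0]   # hypothetical per-GPU work
    balanced = [15.0, 15.0, 15.0, 15.0]     # same total work, spread evenly
    for name, loads in (("imbalanced", imbalanced), ("balanced", balanced)):
        print(f"{name}: step time {step_time(loads)}, "
              f"utilization {utilization(loads):.0%}")
```

Both configurations do the same total work, but the imbalanced one takes twice as long per step, which is why the balancers described next all aim to reduce the maximum per-GPU load.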

1. Prefill load balancer

- Key issue: the number of requests and the sequence lengths vary between DP instances, which leads to imbalanced core attention computation and dispatch send load.

- Optimization goals:

  - Balance core attention computation across GPUs (core attention computation load balancing).

  - Equalize the number of input tokens per GPU (dispatch send load balancing) to prevent long processing times on specific GPUs.

2. Decode load balancer

- Key issue: the number of requests and the sequence lengths are uneven across DP instances, which leads to differences in core attention computation (related to KVCache usage) and dispatch send load.

- Optimization goals:

  - Balance KVCache usage across GPUs (core attention computation load balancing).

  - Equalize the number of requests per GPU (dispatch send load balancing).

3. Expert-parallel load balancer

- Key issue: for a given MoE model, some experts inherently carry a high load, which results in an unbalanced expert computation workload across GPUs.

- Optimization goals:

  - Balance the expert computation on each GPU (i.e., minimize the maximum dispatch receive load across all GPUs).
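To make the expert-parallel goal concrete, here is a hedged sketch of one possible heuristic: give redundant replicas to the hottest experts, then greedily place replicas on the least loaded GPU. This is a generic greedy approach chosen for illustration, not DeepSeek's actual balancer; the function names, the even split of load across replicas, and the sample loads are all assumptions.

```python
import heapq


def replicate_experts(loads: list[float], num_redundant: int) -> list[int]:
    """Hand each redundant slot to the expert with the highest per-replica load."""
    replicas = [1] * len(loads)
    heap = [(-load, e) for e, load in enumerate(loads)]  # max-heap via negation
    heapq.heapify(heap)
    for _ in range(num_redundant):
        _, e = heapq.heappop(heap)
        replicas[e] += 1
        heapq.heappush(heap, (-loads[e] / replicas[e], e))
    return replicas


def place_replicas(loads: list[float], replicas: list[int], num_gpus: int):
    """Greedy placement: heaviest replica first, onto the least loaded GPU with a free slot."""
    total_slots = sum(replicas)
    assert total_slots % num_gpus == 0, "slots must divide evenly across GPUs"
    slots_per_gpu = total_slots // num_gpus
    # Assumption: an expert's load is split evenly across its replicas.
    items = sorted(((loads[e] / replicas[e], e)
                    for e in range(len(loads)) for _ in range(replicas[e])),
                   reverse=True)
    gpu_load = [0.0] * num_gpus
    gpu_experts = [[] for _ in range(num_gpus)]
    for load, e in items:
        open_gpus = [g for g in range(num_gpus) if len(gpu_experts[g]) < slots_per_gpu]
        g = min(open_gpus, key=lambda i: gpu_load[i])
        gpu_experts[g].append(e)
        gpu_load[g] += load
    return gpu_experts, gpu_load


if __name__ == "__main__":
    expert_loads = [90.0, 30.0, 20.0, 20.0, 10.0, 10.0]  # hypothetical token counts
    reps = replicate_experts(expert_loads, num_redundant=2)
    placement, per_gpu = place_replicas(expert_loads, reps, num_gpus=4)
    print("replicas per expert:", reps)
    print("experts on each GPU:", placement)
    print("max GPU load:", max(per_gpu))
```

The point of the redundant experts is visible here: without replication, whichever GPU hosts the hottest expert would carry at least that expert's full load, while replication lets the maximum per-GPU load drop below it.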

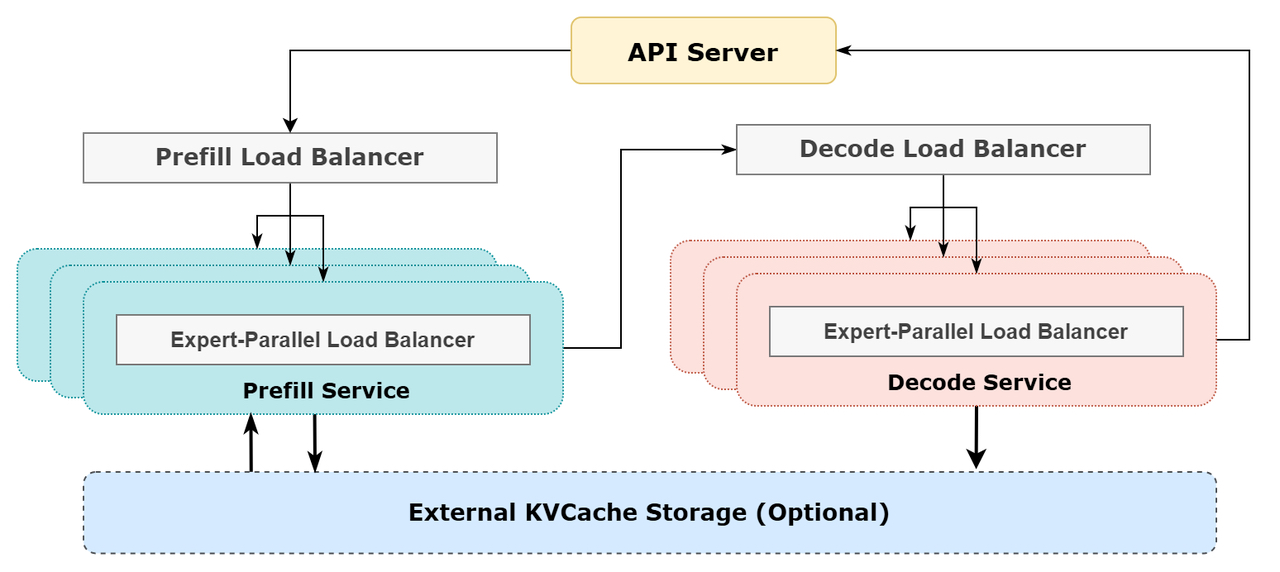

Schematic diagram of the DeepSeek online inference system


DeepSeek online service statistics

All DeepSeek-V3/R1 inference services run on H800 GPUs at a precision consistent with training. Specifically, matrix multiplications and dispatch transfers use the FP8 format, matching training, while core MLA computation and combine transfers use the BF16 format to ensure optimal service performance.

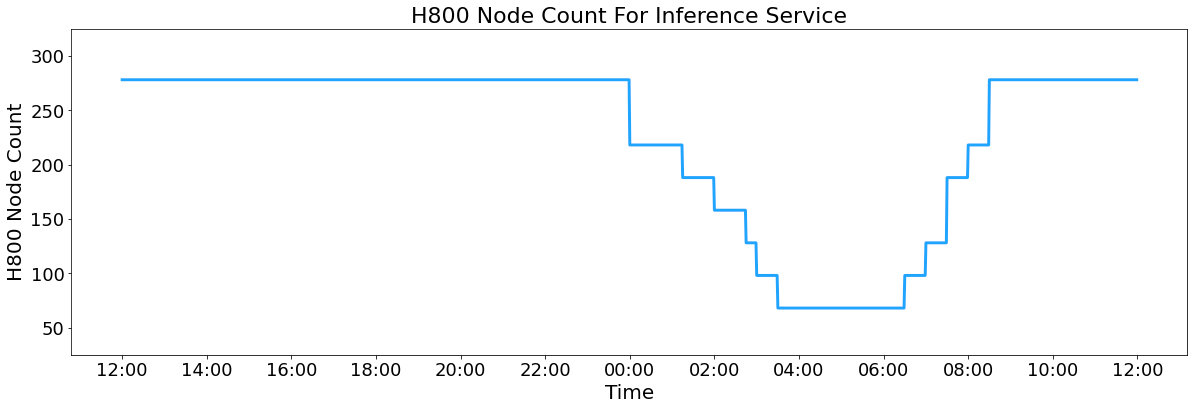

In addition, because service load is high during the day and low at night, DeepSeek deploys the inference service on all nodes during peak daytime hours. During low-load hours at night, it reduces the number of inference nodes and reallocates those resources to research and training. Over the 24-hour period from 12:00 PM UTC+8 on February 27, 2025 to 12:00 PM UTC+8 on February 28, 2025, the V3 and R1 inference services together peaked at 278 nodes, with an average occupancy of 226.75 nodes (each node contains 8 H800 GPUs). Assuming a rental cost of $2 per hour for one H800 GPU, the total daily cost is $87,072.
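The daily cost figure can be reproduced from the numbers in the paragraph above. The constant names are illustrative; the values are the ones stated in the text.

```python
AVG_NODES = 226.75           # average node occupancy over the 24-hour period
GPUS_PER_NODE = 8            # H800 GPUs per node
HOURS_PER_DAY = 24
USD_PER_GPU_HOUR = 2.0       # assumed rental price from the text

daily_cost_usd = AVG_NODES * GPUS_PER_NODE * HOURS_PER_DAY * USD_PER_GPU_HOUR

if __name__ == "__main__":
    print(f"daily cost: ${daily_cost_usd:,.0f}")
```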

Number of H800 nodes used by the inference service

Statistics for V3 and R1 during the 24-hour period (12:00 PM UTC+8 on February 27, 2025 to 12:00 PM UTC+8 on February 28, 2025):

- Total input tokens: 608B, of which 342B tokens (56.3%) hit the on-disk KV cache.

- Total output tokens: 168B. The average output speed is 20-22 tokens per second, and the average KV cache length per output token is 4,989 tokens.

- Each H800 node delivers an average of ~73.7k tokens/s of input throughput (including cache hits) during prefill, or ~14.8k tokens/s of output throughput during decoding.

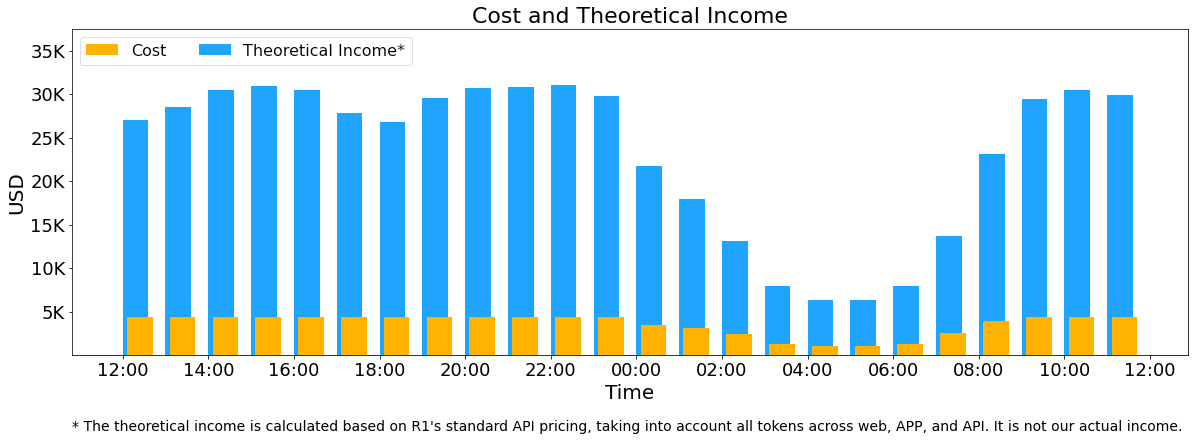

The above statistics include all user requests from the web, app, and API. If all tokens were billed at DeepSeek-R1 pricing (*), the total daily revenue would be $562,027, for a cost profit margin of 545%.

(*) R1 pricing: $0.14/M for input tokens (cache hit), $0.55/M for input tokens (cache miss), and $2.19/M for output tokens.
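The revenue and margin figures can be approximately reproduced from the published token totals and R1 prices. Because the token counts in the text are rounded to the nearest billion, this sketch lands slightly above the stated $562,027; the margin is then computed from the stated revenue and the stated daily cost of $87,072. Variable names are illustrative.

```python
# Token totals from the text, in millions of tokens (rounded published figures).
INPUT_TOTAL_M = 608_000
INPUT_CACHE_HIT_M = 342_000
OUTPUT_TOTAL_M = 168_000

# R1 pricing in USD per million tokens.
PRICE_HIT, PRICE_MISS, PRICE_OUTPUT = 0.14, 0.55, 2.19

approx_revenue_usd = (
    INPUT_CACHE_HIT_M * PRICE_HIT
    + (INPUT_TOTAL_M - INPUT_CACHE_HIT_M) * PRICE_MISS
    + OUTPUT_TOTAL_M * PRICE_OUTPUT
)

STATED_REVENUE_USD = 562_027   # figure given in the text
STATED_COST_USD = 87_072       # daily cost given in the text
margin_pct = (STATED_REVENUE_USD - STATED_COST_USD) / STATED_COST_USD * 100

if __name__ == "__main__":
    print(f"approximate revenue from rounded totals: ${approx_revenue_usd:,.0f}")
    print(f"cost profit margin from stated figures: {margin_pct:.0f}%")
```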

However, DeepSeek's actual revenue is much lower for the following reasons:

- DeepSeek-V3 is priced well below R1.

- Only some of the services are paid (web and app access remains free).

- Nightly discounts are automatically applied during off-peak hours.

Cost and theoretical revenue

© Copyright notes

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
