DeepSeek-V3.2-Exp - DeepSeek's latest open source experimental AI model

What is DeepSeek-V3.2-Exp?

DeepSeek-V3.2-Exp is DeepSeek's open-source experimental AI model. It significantly improves the efficiency of long-text processing by introducing the DeepSeek Sparse Attention (DSA) mechanism. The model was produced by continued training from DeepSeek-V3.1-Terminus, and DSA is the only architectural change. DSA implements fine-grained sparse attention: a lightning indexer efficiently selects the key information, which dramatically improves training and inference efficiency on long texts. On several public benchmarks, DeepSeek-V3.2-Exp performs essentially on par with DeepSeek-V3.1-Terminus across different domains.
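The idea of letting an indexer pick a small set of positions for each query can be illustrated with a toy example. The sketch below is not DeepSeek's implementation: the function names, the `top_k` parameter, and the stand-in indexer (a plain dot product) are all assumptions made for illustration, and the real lightning indexer is a separate learned component.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    # Subtract the maximum for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def toy_index_scores(queries, keys):
    # Hypothetical stand-in for an indexer: score every (query, key) pair
    # with a plain dot product. A real indexer would be much cheaper than
    # the main attention computation.
    return [[dot(q, k) for k in keys] for q in queries]


def sparse_attention(queries, keys, values, index_scores, top_k):
    """Causal attention in which each query attends only to the top_k
    earlier positions ranked highest by index_scores."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    dim = len(values[0])
    outputs = []
    for t, q in enumerate(queries):
        # Causal mask: position t may only look at positions 0..t.
        candidates = range(t + 1)
        # Keep the top_k candidates according to the indexer scores.
        selected = sorted(
            candidates, key=lambda s: index_scores[t][s], reverse=True
        )[:top_k]
        # Ordinary softmax attention, restricted to the selected positions.
        weights = softmax([dot(q, keys[s]) * scale for s in selected])
        outputs.append(
            [sum(w * values[s][d] for w, s in zip(weights, selected))
             for d in range(dim)]
        )
    return outputs


if __name__ == "__main__":
    q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    print(sparse_attention(q, k, v, toy_index_scores(q, k), top_k=2))
```

When `top_k` is at least the sequence length, every earlier position is selected and the function reduces to ordinary causal attention; smaller values trade coverage for less work per query.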

Features of DeepSeek-V3.2-Exp

- Sparse attention mechanism: DeepSeek-V3.2-Exp introduces DeepSeek Sparse Attention (DSA), a fine-grained sparse attention mechanism that significantly improves the efficiency of long-text processing while keeping output quality essentially unchanged.

- Long text processing capability: The model supports context lengths of up to 160K, which makes it especially suitable for long-text scenarios such as long document analysis and long text generation.

- API cost reduction: API prices have dropped dramatically, reducing the cost for developers to call the DeepSeek API by more than 50%, enabling more developers to access and use the model at a lower cost.

- Multi-platform support: The official app, web version, and mini program have all been updated to DeepSeek-V3.2-Exp, so users can use the model directly on multiple platforms without additional configuration.

- Open-source release: DeepSeek-V3.2-Exp is open-sourced on the Hugging Face and ModelScope platforms, with implementation details and model weights provided for researchers and developers to use in research and applications.

- Performance optimization: DeepSeek-V3.2-Exp performs essentially the same as DeepSeek-V3.1-Terminus on multiple public benchmarks, while significantly reducing inference costs in long text processing.

- Flexible deployment: Users can download the model weights from the Hugging Face platform to run the model locally, and can fine-tune it to better suit specific application scenarios.
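To get a rough feel for why sparse attention matters at long context lengths, the following sketch counts the query-key pairs scored by causal attention, with and without a per-query cap. It is a back-of-the-envelope estimate only: the function name is made up for this example, the `top_k` value of 2048 is an assumed figure rather than one stated in this article, and any work done by the indexer itself is not counted.

```python
def attention_pairs(context_len, top_k=None):
    """Count query-key pairs scored by causal attention over context_len tokens.

    With top_k=None every query attends to all earlier positions (dense).
    Otherwise each query attends to at most top_k positions (sparse).
    """
    if top_k is None:
        return context_len * (context_len + 1) // 2
    # The first `full` queries see fewer than top_k candidates, so they
    # behave like dense attention; every later query sees exactly top_k.
    full = min(context_len, top_k)
    return full * (full + 1) // 2 + (context_len - full) * top_k


if __name__ == "__main__":
    CONTEXT_LEN = 160_000   # the 160K context length mentioned in the feature list
    ASSUMED_TOP_K = 2048    # hypothetical cap, chosen only for illustration

    dense = attention_pairs(CONTEXT_LEN)
    sparse = attention_pairs(CONTEXT_LEN, ASSUMED_TOP_K)
    print(f"dense pairs:  {dense:,}")
    print(f"sparse pairs: {sparse:,}")
    print(f"ratio:        {dense / sparse:.1f}x")
```

The dense count grows quadratically with context length, while the capped count grows roughly linearly, which is why the gap widens as documents get longer. Actual speedups depend on hardware, kernels, and the indexer's own cost, so this ratio should not be read as a measured result.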

Core Benefits of DeepSeek-V3.2-Exp

- Efficiency gains: DeepSeek-V3.2-Exp significantly improves the efficiency of long text processing and reduces inference cost through its sparse attention mechanism.

- Stable performance: The model performs essentially on par with DeepSeek-V3.1-Terminus on several public benchmarks, maintaining a high level of performance.

- Cost reduction: API prices have dropped significantly, lowering costs for developers and making the model accessible to more users.

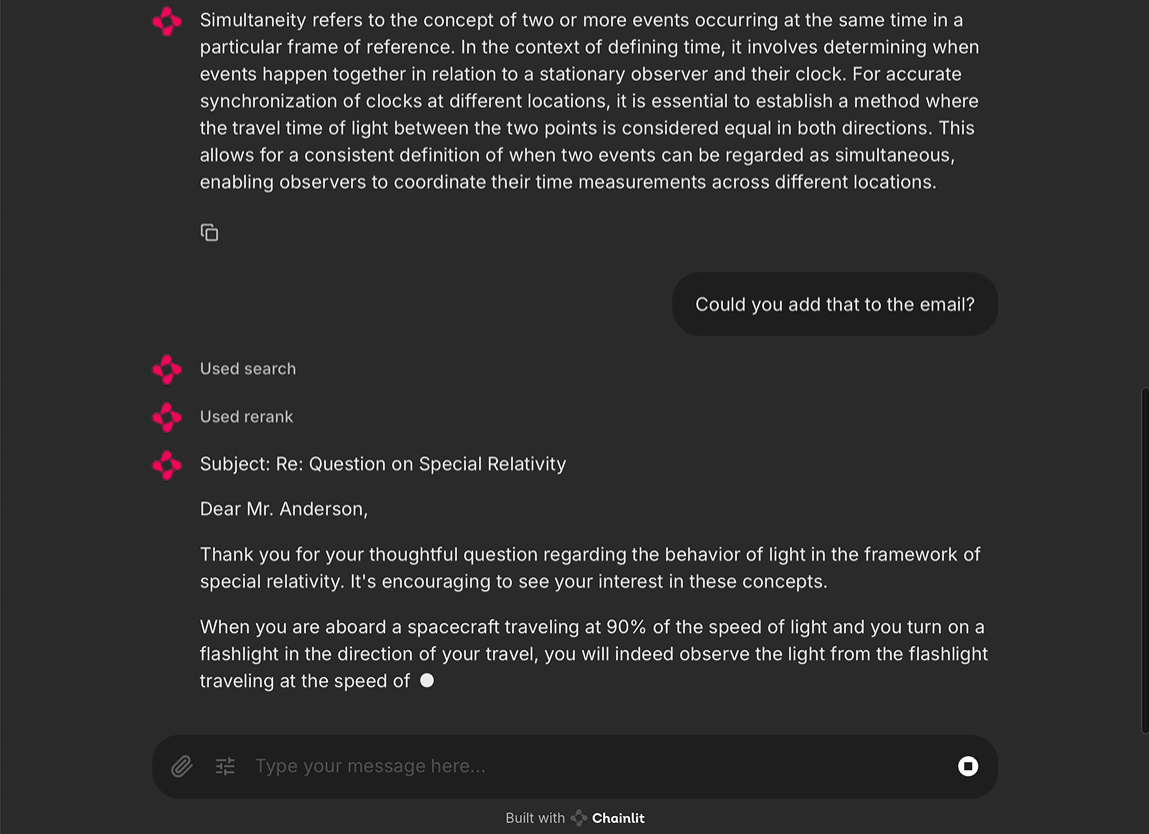

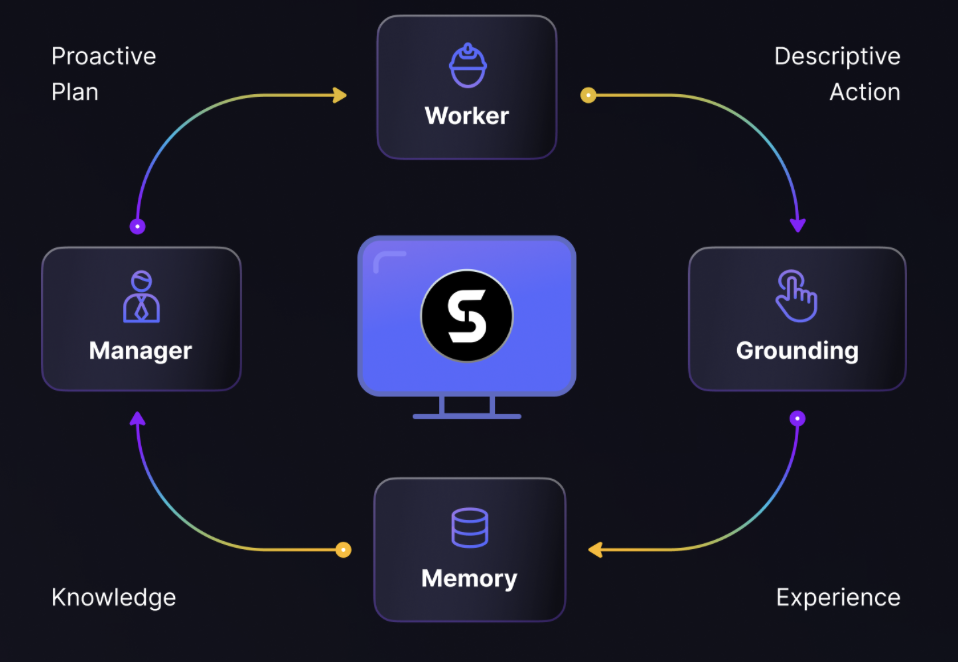

- Adaptability: The model adapts well to tasks in different domains, including mathematical reasoning, code generation, and search agents, demonstrating its wide applicability.

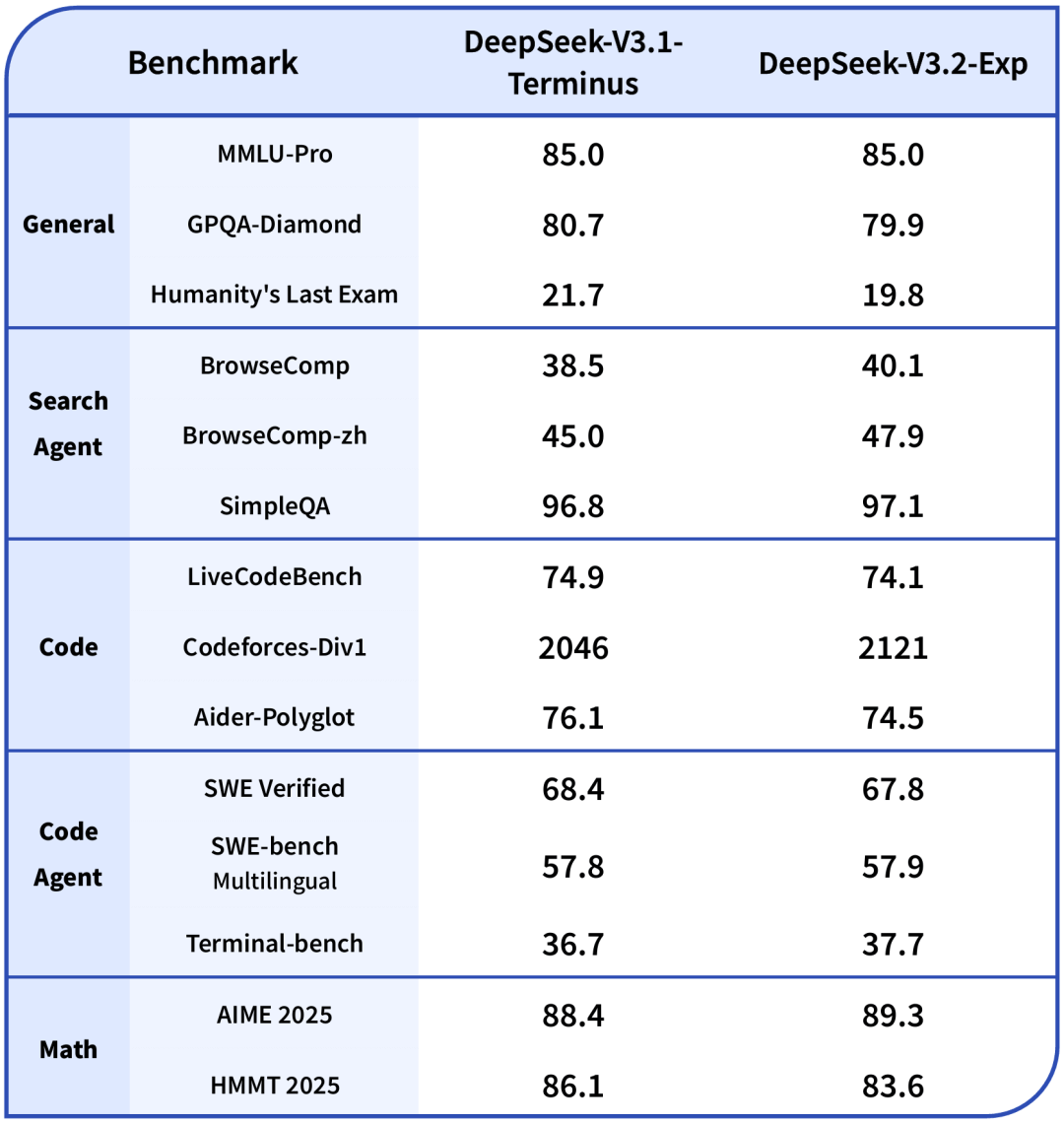

Performance Comparison of DeepSeek-V3.2-Exp vs V3.1-Terminus

- Improved inference efficiency: DeepSeek-V3.2-Exp is significantly faster at long-text inference, about 2-3 times faster than V3.1-Terminus. When processing 128K contexts, the inference cost is significantly reduced, especially in the decoding phase.

- Essentially equal performance: DeepSeek-V3.2-Exp performs about the same as V3.1-Terminus on public benchmarks in various domains. For example, both scored 85.0 on MMLU-Pro.

- Lower memory usage: DeepSeek-V3.2-Exp memory usage is reduced by about 30-40% compared to V3.1-Terminus.

- Increased training efficiency: The training efficiency of DeepSeek-V3.2-Exp is improved by about 50% compared to V3.1-Terminus.

- Differences in task-specific performance: In programming tasks, DeepSeek-V3.2-Exp scored 2121 on Codeforces, slightly higher than V3.1-Terminus's 2046. On Humanity's Last Exam, however, V3.2-Exp scored 19.8, lower than V3.1-Terminus's 21.7.
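The scores quoted above can be put side by side with a few lines of arithmetic. The sketch below uses only the three benchmark figures given in this section; the helper name is made up for the example, and because the benchmarks use different scales, the percentages are only a rough way to compare direction and size of change.

```python
# (V3.1-Terminus score, V3.2-Exp score), as quoted in the list above.
SCORES = {
    "MMLU-Pro": (85.0, 85.0),
    "Codeforces": (2046, 2121),
    "Humanity's Last Exam": (21.7, 19.8),
}


def relative_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


if __name__ == "__main__":
    for name, (terminus, exp) in SCORES.items():
        delta = exp - terminus
        pct = relative_change(terminus, exp)
        print(f"{name}: {terminus} -> {exp} ({delta:+.1f}, {pct:+.1f}%)")
```

Read this way, the Codeforces gain and the Humanity's Last Exam drop are both changes of a few percent, which is consistent with the description of the two models as roughly on par overall.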

What is the official website of DeepSeek-V3.2-Exp?

- Hugging Face model page: https://huggingface.co/deepseek-ai/DeepSeek-V3.2-Exp

- ModelScope community: https://modelscope.cn/models/deepseek-ai/DeepSeek-V3.2-Exp

- Technical paper: https://github.com/deepseek-ai/DeepSeek-V3.2-Exp/blob/main/DeepSeek_V3_2.pdf

Who is DeepSeek-V3.2-Exp for?

- Developers: The reduced API price makes DeepSeek-V3.2-Exp a good choice for cost-sensitive developers, especially for applications that process long text or need efficient inference.

- Content creators: Writers, copywriters, and other creators who need to produce long-form content efficiently can use it for creative inspiration and writing assistance.

- Educators: It can assist with generating teaching content, organizing learning materials, and intelligent tutoring, helping educators work more efficiently.

- Business users: It suits enterprise scenarios such as intelligent customer service, document processing, and data analysis, improving operational efficiency.

- Regular users: Ordinary users can try the model through the official app, web version, and mini program for everyday text generation and information retrieval.
