DeepSeek-R1 WebGPU: Run DeepSeek R1 1.5B locally in your browser!

General Introduction

DeepSeek-R1 WebGPU is a demo published by webml-community on Hugging Face Spaces that uses WebGPU to run a 1.5B-parameter DeepSeek-R1 model directly in the browser. It is built for reasoning tasks and performs inference locally on your own hardware. You don't need to install any software; a browser with WebGPU support is enough to try features such as mathematical reasoning, code generation, and question answering. This makes it well suited to education, research, and development work.

Feature List

- Running AI models in the browser: No installation required, just a modern browser.

- Supports WebGPU acceleration: Utilizes GPUs for efficient computation and improved performance.

- Multi-task AI reasoning: Handles math, code generation, and complex text comprehension tasks.

- Interactive chat interface: Chat with the model directly on the page.

- No registration required: Ready to use out of the box.

Usage Guide

How to use DeepSeek-R1 WebGPU

Compatibility check and preparation:

First, make sure your browser supports WebGPU. Google Chrome enables WebGPU by default from version 113 on Windows, macOS, and ChromeOS. On other platforms you may need to turn it on manually: open Chrome's experimental features page (chrome://flags/), search for "WebGPU", and enable it.
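If you want to check support from a page's own script rather than through the browser settings, the usual approach is to test for `navigator.gpu` and then ask for an adapter. The sketch below assumes minimal hand-written types (`GpuLike`, `NavigatorLike`) instead of the `@webgpu/types` package, and `hasWebGPU` is a hypothetical helper name, not part of the demo.

```typescript
// Minimal structural types (an assumption) so the sketch compiles without
// the @webgpu/types package; in a browser you would pass the real `navigator`.
interface GpuLike {
  requestAdapter(): Promise<unknown>;
}

interface NavigatorLike {
  gpu?: GpuLike;
}

// Returns true only if WebGPU is exposed AND an adapter can be obtained.
// `navigator.gpu` can exist while requestAdapter() resolves to null,
// e.g. when the GPU or its driver is blocked, so both checks are needed.
async function hasWebGPU(nav: NavigatorLike): Promise<boolean> {
  if (!nav.gpu) {
    return false; // the WebGPU API is not exposed at all
  }
  try {
    const adapter = await nav.gpu.requestAdapter();
    return adapter !== null && adapter !== undefined;
  } catch {
    return false; // requestAdapter() rejected
  }
}
```

In a browser you would call it with the global `navigator` object; a result of `false` suggests updating the browser or enabling WebGPU in chrome://flags/.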

Visit the website:

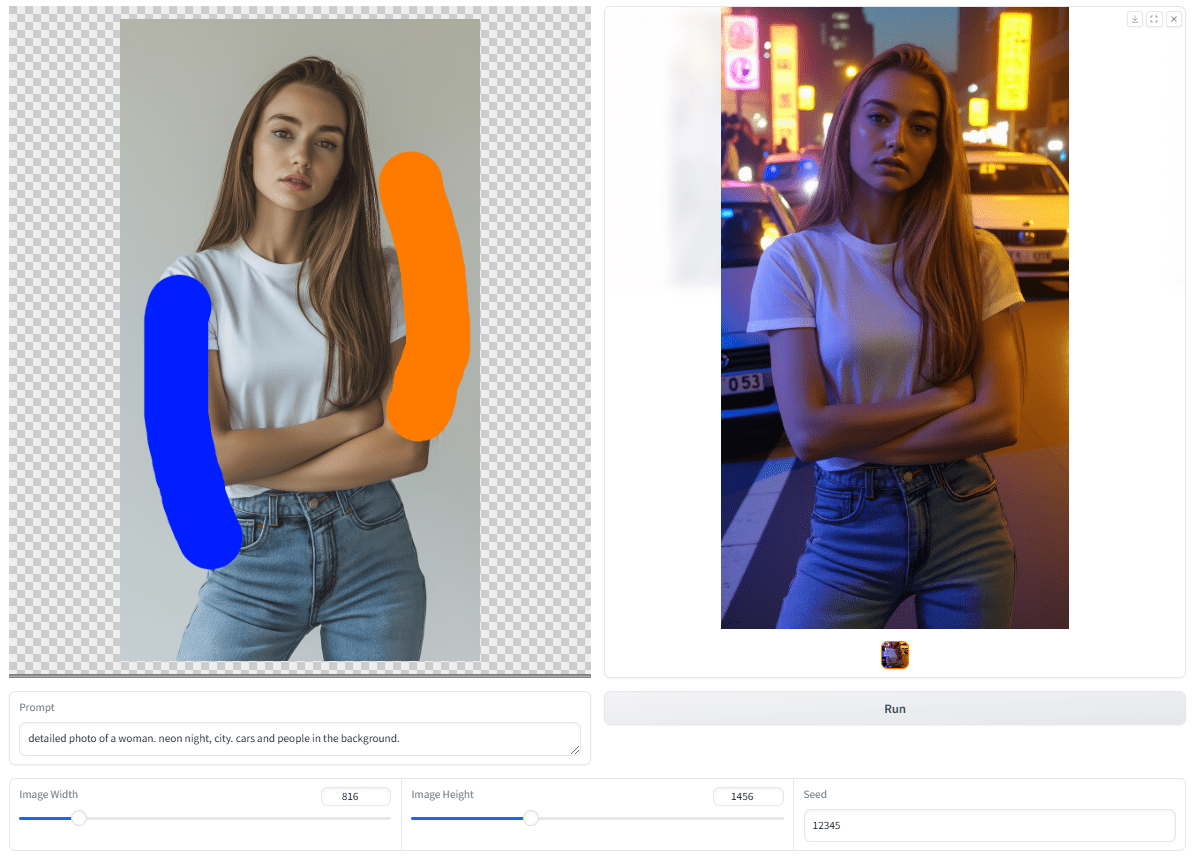

- Open your browser and navigate to the DeepSeek-R1 WebGPU page on Hugging Face Spaces.

- Once the page loads, you are presented with a clean user interface containing an input box and output area.

Running the model:

- Enter a question or task: Type the problem or task you want the model to handle into the input box. This can be a math problem, a request to generate code, or any task that requires textual reasoning.

- Example inputs: "Calculate the last digit of 1000 factorial" or "Write a Python function to compute the Fibonacci sequence".

- Submit the task: Click the Send button or press Enter to submit your input. The model starts processing your request, which may take a few seconds depending on the complexity of the task.

- View the results: The results appear in the output area. Depending on the task, the model gives detailed steps or a direct answer.
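DeepSeek-R1 models write their chain of thought between `<think>` and `</think>` tags before the final answer, which is where the detailed steps come from. The sketch below, assuming the raw generated text follows that format, separates the two parts for display; `splitReasoning` is a hypothetical helper, not the demo's own code. It treats the opening tag as optional because some chat templates put `<think>` in the prompt rather than in the generated text.

```typescript
interface SplitOutput {
  reasoning: string; // text inside <think>...</think>, trimmed
  answer: string; // text after </think>, trimmed
}

// Hypothetical helper: split R1-style output into reasoning and final answer.
function splitReasoning(raw: string): SplitOutput {
  const OPEN = "<think>";
  const CLOSE = "</think>";
  const end = raw.indexOf(CLOSE);
  const start = raw.indexOf(OPEN);

  if (end === -1) {
    // No closing tag: either the model is still thinking (opening tag seen,
    // e.g. mid-stream) or the output contains no reasoning block at all.
    return start === -1
      ? { reasoning: "", answer: raw.trim() }
      : { reasoning: raw.slice(start + OPEN.length).trim(), answer: "" };
  }

  // Closing tag found: reasoning starts after <think> if present, else at 0.
  const reasoningStart = start !== -1 && start < end ? start + OPEN.length : 0;
  return {
    reasoning: raw.slice(reasoningStart, end).trim(),
    answer: raw.slice(end + CLOSE.length).trim(),
  };
}
```

Handling the "no closing tag yet" case lets the same function be called repeatedly on partial text while tokens are still streaming in.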

Getting better results:

- Adjust model parameters: The current interface is fairly minimal, but future versions may offer parameter tuning, such as temperature (which controls the randomness of the generated text) or maximum response length.

- Iterate: If you are not satisfied with the results, rephrase the question or try a different wording.
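The temperature parameter mentioned above is usually applied by dividing the model's output scores (logits) by `T` before the softmax: `p_i = exp(z_i / T) / sum_j exp(z_j / T)`. The sketch below shows that general technique only; it is not code from the demo, which, as noted above, does not currently expose the setting. In Transformers.js the corresponding generation options are typically named `temperature` and `max_new_tokens`.

```typescript
// Softmax with temperature: p_i = exp(z_i / T) / sum_j exp(z_j / T).
// T < 1 sharpens the distribution (more deterministic output);
// T > 1 flattens it (more random output).
function softmaxWithTemperature(logits: number[], temperature: number): number[] {
  if (temperature <= 0) {
    throw new RangeError("temperature must be > 0");
  }
  const scaled = logits.map((z) => z / temperature);
  // Subtract the maximum before exponentiating to avoid overflow.
  const max = Math.max(...scaled);
  const exps = scaled.map((z) => Math.exp(z - max));
  const sum = exps.reduce((acc, e) => acc + e, 0);
  return exps.map((e) => e / sum);
}
```

For logits `[2, 1, 0]`, the top token's probability is roughly 0.87 at `T = 0.5`, 0.67 at `T = 1`, and 0.51 at `T = 2`: lower temperatures concentrate probability on the most likely token.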

FAQ:

- What if my browser does not support WebGPU? - Update your browser, or switch to a WebGPU-enabled browser such as the latest version of Chrome.

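- Model response too slow? - Make sure your browser's GPU acceleration is not disabled. A good internet connection matters mainly for the first load, when the model weights are downloaded; generation itself runs locally on your GPU.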

Caveats:

- Because the model runs inside your browser, generation speed depends on your device's performance, and the initial model download depends on your network connection.

- Please note that the use of any online AI model is subject to its terms of use and privacy policy.

DeepSeek-R1 WebGPU Deployment Tutorial

DeepSeek-R1 WebGPU is an example built on Hugging Face's Transformers.js that shows how to run deep learning models efficiently in the browser. It uses WebGPU to accelerate model inference, which can be up to 100 times faster than WASM. The example targets natural language processing tasks, and Transformers.js supports a wide range of pre-trained models that run directly in the browser without a server.
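The WebGPU-versus-WASM distinction can be made concrete with a small helper that picks the execution backend. Transformers.js v3 accepts a `device` option (values include `"webgpu"` and `"wasm"`) and a `dtype` option for weight precision. The helper `chooseLoadOptions` and the particular `dtype` values below are assumptions for illustration: which quantized variants exist depends on the model repository.

```typescript
// Backends considered here; Transformers.js also accepts other device names.
type Device = "webgpu" | "wasm";

interface LoadOptions {
  device: Device;
  dtype: string; // weight precision / quantization (assumed values below)
}

// Hypothetical helper: prefer WebGPU, fall back to WASM on the CPU.
function chooseLoadOptions(webgpuAvailable: boolean): LoadOptions {
  if (webgpuAvailable) {
    // 4-bit weights with fp16 activations, a common choice for GPU execution.
    return { device: "webgpu", dtype: "q4f16" };
  }
  // WASM runs on the CPU; a plain 4-bit variant is assumed here.
  return { device: "wasm", dtype: "q4" };
}
```

The returned object would be passed as the options argument of Transformers.js's `pipeline("text-generation", modelId, options)`; `modelId` is left out here because the exact repository the example loads should be taken from its source.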

Getting Started

Follow the steps below to set up and run the application.

- Clone the repository

Clone the example repository from GitHub:

git clone https://github.com/huggingface/transformers.js-examples.git

- Go to the project directory

Switch the working directory to the deepseek-r1-webgpu folder:

cd transformers.js-examples/deepseek-r1-webgpu

- Install dependencies

Use npm to install the necessary dependencies:

npm i

- Run the development server

Start the development server:

npm run dev

The application should now be running locally. Open your browser and visit http://localhost:5173 to view it.

© Copyright notice

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
