R1 Overthinker: Forcing DeepSeek R1 Models to Think Longer

General Introduction

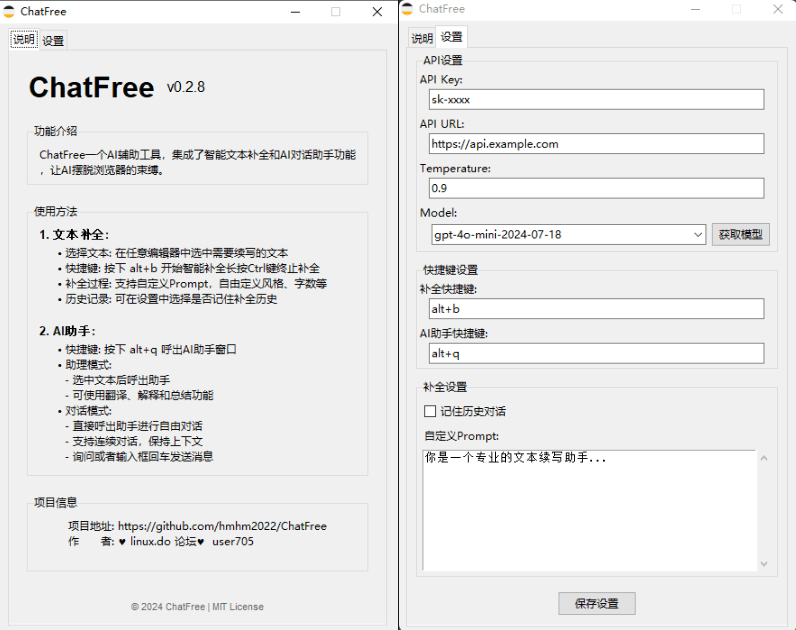

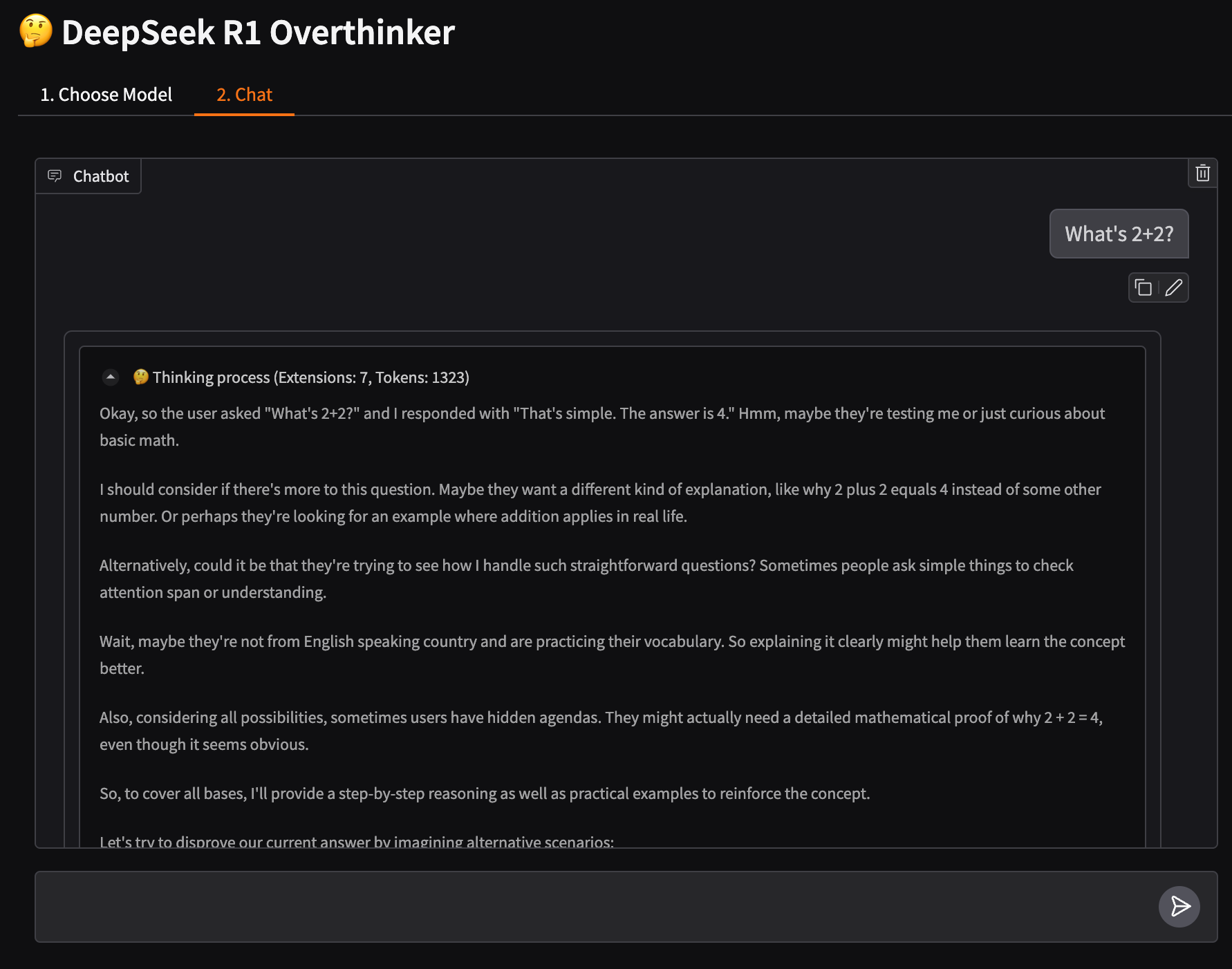

DeepSeek R1 Overthinker is a tool designed to deepen the reasoning of DeepSeek R1 models. By extending the model's reasoning process, it makes the model think longer and more deeply, with the aim of improving the quality and accuracy of its answers. The tool uses unsloth-optimized models and supports unlimited context length (in practice, bounded by available VRAM). Users can customize the number of reasoning extensions and the thinking threshold, fine-tune model parameters (e.g., temperature and top-p), and track the token count of the thinking process in real time. DeepSeek R1 Overthinker works with a wide range of VRAM configurations and supports model sizes from 1.5B to 70B parameters.
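Beyond the model weights, the main memory cost that grows with context length is the key/value (KV) cache, which stores one key and one value vector per layer and per KV head for every token. The rough estimate below shows why available VRAM sets the practical context limit. It is a sketch under stated assumptions: the layer count, KV-head count and head size are illustrative values, not taken from the tool, and the calculation ignores activations and framework overhead, so real headroom is smaller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class KVConfig:
    """Attention shape of a transformer; the values used below are illustrative."""
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_elem: int = 2  # fp16 / bf16


def kv_bytes_per_token(cfg: KVConfig) -> int:
    # Factor 2: one key vector and one value vector per layer per KV head.
    return 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_elem


def max_context_tokens(free_vram_bytes: int, cfg: KVConfig) -> int:
    """Upper bound on cached tokens that fit in the VRAM left after loading weights."""
    return free_vram_bytes // kv_bytes_per_token(cfg)


if __name__ == "__main__":
    cfg = KVConfig(num_layers=28, num_kv_heads=4, head_dim=128)  # hypothetical model shape
    free = 4 * 1024**3  # assume 4 GiB free after the weights are loaded
    print(kv_bytes_per_token(cfg), max_context_tokens(free, cfg))
```

With these illustrative numbers each token costs 57,344 bytes of cache, so 4 GiB of free VRAM holds at most about 74,898 tokens.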

The project works by continuously monitoring the model's output and, whenever the model tries to stop thinking, replacing that point with a sentence that prompts it to rethink. This idea inspired the r1_overthinker project.
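The replacement idea can be sketched in a few lines of Python. This is a minimal illustration, not r1_overthinker's actual code: it relies on DeepSeek R1 closing its reasoning with a `</think>` tag, uses hypothetical re-thinking cues, and counts whitespace-separated words as a stand-in for real tokenizer tokens.

```python
END_THINK = "</think>"  # closing tag DeepSeek R1 emits when it finishes reasoning

# Hypothetical re-thinking cues; the real tool's wording may differ.
RETHINK_CUES = ["Wait, let me double-check that.", "Hmm, is there another approach?"]


def extend_thinking(text: str, min_think_tokens: int, extensions_used: int) -> tuple[str, bool]:
    """Return (new_text, extended).

    If the model closed its reasoning before reaching min_think_tokens, drop the
    closing tag (and any draft answer after it) and append a cue so generation
    can resume inside the thinking block.
    """
    idx = text.rfind(END_THINK)
    if idx == -1:
        return text, False  # the model is still thinking; nothing to replace
    reasoning = text[:idx]
    # Whitespace-split words approximate tokenizer tokens in this sketch.
    if len(reasoning.split()) >= min_think_tokens:
        return text, False  # threshold reached; let the model answer
    cue = RETHINK_CUES[extensions_used % len(RETHINK_CUES)]
    return reasoning.rstrip() + "\n\n" + cue + " ", True
```

For example, `extend_thinking("<think>2+2 is 4.</think>The answer is 4.", 50, 0)` returns `("<think>2+2 is 4.\n\nWait, let me double-check that. ", True)`. The draft answer after `</think>` is deliberately discarded, so the final answer is regenerated only after the extra reasoning.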

Feature List

- Forces models to think longer and deeper

- Customizable reasoning extensions and thinking thresholds

- Fine control of model parameters (temperature, top-p, etc.)

- Real-time view of the thinking process with token count tracking

- Support for LaTeX mathematical expressions

- Optimized for various VRAM configurations

- Supports multiple model sizes (1.5B to 70B parameters)
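Temperature and top-p both act on the model's next-token probability distribution. The sketch below shows the standard recipe on a toy vocabulary: scale the logits by temperature, apply softmax, then keep only the smallest set of most-likely tokens whose combined probability reaches top-p (nucleus sampling). It illustrates what the two parameters control and is not the tool's own sampling code.

```python
import math
import random


def sample_top_p(logits: dict[str, float], temperature: float, top_p: float,
                 rng: random.Random) -> str:
    """Pick one token using temperature scaling followed by nucleus (top-p) filtering."""
    if temperature <= 0:
        # Treat temperature 0 as greedy decoding.
        return max(logits, key=logits.get)
    # Softmax over temperature-scaled logits (subtract the max for numerical stability).
    scaled = {tok: lg / temperature for tok, lg in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(v - peak) for tok, v in scaled.items()}
    total = sum(weights.values())
    probs = sorted(((w / total, tok) for tok, w in weights.items()), reverse=True)
    # Keep the smallest prefix of most-likely tokens whose mass reaches top_p.
    nucleus, mass = [], 0.0
    for p, tok in probs:
        nucleus.append((p, tok))
        mass += p
        if mass >= top_p:
            break
    # random.choices renormalizes the weights, so the truncated nucleus need not sum to 1.
    return rng.choices([tok for _, tok in nucleus], weights=[p for p, _ in nucleus])[0]
```

Lower temperatures sharpen the distribution and smaller top-p values shrink the candidate set; with `top_p=0.1` on logits `{"a": 2.0, "b": 1.0, "c": 0.5}`, only the most likely token, `"a"`, survives.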

How to Use

Installation process

- Run the project in Google Colab.

Guidelines for use

- Launch the application:

- Run the following command to start the application:

python r1_overthinker.py

- When the application starts, it loads the DeepSeek R1 model and prepares to process input.

- Input processing:

- Enter a sentence or paragraph and the application will process it through an extended reasoning process.

- The application detects when the model tries to end its thinking and replaces it with cues that encourage additional reasoning until a user-specified threshold of thinking time is reached.

- Customizing thinking time:

- Users can specify a thinking-time threshold when launching the application, for example:

python r1_overthinker.py --min-think-time 10

- This command sets the minimum time the model must spend thinking to 10 seconds.

- Viewing results:

- Once processing is complete, the application outputs the results of the extended reasoning, and the user can review the model's more in-depth reasoning process.
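A time-based threshold such as `--min-think-time` can be enforced with a simple loop: keep asking the model to continue reasoning until the elapsed time reaches the threshold. In this sketch only the flag name comes from the article; `generate_step`, the cue text and the `max_extensions` safety cap are hypothetical, and the clock is injectable so the loop can be tested without actually waiting.

```python
import argparse
import time
from typing import Callable


def parse_args(argv=None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Sketch of a thinking-time threshold option")
    # Flag name taken from the article; its exact semantics in the real tool are assumed.
    parser.add_argument("--min-think-time", type=float, default=0.0,
                        help="minimum seconds the model should spend reasoning")
    return parser.parse_args(argv)


def think_until_threshold(
    generate_step: Callable[[str], str],
    prompt: str,
    min_think_time: float,
    max_extensions: int = 10,
    clock: Callable[[], float] = time.monotonic,
) -> tuple[str, int]:
    """Append re-thinking cues and keep generating until enough time has passed.

    generate_step is a hypothetical stand-in for one round of model generation that
    returns the reasoning produced before the model tried to stop thinking.
    """
    start = clock()
    text = prompt + generate_step(prompt)
    extensions = 0
    # Stop once the time threshold is met or the safety cap on extensions is hit.
    while clock() - start < min_think_time and extensions < max_extensions:
        text += "\nWait, let me reconsider. "  # hypothetical cue
        text += generate_step(text)
        extensions += 1
    return text, extensions
```

In use, `args = parse_args()` reads the flag from the command line, and `think_until_threshold(my_generate, prompt, args.min_think_time)` runs the loop, where `my_generate` wraps whatever model call is in use. The `max_extensions` cap keeps a slow clock or a very large threshold from looping indefinitely.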

Detailed Operation Procedure

- Initial setup:

- Before using the tool for the first time, make sure all dependencies are installed correctly and the initial setup has been completed.

- Check the available VRAM to make sure it can accommodate longer context lengths.

- Input text processing:

- Enter the text to be processed and the application will automatically detect and extend the model's reasoning process.

- During processing, the user can view the model's reasoning progress and current thinking state in real time.

- Custom settings:

- Users can adjust the thinking-time threshold and other parameters as needed to get the best results.

- The application supports a wide range of settings that users can adjust to suit different usage scenarios.

- Result output:

- Once processing is complete, the application outputs the full reasoning results, which the user can use to review and analyze the model's thought process.

- Results can be output in a variety of formats, so users can save and further process them as needed.
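Showing the thinking state and token count in real time amounts to tracking the streamed text as it arrives. The class below is a hypothetical sketch, not the tool's implementation: it assumes both the opening `<think>` and closing `</think>` tags appear in the stream, and it approximates tokens with whitespace-separated words, so a closing tag that is still arriving may briefly be counted as part of a word.

```python
from dataclasses import dataclass


@dataclass
class ThinkingTracker:
    """Track reasoning state from streamed text chunks (illustrative sketch)."""
    buffer: str = ""

    def feed(self, chunk: str) -> None:
        # Accumulate the stream; tags split across chunks are resolved on the full text.
        self.buffer += chunk

    @property
    def thinking(self) -> bool:
        # Inside the reasoning block if <think> has opened and not yet closed.
        opened = self.buffer.find("<think>")
        return opened != -1 and self.buffer.find("</think>", opened) == -1

    @property
    def thinking_tokens(self) -> int:
        opened = self.buffer.find("<think>")
        if opened == -1:
            return 0
        body_start = opened + len("<think>")
        closed = self.buffer.find("</think>", body_start)
        body = self.buffer[body_start:] if closed == -1 else self.buffer[body_start:closed]
        # Whitespace-separated words approximate tokenizer tokens here.
        return len(body.split())
```

A display loop would call `feed` for each streamed chunk and then read `thinking` and `thinking_tokens` to refresh the status shown to the user.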

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
