DeepSeek Debate: China's Leadership in Cost, Real Training Costs, and Profit Impact of Closed-Source Models

Keywords: h100 price spikes, subsidized inferred pricing, export controls, MLAs

DeepSeek's Narrative Takes the World by Storm

For the past week, DeepSeek has been the only topic that everyone in the world wants to talk about. Currently, DeepSeek's daily traffic is far higher than that of Claude, Perplexity, or even Gemini.

But for those who follow the field closely, this is not exactly "new" news. We have been talking about DeepSeek for months. The company isn't new, but the hype is. SemiAnalysis has long argued that DeepSeek is extremely talented, while the broader US public has not cared. When the world finally paid attention, it did so in an obsessive hype that doesn't reflect reality.

We want to highlight that the narrative has flipped since last month. Then, the claim was that scaling laws were broken, a myth we dispelled. Now, the claim is that algorithms are improving too quickly, and that this, too, is somehow bad for Nvidia and GPUs.

Background (December 11, 2024): Scaling Laws - o1 Pro Architecture, Reasoning Training Infrastructure, Orion and Claude 3.5 Opus "Failures"

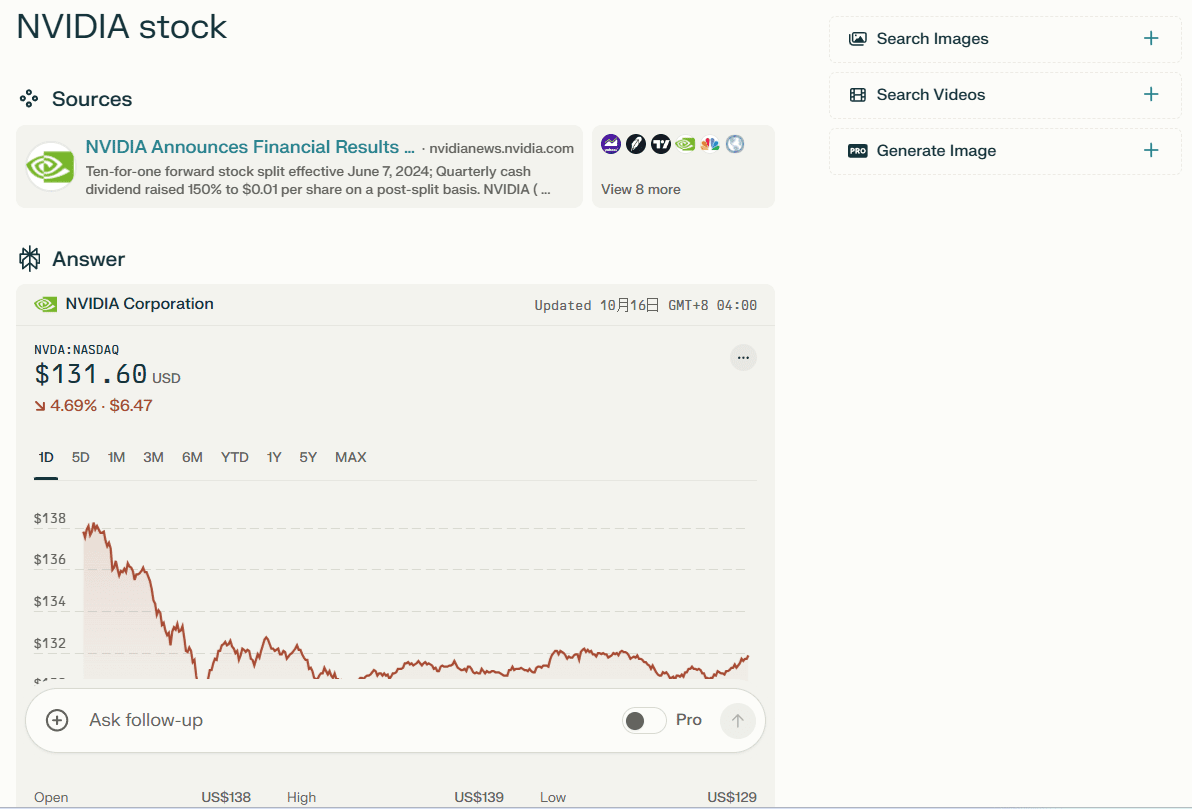

The narrative now is that DeepSeek is so efficient that we don't need more compute, and that everything now has massive overcapacity because of the model changes. While the Jevons paradox is also overhyped, it is closer to reality: these models have already induced demand, with tangible effects on H100 and H200 pricing.
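The Jevons argument is easiest to see with a toy demand curve. The sketch below assumes a constant-elasticity demand curve with made-up parameters, not estimates for the GPU market. When demand is elastic (elasticity above 1), a 10x drop in the price of a unit of AI work raises total spending on compute instead of lowering it.

```python
def demand(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Units of AI work demanded under a constant-elasticity curve: Q = scale * P**(-e)."""
    return scale * price ** (-elasticity)


def total_spend(price: float, elasticity: float, scale: float = 1.0) -> float:
    """Total spend on compute: price per unit times units demanded."""
    return price * demand(price, elasticity, scale)


# Hypothetical efficiency gain: the price per unit of AI work falls 10x.
OLD_PRICE, NEW_PRICE = 1.0, 0.1

for e in (0.5, 1.0, 1.5):
    ratio = total_spend(NEW_PRICE, e) / total_spend(OLD_PRICE, e)
    print(f"elasticity {e}: total spend changes by {ratio:.2f}x")
# elasticity 0.5 -> 0.32x, 1.0 -> 1.00x, 1.5 -> 3.16x
```

Which regime real AI demand sits in is an empirical question that this toy model cannot answer on its own.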

DeepSeek and High-Flyer

High-Flyer is a Chinese hedge fund and an early adopter of AI in its trading algorithms. It realized early on the potential of AI beyond finance, as well as the critical insight of scaling, and steadily increased its GPU supply as a result. After experimenting with models on clusters of thousands of GPUs, High-Flyer invested in 10,000 A100 GPUs in 2021, before any export restrictions. That paid off. As High-Flyer improved, it decided in May 2023 that it was time to spin off "DeepSeek" to pursue further AI capabilities with more focus. High-Flyer self-funded the company because, at the time, outside investors had little interest in AI and were mainly concerned about the lack of a business model. Today, High-Flyer and DeepSeek often share resources, both human and computational.

DeepSeek has grown into a serious, concerted effort and is far from the "side project" that many in the media claim it to be. We believe their GPU investment exceeds half a billion dollars, even after accounting for export controls.

Source: SemiAnalysis, Lennart Heim

GPU situation

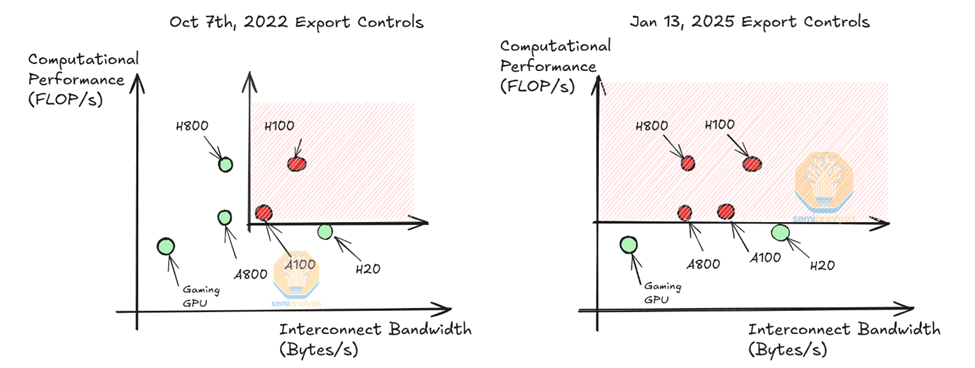

We believe they have access to around 50,000 Hopper GPUs, which is not the same as the 50,000 H100s that some have claimed. Nvidia makes different variants of the H100 (H800, H20) to comply with different regulations, and only the H20 is currently available to model providers in China. Note that the H800 has the same compute as the H100, but lower network bandwidth.

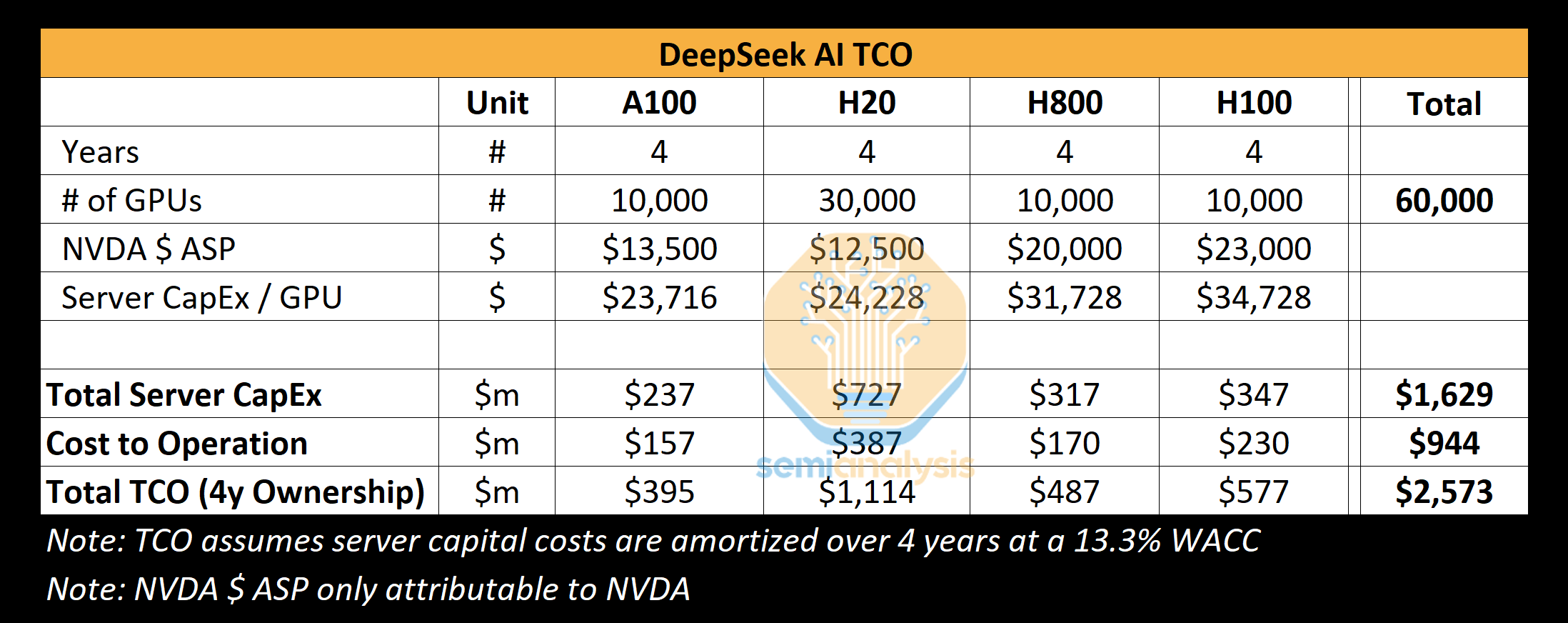

We believe that DeepSeek has access to about 10,000 H800s and about 10,000 H100s. In addition, they have orders for many more H20s; Nvidia has produced more than 1 million of these China-specific GPUs in the last 9 months. These GPUs are shared between High-Flyer and DeepSeek and are somewhat geographically distributed. They are used for trading, inference, training, and research. For a more specific and detailed analysis, see our Accelerator Model.

Source: SemiAnalysis

Our analysis shows that DeepSeek's total server capex is about $1.6 billion, with a considerable $944 million in costs associated with operating such clusters. Likewise, all AI labs and hyperscalers have many more GPUs, used for tasks including research and training, than they commit to any single training run, because centralizing resources is a challenge. xAI is unusual among AI labs in having all of its GPUs in one location.

DeepSeek recruits its talent from within China, with no regard for previous credentials and a strong focus on capability and curiosity. It regularly holds recruiting events at top universities such as Peking University and Zhejiang University, where many of its staff studied. Positions are not necessarily predefined, recruiters are given flexibility, and job ads even boast of access to more than 10,000 GPUs with no usage limits. DeepSeek is extremely competitive on pay, reportedly offering salaries of over $1.3 million to promising candidates, well above big Chinese tech companies and AI labs such as Moonshot. DeepSeek has around 150 employees but is growing rapidly.

History shows that a small, well-funded, focused startup can often push the boundaries of what is possible. DeepSeek lacks the bureaucracy of a company like Google, and because it is self-funded, it can move ideas forward quickly. Like Google, however, DeepSeek (for the most part) runs its own data centers rather than relying on outside parties or providers. This opens up more room for experimentation and lets them innovate across the entire stack.

We believe they are the best "open weights" lab today, beating out Meta's Llama, Mistral and others.

Cost and performance of DeepSeek

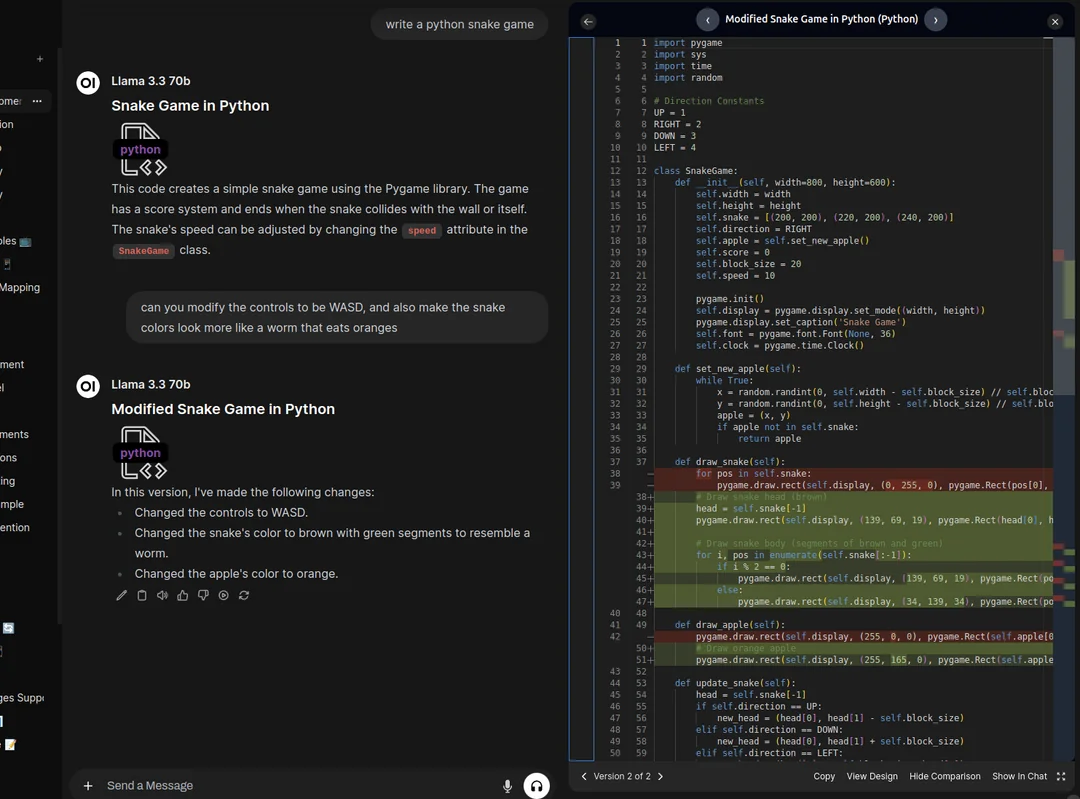

DeepSeek's pricing and efficiency sparked a frenzy this week, with the main headline being DeepSeek V3's "$6 million" training cost. That is wrong. It is akin to pointing to one specific part of a product's bill of materials and attributing it as the entire cost. The pre-training cost is a very small part of the total.

Training costs

We believe the pre-training number is nowhere near the actual amount spent on the model. We believe DeepSeek's hardware spend over the company's history is well above $500 million. Developing new architectural innovations requires considerable spending during model development on testing new ideas, new architecture ideas, and ablations. Multi-Head Latent Attention, a key DeepSeek innovation, took months to develop and cost a whole team's worth of man-hours and GPU time.

The $6 million cost in the paper is attributable only to the GPU cost of the pre-training run, which is only a fraction of the total cost of the model. It excludes important pieces of the puzzle, such as R&D and the TCO of the hardware itself. For reference, Claude 3.5 Sonnet cost tens of millions of dollars to train, and if that were all Anthropic needed, they would not be raising billions from Google and tens of billions from Amazon. They need that money because they have to experiment, come up with new architectures, gather and clean data, pay their staff, and so on.
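For context, the headline figure is a simple multiplication of GPU-hours by an assumed rental price. The DeepSeek-V3 technical report lists about 2.788M H800 GPU-hours for the final run (mostly pre-training, plus small context-extension and post-training stages) and assumes $2 per GPU-hour. The sketch below reproduces that arithmetic; the function name is ours, not the report's.

```python
# GPU-hour figures and the $2/hour rental price as reported in the DeepSeek-V3
# technical report; the helper function is illustrative.
H800_RENTAL_USD_PER_HOUR = 2.0

gpu_hours = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}


def run_cost_usd(hours_by_stage: dict[str, int], usd_per_hour: float) -> float:
    """Rental-equivalent cost of the final training run only."""
    return sum(hours_by_stage.values()) * usd_per_hour


total = run_cost_usd(gpu_hours, H800_RENTAL_USD_PER_HOUR)
print(f"{sum(gpu_hours.values()):,} GPU-hours -> ${total / 1e6:.3f}M")
# 2,788,000 GPU-hours -> $5.576M
```

Everything the paragraph above lists as excluded (research runs, ablations, data work, salaries, and hardware TCO) sits outside this multiplication.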

So how does DeepSeek have such a large cluster? The lag in export controls is key and will be discussed in the export section below.

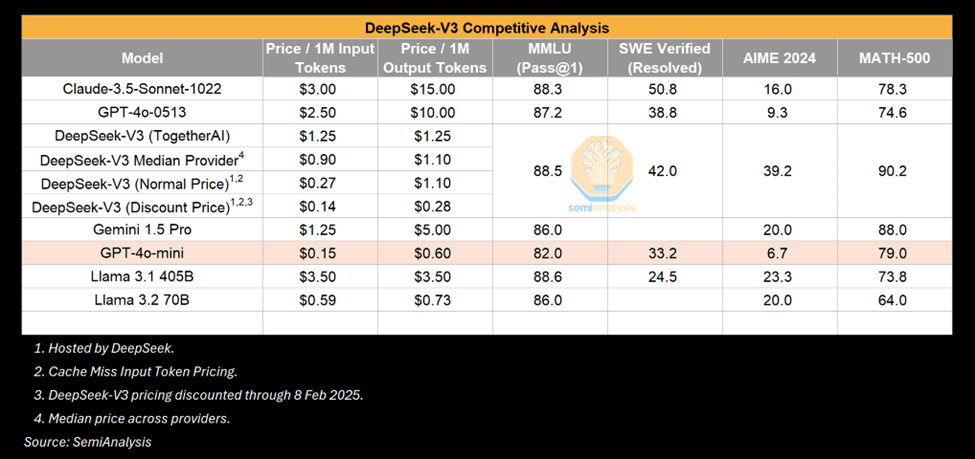

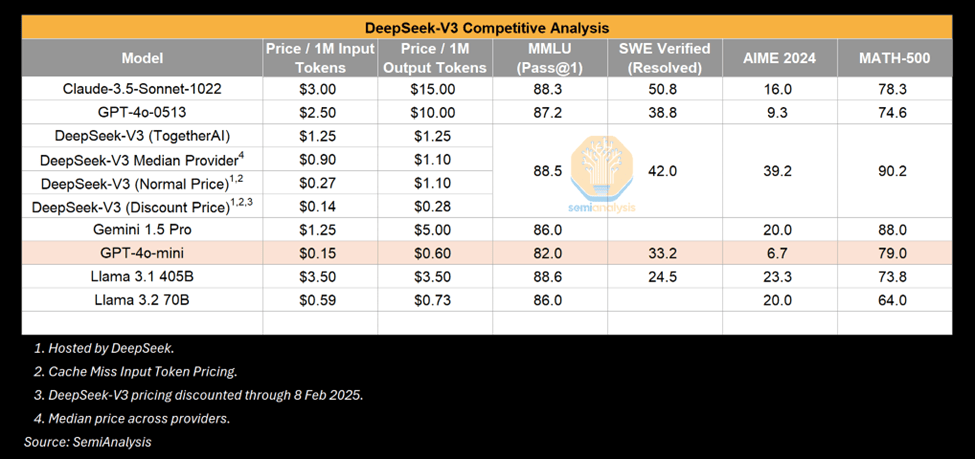

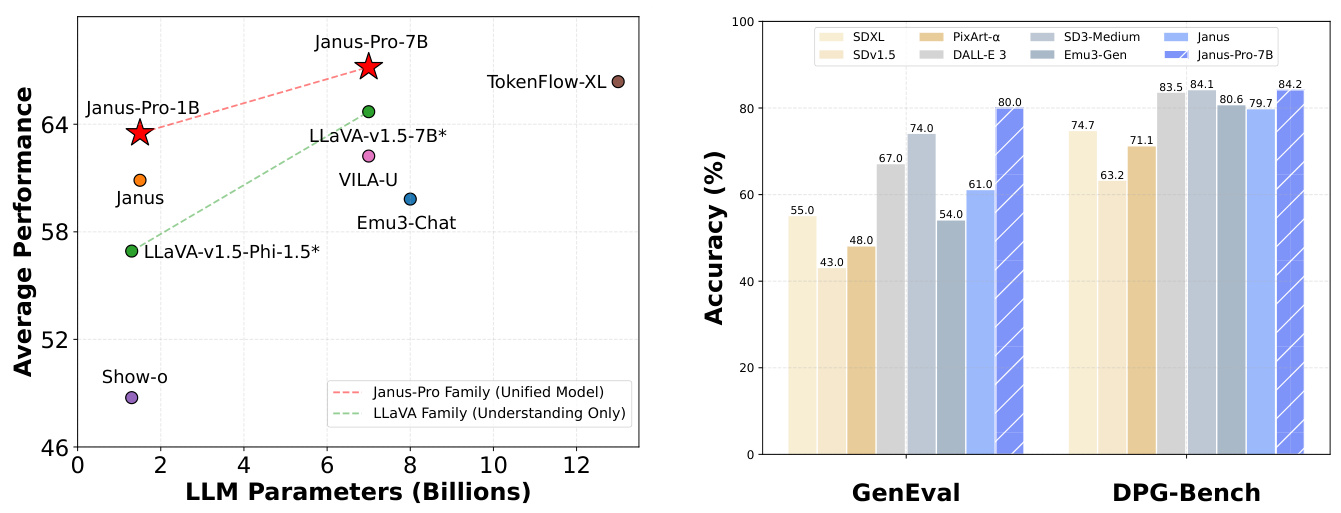

Closing the Gap - V3 Performance

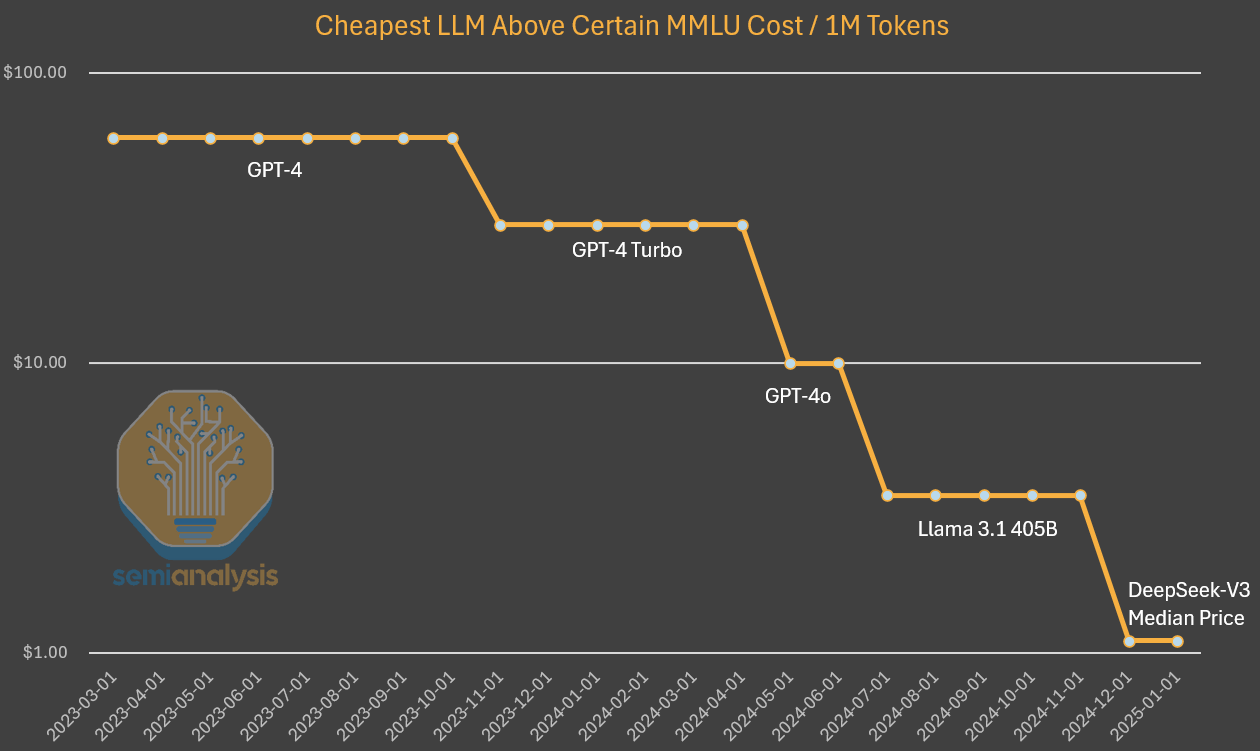

V3 is undoubtedly an impressive model, but it is worth asking: impressive relative to what? Many have compared V3 to GPT-4o and highlighted how V3 beats 4o's performance. That is true, but GPT-4o was released in May 2024. AI moves quickly, and May 2024 is a different era in terms of algorithmic improvements. Moreover, we are not surprised to see comparable or stronger capabilities achieved with less compute after a given amount of time. Collapsing inference costs are a hallmark of AI improvement.

Source: SemiAnalysis

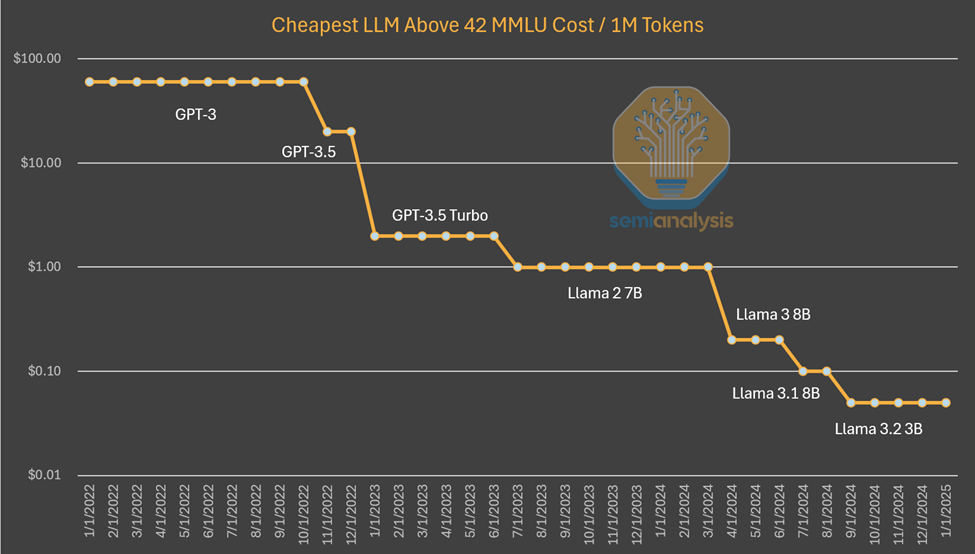

For example, small models that can run on laptops now perform comparably to GPT-3, which required a supercomputer to train and multiple GPUs to run inference. In other words, algorithmic improvements allow less compute to train and serve models of the same capability, and this pattern plays out again and again. This time the world took notice because it came from a lab in China. But small models getting better is nothing new.

Sources: SemiAnalysis, Artificialanalysis.ai, Anakin.ai, a16z

The pattern we have witnessed so far is that AI labs spend more in absolute dollars to get even more intelligence for their money. Algorithmic progress is estimated at 4x per year, meaning that with each passing year, only a quarter of the compute is needed to achieve the same capability. Dario, Anthropic's CEO, argues that algorithmic progress is even faster and can yield a 10x improvement. For GPT-3-quality inference, prices have fallen by a factor of 1,200.
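The difference between the 4x and 10x estimates compounds quickly. A minimal sketch, assuming a constant yearly efficiency factor (a simplification, since real progress need not be smooth); the function name is illustrative:

```python
def compute_needed(initial_compute: float, yearly_gain: float, years: int) -> float:
    """Compute required to match a fixed capability after `years`, assuming
    algorithmic efficiency improves by the same factor every year."""
    return initial_compute / yearly_gain ** years


# Compare the ~4x/year estimate with the ~10x/year view attributed to Dario.
for gain in (4.0, 10.0):
    for years in (1, 2, 3):
        remaining = compute_needed(1.0, gain, years)
        print(f"{gain:.0f}x/year after {years} yr: {1 / remaining:,.0f}x less compute")
```

After three years the two assumptions differ by more than an order of magnitude (64x versus 1,000x), which is why the choice of estimate matters for any compute-demand forecast.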

When investigating the cost of GPT-4, we see a similar decrease, although earlier in the curve. The smaller decrease over time can be partly explained by the fact that, unlike the graph above, this comparison no longer holds capability constant. In this case, algorithmic improvements and optimizations deliver a 10x decrease in cost alongside an increase in capability.

Sources: SemiAnalysis, OpenAI, Together.ai

To be clear, DeepSeek is unique in that they achieved this level of cost and capability first. They are also unique in having released open weights, although earlier Mistral and Llama models did so too. DeepSeek has reached this cost level, but do not be surprised if costs fall another 5x by the end of the year.

Is the performance of R1 comparable to that of o1?

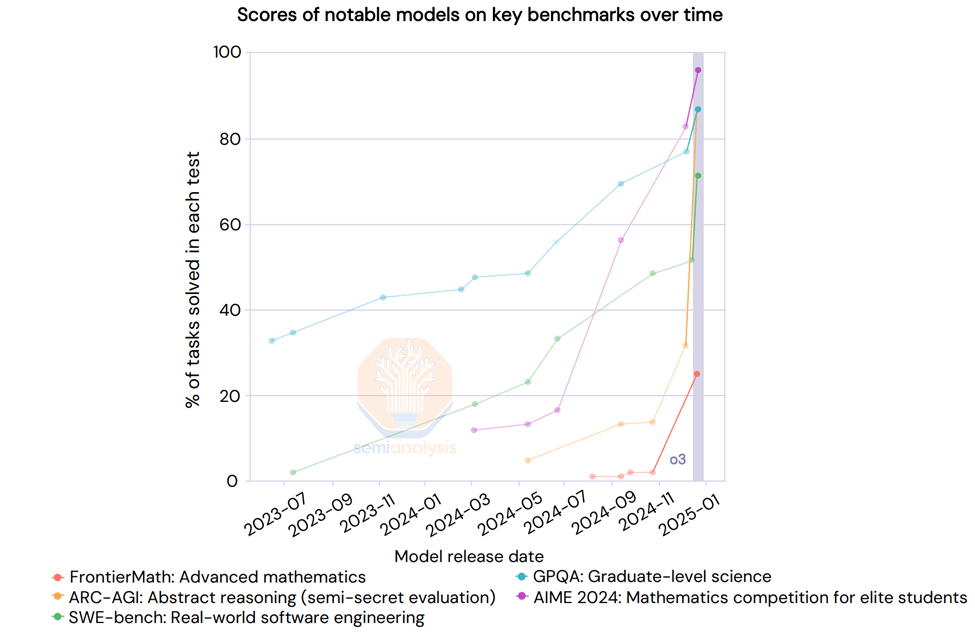

Meanwhile, R1 was able to achieve results comparable to o1, which was only announced in September. How did DeepSeek catch up so quickly?

The answer is that reasoning is a new paradigm with faster iteration speeds and lower-hanging fruit, where smaller amounts of compute yield meaningful gains compared to the previous paradigm. As described in our Scaling Laws report, the previous paradigm depended on pre-training, which is becoming increasingly expensive and in which robust gains are increasingly hard to achieve.

The new paradigm focuses on unlocking reasoning capabilities in existing models through synthetic data generation and reinforcement learning in post-training, allowing faster gains at a lower cost. The lower barrier to entry, combined with easy optimizations, meant that DeepSeek could replicate the o1 approach faster than usual. As players figure out how to scale further in this new paradigm, we expect the time gap in matching capabilities to increase.

Note that the R1 paper makes no mention of the compute used. This is not an accident: a significant amount of compute is needed to generate the synthetic data used to post-train R1, and that is before counting reinforcement learning. R1 is a very good model, we do not dispute that, and catching up to the reasoning frontier this quickly is objectively impressive. The fact that DeepSeek is Chinese and caught up with fewer resources makes it doubly impressive.

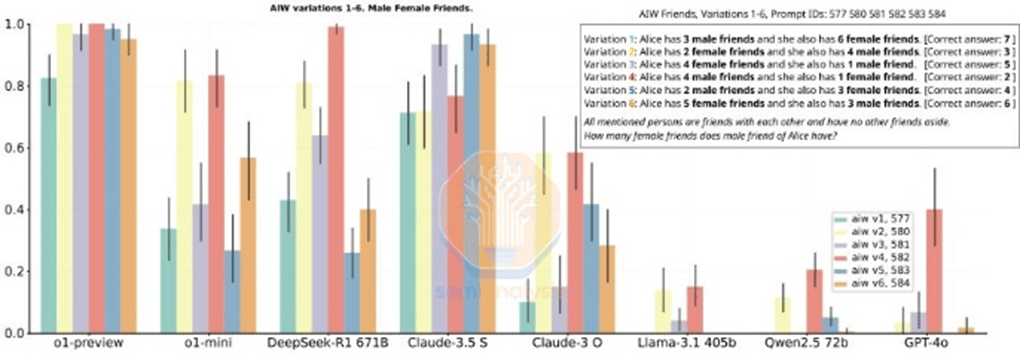

But some of the benchmarks R1 cites are also misleading. Comparing R1 to o1 is tricky because R1 specifically omits benchmarks on which it does not lead. And while R1 matches o1 in reasoning performance, it is not a clear winner on every metric, and in many cases it is worse than o1.

Source: (Yet) another tale of Rise and Fall. DeepSeek R1

And we have not even mentioned o3 yet. o3 is significantly more capable than both R1 and o1. In fact, OpenAI recently shared o3's results, and the benchmark scaling is vertical. "Deep learning has hit a wall," but a wall of a different kind.

Source: AI Action Summit

Google's reasoning model is as good as R1

While there is a frenzy of hype around R1, a $2.5 trillion US company released a reasoning model a month earlier, and at a lower price: Google's Gemini Flash 2.0 Thinking. The model is available for use and, through the API, is considerably cheaper than R1, even while offering a much longer context length.

Flash 2.0 Thinking beats R1 on the reported benchmarks, although benchmarks do not tell the whole story. Google released only 3 benchmarks, so the picture is incomplete. Nonetheless, we think Google's model is robust and can hold its own against R1 in many ways, yet it has received none of the hype. This could be due to Google's lackluster go-to-market strategy and poor user experience, but also to R1 being a surprise out of China.

Source: SemiAnalysis

To be clear, none of this detracts from DeepSeek's remarkable achievements. DeepSeek's structure as a fast-moving, well-funded, smart, and focused startup is why it is beating giants like Meta in releasing a reasoning model, and that is commendable.

Technical Achievements

DeepSeek has cracked the code and unlocked innovations not yet realized by leading labs. We expect that any released DeepSeek improvements will be replicated by Western labs almost immediately.

What are these improvements? Most of the architectural achievements are specifically related to V3, which is also the base model for R1. Let's describe these innovations in more detail.

Training (pre- and post-training)

DeepSeek V3 uses Multi-Token Prediction (MTP) at a scale not seen before. MTP adds attention modules that predict the next several tokens instead of only the next one. This improves model performance during training, and the extra modules can be discarded at inference. It is an example of an algorithmic innovation that improves performance with less compute.
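The target layout behind MTP can be sketched in a few lines. This is a hypothetical helper, not DeepSeek's code: the V3 report describes sequential MTP modules trained with their own weighted loss, while this sketch only shows which future tokens each position is trained to predict. Offset 0 is the ordinary next-token target; the extra offsets are the MTP targets, which is why the extra modules can be dropped at inference without touching the main head.

```python
from typing import List, Optional


def mtp_targets(tokens: List[int], depth: int) -> List[List[Optional[int]]]:
    """For each position i, list the targets tokens[i+1], ..., tokens[i+1+depth].

    Offset 0 is the ordinary next-token target for the main head; offsets
    1..depth are the extra targets an MTP module would be trained on.
    None marks positions past the end of the sequence (masked out of the loss).
    """
    n = len(tokens)
    return [
        [tokens[i + 1 + k] if i + 1 + k < n else None for k in range(depth + 1)]
        for i in range(n)
    ]


seq = [10, 11, 12, 13, 14]
for token, row in zip(seq, mtp_targets(seq, depth=1)):
    print(token, "->", row)
# 10 -> [11, 12]
# 11 -> [12, 13]
# 12 -> [13, 14]
# 13 -> [14, None]
# 14 -> [None, None]
```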

There are other details, such as FP8 mixed-precision training, but leading US labs have been doing FP8 training for some time.

DeepSeek V3 is also a mixture-of-experts (MoE) model: one large model composed of many smaller experts that specialize in different things, with that specialization emerging during training. One challenge for MoE models is deciding which token goes to which sub-model, or "expert." DeepSeek implemented a "gating network" that routes tokens to the right experts in a balanced way without degrading model performance. This makes routing very efficient: for each token, only a small fraction of the model's parameters is activated (and updated during training) relative to the model's overall size. This adds to training efficiency and keeps inference costs low.
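A toy top-k router makes the routing step concrete. This is a simplified sketch, not DeepSeek's implementation: the V3 report describes sigmoid affinity scores and an auxiliary-loss-free balancing scheme in which a per-expert bias, adjusted according to load, influences only which experts are selected. The sketch keeps that bias idea but uses a plain softmax for the mixing weights; all names and numbers are illustrative. Sparse routing of this kind is why V3 activates only about 37B of its 671B parameters per token.

```python
import math
from typing import List, Tuple


def route_token(logits: List[float], bias: List[float], top_k: int) -> List[Tuple[int, float]]:
    """Choose top_k experts for one token; return (expert_index, mixing_weight) pairs.

    The per-expert bias only affects which experts are selected (to steer load);
    the mixing weights are a softmax over the selected experts' raw logits.
    """
    scores = [logit + b for logit, b in zip(logits, bias)]
    chosen = sorted(range(len(scores)), key=lambda e: scores[e], reverse=True)[:top_k]
    exps = [math.exp(logits[e]) for e in chosen]
    total = sum(exps)
    return [(e, x / total) for e, x in zip(chosen, exps)]


def update_bias(bias: List[float], load: List[int], step: float = 0.01) -> List[float]:
    """Lower the bias of experts above the mean load and raise it for those below."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, n in zip(bias, load):
        if n > mean_load:
            new_bias.append(b - step)
        elif n < mean_load:
            new_bias.append(b + step)
        else:
            new_bias.append(b)
    return new_bias


logits = [2.0, 1.0, 0.5, 1.9]  # router scores for one token over 4 experts
print(route_token(logits, [0.0, 0.0, 0.0, 0.0], top_k=2))   # picks experts 0 and 3
print(route_token(logits, [-1.5, 0.0, 0.0, 0.0], top_k=2))  # overloaded expert 0 is skipped
```

Keeping the bias out of the mixing weights means load balancing changes where tokens go without directly distorting how expert outputs are combined.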

Despite concerns that the efficiency gains from mixture of experts (MoE) might reduce investment, Dario points out that the economic benefits of more capable AI models are so large that any savings are quickly reinvested into building even larger models. Rather than reducing overall investment, MoE's improved efficiency accelerates AI scaling efforts. These companies are focused on scaling models to more compute and on making them more efficient algorithmically.

R1 benefited greatly from having a strong base model (V3). Its performance is also partly due to reinforcement learning (RL). RL had two main focuses: formatting (to ensure coherent output) and helpfulness and harmlessness (to ensure the model is useful). Reasoning capabilities emerged during fine-tuning of the model on a synthetic dataset. As described in our Scaling Laws article, this is what happened with o1. Note that the R1 paper makes no mention of compute; this is because disclosing how much compute was used would show that they have more GPUs than their narrative implies. RL at this scale requires a lot of compute, especially to generate synthetic data.
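The formatting side of such an RL setup can be checked with simple rules rather than a learned model. A minimal sketch: the `<think>`/`<answer>` template is borrowed from the R1 paper's description of R1-Zero, while the 50/50 blend with a helpfulness score and every function name here are assumptions for illustration, not DeepSeek's reward code.

```python
import re

# Hypothetical template modeled on the <think>/<answer> tags described for R1-Zero.
TEMPLATE = re.compile(r"<think>.+?</think>\s*<answer>.+?</answer>", re.DOTALL)


def format_reward(completion: str) -> float:
    """Rule-based reward: 1.0 if the whole completion follows the template, else 0.0."""
    return 1.0 if TEMPLATE.fullmatch(completion.strip()) else 0.0


def total_reward(completion: str, helpfulness: float, format_weight: float = 0.5) -> float:
    """Blend the format check with a helpfulness/harmlessness score in [0, 1].

    `helpfulness` stands in for a learned reward model's output (hypothetical here).
    """
    return format_weight * format_reward(completion) + (1 - format_weight) * helpfulness


print(format_reward("<think>2 + 2 is 4</think> <answer>4</answer>"))  # 1.0
print(format_reward("The answer is 4."))                              # 0.0
```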

Additionally, some of the data DeepSeek used appears to come from OpenAI's models, which we believe will have ramifications for policy on distilling from model outputs. Such distillation is already prohibited by the terms of service, but going forward, a new trend may be some form of KYC (Know Your Customer) to stop it.

Speaking of distillation, perhaps the most interesting part of the R1 paper is the finding that small non-reasoning models can be turned into reasoning models by fine-tuning them on outputs from a reasoning model. The curated dataset contains a total of 800,000 samples, and now anyone can use R1's CoT outputs to build their own datasets and create reasoning models with them. We are likely to see more small models demonstrating reasoning capabilities, boosting the performance of small models.
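The recipe is plain supervised fine-tuning on teacher outputs. A minimal sketch of assembling such a dataset, assuming a hypothetical `teacher` callable standing in for R1 and a hypothetical correctness filter; the student model would then be fine-tuned on these prompt/completion pairs with ordinary SFT.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class SFTExample:
    prompt: str
    completion: str  # the teacher's chain of thought plus its final answer


def build_distillation_set(
    prompts: Iterable[str],
    teacher: Callable[[str], str],
    is_acceptable: Callable[[str, str], bool],
) -> List[SFTExample]:
    """Query the teacher on each prompt and keep only outputs that pass the filter."""
    examples = []
    for prompt in prompts:
        completion = teacher(prompt)
        if is_acceptable(prompt, completion):
            examples.append(SFTExample(prompt, completion))
    return examples


def ends_with_correct_sum(prompt: str, completion: str) -> bool:
    """Toy filter for prompts like "2+2": keep the sample only if the answer is right."""
    a, b = map(int, prompt.split("+"))
    return completion.strip().endswith(str(a + b))


# Canned "teacher" outputs standing in for a reasoning model; the second is wrong.
canned = {
    "2+2": "<think>2 plus 2 is 4</think> 4",
    "3+5": "<think>3 plus 5 is 9</think> 9",
}
data = build_distillation_set(canned, canned.__getitem__, ends_with_correct_sum)
print([ex.prompt for ex in data])  # ['2+2']
```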

Multi-Head Latent Attention (MLA)

MLA is a key innovation that makes DeepSeek's inference significantly cheaper. The reason is that MLA reduces the amount of KV cache required per query by about 93.3% compared to standard attention. The KV cache is a memory mechanism in Transformer models that stores data representing the context of the conversation, avoiding unnecessary recomputation.
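Back-of-the-envelope arithmetic shows where the savings come from. A minimal sketch with assumed, illustrative dimensions (60 layers, 128 heads of size 128, a 512-dimensional latent plus a 64-dimensional decoupled RoPE key, 2-byte BF16 entries): standard multi-head attention caches a key and a value per head per layer, while MLA caches one compressed latent per layer from which keys and values are re-projected. The exact percentage depends on the baseline, so this comparison against full multi-head attention does not reproduce the 93.3% figure quoted above.

```python
def mha_cache_elems_per_token(n_layers: int, n_heads: int, head_dim: int) -> int:
    """Standard multi-head attention: one K and one V vector per head, per layer."""
    return 2 * n_layers * n_heads * head_dim


def mla_cache_elems_per_token(n_layers: int, latent_dim: int, rope_dim: int) -> int:
    """MLA: one compressed KV latent plus a small decoupled RoPE key, per layer."""
    return n_layers * (latent_dim + rope_dim)


def cache_gib(elems_per_token: int, context_len: int, bytes_per_elem: int = 2) -> float:
    """KV cache for a single sequence of `context_len` tokens, in GiB."""
    return elems_per_token * context_len * bytes_per_elem / 2**30


# Assumed, illustrative model dimensions.
LAYERS, HEADS, HEAD_DIM = 60, 128, 128
LATENT_DIM, ROPE_DIM = 512, 64

mha = mha_cache_elems_per_token(LAYERS, HEADS, HEAD_DIM)
mla = mla_cache_elems_per_token(LAYERS, LATENT_DIM, ROPE_DIM)
print(f"per-token cache reduction vs. MHA: {1 - mla / mha:.1%}")  # 98.2%

# The cache grows linearly with context length, which drives the memory limit per query.
for ctx in (4_096, 32_768, 128_000):
    print(f"{ctx:>7} tokens: MHA {cache_gib(mha, ctx):6.1f} GiB, MLA {cache_gib(mla, ctx):5.2f} GiB")
```

Less cache per sequence means more concurrent sequences fit in the same GPU memory, which is how a smaller KV cache translates into less hardware per query.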

As discussed in our Scaling Laws article, the KV cache grows as the conversation context grows, creating considerable memory constraints. Drastically reducing the KV cache required per query reduces the hardware needed per query, which reduces cost. However, we believe DeepSeek is providing inference at cost to gain market share, without actually making any money. Google's Gemini Flash 2.0 Thinking is still cheaper, and Google is unlikely to be offering it at cost. MLA in particular has caught the attention of many leading US labs. MLA was released in DeepSeek V2 in May 2024. DeepSeek also gets more efficiency out of the H20 than the H100 for inference workloads, thanks to the H20's higher memory bandwidth and capacity. They have also announced a partnership with Huawei, but so far very little has been done with Ascend compute.

The implications we find most interesting are the impact on margins and what this means for the ecosystem as a whole. Below, we look at the future pricing structure across the AI industry and detail why we think DeepSeek is subsidizing prices, as well as why we see early signs of the Jevons paradox at work. We also comment on the implications for export controls, how the CCP might react as DeepSeek's dominance grows, and more.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
