DeepSeek releases the first open-source version of its V3 model, with the strongest coding capabilities of any model in China

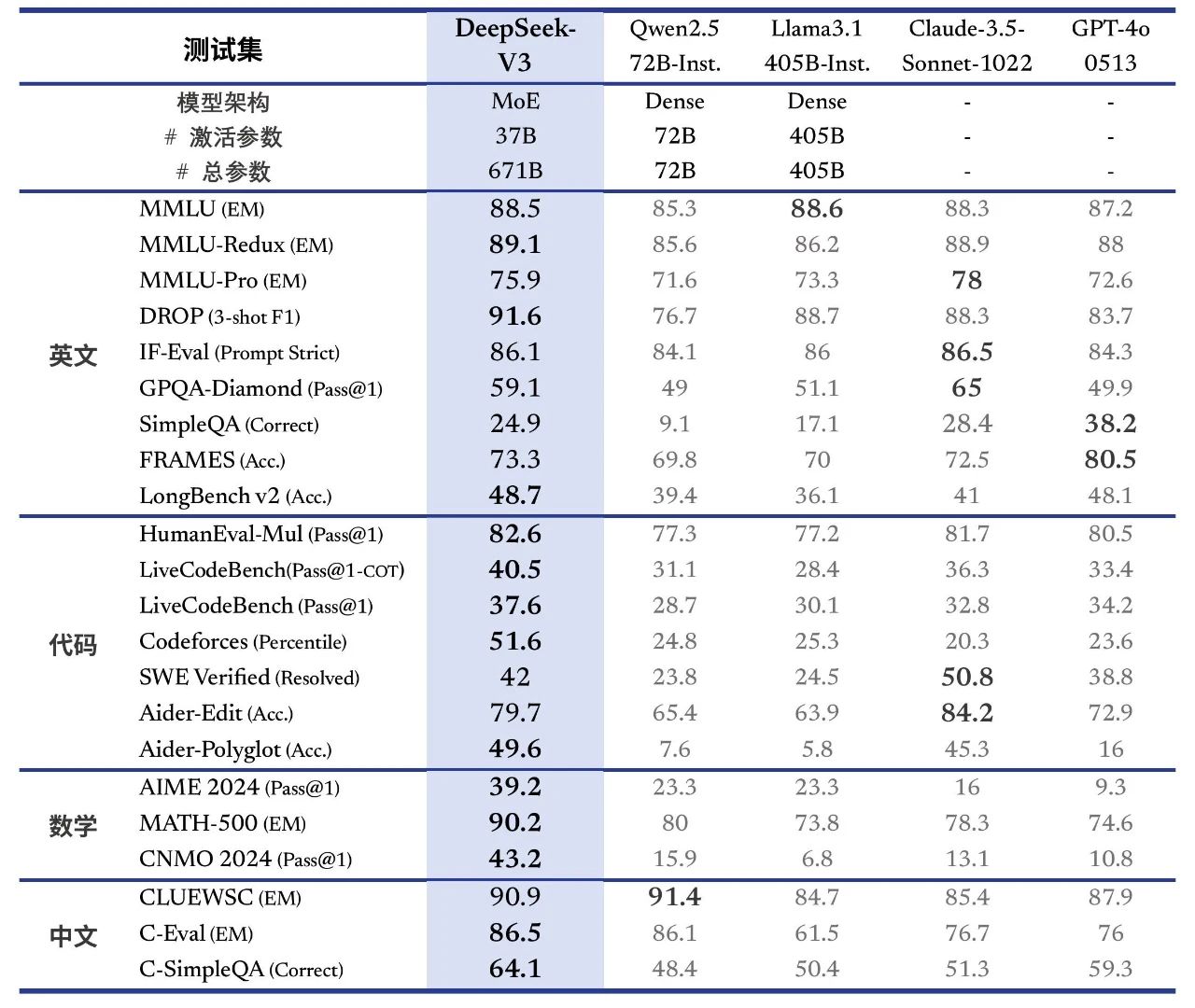

DeepSeek-V3 is a powerful Mixture-of-Experts (MoE) language model with 671 billion total parameters, of which 37 billion are activated for each token. It employs the Multi-head Latent Attention (MLA) architecture together with the well-validated DeepSeekMoE architecture. DeepSeek-V3 introduces an auxiliary-loss-free load-balancing strategy and a multi-token prediction training objective, which significantly improve model performance. It is pre-trained on 14.8 trillion diverse, high-quality tokens, followed by supervised fine-tuning and reinforcement learning stages to fully realize its capabilities.
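The auxiliary-loss-free load balancing mentioned above can be illustrated with a small simulation. This is a minimal sketch based on the description in the DeepSeek-V3 technical report, not DeepSeek's code: each expert carries a bias that is added to its affinity score only when choosing the top-k experts, while the gate weights still come from the unbiased scores. After each step, overloaded experts have their bias lowered by a step size `gamma` and underloaded experts have it raised. The function names, the sigmoid affinity, the toy sizes (8 experts, top-2), and the deliberately skewed synthetic data are illustrative assumptions. The sketch also omits shared experts and everything else a real MoE layer contains.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def route_token(logits, bias, k):
    """Select top-k experts for one token; return (expert, gate_weight) pairs."""
    scores = [sigmoid(x) for x in logits]  # token-to-expert affinity s_i
    # The bias b_i only influences WHICH experts are selected...
    chosen = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)[:k]
    # ...while the gate weights use the unbiased scores, normalized over the chosen set.
    total = sum(scores[i] for i in chosen)
    return [(i, scores[i] / total) for i in chosen]


def update_bias(bias, load, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    updated = []
    for b, n in zip(bias, load):
        if n > mean_load:
            updated.append(b - gamma)
        elif n < mean_load:
            updated.append(b + gamma)
        else:
            updated.append(b)
    return updated


def simulate(num_experts=8, k=2, tokens=256, steps=50, gamma=0.01, seed=0):
    """Route a fixed, deliberately skewed batch repeatedly and record per-expert load."""
    rng = random.Random(seed)
    # Synthetic logits: expert 0 gets a +2.0 offset so it is "popular" at the start.
    batch = [[rng.gauss(0.0, 1.0) + (2.0 if e == 0 else 0.0) for e in range(num_experts)]
             for _ in range(tokens)]
    bias = [0.0] * num_experts
    history = []
    for _ in range(steps):
        load = [0] * num_experts
        for logits in batch:
            for expert, _weight in route_token(logits, bias, k):
                load[expert] += 1
        history.append(load)
        bias = update_bias(bias, load, gamma)
    return history, bias


history, bias = simulate()
print("load at step 1: ", history[0])
print("load at step 50:", history[-1])
```

Because balancing is driven by the selection bias rather than by an extra loss term, nothing is added to the training objective. The report motivates this as a way to avoid the performance penalty that a large auxiliary loss can cause.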

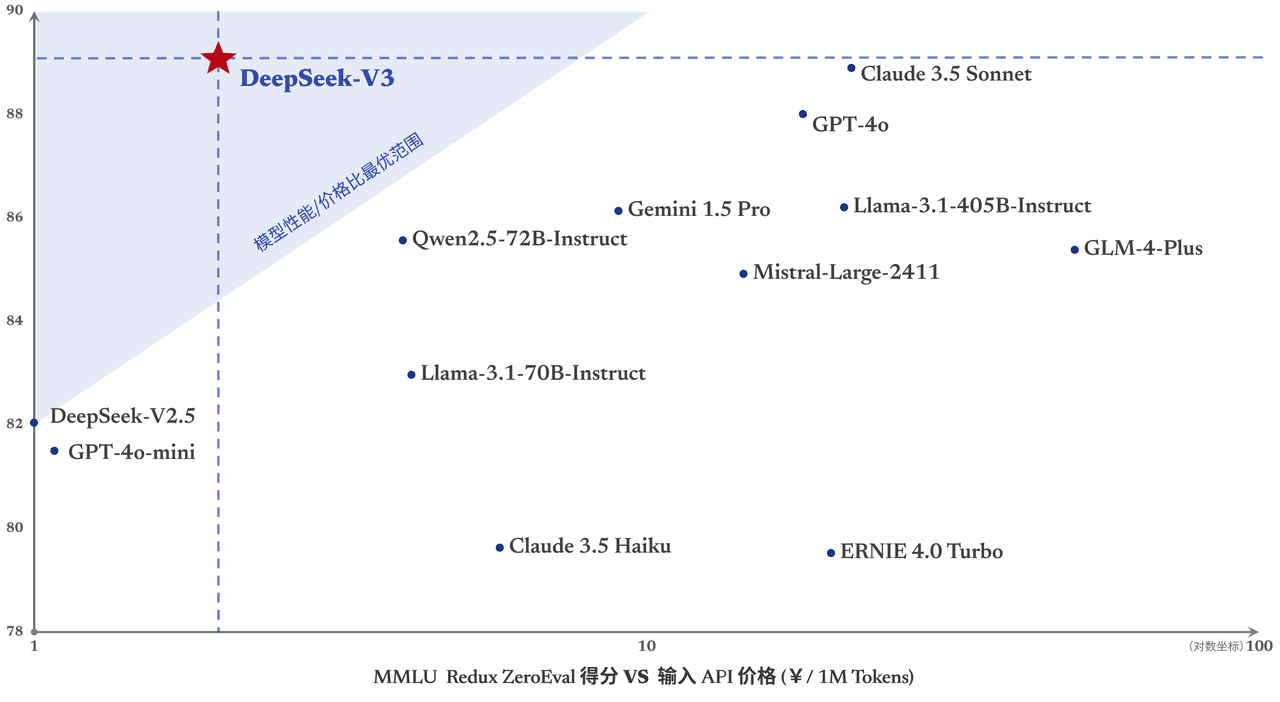

DeepSeek-V3 performs strongly across many standard benchmarks, especially on math and code tasks, making it the strongest open-source base model currently available. It also has a low training cost, and its training process was remarkably stable.

Yesterday, the first version of DeepSeek's new model series, DeepSeek-V3, was released and open-sourced at the same time. You can chat with the latest V3 model by logging in to chat.deepseek.com. The API service has been updated simultaneously, and no changes to the API configuration are required. The current version of DeepSeek-V3 does not support multimodal input or output.
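Since the API interface is unchanged, an existing request keeps working as-is. As a minimal sketch using only the Python standard library, the code below builds a chat request for DeepSeek's OpenAI-compatible endpoint. The URL `https://api.deepseek.com/chat/completions` and the model name `deepseek-chat` are assumptions based on DeepSeek's public API documentation and should be verified there; `YOUR_API_KEY` is a placeholder. The sketch only constructs the request; actually sending it requires a valid key and network access.

```python
import json
import urllib.request

# Assumed endpoint and model alias for DeepSeek's OpenAI-compatible chat API.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-chat"


def build_chat_request(api_key, user_message):
    """Build (but do not send) a POST request for a single-turn chat completion."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_chat_request("YOUR_API_KEY", "Hello, DeepSeek-V3!")
# To send it (requires a real key and network access):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read())["choices"][0]["message"]["content"])
```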

Performance on par with leading overseas closed-source models

DeepSeek-V3 is a self-developed MoE model with 671B parameters, 37B of which are activated per token, pre-trained on 14.8T tokens.
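For a sense of scale, the fraction of parameters that take part in any single token's forward pass follows directly from these two figures:

```latex
\frac{37\,\text{B (activated)}}{671\,\text{B (total)}} \approx 0.055
```

So roughly 5.5% of the weights are used per token, which is what keeps per-token compute far below that of a dense model with the same total parameter count.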

Link to paper:

https://github.com/deepseek-ai/DeepSeek-V3/blob/main/DeepSeek_V3.pdf

DeepSeek-V3 outperforms other open-source models such as Qwen2.5-72B and Llama-3.1-405B across multiple evaluations, and is on par with the world's top closed-source models, GPT-4o and Claude-3.5-Sonnet.

- Encyclopedic knowledge: On knowledge-based tasks (MMLU, MMLU-Pro, GPQA, SimpleQA), DeepSeek-V3 improves significantly over its predecessor, DeepSeek-V2.5, and comes close to the current best-performing model, Claude-3.5-Sonnet-1022.

- Long text: On long-text evaluations (DROP, FRAMES, and LongBench v2), DeepSeek-V3 outperforms the other models on average.

- Coding: DeepSeek-V3 is far ahead of all non-o1 models on the market in algorithmic coding (Codeforces), and approaches Claude-3.5-Sonnet-1022 in engineering-style coding tasks (SWE-Bench Verified).

- Math: On American math competition benchmarks (AIME 2024, MATH) and China's National High School Mathematics League (CNMO 2024), DeepSeek-V3 substantially outperforms all open-source and closed-source models.

- Chinese proficiency: DeepSeek-V3 performs similarly to Qwen2.5-72B on evaluation sets such as the educational assessment C-Eval and pronoun disambiguation, but leads on the factual-knowledge benchmark C-SimpleQA.

Up to 3x faster generation

Through algorithmic and engineering innovations, DeepSeek-V3 raises its generation speed from 20 TPS to 60 TPS (tokens per second), 3x that of the V2.5 model, giving users a faster and smoother experience.
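As a back-of-the-envelope illustration (the 1,000-token response length is a hypothetical figure, and time to first token is ignored), the difference in generation time works out to:

```latex
t_{\text{V2.5}} = \frac{1000\ \text{tokens}}{20\ \text{tokens/s}} = 50\ \text{s},
\qquad
t_{\text{V3}} = \frac{1000\ \text{tokens}}{60\ \text{tokens/s}} \approx 16.7\ \text{s}
```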

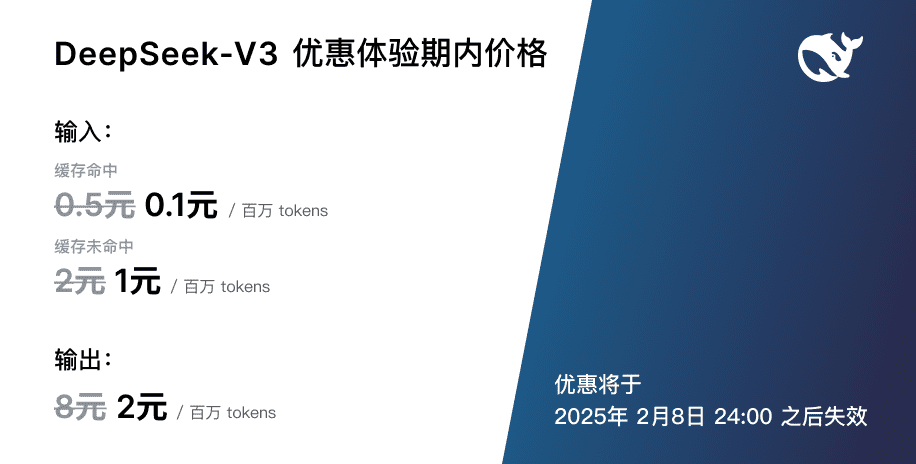

API Service Price Adjustment

As the more powerful and faster DeepSeek-V3 goes live, our model API pricing will be adjusted to RMB 0.5 per million input tokens (cache hit) / RMB 2 (cache miss) and RMB 8 per million output tokens, so that we can continue to provide everyone with better model services.

At the same time, we are offering the new model a 45-day promotional pricing period: from now until February 8, 2025, the DeepSeek-V3 API will keep the familiar prices of RMB 0.1 per million input tokens (cache hit) / RMB 1 (cache miss) and RMB 2 per million output tokens. These discounted rates are available both to existing users and to new users who register during this period.
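To compare the standard and promotional price schedules, a small calculator helps. This is a hypothetical sketch: the helper names and the example workload (1M cache-hit input tokens, 2M cache-miss input tokens, 1M output tokens) are made up for illustration, and the prices are the per-million-token figures quoted in the announcement, in its currency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices, in the currency quoted in the announcement."""
    input_cache_hit: float
    input_cache_miss: float
    output: float


STANDARD = Pricing(input_cache_hit=0.5, input_cache_miss=2.0, output=8.0)
# Promotional rates, valid until February 8, 2025 per the announcement.
PROMO = Pricing(input_cache_hit=0.1, input_cache_miss=1.0, output=2.0)


def workload_cost(p: Pricing, hit_tokens: int, miss_tokens: int, output_tokens: int) -> float:
    """Total cost of a workload, splitting input tokens by cache hit/miss."""
    return (hit_tokens * p.input_cache_hit
            + miss_tokens * p.input_cache_miss
            + output_tokens * p.output) / 1_000_000


# Hypothetical workload.
standard = workload_cost(STANDARD, 1_000_000, 2_000_000, 1_000_000)
promo = workload_cost(PROMO, 1_000_000, 2_000_000, 1_000_000)
print(f"standard: {standard:.2f}, promotional: {promo:.2f}")
```

Under these assumptions the workload costs 12.5 at the standard rates versus 4.1 at the promotional rates; since output tokens carry the highest per-token price, output-heavy workloads benefit most from the promotional period.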

Open source weights and local deployment

DeepSeek-V3 is trained in FP8, and its native FP8 weights are open-sourced. Thanks to support from the open-source community, SGLang and LMDeploy supported native FP8 inference for the V3 model from day one, while TensorRT-LLM and MindIE support BF16 inference. We also provide a script for converting the weights from FP8 to BF16, making it easier for the community to adapt the model and expand its use cases.
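Conceptually, converting FP8 weights to BF16 means decoding each FP8 value, multiplying it by a scale factor, and rounding the result to BF16. The pure-Python sketch below illustrates that idea and is not DeepSeek's conversion script. It assumes the E4M3 FP8 format (1 sign bit, 4 exponent bits with bias 7, 3 mantissa bits, no infinities) and one scale factor per 128 x 128 weight block, stored as an inverse scale that is multiplied back in. These details, the block size, and all function names are assumptions to be checked against the official repository; a real conversion would operate on tensors with a framework such as PyTorch rather than on Python lists.

```python
import struct

BLOCK = 128  # assumed edge length of each square block sharing one scale factor


def fp8_e4m3fn_to_float(byte):
    """Decode one FP8 E4M3FN byte (1 sign, 4 exponent, 3 mantissa bits, bias 7)."""
    sign = -1.0 if byte & 0x80 else 1.0
    exp = (byte >> 3) & 0x0F
    man = byte & 0x07
    if exp == 0x0F and man == 0x07:
        return float("nan")  # E4M3FN has NaN but no infinities
    if exp == 0:
        return sign * (man / 8.0) * 2.0 ** -6  # subnormal range
    return sign * (1.0 + man / 8.0) * 2.0 ** (exp - 7)


def float_to_bf16_bits(x):
    """Round a float to bfloat16 (round-to-nearest-even) and return its 16-bit pattern."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    if (bits & 0x7F800000) == 0x7F800000 and (bits & 0x007FFFFF):
        return 0x7FC0  # canonical quiet NaN
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_float(b):
    """Widen a bfloat16 bit pattern back to a Python float."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


def dequant_to_bf16(weight_fp8, scale_inv):
    """weight_fp8: 2-D list of FP8 bytes; scale_inv: one scale per BLOCK x BLOCK tile."""
    out = []
    for i, row in enumerate(weight_fp8):
        out.append([
            float_to_bf16_bits(fp8_e4m3fn_to_float(byte) * scale_inv[i // BLOCK][j // BLOCK])
            for j, byte in enumerate(row)
        ])
    return out


# Toy example: a 1 x 130 FP8 row (byte 0x38 decodes to 1.0) spanning two
# column blocks with hypothetical scales 1.0 and 2.0.
bf16 = dequant_to_bf16([[0x38] * 130], [[1.0, 2.0]])
print([bf16_bits_to_float(b) for b in bf16[0][126:130]])  # [1.0, 1.0, 2.0, 2.0]
```

Scaling per block rather than per tensor lets each tile use the full, narrow FP8 range, which is why the scale lookup is indexed by `i // BLOCK` and `j // BLOCK`.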

Model weights and more information on local deployment are available at:

https://huggingface.co/deepseek-ai/DeepSeek-V3-Base

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
