DeepRetrieval: efficient information retrieval query generation driven by reinforcement learning

Abstract

Information retrieval systems are critical for efficient access to large document collections. Recent approaches use large language models (LLMs) to improve retrieval performance through query enhancement, but they typically rely on expensive supervised learning or distillation techniques that require significant computational resources and manually labeled data. In this paper, we introduce DeepRetrieval, a novel reinforcement learning-based approach that trains LLMs for query enhancement directly through trial and error, without the need for supervised data. By using retrieval recall as the reward signal, our system learns to generate effective queries that maximize document retrieval performance. Our preliminary results show that DeepRetrieval achieves a recall of 60.82% on the publication search task and 70.84% on the trial search task while using a smaller model (3B vs. 7B parameters) and no supervised data. These results suggest that our reinforcement learning approach provides a more efficient and effective paradigm for information retrieval that may change the landscape of document retrieval systems.

Author: Chengjiang Peng (Department of Computer Science, UIUC)

Original: https://arxiv.org/pdf/2503.00223

Code address: https://github.com/pat-jj/DeepRetrieval

1. Introduction

Information Retrieval (IR) systems play a crucial role in helping users find relevant documents in large-scale document collections. Traditional IR approaches rely on keyword matching and statistical methods, which often struggle to understand the semantic meaning behind user queries. Recent advances in Large Language Models (LLMs) have shown promise in addressing these limitations through query augmentation (Bonifacio et al., 2022), where LLMs extend or reformulate user queries to better capture relevant documents.

However, current LLM-based query enhancement methods typically employ supervised learning or distillation techniques, which have several significant limitations:

- They require expensive computational resources to generate training data, often costing thousands of dollars.

- The quality of the enhanced queries depends on the quality of the supervision data.

- They rely on larger models to generate data for smaller models, which introduces potential biases and limitations.

In this work, we introduce DeepRetrieval, a novel approach that uses reinforcement learning (RL) to train LLMs for query enhancement. Unlike approaches that rely on supervised data, DeepRetrieval allows models to learn through direct trial and error, using retrieval recall as a reward signal. This approach has several key advantages:

- No need for expensive supervised data generation

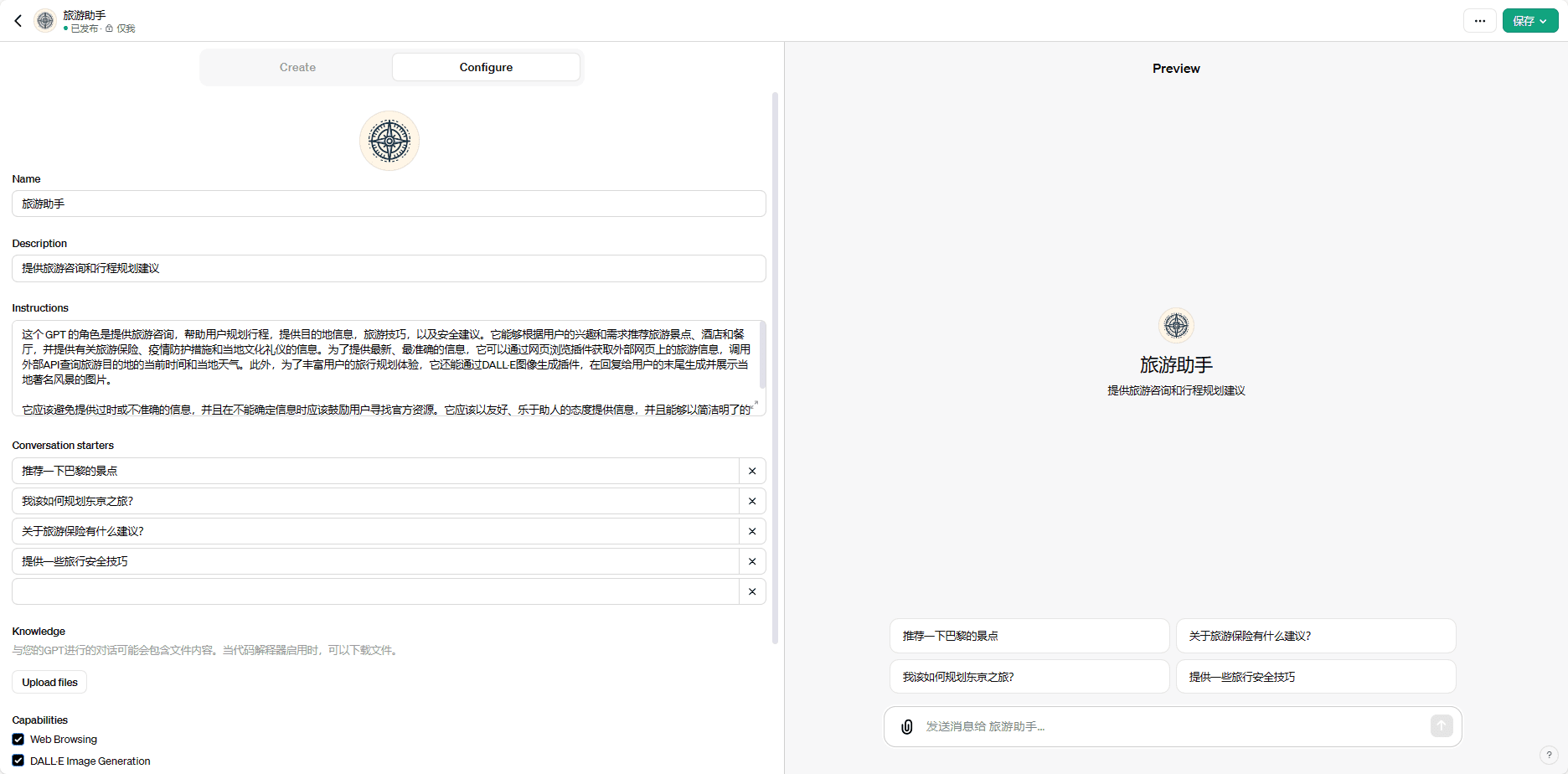

Figure 1: DeepRetrieval: the LLM generates enhanced queries for retrieving documents. Recall is calculated and used as a reward to update the model.

Figure 1: DeepRetrieval: the LLM generates enhanced queries for retrieving documents. Recall is calculated and used as a reward to update the model.

- Optimize directly for the end goal (recall performance)

- Ability to learn effective strategies without human demonstration

Our preliminary results show that DeepRetrieval significantly outperforms existing state-of-the-art methods, including the recent LEADS system (Wang et al., 2025), achieving a recall of 60.82% on the publication search task and 70.84% on the trial search task. Notably, these results were obtained with a smaller model (3B parameters) than LEADS (7B parameters) and without any supervised data, which highlights the efficiency and effectiveness of our approach.

2. Methodology

DeepRetrieval builds on recent advances in reinforcement learning for LLMs and applies this paradigm to a specific information retrieval task: query enhancement. Our approach is directly inspired by DeepSeek-R1-Zero (DeepSeek-AI et al., 2025), which demonstrated that RL can be used to train models with advanced reasoning capabilities without relying on supervised data. Figure 1 illustrates the overall architecture of our system.

2.1 Problem formulation

Let D be a collection of documents and q a user query. The goal of an information retrieval system is to return a subset of documents Dq ⊂ D that are relevant to q. In query augmentation, the original query q is transformed into an augmented query q' that is more effective at retrieving relevant documents.

Traditionally, this augmentation process is learned through supervised learning, where (q, q') pairs are provided as training data. In contrast, our approach uses reinforcement learning, where the model learns to generate effective augmented queries through trial and error, similar to how DeepSeek-R1-Zero learns to solve reasoning problems.

2.2 Reinforcement learning framework

We formulate the query enhancement task as a reinforcement learning problem:

- State: the original user query q

- Action: the augmented query q' generated by the model

- Reward: the recall achieved when retrieving documents with q'

The model is trained to maximize the expected reward, i.e., to generate augmented queries that achieve high recall. This direct optimization of the final objective differs from supervised approaches, which optimize for similarity to augmentations written by humans or generated by larger models.

2.3 Model architecture and output structure

We use Qwen-2.5-3B-Instruct (Yang et al., 2024) as the base LLM for our system. The model takes a user query as input and generates an augmented query. Its output is structured: the model first writes its reasoning steps in a <think> section and then gives the final augmented query, in JSON format, in an <answer> section. This structured generation allows the model to consider all aspects of the query and explore different enhancement strategies before finalizing its response.

In our preliminary experiments, we focused on medical literature search using specialized prompts based on the PICO framework (see Appendix A for details). To be compatible with the search system, the query in the JSON output must group terms using Boolean operators (AND, OR) and appropriate parentheses. However, our approach is generic and can be applied to traditional IR datasets with appropriate modifications to the prompt and query formats.

2.4 Reward mechanism

Our reward function aims to directly optimize the retrieval performance. The process is as follows:

- The model generates enhanced queries in response to PICO framework queries.

- The enhanced queries are executed against the document collection (PubMed or ClinicalTrials.gov).

- Recall is calculated as the percentage of relevant documents retrieved.

- A composite reward is computed from two components:

  - Formatting correctness (valid JSON structure, proper tags)

  - Retrieval recall, with higher recall earning a higher reward

Specifically, our reward function uses a recall-based hierarchical scoring system as shown in Table 1.

| Recall | ≥ 0.7 | ≥ 0.5 | ≥ 0.4 | ≥ 0.3 | ≥ 0.1 | ≥ 0.05 | < 0.05 |
|---|---|---|---|---|---|---|---|
| Reward | +5.0 | +4.0 | +3.0 | +1.0 | +0.5 | +0.1 | -3.5 |

Table 1: Reward tiers based on recall performance. Higher recall values receive significantly larger rewards, incentivizing the model to generate more effective queries.

In addition, correct formatting receives +1 point, while incorrect formatting receives -4 points. Importantly, if the format is incorrect (missing tags, invalid JSON structure, etc.), the recall reward is not computed at all and only the format penalty is incurred. This reward structure strongly encourages the model to generate well-formatted queries that maximize recall while adhering to the desired output format.
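The tiers in Table 1 and the format rule above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function and constant names are hypothetical, and it assumes the format score and the recall tier are simply summed when the format is correct.

```python
# Recall tiers from Table 1, highest threshold first: (minimum recall, reward).
RECALL_TIERS = [
    (0.7, 5.0),
    (0.5, 4.0),
    (0.4, 3.0),
    (0.3, 1.0),
    (0.1, 0.5),
    (0.05, 0.1),
]
LOW_RECALL_REWARD = -3.5  # recall below 0.05
FORMAT_BONUS = 1.0        # correct formatting
FORMAT_PENALTY = -4.0     # incorrect formatting


def recall_reward(recall: float) -> float:
    """Map a recall value in [0, 1] to its tiered reward."""
    for threshold, reward in RECALL_TIERS:
        if recall >= threshold:
            return reward
    return LOW_RECALL_REWARD


def composite_reward(format_ok: bool, recall: float) -> float:
    """Combine the format score and the recall tier.

    Assumption: the two parts are added together. If the format is wrong,
    only the format penalty applies and recall is ignored.
    """
    if not format_ok:
        return FORMAT_PENALTY
    return FORMAT_BONUS + recall_reward(recall)
```

Under these assumptions, a well-formatted query with recall 0.75 scores 6.0, while a badly formatted response scores -4.0 regardless of what it would have retrieved.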

2.5 Training process

Our training process follows these steps:

- Initialize the model with the pre-trained weights.

- For each query in the training set:

  - Generate an enhanced query.

  - Execute the query against the search system.

  - Calculate the recall (the percentage of relevant documents retrieved).

  - Use the recall-based reward to update the model.

- Repeat until convergence.

This process allows the model to learn effective query enhancement strategies directly from retrieval performance without explicit supervision. The model gradually improves its ability to convert PICO framework queries into effective search terms that maximize the recall of relevant medical literature.
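The reward-collection part of this loop can be sketched as follows. This is only an illustration under stated assumptions: `generate`, `search`, and `reward_fn` are hypothetical callables standing in for the policy model, the search system, and the reward function, and the policy update itself (PPO in the paper) is left to the RL library and not shown.

```python
from typing import Callable, Iterable, List, Set, Tuple


def score_rollouts(
    queries: List[Tuple[str, Set[str]]],
    generate: Callable[[str], str],
    search: Callable[[str], Iterable[str]],
    reward_fn: Callable[[float], float],
) -> List[Tuple[str, float, float]]:
    """Run one pass over (query, relevant_ids) pairs and score each rollout.

    Returns (augmented_query, recall, reward) triples that an RL trainer
    could consume; no model weights are updated here.
    """
    results = []
    for original_query, relevant_ids in queries:
        augmented = generate(original_query)   # model proposes a query
        retrieved = set(search(augmented))     # run it against the search system
        if relevant_ids:
            recall = len(retrieved & relevant_ids) / len(relevant_ids)
        else:
            recall = 0.0                       # assumption: no ground truth means 0
        results.append((augmented, recall, reward_fn(recall)))
    return results
```

Keeping the generator, the search backend, and the reward as injected callables makes it easy to test the loop with stubs before wiring in a real model or the PubMed and ClinicalTrials.gov search systems.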

3. Experiments

3.1 Datasets

We evaluate our approach on two medical literature search tasks:

- Publication search: Retrieve relevant medical publications from PubMed based on user queries expressed in the PICO framework.

- Trial search: Retrieve relevant clinical trials from ClinicalTrials.gov based on a similar PICO framework query.

These datasets are particularly challenging for information retrieval systems because of the specialized terminology and complex relationships in the medical literature. For each query, we have a set of ground-truth relevant documents (identified by their PMIDs) that the augmented query should ideally retrieve.

3.2 Evaluation metrics

We use recall as our primary evaluation metric, which measures the proportion of relevant documents retrieved. Specifically, we report:

- Recall (publication search): Percentage of relevant publications retrieved.

- Recall (trial search): Percentage of relevant clinical trials retrieved.

3.3 Baselines
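As a concrete reading of this metric, recall for a single query is the fraction of ground-truth documents that appear among the retrieved ones. The sketch below uses a hypothetical function name and assumes that a query with no ground-truth documents scores 0.0; the source does not say how that case is handled.

```python
from typing import Iterable


def recall(retrieved_ids: Iterable[str], relevant_ids: Iterable[str]) -> float:
    """Fraction of relevant documents that were retrieved, in [0, 1]."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0  # assumption: undefined recall is treated as 0
    return len(relevant & set(retrieved_ids)) / len(relevant)
```

For example, retrieving documents 1, 2, and 3 when the relevant set is 2, 3, 4, and 5 gives a recall of 0.5, since two of the four relevant documents were found.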

We compare our approach to several baselines:

- GPT-4o: Various configurations (zero-shot, few-shot, ICL, ICL + few-shot).

- GPT-3.5: Various configurations (zero-shot, few-shot, ICL, ICL + few-shot).

- Haiku-3: Various configurations (zero-shot, few-shot, ICL, ICL + few-shot).

- Mistral-7B (Jiang et al., 2023): Zero-shot configuration.

- LEADS (Wang et al., 2025): A state-of-the-art medical literature search method that trains Mistral-7B via distillation.

3.4 Implementation details

We implemented DeepRetrieval using the VERL framework, an open-source implementation of the HybridFlow RLHF framework (Sheng et al., 2024).

Our training configuration uses Proximal Policy Optimization (PPO) with the following key parameters:

- Base model: Qwen-2.5-3B-Instruct (Yang et al., 2024).

- PPO mini-batch size: 16.

- PPO micro-batch size: 8.

- Learning rate: actor 1e-6, critic 1e-5.

- KL coefficient: 0.001.

- Maximum sequence length: 500 tokens each for prompts and responses.

Figure 2: Training dynamics of DeepRetrieval. Recall calculation is based on PubMed searches during training.

We trained the model on two NVIDIA A100 80GB PCIe GPUs using the FSDP strategy with gradient checkpointing enabled to reduce memory usage. Training ran for 5 epochs.

As shown in Figure 2, the training dynamics show a steady improvement in performance metrics as training progresses. The average reward (top left) shows a consistent upward trend, starting with negative values but quickly changing to positive values and continuing to improve throughout training. At the same time, the incorrect answer ratio (top center) and the formatting error ratio (top right) decrease dramatically, indicating that the model is learning to generate well-structured queries to retrieve relevant documents.

The most notable improvement is the consistent increase across all recall thresholds. The proportion of queries reaching high recall values (≥0.5, ≥0.7) increased steadily, with the highest recall tier (≥0.7) growing from near zero to about 0.25 by the end of training. The mid-range recall tiers (≥0.4, ≥0.3) showed even stronger growth, reaching about 0.6-0.7, while the lower recall thresholds (≥0.1, ≥0.05) rose quickly and stabilized around 0.8-0.9. This progression shows how reinforcement learning incrementally improves the model's ability to generate effective query enhancements by directly optimizing retrieval performance.

4. Results

4.1 Main results

Table 2 shows the main results of the experiments. DeepRetrieval achieved a recall of 60.82% on the publication search task and 70.84% on the trial search task, significantly outperforming all baselines, including the state-of-the-art LEADS system.

4.2 Analysis

Several key observations emerge from our results:

- Superior performance: DeepRetrieval outperforms LEADS by a large margin on both the publication search task (60.82% vs. 24.68%) and the trial search task (70.84% vs. 32.11%), despite using a smaller model (3B vs. 7B parameters).

- Cost-effectiveness: Unlike LEADS, which requires expensive distillation (estimated at over $10,000 for training data generation), DeepRetrieval does not require supervised data, making it significantly more cost-effective.

- Generalizability: Consistent performance on both the publication and trial search tasks suggests that our approach generalizes well across different retrieval scenarios.

- Effectiveness of structured generation: Using the <think>/<answer> structure, the model is able to reason through complex queries before finalizing its response, which improves overall quality.

5. Discussion

5.1 Why reinforcement learning works

DeepRetrieval's superior performance can be attributed to several factors:

- Direct optimization: By directly optimizing recall, the model learns to generate queries that are effective for retrieval, rather than queries that match some predefined pattern.

- Exploration: The reinforcement learning framework allows the model to explore a wide range of query enhancement strategies, potentially uncovering effective methods that are not present in supervised data.

- Adaptive learning: Instead of a one-size-fits-all approach, the model can adapt its enhancement strategy to the specific characteristics of the query and the document collection.

- Structured reasoning: A two-stage generation approach with separate think and answer components allows the model to work through the problem space before committing to the final query.

5.2 Limitations and future work

While our preliminary results are promising, there are still some limitations and directions for future work:

- Evaluation on classical IR datasets: Our current experiments focus on medical literature retrieval using the PICO framework. A key next step is to evaluate DeepRetrieval on standard IR benchmarks (e.g., MS MARCO, TREC, and BEIR) to test its effectiveness in more general retrieval scenarios.

- Comparison with more advanced methods: Additional comparisons with recent query enhancement methods will further validate our findings.

- Model scaling: Examining how performance varies with larger models would provide insight into the trade-off between model size and retrieval performance.

- Reward engineering: Exploring more complex reward functions that incorporate metrics other than recall (e.g., precision, nDCG) may lead to further improvements.

- Integration with the retrieval pipeline: Exploring how DeepRetrieval can be integrated into existing retrieval pipelines, including hybrid approaches that combine neural and traditional retrieval methods.

6. Conclusion

In this paper, we introduce DeepRetrieval, a novel reinforcement learning-based query enhancement method for information retrieval. By training a 3B-parameter language model to directly optimize retrieval recall, we achieve state-of-the-art performance on medical literature retrieval tasks, significantly outperforming existing methods that rely on supervised learning or distillation.

The key innovation of our approach is its ability to learn effective query enhancement strategies through trial and error without the need for expensive supervised data. This makes DeepRetrieval not only more efficient, but also more cost-effective than existing methods.

Our results show that reinforcement learning offers a promising paradigm for information retrieval that may change the landscape of document retrieval systems. We believe that this approach can be extended to other information retrieval tasks and domains, providing a generalized framework for improving retrieval performance for a variety of applications.

Appendix A PICO Prompt

In our medical literature search experiments, we used the following specialized input prompts:

The Assistant is a clinical specialist. He is conducting research and doing a medical literature review. His task is to create query terms for a search URL to find relevant literature on PubMed or ClinicalTrials.gov.

The research is defined using the PICO framework:

P: Patient, Problem, or Population - Who or what is being studied?

I: Interventions - What are the main interventions or exposure factors considered?

C: Control - What is the intervention compared to?

O: Outcomes - What are the relevant findings or measured effects?

The Assistant should show his thought process within <think> </think> tags.

The Assistant should return the final answer within <answer> </answer> tags and use JSON formatting, for example:

<think>

[Thinking process]

</think>

<answer>

{

"query":"...."

}

</answer>

Note: Queries should use Boolean operators (AND, OR) as well as parentheses to group terms appropriately.

This specialized input prompt is specifically for medical literature retrieval, but can be applied to other information retrieval (IR) domains by modifying the task description and query structure guidance.
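To make the expected output format concrete, the following sketch checks a model response and extracts the query string. It is illustrative only: the function name is hypothetical, the exact validation rules used in the paper are not given in the source, and the tag names `<think>` and `<answer>` are assumed from the prompt above.

```python
import json
import re
from typing import Optional

# Assumed layout: a <think> block followed by an <answer> block holding JSON.
RESPONSE_RE = re.compile(
    r"<think>(.*?)</think>\s*<answer>(.*?)</answer>", re.DOTALL
)


def extract_query(response: str) -> Optional[str]:
    """Return the "query" string from a well-formed response, else None."""
    match = RESPONSE_RE.search(response)
    if match is None:
        return None  # missing or misordered tags
    try:
        payload = json.loads(match.group(2))
    except json.JSONDecodeError:
        return None  # answer section is not valid JSON
    if not isinstance(payload, dict):
        return None
    query = payload.get("query")
    if not isinstance(query, str) or not query.strip():
        return None  # "query" key missing, wrong type, or empty
    return query
```

A `None` result corresponds to the incorrect-format case, where only the format penalty would apply; a returned string can be passed on to the search system.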
