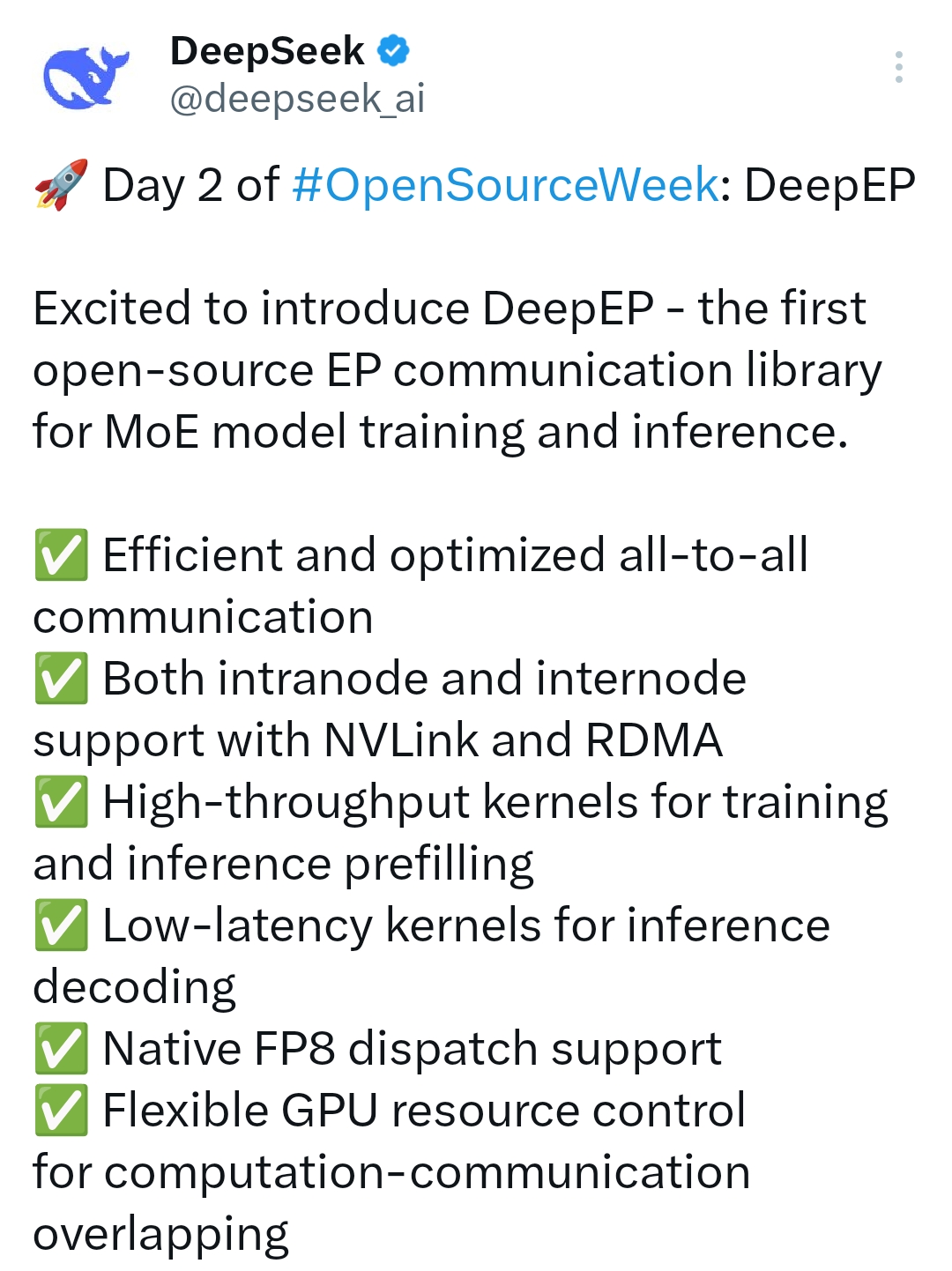

DeepGEMM: An Open Source Library with Efficient Support for FP8 Matrix Operations (DeepSeek Open Source Week Day 3)

General Introduction

DeepGEMM is an open-source FP8 GEMM (general matrix multiplication) library developed by the DeepSeek team, focused on efficient matrix operations. It is designed specifically for the Tensor Cores of the NVIDIA Hopper architecture and supports both normal GEMM and the grouped GEMM used by Mixture-of-Experts (MoE) models. The library is written in CUDA and compiles its kernels at runtime with a lightweight Just-In-Time (JIT) module, so no compilation is needed at install time, which greatly simplifies deployment. DeepGEMM delivers strong performance while keeping the code clean, reaching over 1350 TFLOPS of FP8 compute on Hopper GPUs. It is suitable for both model training and inference acceleration, and because it is open source and compact, it is also a good resource for learning FP8 matrix optimization.

Feature List

- Supports FP8 matrix operations: Provides efficient FP8 general matrix multiplication (GEMM) for high-performance computing scenarios.

- MoE model optimization: Supports grouped GEMM for Mixture-of-Experts models. Grouping applies only along the M axis, which suits the case where all experts share the same shape.

- Just-In-Time (JIT) compilation: Compiles kernels at runtime to adapt to different hardware environments, with no pre-compilation step.

- High performance: Achieves FP8 compute throughput of over 1350 TFLOPS on NVIDIA Hopper GPUs.

- Simple code design: About 300 lines of core code, easy to study and extend.

- High compatibility: Supports both normal GEMM and masked grouped GEMM, covering a variety of inference scenarios.

- Open source and free: Released under the MIT License for research and commercial use.
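To illustrate the JIT idea from the list above, here is a minimal sketch of compile-on-first-use caching in plain Python. It is not DeepGEMM's implementation: `KernelCache`, `build_kernel`, and the shape key are hypothetical names chosen for illustration, and the "kernel" is an ordinary Python closure standing in for compiled GPU code.

```python
from typing import Callable, Dict, List, Tuple

Matrix = List[List[float]]
ShapeKey = Tuple[int, int, int]  # (M, N, K)


def build_kernel(key: ShapeKey) -> Callable[[Matrix, Matrix], Matrix]:
    """Hypothetical stand-in for an expensive compile step for one shape."""
    m, n, k = key

    def kernel(a: Matrix, b: Matrix) -> Matrix:
        # Plain triple loop; a real JIT would emit GPU code with M, N, K baked in.
        return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
                for i in range(m)]

    return kernel


class KernelCache:
    """Builds a kernel the first time a shape is seen, then reuses it."""

    def __init__(self) -> None:
        self._kernels: Dict[ShapeKey, Callable[[Matrix, Matrix], Matrix]] = {}
        self.compile_count = 0

    def get(self, key: ShapeKey) -> Callable[[Matrix, Matrix], Matrix]:
        if key not in self._kernels:
            self._kernels[key] = build_kernel(key)
            self.compile_count += 1
        return self._kernels[key]


cache = KernelCache()
a = [[1.0, 2.0], [3.0, 4.0]]
b = [[5.0, 6.0], [7.0, 8.0]]
first = cache.get((2, 2, 2))(a, b)
second = cache.get((2, 2, 2))(a, b)  # same shape: the cached kernel is reused
print(first, cache.compile_count)
```

The design point is that the build cost is paid once per distinct shape at runtime, which is why nothing has to be compiled at install time.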

Usage Guide

DeepGEMM is an open-source matrix computation library aimed at developers, primarily those with some CUDA programming and machine learning background. The following guide will help you get started quickly and integrate it into your project.

Installation process

DeepGEMM does not require a complex pre-compilation process; installing it and configuring the runtime environment takes only a few steps:

1. Prepare the environment:

- System requirements: An NVIDIA Hopper architecture GPU (e.g. H100).

- Software dependencies: Install the CUDA Toolkit and Python (3.8+). Hopper FP8 kernels need a CUDA 12.x toolkit; the project README lists CUDA 12.3 or later (12.8 or later recommended), so check it for the current minimum.

- Hardware support: Make sure your device is equipped with an NVIDIA GPU with at least 40GB of video memory.

2. Clone the repository:

Run the following commands in a terminal to download the DeepGEMM repository locally:

git clone https://github.com/deepseek-ai/DeepGEMM.git

cd DeepGEMM

3. Install the dependencies:

Use Python's package management tools to install the required dependencies:

pip install torch numpy

DeepGEMM itself requires no additional compilation: it relies on JIT compilation, and all kernels are generated automatically at runtime.

4. Verify the installation:

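Run the test scripts provided in the repository to ensure that the environment is configured correctly (at the time of the initial release they were located under tests/; adjust the path if the layout has changed):

python tests/test_core.py

If the tests finish without errors, the installation is successful.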
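Before running the tests, it can help to confirm the requirements from step 1 programmatically. The following is a minimal sketch under stated assumptions: `is_hopper`, `detect_capability`, and `environment_report` are hypothetical helper names, and the check relies on Hopper GPUs such as the H100 reporting CUDA compute capability 9.0. PyTorch is imported lazily so the script still runs where it is not installed.

```python
import sys
from typing import Optional, Tuple


def is_hopper(capability: Tuple[int, int]) -> bool:
    """Hopper GPUs such as the H100 report CUDA compute capability 9.0."""
    return capability == (9, 0)


def detect_capability() -> Optional[Tuple[int, int]]:
    """Return the first GPU's compute capability, or None if unavailable."""
    try:
        import torch  # imported lazily so the script runs without PyTorch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return tuple(torch.cuda.get_device_capability(0))


def environment_report() -> str:
    """Summarize whether this machine meets the basic requirements."""
    if sys.version_info < (3, 8):
        return "Python 3.8 or newer is required"
    capability = detect_capability()
    if capability is None:
        return "no CUDA-capable GPU detected (or PyTorch is not installed)"
    if not is_hopper(capability):
        return f"GPU compute capability {capability} is not Hopper (9.0)"
    return "environment looks suitable"


print(environment_report())
```

This only covers the Python version and GPU architecture; the CUDA Toolkit version still needs to be checked separately, for example with `nvcc --version`.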

Main Functions

1. Performing basic FP8 GEMM operations

DeepGEMM provides an easy-to-use interface for performing ungrouped FP8 matrix multiplication:

- Operational Steps:

- Import libraries and functions:

import torch

from deep_gemm import gemm_fp8_fp8_bf16_nt

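- Prepare the input data (matrices A and B, must be in FP8 format):

A = torch.randn(1024, 512, device="cuda").to(torch.float8_e4m3fn)  # randn cannot sample FP8 directly, so cast afterwards

B = torch.randn(1024, 512, device="cuda").to(torch.float8_e4m3fn)  # "nt" layout: B is stored transposed, as [N, K]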

- Call the function to perform matrix multiplication:

C = gemm_fp8_fp8_bf16_nt(A, B)

print(C)

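- Caveats:

- The input matrices must reside on the GPU and be in FP8 format (E4M3 or E5M2).

- The output is in BF16 format, suitable for subsequent computation or storage.

- The call shown above is simplified. In the released library, each FP8 operand is passed together with its scaling factors and the BF16 output tensor is preallocated and passed in, so consult the README for the exact signature of the version you install.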
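The `nt` suffix in the function name refers to the operand layout: the left operand is non-transposed with shape [M, K], and the right operand is stored transposed with shape [N, K]. The sketch below is a plain-Python reference for what such a GEMM computes, useful for checking shapes on small inputs. `gemm_nt_reference` is a hypothetical helper, not part of DeepGEMM, and it ignores FP8 quantization entirely.

```python
from typing import List

Matrix = List[List[float]]


def gemm_nt_reference(a: Matrix, b: Matrix) -> Matrix:
    """Compute C = A @ B^T for A of shape [M, K] and B of shape [N, K]."""
    k = len(a[0])
    if any(len(row) != k for row in a + b):
        raise ValueError("A and B must share the same K dimension")
    # C[i][j] is the dot product of row i of A with row j of B.
    return [[sum(x * y for x, y in zip(a_row, b_row)) for b_row in b]
            for a_row in a]


a = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]            # M=2, K=3
b = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0],
     [1.0, 1.0, 1.0]]            # N=4, K=3
c = gemm_nt_reference(a, b)       # result has shape [M, N] = [2, 4]
print(c)
```

Because both operands are indexed along K in their last dimension, every output element is a dot product of two rows.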

2. Grouped GEMM for MoE models

For users who need to work with MoE models, DeepGEMM provides grouped GEMM support:

- Operational Steps:

- Import the grouped GEMM function:

from deep_gemm import m_grouped_gemm_fp8_fp8_bf16_nt_contiguous

- Prepare the input data in contiguous layout:

A = torch.randn(4096, 512, device="cuda").to(torch.float8_e4m3fn)  # concatenated inputs of all experts

B = torch.randn(4, 1024, 512, device="cuda").to(torch.float8_e4m3fn)  # one [N, K] weight matrix per expert

group_sizes = [1024, 1024, 1024, 1024]  # number of tokens per expert

- Perform the grouped GEMM:

C = m_grouped_gemm_fp8_fp8_bf16_nt_contiguous(A, B, group_sizes)

print(C)

- Caveats:

- The M axis of input matrix A is the concatenation of all expert groups, and the size of each group must be aligned to the GEMM M block size (available via get_m_alignment_for_contiguous_layout()).

- The N and K axes of the B matrix must be kept fixed.

- The call shown above is simplified. In the released library, scaling factors and a preallocated output tensor are passed as well, and group membership is given as a tensor rather than a Python list; consult the README for the exact signature.
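The alignment requirement above can be sketched as a small planning step: pad each expert's token count up to the alignment, then record where each expert's rows start in the concatenated M axis. This is an illustration only. `align_up` and `plan_contiguous_layout` are hypothetical helpers, and the alignment of 128 is an assumed value; in practice it should be queried with get_m_alignment_for_contiguous_layout().

```python
from typing import List, Tuple


def align_up(value: int, alignment: int) -> int:
    """Round value up to the next multiple of alignment."""
    return (value + alignment - 1) // alignment * alignment


def plan_contiguous_layout(tokens_per_expert: List[int],
                           alignment: int) -> Tuple[List[int], List[int]]:
    """Return (start row of each expert, expert index of every padded row)."""
    offsets: List[int] = []
    row_to_expert: List[int] = []
    for expert, count in enumerate(tokens_per_expert):
        offsets.append(len(row_to_expert))
        padded = align_up(count, alignment)
        # Padding rows are attributed to the same expert, so every block of
        # `alignment` rows belongs to exactly one expert.
        row_to_expert.extend([expert] * padded)
    return offsets, row_to_expert


# 128 is an assumed alignment for illustration, not a value from the library.
offsets, row_to_expert = plan_contiguous_layout([100, 256, 130], alignment=128)
total_rows = len(row_to_expert)
print(offsets, total_rows)
```

With these inputs the three experts occupy 128, 256, and 256 rows after padding, so the concatenated A matrix would need 640 rows.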

3. Masked grouped GEMM for the inference phase

In the inference decoding phase, DeepGEMM supports grouped GEMM using masks for dynamic token allocation:

- Operational Steps:

- Import the masked grouped GEMM function:

from deep_gemm import m_grouped_gemm_fp8_fp8_bf16_nt_masked

- Prepare the input data and mask:

A = torch.randn(4096, 512, device="cuda").to(torch.float8_e4m3fn)

B = torch.randn(1024, 512, device="cuda").to(torch.float8_e4m3fn)  # [N, K] layout

mask = torch.ones(4096, dtype=torch.bool, device="cuda")  # the mask marks the valid tokens

- Perform the masked grouped GEMM:

C = m_grouped_gemm_fp8_fp8_bf16_nt_masked(A, B, mask)

print(C)

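- Caveats:

- Masks specify which tokens need to be computed. This suits dynamic inference with CUDA graphs enabled, where the CPU does not know how many tokens each expert receives.

- The call shown above is simplified. In the released library the valid rows are described per group, and scaling factors and a preallocated output tensor are passed as well; consult the README for the exact signature.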
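The masking idea can be shown with a plain-Python reference: the input buffer keeps a fixed shape, and only rows marked valid are actually computed. This is a sketch of the semantics described above, not the library's kernel. `masked_gemm_nt_reference` is a hypothetical helper, and the per-row boolean mask follows this article's description; the library's own mask representation may differ.

```python
from typing import List

Matrix = List[List[float]]


def masked_gemm_nt_reference(a: Matrix, b: Matrix,
                             mask: List[bool]) -> Matrix:
    """C = A @ B^T, where rows of A with a False mask entry are skipped."""
    n = len(b)
    out: Matrix = []
    for a_row, valid in zip(a, mask):
        if valid:
            out.append([sum(x * y for x, y in zip(a_row, b_row))
                        for b_row in b])
        else:
            out.append([0.0] * n)  # placeholder; no work is done for this row
    return out


a = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # fixed-size buffer, M=3, K=2
b = [[1.0, 0.0], [0.0, 1.0]]               # N=2, K=2
mask = [True, False, True]                 # the second row holds no valid token
c = masked_gemm_nt_reference(a, b, mask)
print(c)
```

The output keeps the same shape regardless of how many rows are valid, which is the property that makes this pattern compatible with fixed-shape execution.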

Featured Function Walkthrough

High Performance Optimization and Debugging

The core strength of DeepGEMM is its efficiency and simplicity, which developers can further optimize and debug by following the steps below:

- View performance data:

Add log output to monitor TFLOPS when running test scripts:

import logging

logging.basicConfig(level=logging.INFO)

C = gemm_fp8_fp8_bf16_nt(A, B)

- Adjust parameters:

Adjust the block size (TMA parameter) for your specific hardware to improve the overlap of data movement and computation; refer to the examples in the tests/ folder of the repository.

- Learn and extend:

The core code is located in deep_gemm/gemm_kernel.cu and is about 300 lines long, so developers can read it directly and modify it to fit custom requirements.
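To make the "view performance data" step concrete, throughput can be derived from a measured run time: a GEMM with dimensions M, N, K performs 2·M·N·K floating-point operations. The helpers below are a minimal sketch with hypothetical names (`gemm_flops`, `tflops`, `time_call`). When timing GPU work, the callable passed to `time_call` should itself wait for the GPU to finish (for example by calling `torch.cuda.synchronize()`), otherwise only the launch overhead is measured.

```python
import time
from typing import Callable


def gemm_flops(m: int, n: int, k: int) -> int:
    """Each of the M*N outputs needs K multiply-adds, i.e. 2*M*N*K FLOPs."""
    return 2 * m * n * k


def tflops(m: int, n: int, k: int, seconds: float) -> float:
    """Achieved throughput in TFLOPS for one GEMM that took `seconds`."""
    return gemm_flops(m, n, k) / seconds / 1e12


def time_call(fn: Callable[[], object], repeats: int = 10) -> float:
    """Average wall-clock seconds per call over several repeats."""
    fn()  # warm-up, so one-time costs such as JIT compilation are excluded
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    return (time.perf_counter() - start) / repeats


# A 4096 x 4096 x 4096 GEMM finishing in 0.1 ms corresponds to ~1374 TFLOPS.
example = tflops(4096, 4096, 4096, 1e-4)
print(f"{example:.1f} TFLOPS")
```

The warm-up call matters for a JIT-compiled library, since the first invocation for a given shape includes compilation time.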

Recommendations for use

- Hardware requirement: Only NVIDIA Hopper architecture GPUs are currently supported; other architectures are not yet adapted.

- Documentation reference: Detailed function descriptions and sample code can be found in README.md and the tests/ folder of the GitHub repository.

- Community support: If you encounter problems, submit feedback on the GitHub Issues page for the DeepSeek team to follow up.

With these steps, you can easily integrate DeepGEMM into your machine learning projects and enjoy its efficient FP8 matrix computing power.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
