Danswer: AI assistant specializing in enterprise knowledge management and document search, integrating multiple work tools

General Introduction

Danswer is an open source AI assistant for enterprise document retrieval. It connects to a team's documents, apps, and people, and provides unified search and natural language answers through a chat interface. User data and chat logs remain fully under the user's control. Its modular design and easy scalability make it well suited to team knowledge management and collaboration.

The project has since been renamed Onyx, and the vast majority of its functionality has been refactored.

Function List

- Chat interface: Chat with your documents and select specific documents to interact with.

- Customized AI Assistant: Create AI assistants with different prompts and knowledge bases.

- Document Search: Provides document search and AI answers for natural language queries.

- Multiple connectors: Supports connectivity with common work tools such as Google Drive, Confluence, Slack, and more.

- Slack integration: Get answers and search results directly in Slack.

- User authentication: Provides document-level access management.

- Role Management: Supports role management for administrators and regular users.

- Chat log persistence: Save chat logs for easy follow-up.

- UI Configuration: Provides a user interface for configuring AI assistants and prompts.

- Multimodal support: Support for conversations involving images, videos, etc. (planned).

- Tool invocation and agent configuration: Provides tool calls and agent configuration options (planned).

Using Help

Installation process

- Local deployment:

- Download and install Docker.

- Clone Danswer's GitHub repository.

- Navigate to the project directory in the terminal and run the docker-compose up command.

- Open your browser, visit http://localhost:8000, and start using Danswer.

- Cloud deployment:

- Install Docker on the virtual machine.

- Clone Danswer's GitHub repository.

- Navigate to the project directory in the terminal and run the docker-compose up command.

- Configure the domain name and SSL certificate to ensure secure access.

- Kubernetes deployment:

- Install and configure a Kubernetes cluster.

- Clone Danswer's GitHub repository.

- Find the Kubernetes deployment file in the project directory and run the appropriate kubectl command to deploy it.

Guidelines for use

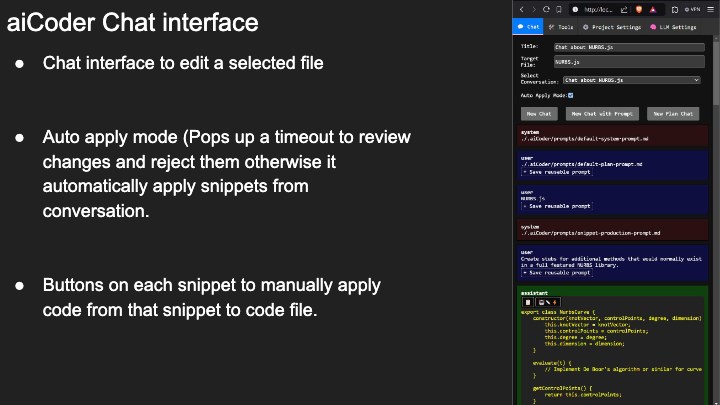

- Chat interface:

- Open the Danswer web application, log in and go to the chat screen.

- Select the document you want to chat with and enter a natural language question. Danswer answers using the relevant content.

- Customized AI Assistant:

- In the administrator interface, create a new AI assistant.

- Configure the assistant's prompts and knowledge base and save the settings.

- Select different AI assistants to talk to in the chat screen.

- Document Search:

- Enter a natural language query in the search bar and Danswer will return relevant documents and AI-generated answers.

- Supports filtering and sorting of search results to quickly find the information you need.

- Slack integration:

- Install the Danswer app in Slack.

- Configure Danswer's connection to Slack to authorize access to relevant channels.

- Enter a query directly in Slack and Danswer will return the search results and answers.

- User authentication and role management:

- Add and manage users in the administrator interface.

- Configure user access rights and roles to ensure data security.

- Chat log persistence:

- All chats will be saved automatically and users can view the history at any time.

- Supports searching and filtering of chat logs, making it easy to find past conversations.

Featured Functions

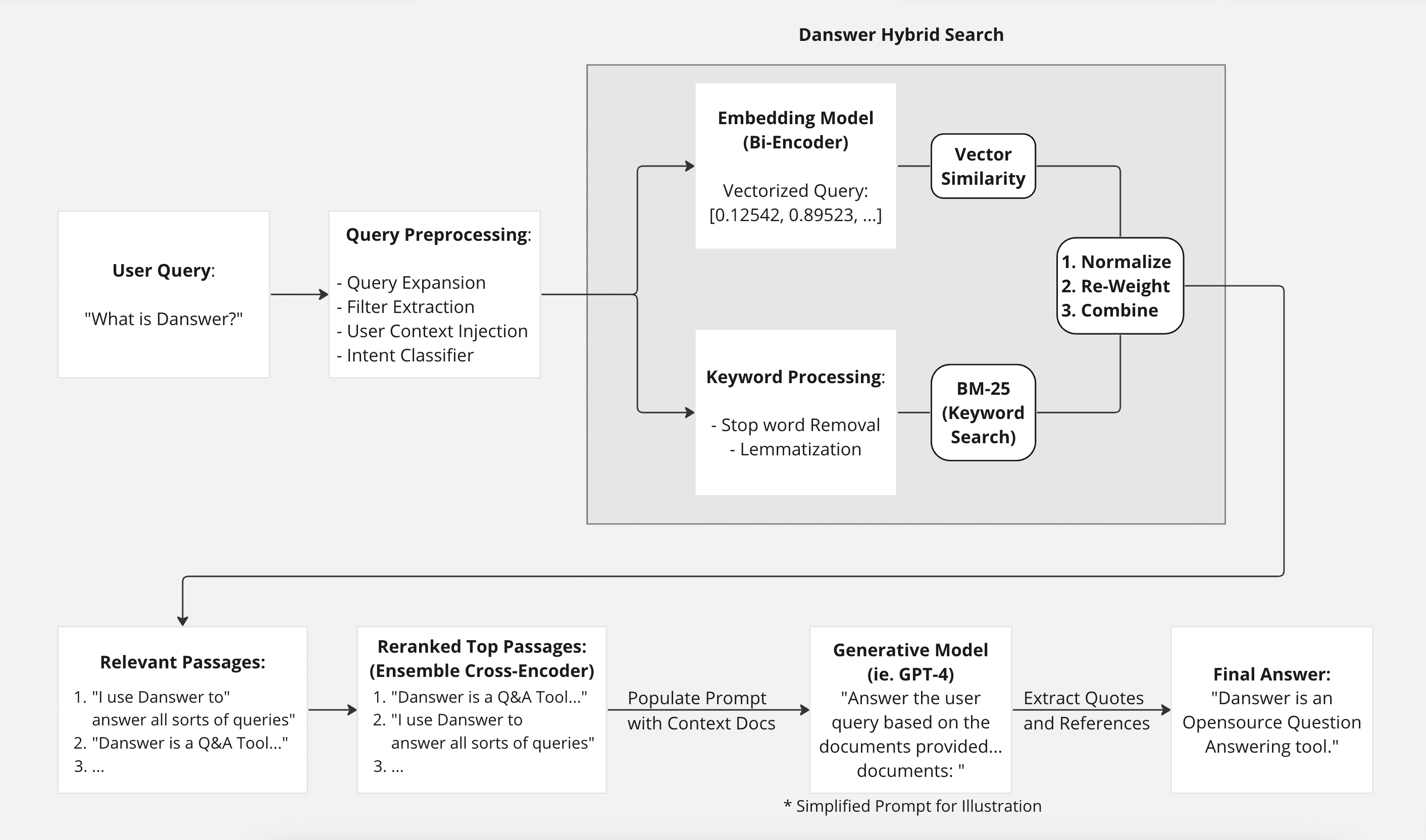

- Efficient Search: Combines BM25 keyword matching with prefix-aware embedding models to provide hybrid search.

- Custom Models: Support for customizing deep learning models and learning from user feedback.

- Multiple Deployment Options: Supports local, cloud, and Kubernetes deployments, flexibly adapting to the needs of teams of different sizes.

- Multimodal support: Future versions will support conversations involving images, videos, etc. to enhance the user experience.

- Tool invocation and agent configuration: Provides flexible tool invocation and agent configuration options to meet the needs of different teams.

- Organizational understanding and expert advice: Danswer will be able to recognize the experts in the team and provide relevant advice to enhance team collaboration.
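The hybrid search idea mentioned in the list above can be illustrated with a minimal sketch. This is not Danswer's implementation: the BM25 scorer is a plain textbook version, the two-dimensional vectors are hand-written stand-ins for real embeddings, and the function names and the `alpha` weight are assumptions made for illustration. Prefix-aware embedding is not modeled here.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    return text.lower().split()


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Textbook BM25 score of each document for the query."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = (sum(len(d) for d in tokenized) / n) or 1.0
    # Document frequency: number of documents containing each term.
    df = Counter(term for d in tokenized for term in set(d))
    scores = []
    for d in tokenized:
        tf = Counter(d)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def min_max(values: list[float]) -> list[float]:
    """Rescale scores to [0, 1] so the two signals are comparable."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def hybrid_rank(query, query_vec, docs, doc_vecs, alpha=0.5) -> list[int]:
    """Return document indices ordered by a weighted blend of both scores.

    alpha is the weight of the embedding similarity (hypothetical parameter).
    """
    if not docs:
        return []
    keyword = min_max(bm25_scores(query, docs))
    semantic = min_max([cosine(query_vec, v) for v in doc_vecs])
    combined = [alpha * s + (1 - alpha) * k for k, s in zip(keyword, semantic)]
    return sorted(range(len(docs)), key=lambda i: combined[i], reverse=True)


docs = [
    "reset your password in account settings",
    "quarterly sales report for the finance team",
    "how to recover login credentials",
]
# Toy vectors standing in for embeddings; a real system would compute these with a model.
doc_vecs = [[0.9, 0.1], [0.1, 0.9], [0.8, 0.2]]
ranking = hybrid_rank("password reset", [1.0, 0.0], docs, doc_vecs)
print([docs[i] for i in ranking])
```

In this toy data the third document shares no keywords with the query, yet the vector similarity still places it above the unrelated sales report, which is the kind of case hybrid search is meant to cover.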

System Overview

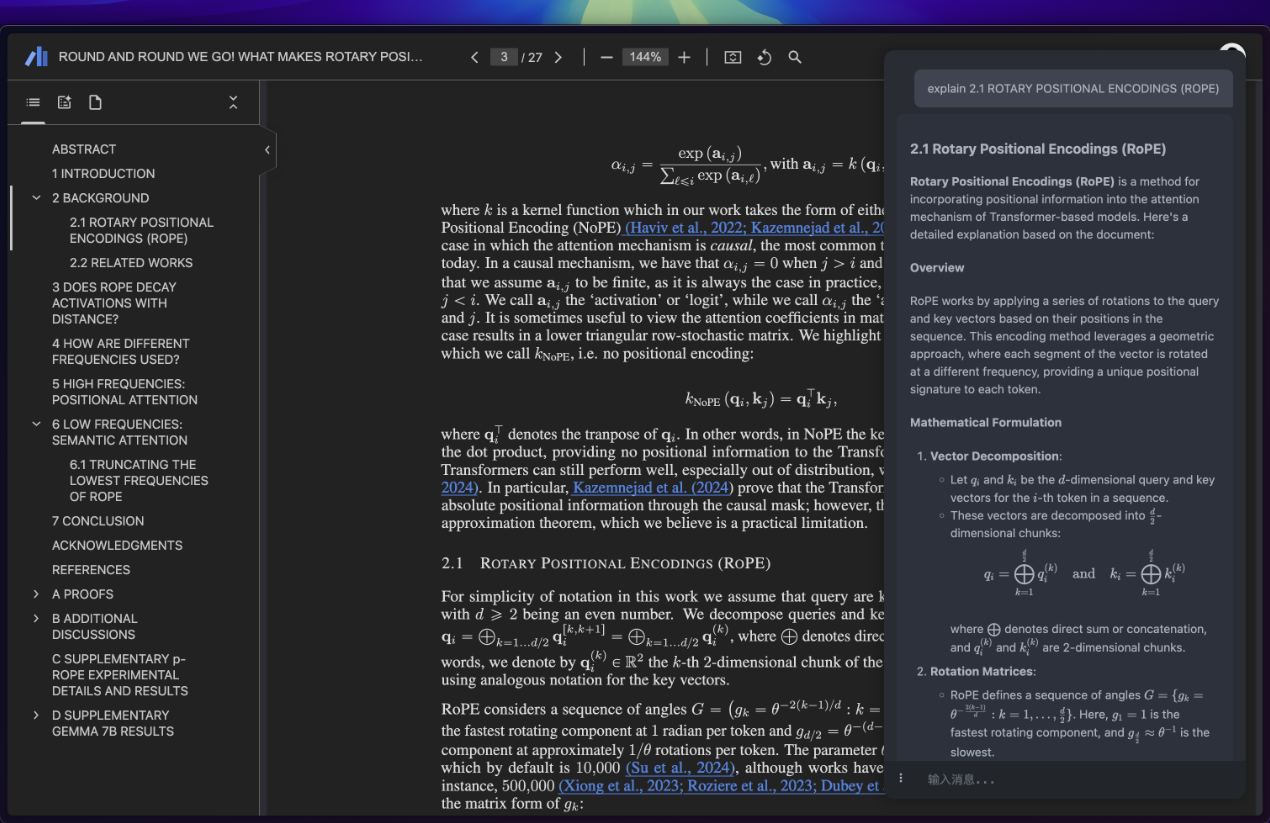

Explanation of different system components and processes

This page describes how Danswer works at a high level. The goal is to make the design more transparent so that you can feel confident when using Danswer.

Or, if you want to customize the system or become an open source contributor, this is a great place to start.

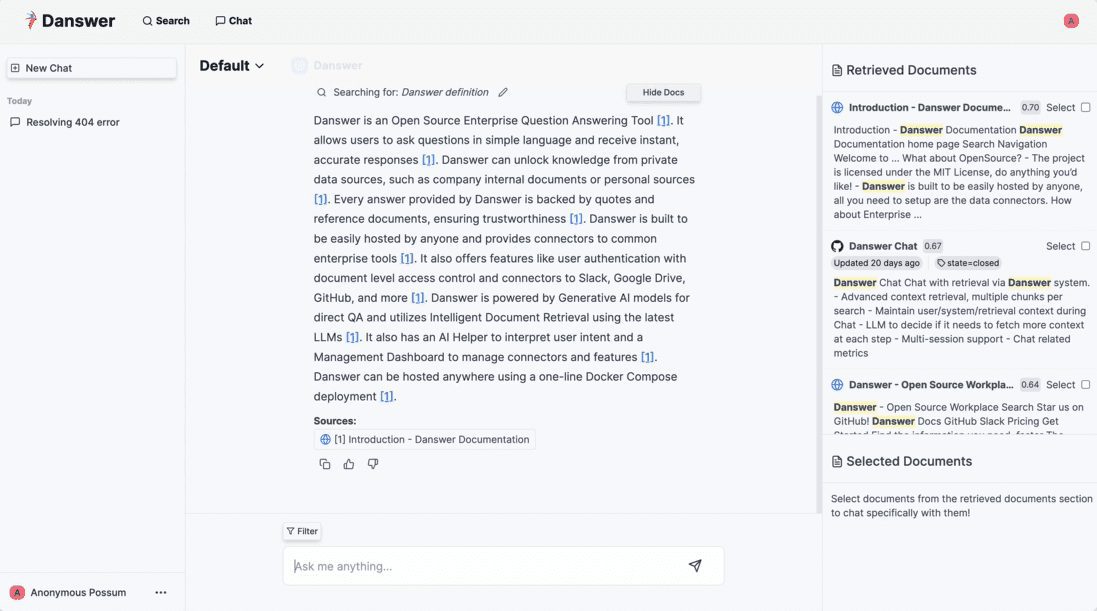

System architecture

Whether Danswer is deployed on a single instance or a container orchestration platform, the data flow is the same. Documents are pulled and processed through connectors and then persistently stored in Vespa/Postgres running in system containers.

The only time sensitive data leaves your Danswer setup is when it calls the LLM to generate answers. Communication with the LLM is encrypted. Data persistence on the LLM API side depends on the terms of the LLM hosting service you are using.

Note also that Danswer collects some very limited, anonymized telemetry data, which helps improve the system by identifying bottlenecks and unreliable data connectors. You can turn off telemetry by setting the DISABLE_TELEMETRY environment variable to True.
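As a rough illustration of how a service might honor such a switch, the sketch below reads DISABLE_TELEMETRY from the environment. Only the variable name comes from the text above. The function name and the exact set of accepted values are assumptions, and Danswer's own parsing may differ.

```python
import os
from typing import Mapping, Optional


def telemetry_disabled(environ: Optional[Mapping[str, str]] = None) -> bool:
    """Return True when DISABLE_TELEMETRY is set to a 'true' value.

    Assumption: the comparison is case-insensitive, so "True" and "true" both work.
    """
    env = os.environ if environ is None else environ
    return env.get("DISABLE_TELEMETRY", "").strip().lower() == "true"


if not telemetry_disabled():
    # A real service would send its anonymized usage event here.
    pass
```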

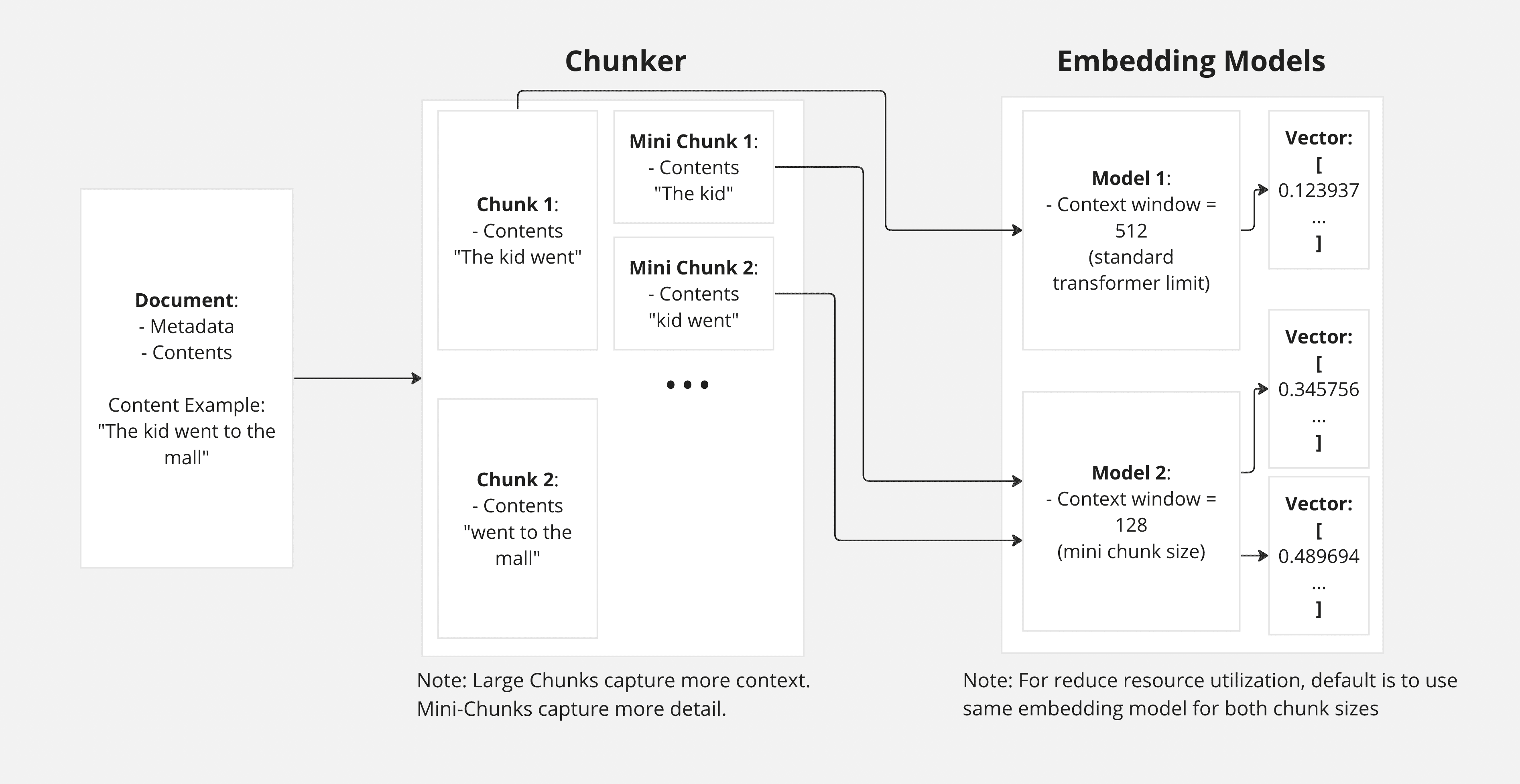

Embedding flow

Each document is divided into smaller parts called "chunks".

Passing chunks to the LLM instead of the full document reduces the noise the model sees, because only the relevant parts of the document are included. It also significantly improves cost-effectiveness, as LLM services are typically charged per token. Finally, embedding chunks instead of full documents retains more detail, since each vector embedding can only encode a limited amount of information.
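A minimal sketch of chunking, assuming a simple word-based splitter with overlap. The function name, the sizes, and the word-based splitting are illustrative assumptions, not Danswer's actual chunker.

```python
def chunk_text(text: str, chunk_size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into word-based chunks that overlap by `overlap` words."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once the current chunk reaches the end of the document.
        if start + chunk_size >= len(words):
            break
    return chunks


document = " ".join(f"w{i}" for i in range(120))
chunks = chunk_text(document, chunk_size=50, overlap=10)
print(len(chunks))
```

The overlap keeps sentences that straddle a boundary visible in both neighboring chunks, at the cost of some duplicated tokens.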

Adding mini-chunks takes this concept even further. By embedding at different sizes, Danswer can retrieve both high-level context and fine detail. Mini-chunks can also be turned on or off via environment variables, since generating multiple vectors per chunk may slow down document indexing on less capable hardware.
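The idea of embedding at more than one size can be sketched as follows. Each chunk yields one text for the whole chunk plus, optionally, several smaller pieces, and every returned text would get its own vector. The environment variable name EXAMPLE_ENABLE_MINI_CHUNKS, the function names, and the sizes are hypothetical placeholders, not Danswer's real settings.

```python
import os
from typing import Optional


def split_words(words: list[str], size: int) -> list[str]:
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embedding_units(
    chunk: str,
    mini_chunk_size: int = 16,
    enable_mini_chunks: Optional[bool] = None,
) -> list[str]:
    """Return the texts to embed for one chunk: the chunk itself plus optional smaller pieces."""
    if enable_mini_chunks is None:
        # Hypothetical toggle; the real variable name is not given in the text.
        flag = os.environ.get("EXAMPLE_ENABLE_MINI_CHUNKS", "false")
        enable_mini_chunks = flag.strip().lower() == "true"
    units = [chunk]
    if enable_mini_chunks:
        words = chunk.split()
        if len(words) > mini_chunk_size:
            units.extend(split_words(words, mini_chunk_size))
    return units


chunk = " ".join(f"w{i}" for i in range(40))
print(len(embedding_units(chunk, enable_mini_chunks=True)))
```

With the toggle on, indexing produces several vectors per chunk instead of one, which is why the option trades indexing speed for retrieval detail.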

For the embedding model, we use a state-of-the-art bi-encoder that is small enough to run on a CPU while maintaining sub-second document retrieval times.

Query flow

This flow is updated frequently as we continue to push the capabilities of the retrieval pipeline and take advantage of the latest advances from the research and open source communities. Also note that many of the parameters of this flow are configurable, such as how many documents to retrieve, how many to rerank, which models to use, and which chunks to pass to the LLM.
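A minimal sketch of a configurable retrieve, rerank, and select pipeline, under stated assumptions. The config field names and both scoring functions are hypothetical stand-ins chosen for illustration. A real pipeline would use hybrid retrieval and a reranking model rather than word overlap.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class QueryConfig:
    num_retrieved: int = 3       # how many chunks the first-stage retrieval keeps
    num_reranked: int = 2        # how many of those are passed to the reranker
    num_chunks_for_llm: int = 2  # how many chunks are finally sent to the LLM


def overlap_score(query: str, chunk: str) -> float:
    """Toy first-stage score: number of distinct query words present in the chunk."""
    return float(len(set(query.lower().split()) & set(chunk.lower().split())))


def density_score(query: str, chunk: str) -> float:
    """Toy rerank score: overlap divided by chunk length in words."""
    return overlap_score(query, chunk) / max(len(chunk.split()), 1)


def run_query(
    query: str,
    chunks: list[str],
    config: QueryConfig,
    rerank_score: Callable[[str, str], float] = density_score,
) -> list[str]:
    retrieved = sorted(chunks, key=lambda c: overlap_score(query, c), reverse=True)
    retrieved = retrieved[:config.num_retrieved]
    head = retrieved[:config.num_reranked]
    tail = retrieved[config.num_reranked:]
    # Only the head is reordered; the tail keeps its first-stage order.
    reranked = sorted(head, key=lambda c: rerank_score(query, c), reverse=True) + tail
    return reranked[:config.num_chunks_for_llm]


chunks = [
    "vacation policy allows twenty days per year",
    "the vacation request form is on the intranet",
    "server maintenance schedule",
    "unused vacation days policy",
]
selected = run_query("vacation policy days", chunks, QueryConfig())
print(selected)
```

Keeping the three limits in one config object makes it easy to trade answer quality against latency and token cost without touching the pipeline code.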

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
