Cursor has exploded in popularity, but it is not the way forward for China's AI programming companies

In 2021, Microsoft launched GitHub Copilot, which quickly became the most sought-after AI tool in the programming world.

GitHub Copilot can automatically generate complete functions from contextual information provided by the user, such as function names, comments, and code snippets. It has been called a "game changer" for programming.

What makes it so impressive is the model underneath: OpenAI's Codex, a 12-billion-parameter model derived from GPT-3 and optimized specifically for coding tasks. This was the first time a large Transformer-based model had truly shown "emergent" capabilities in the code domain.

GitHub Copilot ignited a passion for AI programming among developers around the world. In 2022, four MIT undergraduates, united by a dream of changing software development, founded a company called Anysphere.

Anysphere "brazenly" named Microsoft as its main competitor. Co-founder Michael Truell made it clear that while Microsoft's Visual Studio Code dominates the IDE market, Anysphere saw an opportunity to offer a different product.

Michael Truell (far right)

Microsoft may not have imagined that in less than three years this little-known team would drop a "bombshell" on the industry, setting off a new wave of AI programming fever worldwide, and that the company would become a unicorn valued at $2.5 billion within four months.

1. What makes Cursor a hit?

In August 2024, Andrej Karpathy, Tesla's former director of AI, posted several tweets on X praising a code editor called "Cursor," saying it had crushed GitHub Copilot.

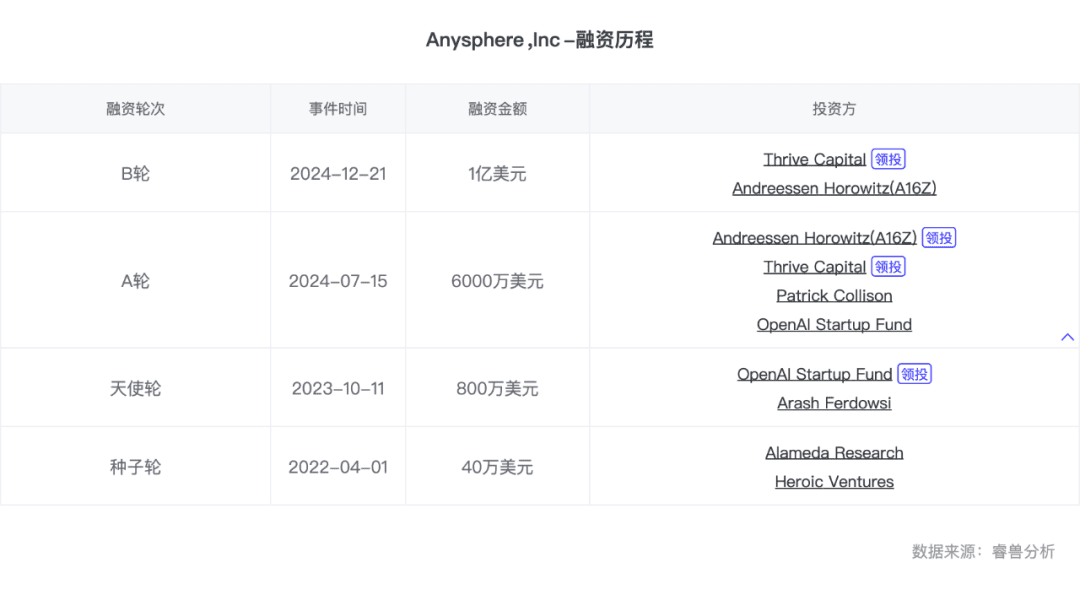

In the same month, Anysphere, the company behind Cursor, closed a $60 million Series A round at a $400 million valuation.

Cursor's standout features include multi-line editing, cross-file contextual completion, Q&A, next-action prediction, and more. Developers can apply code changes across an entire file simply by pressing the Tab key repeatedly, and Cursor's suggestions are more accurate and faster, with little to no perceptible delay.

Anyone familiar with programming will appreciate how much that matters.

"Completion and prediction across multiple files is a very subtle requirement that may be hard for developers to accurately express themselves, but is very 'cool' when you actually use it."

Tom Yedwab, a developer with decades of experience, also wrote that Tab completion is the feature that best matches his daily coding habits and saves him the most time: "It's like the tool reads my mind and predicts what I'm going to do next, so I can focus less on code details and more on building the overall architecture."

The key to Cursor's success is not so much high technical barriers as the fact that the team was the first to identify a subtle new need and dared to bet on a path no one had taken before.

Cursor is built on top of VS Code (Visual Studio Code), a free, open-source, cross-platform code editor developed by Microsoft that offers some basic code-completion features.

Previously, developers built all kinds of plug-ins to extend VS Code, but VS Code's own plug-in mechanism has many limitations. For example, in large projects some plug-ins can slow down code indexing and analysis, and complex plug-ins can be cumbersome to configure, requiring users to edit configuration files by hand, which raises the barrier to use.

To eliminate these limitations, the Cursor team took a very bold approach. Instead of building a plug-in for VS Code in the traditional way, they heavily modified VS Code's own code, integrated a number of AI models underneath, and applied extensive engineering optimization to improve the user experience of the entire IDE.

When Cursor's development began, many practitioners were pessimistic about such a difficult path; it was a huge "non-consensus" bet. VS Code's internal architecture is complex, spanning code editing, syntax analysis, code indexing, the plug-in system, and other modules, and different versions of VS Code can differ, so compatibility has to be considered throughout the modification. In addition, once multiple AI models are built into VS Code, the interaction between the models and the editor has to be solved: how can code context be passed to the model effectively? How should the model's output be processed and applied to the code? And how can code-generation latency be kept to a minimum?

Solving this whole range of problems requires a painstaking program of engineering optimization. In 2023 alone, Cursor went through three major version updates and nearly 40 feature iterations. This was a huge test of patience for the entire R&D team and for the investors behind the company.

In the end, Silicon Valley once again proved its ability to nurture disruptive innovation, and Cursor's success follows a classic Silicon Valley startup template: a group of obsessive tech geeks with a grand vision, backed by Silicon Valley's mature VC system, break into uncharted territory, are the first to try what others doubted amid widespread skepticism, and ultimately make their name with the product.

"That's what's so fascinating about entrepreneurship: an idea that seemed 'off the wall', and they ran with it."

Recently, Anysphere announced that it had closed a $100 million Series B round at a valuation of $2.6 billion. Sacra estimates that Cursor's annual recurring revenue (ARR) reached $65 million in November 2024, up 6,400%. Yet Anysphere, founded in 2022, has only 12 employees.

2. Copilot is clear-cut, Agent is still murky

Cursor is not the first AI programming product to break out beyond its niche.

In March 2024, Devin, billed as the "world's first AI programmer," came out of nowhere and set the industry's enthusiasm for AI programming alight for the first time.

Devin is an autonomous agent with full-stack skills: it can learn on its own, build and deploy applications end to end, fix bugs, and even train and fine-tune its own AI models. The company behind it, Cognition AI, is also a star-studded AI "dream team."

However, Devin was initially released only as a demo, and developers could not get their hands on it. It was not until December 11, 2024 that Devin went live, at a hefty $500 per month. By comparison, Cursor's $20-per-month subscription looks downright affordable.

In contrast to Cursor's near-universal popularity, developer reviews of Devin have been mixed. Some find Devin excellent at code migration and at generating PRs (pull requests, the code changes a developer submits during collaboration for review and merging by other team members), which can significantly reduce developers' repetitive work; however, some users point out that Devin still requires a lot of manual intervention when dealing with complex business logic, especially when the project is under-documented or its code quality is poor.

The fundamental reason for the gap in popularity between Cursor and Devin is the difference in failure rate, and in the cost of a failure, that developers experience when using each product.

At present, the failure rate in the Copilot scenario is already relatively low: accuracy on its corresponding benchmark, HumanEval, is converging toward 100%, while accuracy on the corresponding benchmark for the Agent scenario, SWE-bench, is currently below 60%.

In addition, the results of AI work have to be reviewed and accepted by humans. The interaction model of Copilot products means that the cost for developers to review AI-generated results is very low, and the cost of modifying or rejecting them after a failure is also very low. For Agent products, by contrast, the user's confirmation cost is significantly higher than with Copilot, and so is the cost of fixing a failure.
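The failure-cost argument above can be written as a simple per-task expected-cost model. The symbols are illustrative assumptions, not figures from the article: $c_r$ is the cost of reviewing the AI's output, $p$ is the probability that the output is wrong, and $c_f$ is the cost of fixing or discarding a wrong result.

```latex
\mathbb{E}[C] = c_r + p \, c_f
```

In these terms, Copilot keeps all three quantities small (a low $p$ on HumanEval-style tasks, a quick glance to review an inline suggestion, a keystroke to reject it), while an Agent currently has a higher $p$ (SWE-bench accuracy below 60%) together with larger $c_r$ and $c_f$, so its expected cost per task is higher even before the subscription price is considered.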

The two directions taken by Cursor and Devin also largely reflect the current state of the two product forms, Copilot and Agent, in general-purpose scenarios.

Cursor represents the Copilot form, in which AI and humans work synchronously, with humans leading and AI assisting.

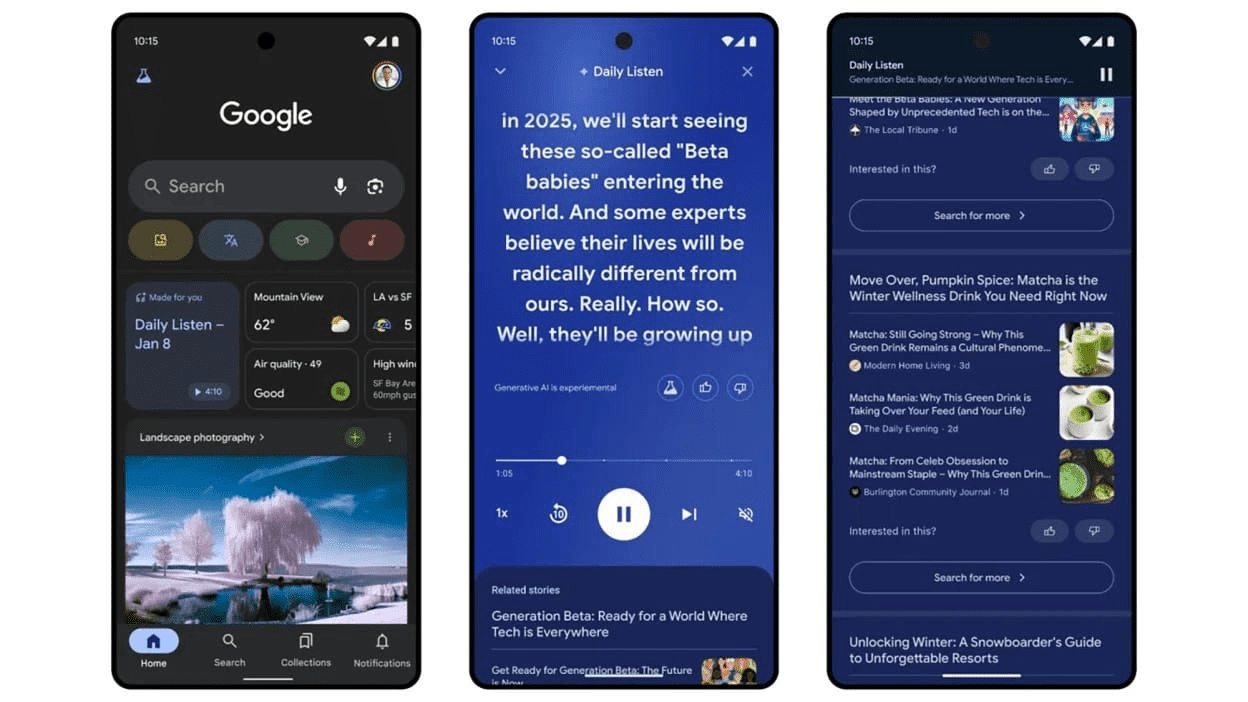

At present, Copilot is the form that has genuinely achieved product-market fit (PMF). Copilot can live inside IDEs such as VS Code as a plug-in, helping human developers with all kinds of coding tasks. Since GitHub Copilot appeared, users have gradually grown used to the Copilot style of collaboration, and GPT-3.5 is what turned Copilot from a demo into a genuinely usable product.

However, I have previously written about the "hidden concerns" of Copilot products: "The real moat is VS Code, which has grown from a simple editor into a platform. Users can migrate easily from GitHub Copilot to Cursor because both are built on VS Code, so the user's habits, experience, and features/plug-ins are exactly the same. Whatever the large models contribute is already part of the model itself."

By contrast, the Agent is a new species spawned by GPT-3.5, a concept far better at exciting entrepreneurs and VCs. Devin represents the Agent form, in which the AI works asynchronously with humans and takes more initiative, autonomously handling part of the decision-making and execution.

Agents are the opportunity for startups. But he is not sold on the all-powerful Agent vision that Devin champions: "Trying to do everything means doing nothing; the value of Agent applications in niche areas is much higher."

However, because the Agent concept is still so early and everyone is exploring, the environment Agents operate in and the boundaries of their capabilities remain unclear, and companies are entering from directions including code generation, code completion, unit-test generation, and defect detection.

Gru chose to start with unit testing. Before formally launching the product, Gru went through a period of internal trial and error, trying automatic documentation generation, bug fixing, E2E testing, and other directions, but limited by model capabilities, it could not make headway on pain points such as software iteration and maintenance.

Eventually, Gru identified unit testing as a need that is widespread yet far from trivial. Many developers dislike writing unit tests because they are boring, and for less demanding projects unit testing is not a strict software-engineering requirement. However, Gru believes that, in terms of technical capability, getting an AI product into production requires keeping both business context and engineering context coherent, and unit testing is the step that depends least on those two contexts while also best matching current model capabilities.

However, Copilot and Agent are both means rather than ends. They are not "either/or"; they will coexist and solve different problems.

For many individual developers and some small and medium-sized enterprises, general-purpose products such as Cursor, or some open-source models, may be enough to cover most needs. For many large enterprises and complex business scenarios across different fields, however, it is difficult to meet the demand with a general-purpose product in either the "Copilot" or the "Agent" form, which requires technology vendors to have stronger domain-oriented service capabilities.

The latter is where the opportunity lies for China's AI programming companies.

3. China's opportunity lies in vertical domains

Looking back at 2024, AI programming was undoubtedly one of the hottest VC sectors in Silicon Valley, producing unicorns such as Cursor, Poolside, Cognition, Magic, Codeium, Replit, and more.

By contrast, Chinese internet companies and large-model vendors have basically all launched their own code models, but few startups have grown into mature businesses. According to a Silicon Star report, Qiji Chuangtan (MiraclePlus) invested in six AI programming startups last year and almost all of them have since disappeared; of the more than 10 code-focused teams that briefly surfaced last year, most have already left the scene this year.

Since ChatGPT appeared, Clearstream Capital has looked at dozens of projects in the AI programming track, but the only one it has actually invested in is Silicon Heart Technology ("aiXcoder" for short).

Many observers believe that China's AI programming products are too "shallow." "Developers in the community complain that many products generate code in a few minutes, but then they have to spend half a day or more debugging it."

Behind the products' "shallow" appearance lies a difference between the Chinese and U.S. 2B markets that has built up over many years. There are three reasons. First, the U.S. has a huge population of junior programmers and high labor costs, so AI products can help enterprises cut costs significantly. Second, the U.S. SaaS market has proven out the product-led growth (PLG) model, so enterprises are more willing to pay for general-purpose products. Third, exit paths in the overseas 2B market are clear, so investors are eager to invest, the primary-market logic for follow-on rounds is also very clear, and angel investors are numerous and very active. Startups can almost always raise a first round to validate their ideas.

In September 2024, Gru launched Gru.ai and ranked first on SWE-bench Verified, the evaluation set released by OpenAI, with a score of 45.2%. The team clearly felt that having the product made it much more readily accepted in Silicon Valley.

In China's 2B market, meanwhile, the familiar problems persist. "Selling to businesses is harder in China: the sales chain is longer, and in the end the ones who can pay are still mostly large enterprises, but large enterprises won't necessarily buy just because your product is good." "Many enterprises have extensive internal security and compliance requirements; for example, out of concern about information leakage, they cannot use cloud-hosted products and need locally deployed code tools."

As a result, Chinese AI programming companies must plant both feet firmly on the ground and solve concrete problems in each industry.

"When a model is actually deployed, business continuity has to be considered. Judging by evaluation results, Chinese code models have all improved, but each specific application scenario has to be analyzed on its own terms." In earlier conversations with an industrial manufacturing enterprise, it was found that some software systems in industrial settings are written not in common languages such as Python or C++ but with industry-specific coding tools, which requires technology vendors to adapt their products accordingly.

This need is not unique to industrial scenarios: every industry has its own domain characteristics, and every enterprise has its own business logic and engineering system, which requires AI programming companies to have stronger domain-oriented service capabilities.

After studying dozens of companies, the conclusion was that "across all kinds of software development needs, AI programming covers at least a range of tasks such as search, defect detection and repair, and testing, in addition to code generation. Beyond functionality, these capabilities also have to be integrated with the client's own business logic so that the model gains deeper domain knowledge, and that is actually a high bar."

As a result, Clearstream Capital favors deeply coupling models and products with an enterprise's in-house private knowledge, data, and software development frameworks, and it invested in aiXcoder in September 2023.

"For this proven need, aiXcoder is the team with the best combination of technical and commercial strength. Several key members of its commercial team have more than a decade of experience selling to large enterprise customers in China and abroad, and have deep insight into customers and the market. In Q2 2023 they proposed a 'domainization' deployment approach, the strategy that AI programming should be deeply coupled with an enterprise's internal private knowledge, data, and software development frameworks, and the results of real deployments have won recognition from many leading enterprise customers."

Incubated at the Institute of Software Engineering of Peking University, aiXcoder is the world's earliest team to apply deep learning to code generation and code understanding, and the earliest to apply deep learning to programming products. The team has published more than 100 papers in top international journals and conferences, many of them the first papers and the most-cited papers in the field of intelligent software engineering.

aiXcoder's business partner and president said that in B2B private-deployment scenarios, general-purpose large models have not learned private-domain data. They therefore lack deep integration with the enterprise's internal business requirements, industry specifications, software development frameworks, and runtime environments, and the enterprise's domain background knowledge, such as requirements analyses and design documents, never makes it into model training. As a result, the code they generate or complete lacks relevance and reliability at the business-logic level.

Consequently, the accuracy and usability of large models in real enterprise deployments fall short of expectations. "Many large models perform admirably in general-purpose scenarios or on mainstream evaluation sets, with accuracy of up to 30%, but when deployed inside an enterprise, accuracy usually plummets to below 10%. Conventional fine-tuning also struggles to deliver the results companies want. Therefore, learning and mastering domain knowledge is the key to successfully implementing AI programming systems in enterprises. Solving domain-specific problems for enterprise customers is where our differentiating value lies."

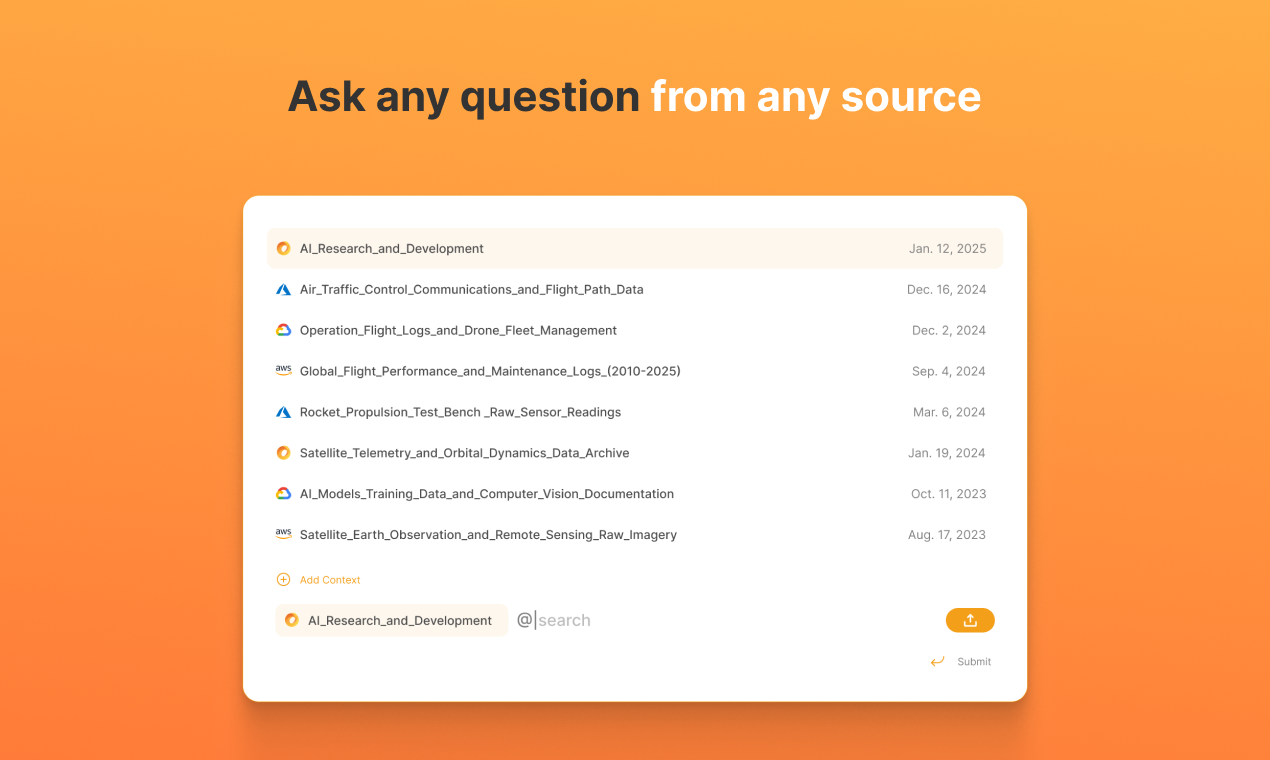

To address these pain points, aiXcoder performs targeted incremental training on a variety of internal data provided by the enterprise, including code, business documents, requirements documents, design documents, and test documents, as well as domain knowledge such as industry business terminology and process specifications, industry technical standards and specifications, and the enterprise's technology stack and programming frameworks. Beyond model training, it combines multi-agent systems, RAG, software development tools, and an "engineered prompt system" tailored to the enterprise's software development framework, so as to improve code-generation quality and capability across the whole R&D process.
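As a rough illustration of the retrieval (RAG) step mentioned above, the sketch below picks the internal documents most similar to a coding task and prepends them to the prompt sent to a model. It is a hypothetical, minimal example, not aiXcoder's implementation: the document names and the functions `retrieve` and `build_prompt` are invented for illustration, and plain bag-of-words cosine similarity stands in for the embedding model and vector index a production system would more likely use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercased identifier-like tokens; anything non-alphanumeric is a separator.
    return re.findall(r"[a-z0-9_]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k internal documents most similar to the query (hypothetical helper)."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda name: cosine(q, Counter(tokenize(docs[name]))), reverse=True)
    return ranked[:k]


def build_prompt(task: str, docs: dict[str, str], k: int = 2) -> str:
    """Prepend retrieved enterprise context to the coding task (hypothetical helper)."""
    context = "\n\n".join(f"# {name}\n{docs[name]}" for name in retrieve(task, docs, k))
    return f"Internal context:\n{context}\n\nTask:\n{task}"


# Usage with three made-up internal documents.
docs = {
    "payment_spec": "All payment amounts are stored as integer cents; use the Money class.",
    "logging_rules": "Use the audit_log helper for every write to the ledger.",
    "style_guide": "Prefer dataclasses for value objects.",
}
task = "Add a refund function to the payment ledger using Money"
prompt = build_prompt(task, docs, k=2)
print(prompt)
```

Because the retrieved context travels inside the prompt, the same enterprise documents can be reused with different underlying models; in the approach described above it complements incremental training rather than replacing it.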

In terms of delivery, a domain-based solution is not the same as traditional, highly customized project-based delivery. aiXcoder extracts capabilities and tools of common value from customers' individual needs and turns them into standardized products and processes for delivery. At the same time, it stays in close contact with customers through regular meetings, not only helping them solve recurring problems but also continuing to iterate the product based on their shared, real needs.

4. The AI industry has "cried wolf" too many times

From a results-oriented point of view, whether selling to small businesses or large enterprises, "training a model" or "not training a model," Copilot or Agent, there may be no single optimal answer; everything depends on customers' actual needs and on the startup team's own strengths.

Whichever path they take, AI programming companies share a simple, direct goal: improving software development efficiency. However, the market is still at an early stage, and correctly channeling customer needs is a challenge for every entrant.

The biggest struggle at the moment is getting customers to recognize the value of vertical Agents. "Even in Silicon Valley, many potential customers react to a new AI product with skepticism rather than excitement, because one bad thing about the AI track is that there have been too many 'cry wolf' stories in the past, and a lot of demos were made that don't actually work." For now, Gru spends a lot of energy reaching out to customers and building word of mouth among seed users, which will lay the foundation for large-scale commercialization later.

In the Chinese market, the demand side of AI programming systems also needs to be clear about the boundaries of its own needs and of model capabilities. "Large-model-driven AI programming systems have a promising future for improving software productivity." "To truly realize the value of this technology in an enterprise environment, the code model must be deeply combined with the enterprise's own domain knowledge and continuously iterated and validated in specific business scenarios."

In fact, as large models have evolved, market sentiment has largely returned to rationality, but noise remains. In 2024, for example, large-model bidding announcements were common, but some of that data may be "misleading."

"The ecosystem's division of labor is clearer overseas, but in China many 2B projects end up as bidding, and many companies scramble desperately for the bids." In AI programming, however, public bidding information shows that even the few big vendors have won few orders.

The reason is that winning a bid does not equal successfully deploying a model or product.

On the one hand, at many buyers the people responsible for procurement and the people who actually use the product are often different groups, which can leave procurement decisions disconnected from real business needs. On the other hand, these deployments often rely on a standardized product plus fine-tuning rather than in-depth domain training and adaptation to the enterprise's business scenarios and internal logic, so programmers may find the results unsatisfactory once they start using it.

An industry insider revealed that most orders in the current bidding market that include hardware are worth millions, while pure software orders, such as intelligent software development and code-assistant projects, mostly come in at around 300,000. Many enterprises find after procurement that their problem still isn't solved and have to go back to the market for a better-suited vendor, wasting resources.

Still, some consensus is emerging from this shake-out: more and more companies realize that the trend is to "decouple" product capabilities from model capabilities.

Since the first half of 2024, as model capabilities have grown stronger and models have converged in their programming ability, products should no longer be tailored to fit a particular model's capabilities but should be made "model-agnostic." "Starting in the first half of 2024, we basically stopped doing specific optimizations for different models and instead strengthened our product architecture, so any model on the market can be plugged in as long as it passes our benchmarks."

"Enterprise customers should pay full attention to business continuity and should not be tied to any single large-model vendor. At present, buying standardized products alone can hardly meet enterprise customers' needs for deploying large models. Enterprises need to achieve architectural decoupling across the large model, data, domainization, and engineering layers, and flexibly choose the models and service providers that best fit their needs. The most critical thing is to effectively solve the real problem of domainizing software development inside the enterprise and help enterprises reduce costs and improve efficiency."
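A minimal sketch of what "any model can be plugged in as long as it passes our benchmarks" could look like, assuming a Python product layer. Everything here is hypothetical: `CodeModel`, `BenchmarkCase`, `ModelRegistry`, the pass threshold, and the two stub models are invented for illustration and are not any vendor's API. The idea shown is that product code depends only on a small interface, and a benchmark gate decides which backends are admitted.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol


class CodeModel(Protocol):
    """Any backend (hosted API or on-premises deployment) that turns a prompt into code."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class BenchmarkCase:
    prompt: str
    check: Callable[[str], bool]  # True if the generated code is acceptable


@dataclass
class ModelRegistry:
    """Admits a model only if it clears an internal benchmark suite (hypothetical gate)."""

    cases: list[BenchmarkCase]
    threshold: float = 0.8
    models: dict[str, CodeModel] = field(default_factory=dict)

    def score(self, model: CodeModel) -> float:
        # Fraction of benchmark cases whose output passes its check.
        passed = sum(case.check(model.complete(case.prompt)) for case in self.cases)
        return passed / len(self.cases)

    def register(self, name: str, model: CodeModel) -> bool:
        # Only models at or above the threshold become available to the product.
        if self.score(model) >= self.threshold:
            self.models[name] = model
            return True
        return False

    def complete(self, name: str, prompt: str) -> str:
        # Product code calls this and never depends on a specific vendor's SDK.
        return self.models[name].complete(prompt)


# Usage with two stub backends standing in for real models.
class StubGoodModel:
    def complete(self, prompt: str) -> str:
        return "def add(a, b):\n    return a + b"


class StubEmptyModel:
    def complete(self, prompt: str) -> str:
        return ""


cases = [BenchmarkCase("write add(a, b)", lambda code: "return a + b" in code)]
registry = ModelRegistry(cases, threshold=1.0)
ok_good = registry.register("good", StubGoodModel())
ok_empty = registry.register("empty", StubEmptyModel())
print(ok_good, ok_empty, sorted(registry.models))
```

Swapping vendors then means registering a different backend that clears the same gate, without touching the product code that calls `complete`.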

From an industry third-party perspective, access to models will be only one part of industry deployment in the future. "Right now there are 100 kilometers between the model and the application. If technology vendors standardize the capabilities of the first 95 to 99 kilometers into infrastructure, the application side can cover the last 1 to 5 kilometers itself."

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
