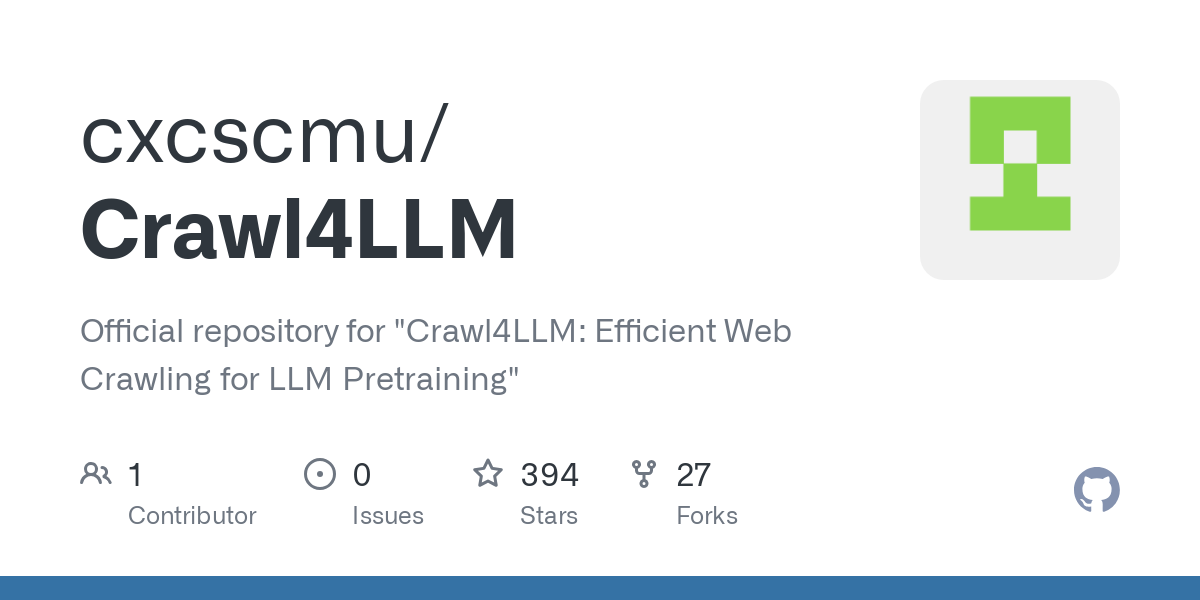

Crawl4LLM: An Efficient Web Crawling Tool for LLM Pretraining

General Introduction

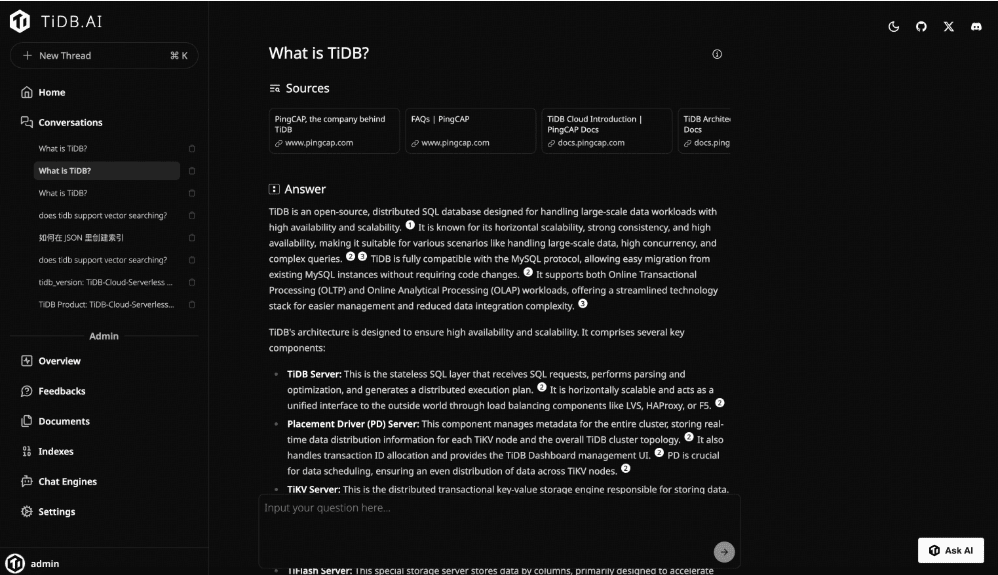

Crawl4LLM is an open-source project jointly developed by Tsinghua University and Carnegie Mellon University. It focuses on making web crawling for large language model (LLM) pretraining more efficient. It selects high-quality web pages intelligently and so cuts down on wasted crawling. The authors claim it needs to crawl only 21 pages for every 100 that would otherwise be needed, while maintaining the same pretraining performance. The project is hosted on GitHub, with detailed code and configuration documentation for developers and researchers. The core of Crawl4LLM is its data selection algorithm, which estimates how valuable each web page is for model training and crawls the most useful content first. The project has already attracted attention in academia and the developer community.

Function List

- Intelligent data selection: Prioritizes high-value content according to each web page's estimated influence on LLM pretraining.

- Multiple crawling modes: Supports the Crawl4LLM mode, random crawling, and other modes to suit different needs.

- Efficient crawling engine: Uses multi-threading and optimized configurations to significantly increase crawling speed.

- Data extraction and storage: Saves the crawled web page IDs and text content as files that can be used for model training.

- Support for large-scale datasets: Compatible with datasets such as ClueWeb22, suitable for academic research and industrial applications.

- Configuration customization: Crawling parameters, such as the number of threads and the maximum number of documents, are set in YAML files.

Usage Guide

Installation process

Crawl4LLM runs in a Python environment. The detailed installation steps are as follows:

- Environment preparation

- Make sure Python 3.10 or later is installed on your system.

- Create a virtual environment to avoid dependency conflicts:

```
python -m venv crawl4llm_env
source crawl4llm_env/bin/activate   # Linux/Mac
crawl4llm_env\Scripts\activate      # Windows
```

- Clone the project

- Download the source code from GitHub:

```
git clone https://github.com/cxcscmu/Crawl4LLM.git
cd Crawl4LLM
```


- Install dependencies

- Run the following command to install the required libraries:

```
pip install -r requirements.txt
```

- Note: The requirements file lists all the Python packages the crawler needs to run, so make sure you have a stable network connection.


- Download the classifier

- The project uses the DCLM fastText classifier to rate web page quality. The model file must be downloaded manually into the `fasttext_scorers/` directory.

- Example path: `fasttext_scorers/openhermes_reddit_eli5_vs_rw_v2_bigram_200k_train.bin`

- The model is available from official resources or related links.


- Prepare the dataset

- If you are using the ClueWeb22 dataset, you need to request access and store it on an SSD (its path is specified in the configuration).
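Before moving on, a quick preflight check can catch the most common setup problems. The script below is a minimal sketch and not part of Crawl4LLM. The `preflight` function is hypothetical, and it assumes `requirements.txt` installs the `fasttext` Python package. It only checks that the classifier file and, optionally, the ClueWeb22 root directory exist, not that they are valid.

```python
import importlib.util
import sys
from pathlib import Path
from typing import Dict, Optional

# Classifier path taken from the example in this guide (relative to the repo root).
DEFAULT_MODEL = Path(
    "fasttext_scorers/openhermes_reddit_eli5_vs_rw_v2_bigram_200k_train.bin"
)


def preflight(model_path: Path = DEFAULT_MODEL, cw22_root: Optional[Path] = None) -> Dict[str, bool]:
    """Report which installation prerequisites appear to be satisfied (hypothetical helper)."""
    checks = {
        # Python 3.10 or later, as required above.
        "python>=3.10": sys.version_info >= (3, 10),
        # Assumption: requirements.txt installs the `fasttext` package.
        "fasttext importable": importlib.util.find_spec("fasttext") is not None,
        # The classifier has to be downloaded manually into fasttext_scorers/.
        "classifier present": model_path.is_file(),
    }
    if cw22_root is not None:
        # Only checks that the directory exists, not that it holds ClueWeb22 data.
        checks["clueweb22 root exists"] = cw22_root.is_dir()
    return checks


if __name__ == "__main__":
    for name, ok in preflight().items():
        print(f"[{'ok' if ok else 'MISSING'}] {name}")
```

Run it from the repository root so the relative classifier path resolves.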

How to use

Crawl4LLM is operated mainly from the command line. The workflow has three main steps (configuration, crawling, and data extraction), plus an optional step for inspecting individual documents. The detailed flow is as follows:

1. Configure the crawling task

- In the `configs/` directory, create a YAML file (e.g. `my_config.yaml`) with contents like the following:

```yaml
cw22_root_path: "/path/to/clueweb22_a"
seed_docs_file: "seed.txt"
output_dir: "crawl_results/my_crawl"
num_selected_docs_per_iter: 10000
num_workers: 16
max_num_docs: 20000000
selection_method: "dclm_fasttext_score"
order: "desc"
wandb: false
rating_methods:
  - type: "length"
  - type: "fasttext_score"
    rater_name: "dclm_fasttext_score"
    model_path: "fasttext_scorers/openhermes_reddit_eli5_vs_rw_v2_bigram_200k_train.bin"
```

- Parameter descriptions:

  - `cw22_root_path`: Path to the ClueWeb22 dataset.
  - `seed_docs_file`: List of initial seed documents.
  - `num_workers`: Number of threads; adjust it to your machine's capacity.
  - `max_num_docs`: Maximum number of documents to crawl.
  - `selection_method`: Data selection method; `dclm_fasttext_score` is recommended.
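A typo in the YAML file may only show up once the crawl is running, so it can help to sanity-check the parsed configuration first. The sketch below assumes the file has already been loaded into a dict, for example with PyYAML's `yaml.safe_load`. The `validate_config` helper is hypothetical, and treating every key from the example above as required is an assumption, not Crawl4LLM's own validation logic.

```python
from typing import Any, Dict, List

# Keys and types taken from the example config above; treating all of them as
# required is an assumption made for this sketch.
REQUIRED_KEYS = {
    "cw22_root_path": str,
    "seed_docs_file": str,
    "output_dir": str,
    "num_selected_docs_per_iter": int,
    "num_workers": int,
    "max_num_docs": int,
    "selection_method": str,
}

# Counts that only make sense as positive integers.
POSITIVE_INT_KEYS = ("num_selected_docs_per_iter", "num_workers", "max_num_docs")


def validate_config(cfg: Dict[str, Any]) -> List[str]:
    """Return human-readable problems found in a parsed crawl config (empty list if none)."""
    problems: List[str] = []
    for key, expected_type in REQUIRED_KEYS.items():
        if key not in cfg:
            problems.append(f"missing key: {key}")
        elif not isinstance(cfg[key], expected_type):
            problems.append(f"{key} should be of type {expected_type.__name__}")
    for key in POSITIVE_INT_KEYS:
        value = cfg.get(key)
        if isinstance(value, int) and value <= 0:
            problems.append(f"{key} must be positive")
    return problems


if __name__ == "__main__":
    example = {
        "cw22_root_path": "/path/to/clueweb22_a",
        "seed_docs_file": "seed.txt",
        "output_dir": "crawl_results/my_crawl",
        "num_selected_docs_per_iter": 10000,
        "num_workers": 16,
        "max_num_docs": 20000000,
        "selection_method": "dclm_fasttext_score",
    }
    print(validate_config(example))  # []
```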

2. Running the crawler

- Execute the crawl command:

```
python crawl.py crawl --config configs/my_config.yaml
```

- When the crawl completes, the IDs of the crawled documents are saved to files under the path specified by `output_dir`.

3. Extracting document content

- Use the following command to convert the crawled document IDs to text:

```
python fetch_docs.py --input_dir crawl_results/my_crawl --output_dir crawl_texts --num_workers 16
```

- The output is a set of text files that can be used directly for subsequent model training.
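Steps 2 and 3 can be chained in a small driver script. This is a hedged sketch: `build_commands` and `run_pipeline` are hypothetical helpers, and the commands and flags are exactly the ones shown above. By default the script only prints the commands; it executes them only when started with `--run`.

```python
import subprocess
import sys
from typing import List


def build_commands(
    config: str, crawl_dir: str, text_dir: str, num_workers: int = 16
) -> List[List[str]]:
    """Build argv lists for the crawl step (step 2) and the text-extraction step (step 3)."""
    return [
        [sys.executable, "crawl.py", "crawl", "--config", config],
        # crawl_dir should match output_dir in the YAML config.
        [
            sys.executable, "fetch_docs.py",
            "--input_dir", crawl_dir,
            "--output_dir", text_dir,
            "--num_workers", str(num_workers),
        ],
    ]


def run_pipeline(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for argv in commands:
        print("$", " ".join(argv))
        if not dry_run:
            # check=True raises CalledProcessError, stopping the pipeline if a step fails.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    cmds = build_commands("configs/my_config.yaml", "crawl_results/my_crawl", "crawl_texts")
    run_pipeline(cmds, dry_run="--run" not in sys.argv)
```

Using `sys.executable` instead of a bare `python` makes both steps run under the interpreter of the active virtual environment. Keep `crawl_dir` in sync with `output_dir` in the YAML file.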

4. Viewing Individual Documents

- If you need to check a specific document and its links, you can run:

```
python access_data.py /path/to/clueweb22 <document_id>
```

Key Features in Practice

- Intelligent Web Page Selection

- The core of Crawl4LLM is its data selection capability. It rates web pages by their length and by a fastText quality classifier, and crawls the pages most useful for model training first. Users don't need to filter pages manually; the system performs this optimization automatically.

- How to do it: In the YAML configuration, set `selection_method` to `dclm_fasttext_score`, and make sure `model_path` points to the downloaded classifier.
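To make the selection idea concrete, here is a simplified, in-memory sketch of score-ordered crawling as we understand it. It keeps a frontier of discovered pages, repeatedly takes the highest-scoring ones, and adds their outlinks to the frontier. This is an illustration under stated assumptions, not Crawl4LLM's implementation:

- `priority_crawl` is hypothetical.
- `outlinks` stands in for the ClueWeb22 link graph.
- `score` stands in for the DCLM fastText rater.
- `per_iter` and `max_docs` play the roles of `num_selected_docs_per_iter` and `max_num_docs`.
- Popping the highest score first corresponds to `order: "desc"`.

```python
import heapq
from typing import Callable, Dict, List, Set, Tuple


def priority_crawl(
    seeds: List[str],
    outlinks: Dict[str, List[str]],
    score: Callable[[str], float],
    per_iter: int,
    max_docs: int,
) -> List[str]:
    """Simplified score-ordered crawl over an in-memory link graph (hypothetical helper)."""
    if per_iter <= 0:
        raise ValueError("per_iter must be positive")
    # heapq is a min-heap, so scores are negated to pop the highest-scoring page first.
    frontier: List[Tuple[float, str]] = []
    seen: Set[str] = set()

    def enqueue(doc_id: str) -> None:
        # Score each page once, when it is first discovered.
        if doc_id not in seen:
            seen.add(doc_id)
            heapq.heappush(frontier, (-score(doc_id), doc_id))

    for doc_id in seeds:
        enqueue(doc_id)

    crawled: List[str] = []
    while frontier and len(crawled) < max_docs:
        # Select this iteration's batch: the best-scoring pages, capped by per_iter and max_docs.
        batch: List[str] = []
        while frontier and len(batch) < per_iter and len(crawled) + len(batch) < max_docs:
            batch.append(heapq.heappop(frontier)[1])
        crawled.extend(batch)
        # "Fetching" a page here just means reading its outlinks and adding them to the frontier.
        for doc_id in batch:
            for target in outlinks.get(doc_id, []):
                enqueue(target)
    return crawled


if __name__ == "__main__":
    graph = {"a": ["b", "c"], "b": ["d"], "c": ["e"]}
    scores = {"a": 0.1, "b": 0.9, "c": 0.5, "d": 0.2, "e": 0.8}  # toy scores
    print(priority_crawl(["a"], graph, scores.__getitem__, per_iter=1, max_docs=4))
    # ['a', 'b', 'c', 'e']
```

In the toy run, page `d` is never crawled because the higher-scoring `e` takes the last slot. This is the point of prioritizing by score: low-value pages wait in the frontier instead of consuming the crawl budget.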

- Multi-threaded acceleration

- Use the `num_workers` parameter to adjust the number of threads. For example, on a 16-core CPU you can set it to 16 to make full use of the available compute.

- Note: A high thread count can exhaust memory, so test different values against your machine's configuration.
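One way to pick a starting value for `num_workers` is to bound it by both the core count and the available memory. The sketch below is a hypothetical helper, not part of Crawl4LLM. You would measure the per-worker memory figure yourself on a small test crawl; the numbers in the example are placeholders, not Crawl4LLM measurements.

```python
import os
from typing import Optional


def suggest_num_workers(
    mem_budget_gb: float, mem_per_worker_gb: float, cpu_count: Optional[int] = None
) -> int:
    """Suggest a num_workers value limited by both CPU cores and a memory budget."""
    if mem_per_worker_gb <= 0:
        raise ValueError("mem_per_worker_gb must be positive")
    cores = cpu_count if cpu_count is not None else (os.cpu_count() or 1)
    # Upper bound 1: one worker per core, as in the 16-core example above.
    by_cpu = cores
    # Upper bound 2: how many workers fit in the memory budget.
    by_memory = int(mem_budget_gb // mem_per_worker_gb)
    # Take the tighter bound, but always run at least one worker.
    return max(1, min(by_cpu, by_memory))


if __name__ == "__main__":
    # Placeholder figures: 16 cores, 2 GB per worker.
    print(suggest_num_workers(64, 2, cpu_count=16))  # 16 (CPU-bound)
    print(suggest_num_workers(16, 2, cpu_count=16))  # 8 (memory-bound)
```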


- Support for large-scale crawling

- The project is designed for very large datasets such as ClueWeb22 and is suitable for research scenarios that require the processing of billions of web pages.

- Recommendation: Store the data on an SSD to ensure good I/O performance, and set `max_num_docs` to your target number of documents (e.g., 20 million).
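For planning disk space, a back-of-the-envelope estimate is simply document count times average document size. The 10 KiB average below is a placeholder assumption, not a measured ClueWeb22 figure. Substitute the average you observe in your own extracted text; `estimate_storage_gb` is a hypothetical helper.

```python
def estimate_storage_gb(num_docs: int, avg_doc_kb: float) -> float:
    """Rough disk-space estimate (in GiB) for num_docs documents of avg_doc_kb KiB each."""
    if num_docs < 0 or avg_doc_kb < 0:
        raise ValueError("inputs must be non-negative")
    return num_docs * avg_doc_kb / (1024 * 1024)  # KiB -> GiB


if __name__ == "__main__":
    # 20 million documents (the max_num_docs target above) at a placeholder 10 KiB each.
    print(f"{estimate_storage_gb(20_000_000, 10):.1f} GiB")  # 190.7 GiB
```

Under this assumption the text alone lands in the hundreds-of-GB range, before counting crawl metadata or intermediate files.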

Tips for use

- Debugging and logging: Set `wandb: true` to record the crawling process in Weights & Biases (wandb) so you can analyze its efficiency.

- Storage planning: Crawl results are large, so reserve enough disk space (e.g., hundreds of GB).

- Extended functionality: Combined with the DCLM framework, the extracted text can be used directly for LLM pretraining.

With these steps, users can quickly get started with Crawl4LLM, crawl web data efficiently, and streamline their pretraining data pipeline.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
