Command R7B: Enhanced Retrieval and Reasoning, Multilingual Support, Fast and Efficient Generative AI

The smallest model in our R family delivers top-notch speed, efficiency, and quality to build powerful AI applications on common GPUs and edge devices.

Today, we are pleased to release Command R7B, the smallest, fastest, and final model in our R family of large language models (LLMs) built specifically for the enterprise. Command R7B delivers industry-leading performance among open-weights models in its class on the real-world tasks that matter to users. The model is designed for developers and enterprises that need to optimize for speed, cost-effectiveness, and compute resources.

Like other models in the R family, Command R7B offers a 128k context length and excels in several important enterprise use cases. It combines strong multilingual support, citation-verified retrieval-augmented generation (RAG), reasoning, tool use, and agentic behavior. Thanks to its compact size and efficiency, it can run on low-end GPUs, MacBooks, and even CPUs, dramatically lowering the cost of deploying AI applications in production.

High performance in a small package

A comprehensive model

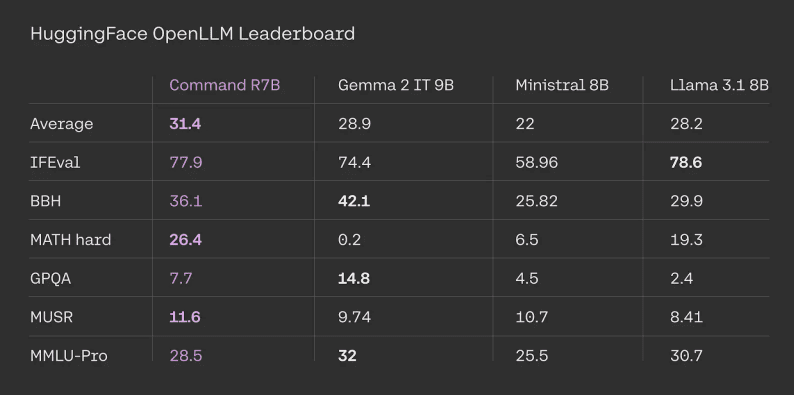

Command R7B performs well on standardized, externally verifiable benchmarks such as the Hugging Face Open LLM Leaderboard. Compared with other similarly sized open-weights models, Command R7B ranks first on average across all tasks.

Hugging Face Open LLM Leaderboard evaluation results. Competitors' numbers are taken from the official leaderboard. We computed the results for Command R7B ourselves, using the official Hugging Face prompts and evaluation code.

Efficiency gains in math, code and reasoning tasks

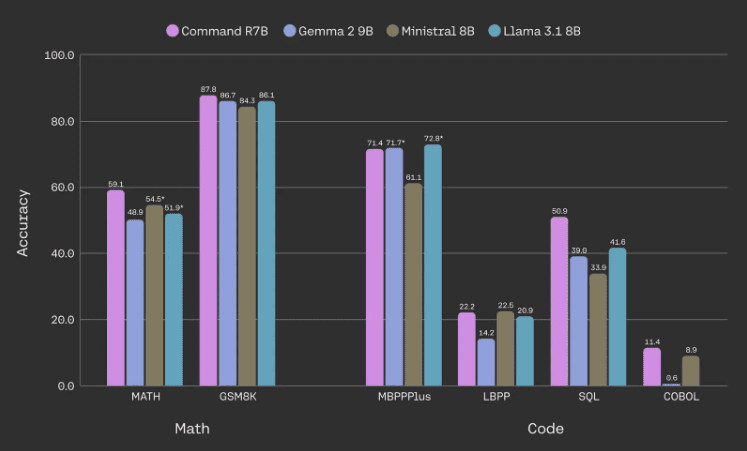

A major focus of Command R7B is improving performance on math and reasoning, code, and multilingual tasks. In particular, the model matches or exceeds comparably sized open-weights models on common math and code benchmarks while using fewer parameters.

Model performance on math and code benchmarks. All numbers are from internal evaluations, except those marked with an asterisk. Those are externally reported results, used where they were higher. We use the base version of MBPP+. LBPP is averaged across 6 languages. SQL is averaged across 3 datasets: the hard and extra-hard subsets of Spider dev and test only, BirdBench, and an internal dataset. COBOL is a dataset we developed in-house.

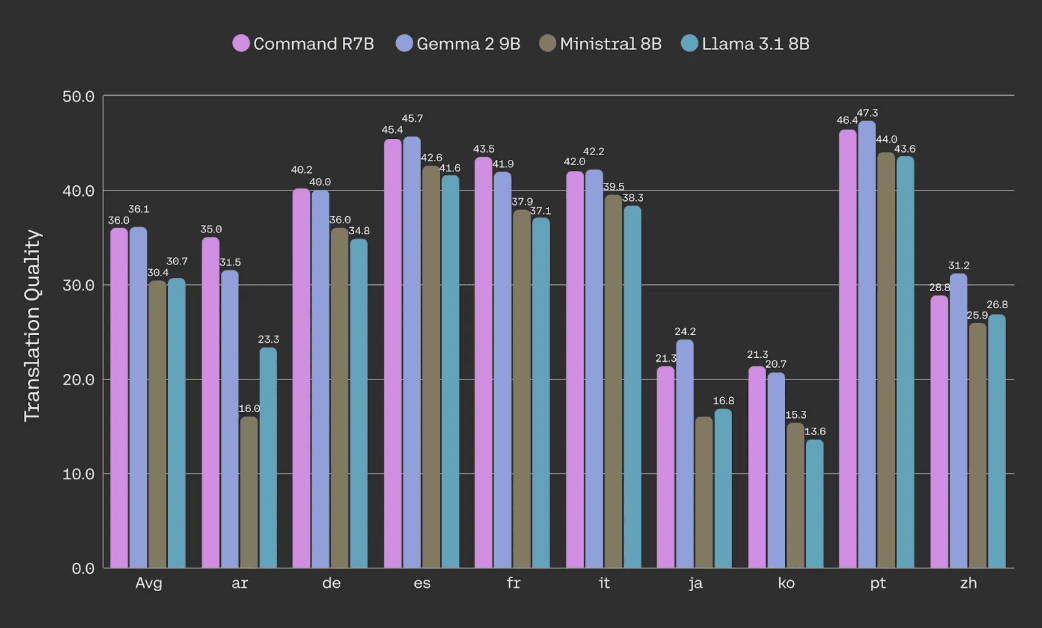

Document translation quality (corpus spBLEU), evaluated on the NTREX dataset.

Best-in-class RAG, tool use, and agents

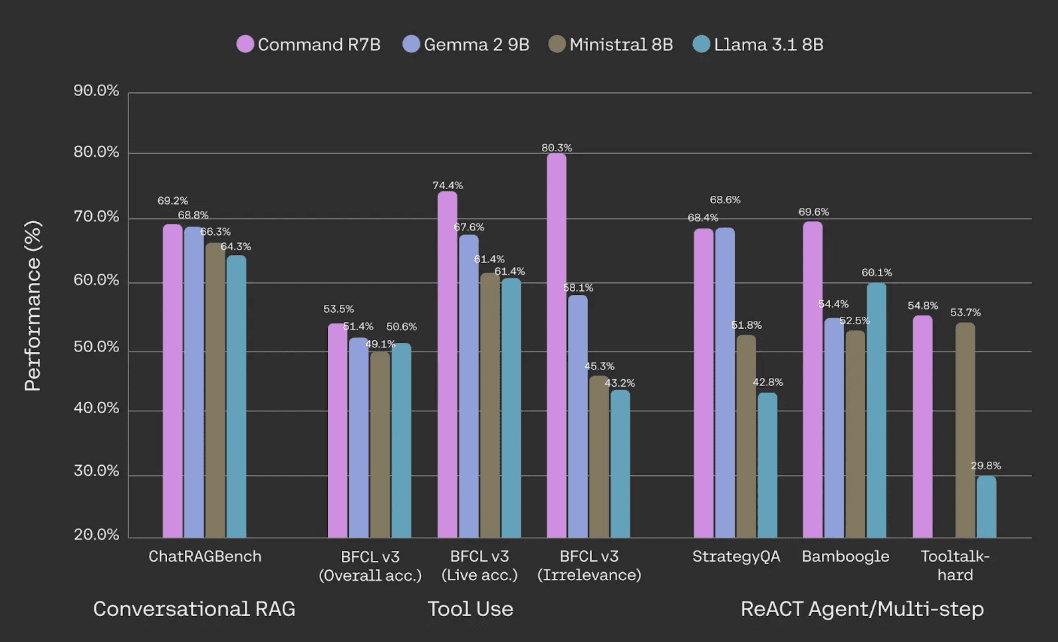

Command R7B outperforms other similarly sized open-weights models on core enterprise use cases such as RAG, tool use, and AI agents. It is an ideal choice for organizations seeking a cost-effective model grounded in their internal documents and data. As with our other R-series models, our RAG provides native inline citations. These significantly reduce hallucinations and make fact-checking easier.

Performance evaluation on ChatRAGBench (averaged over 10 datasets), BFCL-v3, StrategyQA, Bamboogle, and ToolTalk-hard. See footnote [1] below for methodology and more details.

On tool use, Command R7B delivers stronger overall performance than comparably sized models on the industry-standard Berkeley Function-Calling Leaderboard (BFCL). This shows that Command R7B is particularly adept at tool use in diverse, dynamic real-world environments and avoids calling tools unnecessarily, an important aspect of tool use in real-world applications. Command R7B's multi-step tool use enables it to power fast and efficient AI agents.

Optimization for enterprise use cases

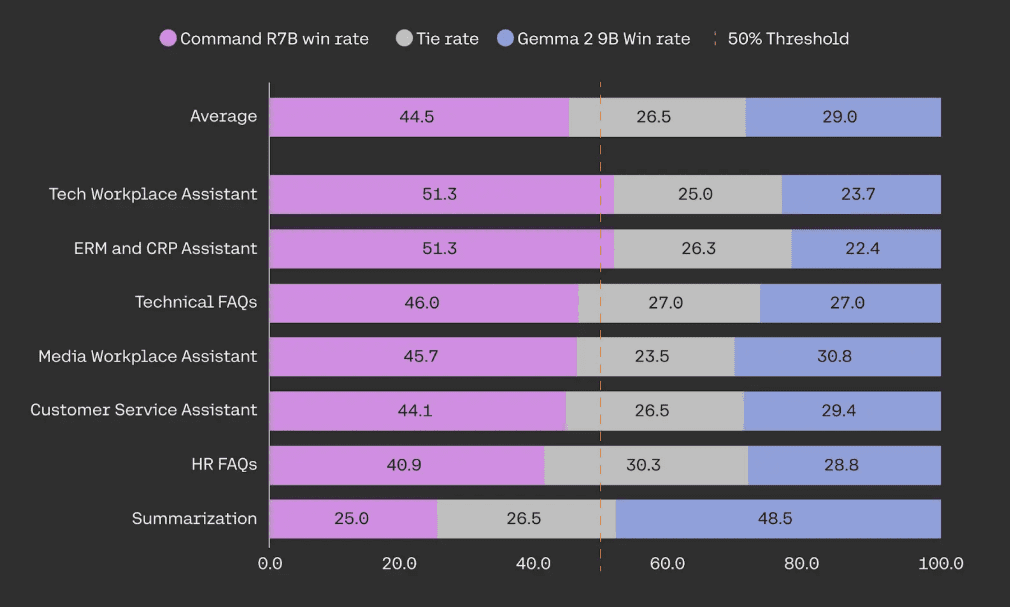

Our models are optimized for the capabilities organizations need when deploying AI systems in the real world. The R series offers an unparalleled balance of efficiency and strong performance. Part of this means ensuring the models excel in human evaluations, the gold standard for quality assessment. In blind head-to-head comparisons, Command R7B outperforms similarly sized open-weights models on RAG use cases that customers care about for building AI assistants. These include customer service, HR, compliance, and IT support.

In the human evaluation, Command R7B was compared against Gemma 2 9B on a sample of 949 enterprise RAG examples. At least three specially trained human annotators blind-annotated every example, assessing fluency, faithfulness, and response utility.
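The post does not say how the multiple annotations per example were combined into a head-to-head result. As a rough illustration only, the sketch below assumes a simple majority vote per example, with ties scored as half a win (a common convention). The labels `"A"`, `"B"`, and `"tie"` and both helper functions are hypothetical and are not part of the evaluation described above.

```python
from collections import Counter
from typing import Iterable, Sequence


def majority_label(votes: Sequence[str]) -> str:
    """Return the label most annotators chose, or 'tie' if the top count is shared.

    Hypothetical aggregation rule, for illustration only.
    """
    if not votes:
        raise ValueError("each example needs at least one vote")
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return "tie"
    return ranked[0][0]


def win_rate(per_example_votes: Iterable[Sequence[str]], model: str) -> float:
    """Share of examples won by `model` under majority vote; ties count as half a win."""
    outcomes = [majority_label(votes) for votes in per_example_votes]
    if not outcomes:
        raise ValueError("no examples")
    score = sum(1.0 if o == model else 0.5 if o == "tie" else 0.0 for o in outcomes)
    return score / len(outcomes)


if __name__ == "__main__":
    # Made-up votes from three annotators on four examples ("A" vs. "B").
    votes = [["A", "A", "B"], ["A", "A", "A"], ["B", "B", "A"], ["A", "B", "tie"]]
    print(win_rate(votes, "A"))
```

Scoring ties as half a win keeps the two models' win rates summing to 1. Other schemes, such as dropping ties entirely, would give different numbers.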

Efficient and fast

Command R7B's compact size gives it a small serving footprint, which suits rapid prototyping and iteration. It excels in high-throughput, real-time use cases such as chatbots and code assistants. It also enables on-device inference on hardware such as consumer-grade GPUs and CPUs, dramatically lowering infrastructure costs.

Throughout this process, we have not compromised on our enterprise-grade security and privacy standards for protecting customer data.

Quick Start

Command R7B is available today on the Cohere Platform and can also be accessed on Hugging Face. We are pleased to release the weights of this model to give the AI research community broader access to cutting-edge technology.

| Cohere API pricing | Input tokens | Output tokens |
|---|---|---|
| Command R7B | $0.0375 / 1M | $0.15 / 1M |
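Because the API is billed per token, a workload's cost is a weighted sum of its input and output token counts. The sketch below estimates that cost from the per-million prices in the table above. It ignores any other fees, discounts, or future price changes, so check Cohere's pricing page for current values. The function name and the example workload are hypothetical.

```python
# Prices from the table above, in USD per 1M tokens (assumed current; verify before relying on them).
INPUT_PRICE_PER_M = 0.0375
OUTPUT_PRICE_PER_M = 0.15


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost of a workload from its total token counts."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10,000 requests, each ~2,000 input and ~500 output tokens.
    requests = 10_000
    cost = estimate_cost_usd(requests * 2_000, requests * 500)
    print(f"${cost:.2f}")
```

In this example, 20M input tokens and 5M output tokens each cost about $0.75, for roughly $1.50 in total.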

[1] Conversational RAG: Average performance on the ChatRAGBench benchmark across 10 datasets. The benchmark tests the ability to generate responses in a variety of settings, including conversational tasks, long inputs, table analysis, and extracting and manipulating information in financial contexts. We improved the evaluation methodology by using a PoLL judge ensemble (Verga et al., 2024) composed of Haiku, GPT-3.5, and Command R. Based on 20,000 human ratings, this yielded higher agreement (Fleiss' kappa = 0.74, compared with 0.57 for the original evaluation).

Tool use: Performance on the BFCL-v3 benchmark as of 12/12/2024. Scores are taken from the public leaderboard where available; otherwise, they come from internal evaluations using the official codebase. For competitors, we report the higher of their BFCL 'prompted' and 'function-calling' scores. We report three scores:
- the overall score;
- the live subset score, which tests tool use in real-world, diverse, and dynamic environments;
- the irrelevance subset score, which tests how well the model avoids calling tools unnecessarily.

ReAct agent / multi-step: We evaluated LangChain ReAct agents connected to the internet on Bamboogle and StrategyQA. These benchmarks assess an agent's ability to decompose complex questions and formulate plans that successfully carry out research. Bamboogle was evaluated with the PoLL ensemble. StrategyQA was evaluated by checking whether the model followed the formatting instructions and concluded its answer with 'Yes' or 'No'. We use the test sets from Chen et al. (2023) and Press et al. (2023).

ToolTalk challenges the model to perform complex reasoning and actively seek information from the user to accomplish complex tasks such as account management, sending emails, and updating calendars. ToolTalk-hard is evaluated using the soft success rate from the official ToolTalk library. ToolTalk requires models to expose a function-calling API, which Gemma 2 9B does not have.
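The judge-agreement figures in the footnote are Fleiss' kappa values. Kappa compares how often raters agree on each item with how often they would agree by chance. Below is a minimal sketch of the standard formula, not the evaluation code used for these results. It takes a matrix where `counts[i][j]` is the number of raters who put item `i` in category `j`, and it assumes every item received the same number of ratings. The sample matrix is the common textbook illustration (10 items, 14 raters, 5 categories), not data from this evaluation.

```python
from typing import Sequence


def fleiss_kappa(counts: Sequence[Sequence[int]]) -> float:
    """Fleiss' kappa for counts[i][j] = number of raters assigning item i to category j."""
    if not counts:
        raise ValueError("need at least one item")
    n = sum(counts[0])  # raters per item
    k = len(counts[0])  # number of categories
    if n < 2 or any(len(row) != k or sum(row) != n for row in counts):
        raise ValueError("every item needs the same number (>= 2) of ratings over the same categories")
    N = len(counts)
    # Share of all ratings that fall into each category.
    p = [sum(row[j] for row in counts) / (N * n) for j in range(k)]
    # Observed agreement per item: fraction of rater pairs that agree on it.
    P_items = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    P_bar = sum(P_items) / N
    # Agreement expected by chance, given the category shares.
    P_e = sum(pj * pj for pj in p)
    if P_e == 1.0:
        raise ValueError("kappa is undefined when every rating falls in one category")
    return (P_bar - P_e) / (1 - P_e)


# Textbook example: 10 items, each rated by 14 raters into 5 categories.
EXAMPLE = [
    [0, 0, 0, 0, 14],
    [0, 2, 6, 4, 2],
    [0, 0, 3, 5, 6],
    [0, 3, 9, 2, 0],
    [2, 2, 8, 1, 1],
    [7, 7, 0, 0, 0],
    [3, 2, 6, 3, 0],
    [2, 5, 3, 2, 2],
    [6, 5, 2, 1, 0],
    [0, 2, 2, 3, 7],
]

if __name__ == "__main__":
    print(round(fleiss_kappa(EXAMPLE), 3))
```

A kappa of 1 means perfect agreement and 0 means chance-level agreement. The rise from 0.57 to 0.74 therefore indicates more consistent judging.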

© Copyright notice

All article copyrights belong to AI Sharing Circle. Please do not reproduce without permission.
