Cognita: An Open Source Framework for Building Modular RAG Applications and Rapidly Testing Diverse RAG Strategies

General Introduction

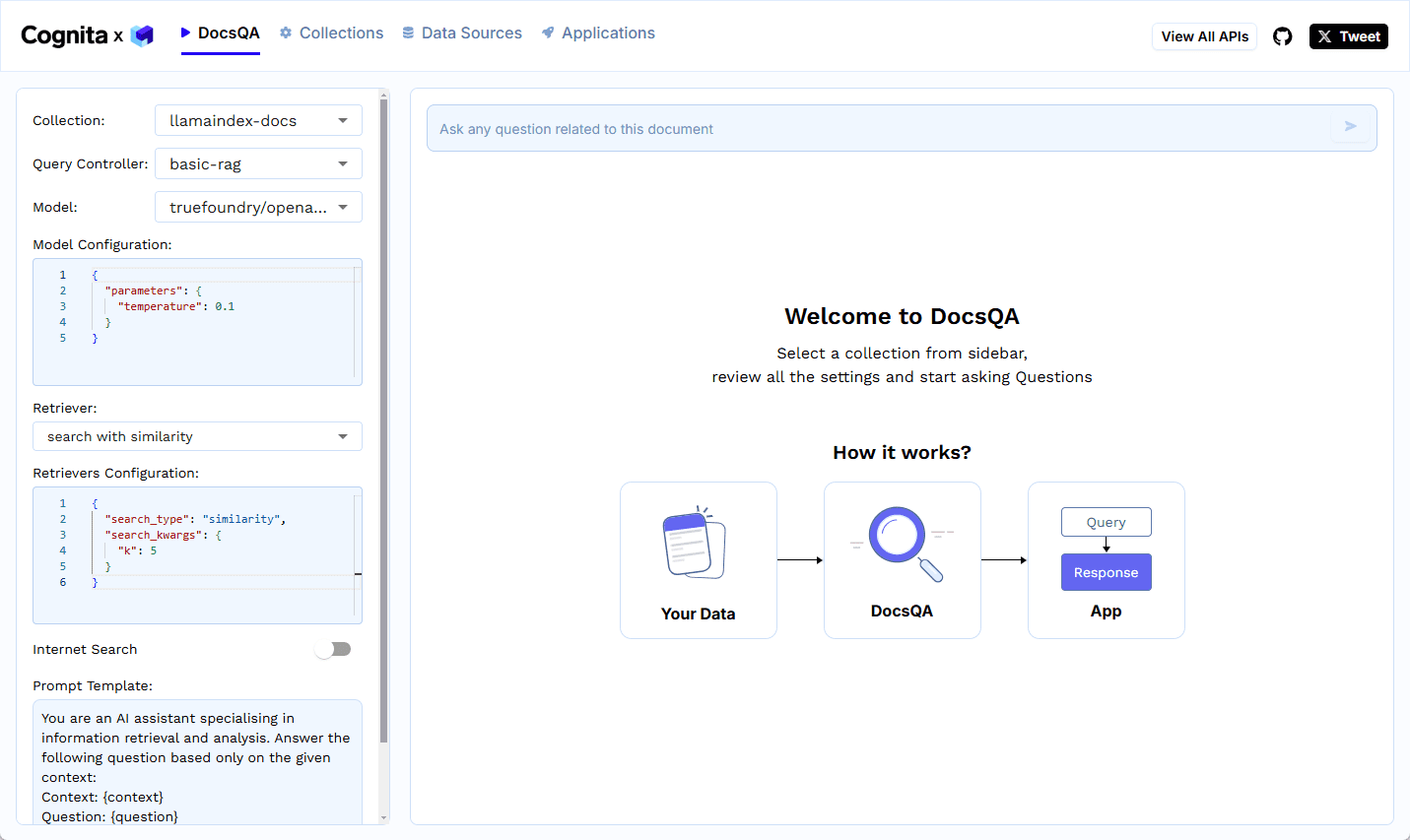

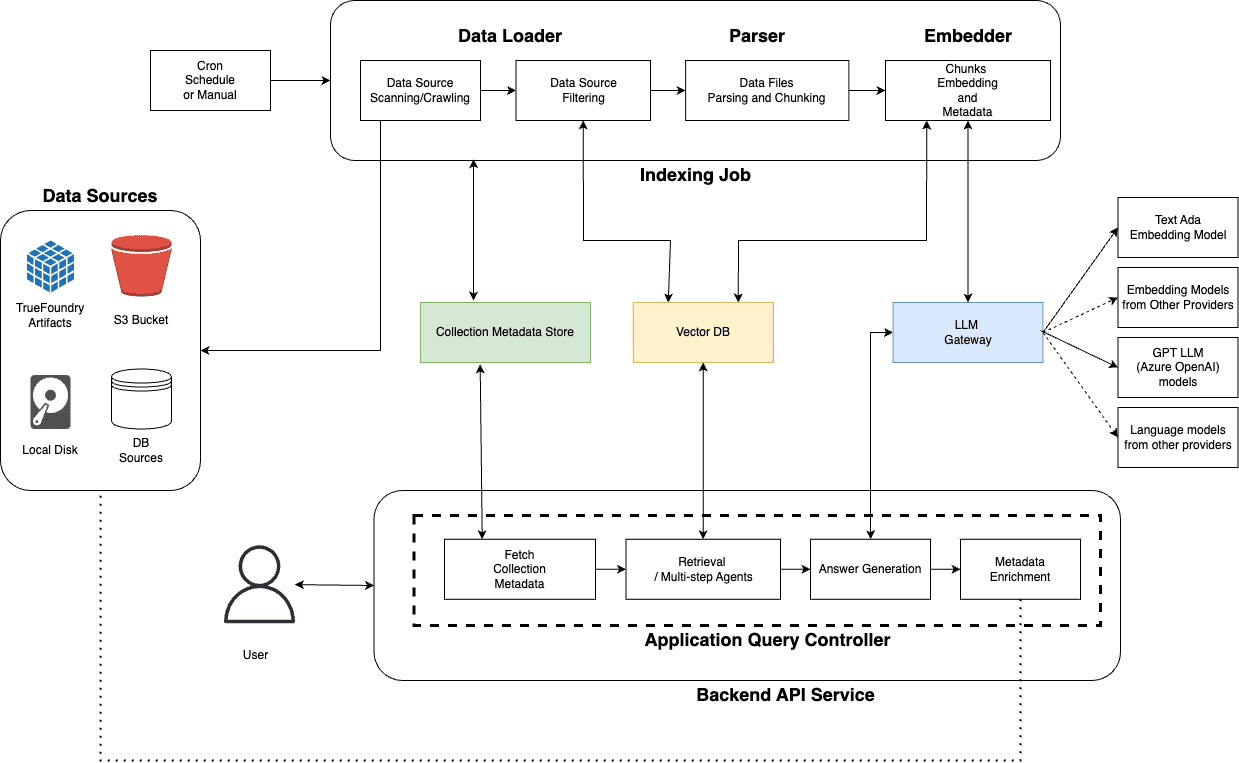

Cognita is an open source framework developed by TrueFoundry to simplify the development of RAG (Retrieval-Augmented Generation) applications. The framework provides a structured, modular approach that makes it easier to take a RAG application from prototype to production. Cognita supports multiple data sources, parsers, and embedding models, and provides an easy-to-use user interface that lets non-technical users experiment with RAG configurations. It integrates with existing systems, supports incremental indexing and multiple vector databases, and helps developers iterate and deploy quickly.

It builds on the modularity of LangChain/LlamaIndex to let you try different RAG strategies, and provides a friendly user interface for quickly testing and releasing production-grade applications.

Feature List

- Modular design: Split the RAG application into independent modules such as data loader, parser, embedder and retriever to improve code reusability and maintainability.

- Intuitive User Interface: Provides a visual interface that allows users to easily upload documents and perform Q&A operations.

- API-driven: Fully API-driven, making it easy to integrate with other systems.

- Incremental indexing: Re-index only changed documents, saving computing resources.

- Multi-data source support: Load data from local directories, S3, databases, and many other data sources.

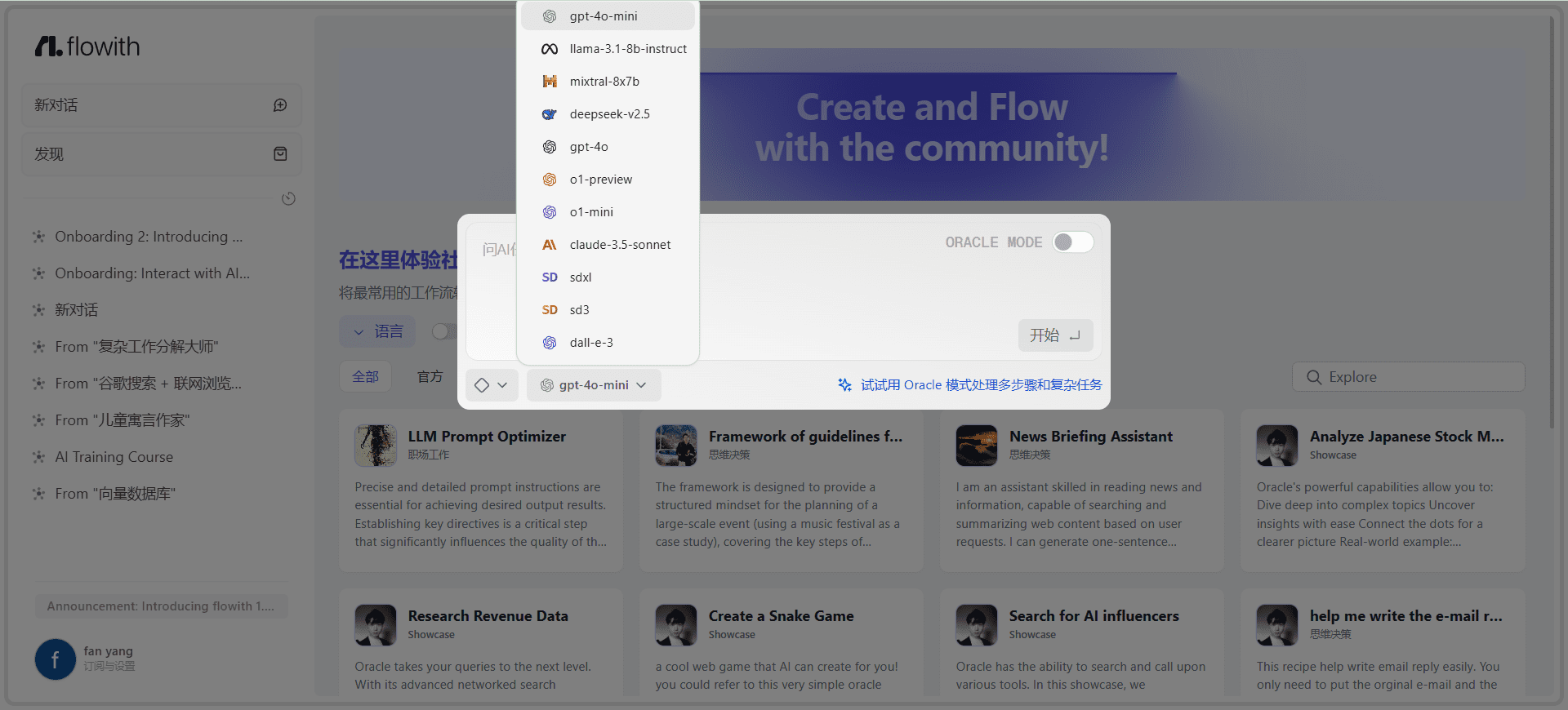

- Multi-model support: Supports embedding models and language models from OpenAI, Cohere, and other providers.

- Vector Database Integration: Seamless integration with vector databases such as Qdrant, SingleStore, etc.
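The modular design described in the list above can be illustrated with a minimal sketch. The stage signatures and the `Pipeline` class here are hypothetical and chosen for illustration only; Cognita's real interfaces may differ.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical stage signatures, not Cognita's actual interfaces.
Loader = Callable[[], List[str]]          # returns raw documents
Parser = Callable[[str], List[str]]       # splits one document into chunks
Embedder = Callable[[str], List[float]]   # maps a chunk to a vector


@dataclass
class Pipeline:
    loader: Loader
    parser: Parser
    embedder: Embedder

    def build_index(self) -> List[Tuple[str, List[float]]]:
        """Run load -> parse -> embed and return (chunk, vector) pairs."""
        index = []
        for document in self.loader():
            for chunk in self.parser(document):
                index.append((chunk, self.embedder(chunk)))
        return index


def memory_loader() -> List[str]:
    return ["first paragraph\n\nsecond paragraph"]


def paragraph_parser(document: str) -> List[str]:
    return [p.strip() for p in document.split("\n\n") if p.strip()]


def length_embedder(chunk: str) -> List[float]:
    # Placeholder "embedding"; a real embedder would call a model.
    return [float(len(chunk))]


pipeline = Pipeline(memory_loader, paragraph_parser, length_embedder)
index = pipeline.build_index()
```

Because each stage is just a callable with a fixed signature, any one of them can be replaced without touching the others, which is the reuse benefit the modular design aims for.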

Usage Guide

Installation process

Since Cognita is an open source Python project, the installation process involves the following steps:

- Clone the repository:

      git clone https://github.com/truefoundry/cognita.git
      cd cognita

- Set up a virtual environment (recommended practice):

      python -m venv .cognita_env
      source .cognita_env/bin/activate    # Unix
      .cognita_env\Scripts\activate       # Windows

- Install the dependencies:

      pip install -r requirements.txt

- Configure the environment variables: copy .env.example to .env and adjust it to your needs (API keys, database connection, and so on).
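To show what a .env file holds, here is a minimal sketch of a KEY=VALUE parser using only the standard library. The variable names in the sample are placeholders invented for this example; the real keys are the ones listed in the project's .env.example.

```python
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes from the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


# Hypothetical contents for illustration only.
sample = """
# API credentials
EXAMPLE_API_KEY="sk-placeholder"
EXAMPLE_DB_URL=postgresql://user:pass@localhost:5432/example
"""
settings = parse_env(sample)
```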

Guidelines for use

Data loading:

- Choose a data source: Cognita supports loading data from local files, S3 storage buckets, databases, or TrueFoundry artifacts. Choose the type of data source that is right for you.

- Upload or configure the data: If you choose local files, upload the files directly. For other data sources, configure the access rights and path.
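As a rough illustration of loading from a local directory, the sketch below walks a folder and collects the text of matching files. The function name `load_local_directory` and the suffix filter are assumptions made for this example, not Cognita's loader API.

```python
import tempfile
from pathlib import Path
from typing import Dict, Iterable


def load_local_directory(
    root: Path, suffixes: Iterable[str] = (".txt", ".md")
) -> Dict[str, str]:
    """Return {relative path: text} for matching files under root."""
    wanted = {s.lower() for s in suffixes}
    documents = {}
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() in wanted:
            key = path.relative_to(root).as_posix()
            documents[key] = path.read_text(encoding="utf-8")
    return documents


# Demo on a throwaway directory so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "notes.md").write_text("# Notes", encoding="utf-8")
    (root / "image.png").write_bytes(b"\x89PNG")
    documents = load_local_directory(root)
```

A loader for S3 or a database would return the same kind of mapping, so the later stages would not need to know where the data came from.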

Data parsing:

- Select a parser: Choose the appropriate parser for the document type (e.g. PDF, Markdown, text file). Cognita supports parsing multiple file formats by default.

- Perform Parsing: Click the Parsing button and the system will convert the document to a uniform format.
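Choosing a parser by document type can be sketched as a lookup from file extension to a parsing function. The registry and function names below are hypothetical, and PDF is left out because it would need a third-party library.

```python
from pathlib import PurePath
from typing import Callable, Dict, List


def parse_text(content: str) -> List[str]:
    """Split plain text into non-empty paragraphs."""
    return [p.strip() for p in content.split("\n\n") if p.strip()]


def parse_markdown(content: str) -> List[str]:
    """Like parse_text, but drop leading '#' heading markers."""
    return [p.lstrip("#").strip() for p in parse_text(content)]


# Hypothetical registry: extension -> parser function.
PARSERS: Dict[str, Callable[[str], List[str]]] = {
    ".txt": parse_text,
    ".md": parse_markdown,
}


def parse_document(filename: str, content: str) -> List[str]:
    """Dispatch to the parser registered for the file's extension."""
    suffix = PurePath(filename).suffix.lower()
    try:
        parser = PARSERS[suffix]
    except KeyError:
        raise ValueError(f"no parser registered for {suffix!r}") from None
    return parser(content)


chunks = parse_document("guide.md", "# Title\n\nBody text.")
```

Every parser returns the same structure (a list of text chunks), which corresponds to the "uniform format" the parsing step produces.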

Data embedding:

- Select an embedding model: Choose the embedding model (e.g. an OpenAI model or an open source model) according to your needs.

- Generate embeddings: Run the embedding step to convert text into vector representations for subsequent retrieval.
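To make "convert text into a vector" concrete, here is a toy stand-in for an embedding model: it hashes each word into a fixed number of buckets and normalizes the result. This is not how OpenAI or other real embedding models work; it only shows the shape of the input and output.

```python
import hashlib
import math
from typing import List


def toy_embed(text: str, dim: int = 16) -> List[float]:
    """Hash each word into one of `dim` buckets, then L2-normalize."""
    vector = [0.0] * dim
    for word in text.lower().split():
        # sha256 is used so the bucket is stable across runs,
        # unlike Python's built-in hash() for strings.
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vector[int.from_bytes(digest[:4], "big") % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector


vec = toy_embed("retrieval augmented generation")
```

Whatever model is selected, the contract is the same: a string goes in and a fixed-length list of floats comes out, which is what the vector database stores.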

Query and Retrieval:

- Enter a query: Enter your query in the UI or via the API.

- Retrieve relevant information: The system will retrieve the most relevant document fragments in the database according to your query.

- Generate Answers: Use the selected language model to generate answers based on the retrieved segments.
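The retrieve-then-generate flow above can be sketched with cosine similarity over stored vectors, followed by prompt assembly. The hand-made 2-D vectors stand in for real embeddings, and `build_prompt` is a hypothetical helper; the prompt wording is an assumption, not Cognita's template.

```python
import math
from typing import List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query_vec: Sequence[float],
    index: List[Tuple[str, Sequence[float]]],
    k: int = 2,
) -> List[str]:
    """Return the k chunk texts most similar to the query vector."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, chunks: List[str]) -> str:
    """Assemble the text that would be sent to the language model."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


index = [
    ("Cats purr.", [1.0, 0.0]),
    ("Dogs bark.", [0.0, 1.0]),
    ("Kittens purr too.", [0.9, 0.1]),
]
hits = top_k([1.0, 0.0], index, k=2)
prompt = build_prompt("Which animals purr?", hits)
```

In a real deployment the similarity search would be delegated to the vector database rather than computed in a Python loop.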

Incremental indexing:

- Monitor data changes: Cognita provides the ability to index only new or updated documents, increasing efficiency and saving computing resources.
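One common way to index only new or updated documents is to store a content fingerprint per document and compare it on the next run. The sketch below assumes this hash-based approach; the source does not say how Cognita detects changes, and the handling of removed documents is an addition for completeness.

```python
import hashlib
from typing import Dict, List, Tuple


def fingerprint(content: str) -> str:
    """Stable content hash used to detect changes."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def plan_reindex(
    documents: Dict[str, str], seen: Dict[str, str]
) -> Tuple[List[str], List[str]]:
    """Compare current documents against stored fingerprints.

    Returns (ids to index or re-index, ids to delete from the index).
    """
    changed = sorted(
        doc_id
        for doc_id, text in documents.items()
        if seen.get(doc_id) != fingerprint(text)
    )
    removed = sorted(doc_id for doc_id in seen if doc_id not in documents)
    return changed, removed


previous = {
    "a.md": fingerprint("alpha"),
    "b.md": fingerprint("beta"),
    "c.md": fingerprint("gamma"),
}
current = {"a.md": "alpha", "b.md": "beta v2", "d.md": "delta"}
to_index, to_delete = plan_reindex(current, previous)
```

Here `a.md` is unchanged and skipped, so only the modified `b.md` and the new `d.md` would be parsed and embedded again.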

User Interface Operation:

- Manage Collections: You can create, delete or edit document collections in the UI.

- Q&A operation: Users can try out the RAG system by asking questions and reading the answers directly in the interface.

Special Features

- Multi-language support: If your data contains multiple languages, Cognita's multi-language support lets you run Q&A across them.

- Dynamic Model Switching: Cognita allows you to switch between different embedding or language models on demand, without having to redeploy the entire application.
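Switching models without redeploying is often implemented with a registry keyed by model name, where the name is supplied with each request. The sketch below shows that pattern with two placeholder embedders; the registry, decorator, and model names are hypothetical and do not describe Cognita's internals.

```python
from typing import Callable, Dict, List

Embedder = Callable[[str], List[float]]
EMBEDDERS: Dict[str, Embedder] = {}


def register(name: str) -> Callable[[Embedder], Embedder]:
    """Decorator that adds an embedder to the registry under `name`."""
    def wrap(fn: Embedder) -> Embedder:
        EMBEDDERS[name] = fn
        return fn
    return wrap


@register("length")
def length_embedder(text: str) -> List[float]:
    return [float(len(text))]


@register("word-count")
def word_count_embedder(text: str) -> List[float]:
    return [float(len(text.split()))]


def embed(text: str, model: str) -> List[float]:
    # The model name is looked up per call, so switching needs no restart.
    return EMBEDDERS[model](text)


a = embed("hello world", model="length")
b = embed("hello world", model="word-count")
```

Note that vectors produced by different embedding models are generally not comparable, so switching the embedder for an existing collection usually means re-embedding its documents.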

With the steps and features described above, users can quickly get started and leverage Cognita to build and optimize their own RAG applications to improve AI-driven information retrieval and generation.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
