code2prompt: Converting Codebases into Prompt Files That LLMs Can Understand

General Introduction

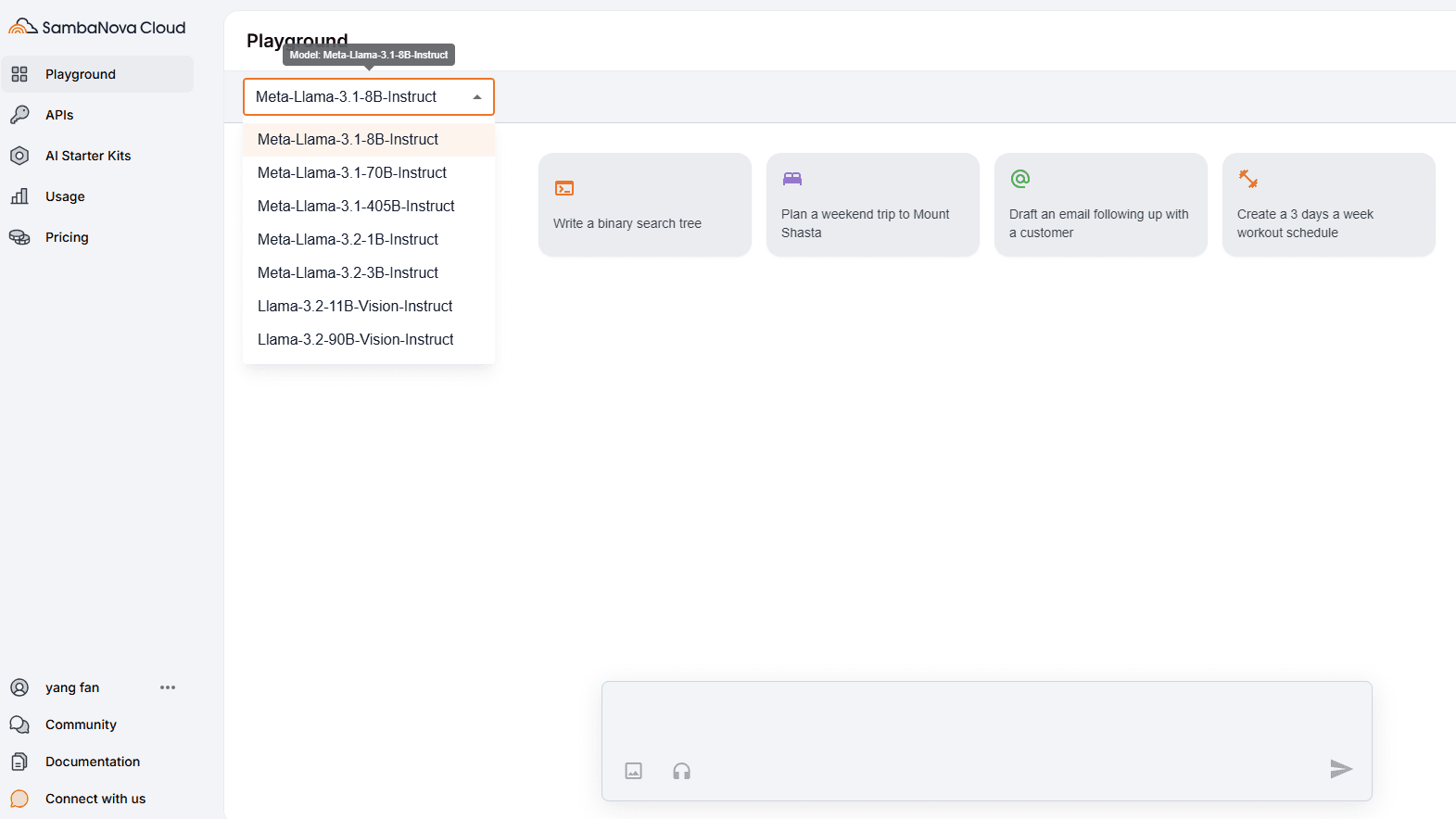

code2prompt is an open-source command-line tool created by developer Mufeed VH and hosted on GitHub. It converts an entire codebase into a single prompt suitable for large language models (LLMs): it walks the code directory, builds a source tree, and combines the file contents into formatted Markdown that can be used directly for code analysis, documentation, or feature work. With Handlebars template customization, token counting, and Git diff integration, it is particularly well suited to developers who want to use models such as ChatGPT or Claude on large, complex codebases, whether the goal is optimizing performance, finding bugs, or writing commit messages. As of March 3, 2025, the project had more than 4,500 stars on GitHub, a sign of its broad adoption in the developer community.

Function List

- Codebase Conversion: Combines the code files in a given directory into a single prompt an LLM can read.

- Source Tree Generation: Automatically generates a tree of the directory structure so the project layout is easy to grasp.

- Template Customization: Lets you control the output format with Handlebars templates for different use cases.

- Token Counting: Counts the tokens in the generated prompt so you can check that it fits within a given model's context window.

- Git Integration: Can include Git diffs and commit logs to show the history of code changes.

- File Filtering: Supports glob-pattern filtering and respects .gitignore, so irrelevant files are skipped.

- Markdown Output: Produces structured Markdown that can be pasted straight into an LLM.

- Cross-platform Support: Runs on Linux, macOS, and Windows.

- Clipboard Support: Copies the generated prompt to the clipboard automatically to save a step.

Usage Guide

Installation

code2prompt is a command-line tool written in Rust, and it can be installed in several ways:

Method 1: Build from source

- Prerequisites: Make sure Rust and Cargo (Rust's package manager) are installed. You can check with:

rustc --version
cargo --version

If either is missing, download and install Rust from the official Rust website.

- Clone the repository:

git clone https://github.com/mufeedvh/code2prompt.git

cd code2prompt

- Build:

cargo build --release

Once the build finishes, the code2prompt executable is in the target/release/ directory.

- Move it onto your PATH (optional) so you can run it from anywhere, for example:

sudo mv target/release/code2prompt /usr/local/bin/
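If you are unsure whether the prerequisites are in place, a small check can be run before cloning and building. This is a minimal sketch; the function name require_tools is hypothetical and not part of code2prompt.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report every required tool that is not on PATH.
# Returns 0 when all tools are found, 1 otherwise.
require_tools() {
  local missing=0
  local tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Method 1 needs git to clone and rustc/cargo to build.
if require_tools git rustc cargo; then
  echo "ready to build"
fi
```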

Method 2: Download a prebuilt binary

- Download the latest binary for your operating system from the project's GitHub Releases page (for example, code2prompt-v2.0.0-linux-x86_64).

- Extract it if it comes as an archive, rename the binary to code2prompt if needed, then make it executable:

chmod +x code2prompt

- Move it onto your PATH (optional):

sudo mv code2prompt /usr/local/bin/
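Because the downloaded file may carry the release name (such as code2prompt-v2.0.0-linux-x86_64), the chmod and mv steps can be folded into one call to the standard install utility, which copies the file and sets its permissions together. This is a sketch that assumes the binary has already been extracted; install_c2p_binary is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy a downloaded release binary into a target
# directory under the name "code2prompt", with mode 755 (rwxr-xr-x).
# Usage: install_c2p_binary <downloaded-file> <target-dir>
install_c2p_binary() {
  local src="$1" dest_dir="$2"
  if [ ! -f "$src" ]; then
    echo "not a file: $src" >&2
    return 1
  fi
  mkdir -p "$dest_dir"
  # install copies the file and sets its permissions in a single step.
  install -m 755 "$src" "$dest_dir/code2prompt"
}
```

For a system directory, the equivalent one-liner is sudo install -m 755 code2prompt-v2.0.0-linux-x86_64 /usr/local/bin/code2prompt.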

Method 3: Install with Nix

If you use the Nix package manager, you can install it directly:

# Without flakes

nix-env -iA nixpkgs.code2prompt

# With flakes

nix profile install nixpkgs#code2prompt

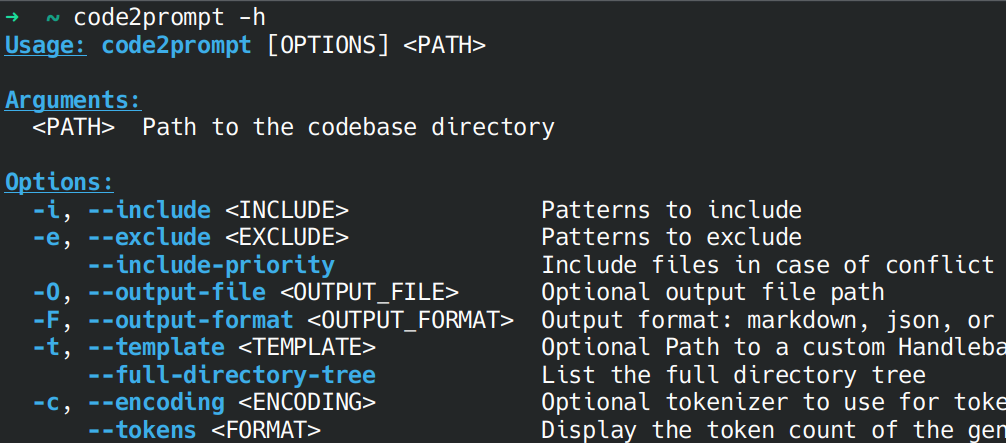

Usage

Once installed, run code2prompt from the command line. The main features work as follows:

1. Basic use: generating a prompt for a codebase

Given a code directory /path/to/codebase, run:

code2prompt /path/to/codebase

- The output contains the source tree and the contents of every file. By default it is formatted as Markdown and copied to the clipboard.

- Sample output:

Source Tree:

dir/
├── file1.rs
└── file2.py

`dir/file1.rs`:

<file contents>
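To make the idea concrete, the shell sketch below assembles a prompt of the same general shape as the sample output: a file listing followed by each file's path and contents. It is a rough approximation for illustration only, not how code2prompt is implemented: it prints a flat sorted list instead of a drawn tree, skips only the .git directory, and does not honor .gitignore or detect binary files. The function names list_files and mini_prompt are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of a code2prompt-style prompt (not its implementation).

# List regular files under $1 as sorted relative paths, skipping .git/.
list_files() {
  (cd "$1" && find . -path ./.git -prune -o -type f -print) |
    sed 's|^\./||' | sort
}

# Print the project path, a flat file listing, then each file's contents.
mini_prompt() {
  local root="$1"
  printf 'Project Path: %s\n\nSource Tree:\n\n' "$root"
  list_files "$root"
  echo
  list_files "$root" | while IFS= read -r path; do
    printf '`%s`:\n\n' "$path"
    cat "$root/$path"
    echo
  done
}
```

For example, mini_prompt /path/to/codebase > sketch.md writes the result to a file for comparison with code2prompt's real output.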

2. Custom templates

code2prompt ships with built-in templates (for example, for writing Git commit messages or adding documentation comments) in the templates/ folder. You can also write your own. For example, to add documentation comments with document-the-code.hbs:

code2prompt /path/to/codebase -t templates/document-the-code.hbs

- Custom templates use Handlebars syntax. The available variables include absolute_code_path (the code path), source_tree (the source tree), and files (the list of files).
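As a starting point for your own template, the sketch below writes a minimal one with a shell heredoc. It uses the three variables listed above; the per-file fields path and code inside {{#each files}} are an assumption, so compare them against one of the templates in the templates/ folder before relying on them. The template text, the helper write_review_template, and the file name my-review.hbs are made up for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal Handlebars template to the file $1.
# ASSUMPTION: each entry in "files" exposes "path" and "code" fields.
write_review_template() {
  cat > "$1" <<'EOF'
Project: {{ absolute_code_path }}

Source Tree:

{{ source_tree }}

{{#each files}}
File: {{ path }}

{{ code }}

{{/each}}

Review the code above and list likely bugs, one per line.
EOF
}
```

After running write_review_template my-review.hbs, pass the file with -t: code2prompt /path/to/codebase -t my-review.hbs.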

3. Token counting and encoding options

Show the token count of the generated prompt; several tokenizers are supported:

code2prompt /path/to/codebase --tokens -c cl100k

- Available tokenizers: cl100k (default), p50k, p50k_edit, r50k.
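When you only need a quick sanity check on a saved prompt file, a byte-based estimate can flag files that are clearly too large. This sketch relies on the common rule of thumb of roughly 4 bytes per token for English text; it is not a tokenizer and will not match cl100k or the other encodings exactly, so use --tokens for real counts. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Rough pre-check only: NOT a tokenizer. Assumes ~4 bytes per token.

# Print the estimated token count of a file (bytes / 4, rounded up).
estimate_tokens() {
  local bytes
  bytes=$(wc -c < "$1" | tr -d ' ')
  echo $(( (bytes + 3) / 4 ))
}

# Exit status 0 if the estimate for file $1 is within the budget $2.
fits_budget() {
  [ "$(estimate_tokens "$1")" -le "$2" ]
}
```

For example, after code2prompt /path/to/codebase -o prompt.md, running fits_budget prompt.md 100000 || echo "probably too large" gives a quick answer; 100000 is an arbitrary example budget, not any particular model's limit.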

4. Git Integration

Include the Git diff of staged files:

code2prompt /path/to/codebase --diff

Compare two branches:

code2prompt /path/to/codebase --git-diff-branch "main, development"

Include the commit log between two branches:

code2prompt /path/to/codebase --git-log-branch "main, development"
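Before generating a prompt, it can help to look at the same kind of information with plain Git, so you know what the diff and log sections will roughly contain. The commands below are standard Git (git log base..feature, git diff base..feature, git diff --cached); they are not code2prompt internals, and code2prompt's exact output format may differ. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Plain-git previews; hypothetical helper names, standard git commands.

# Commits reachable from $2 but not from $1, then the diff between them.
preview_branch_changes() {
  local base="$1" feature="$2"
  echo "== Commits on $feature not on $base =="
  git log --oneline "$base..$feature"
  echo "== Diff from $base to $feature =="
  git diff "$base..$feature"
}

# Staged changes only (what the next commit would contain).
preview_staged() {
  git diff --cached
}
```

Run them inside the repository, for example preview_branch_changes main development.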

5. File filtering

Exclude specific files or directories:

code2prompt /path/to/codebase --exclude "*.log" --exclude "tests/*"

Include only specific files:

code2prompt /path/to/codebase --include "*.rs" --include "*.py"
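To reason about which files a combination of include and exclude patterns keeps, the bash sketch below applies glob patterns with bash's own [[ == ]] matching. It is an illustration under stated assumptions, not code2prompt's matcher: here an exclude match always wins over an include match, and "*" also matches "/" (so "tests/*" excludes nested files). The arrays INCLUDES and EXCLUDES and both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical patterns mirroring the examples above.
INCLUDES=("*.rs" "*.py")
EXCLUDES=("*.log" "tests/*")

# Return 0 if the path should be kept. ASSUMPTION: excludes take priority;
# with no include patterns, everything not excluded is kept.
keep_path() {
  local path="$1" pat
  for pat in "${EXCLUDES[@]}"; do
    # Unquoted $pat on the right of == is treated as a glob pattern.
    [[ $path == $pat ]] && return 1
  done
  [ "${#INCLUDES[@]}" -eq 0 ] && return 0
  for pat in "${INCLUDES[@]}"; do
    [[ $path == $pat ]] && return 0
  done
  return 1
}

# Read newline-separated paths on stdin and print the ones that are kept.
filter_paths() {
  local p
  while IFS= read -r p; do
    keep_path "$p" && printf '%s\n' "$p"
  done
  return 0
}
```

For example, feeding src/main.rs, app.py, debug.log, tests/unit/a.rs, and README.md through filter_paths keeps only src/main.rs and app.py.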

6. Save output

Save the results as a file instead of just copying them to the clipboard:

code2prompt /path/to/codebase -o output.md

Example of operation

Suppose you want to generate performance optimization recommendations for a Python project:

- Run command:

code2prompt /path/to/project -t templates/improve-performance.hbs -o prompt.md - commander-in-chief (military)

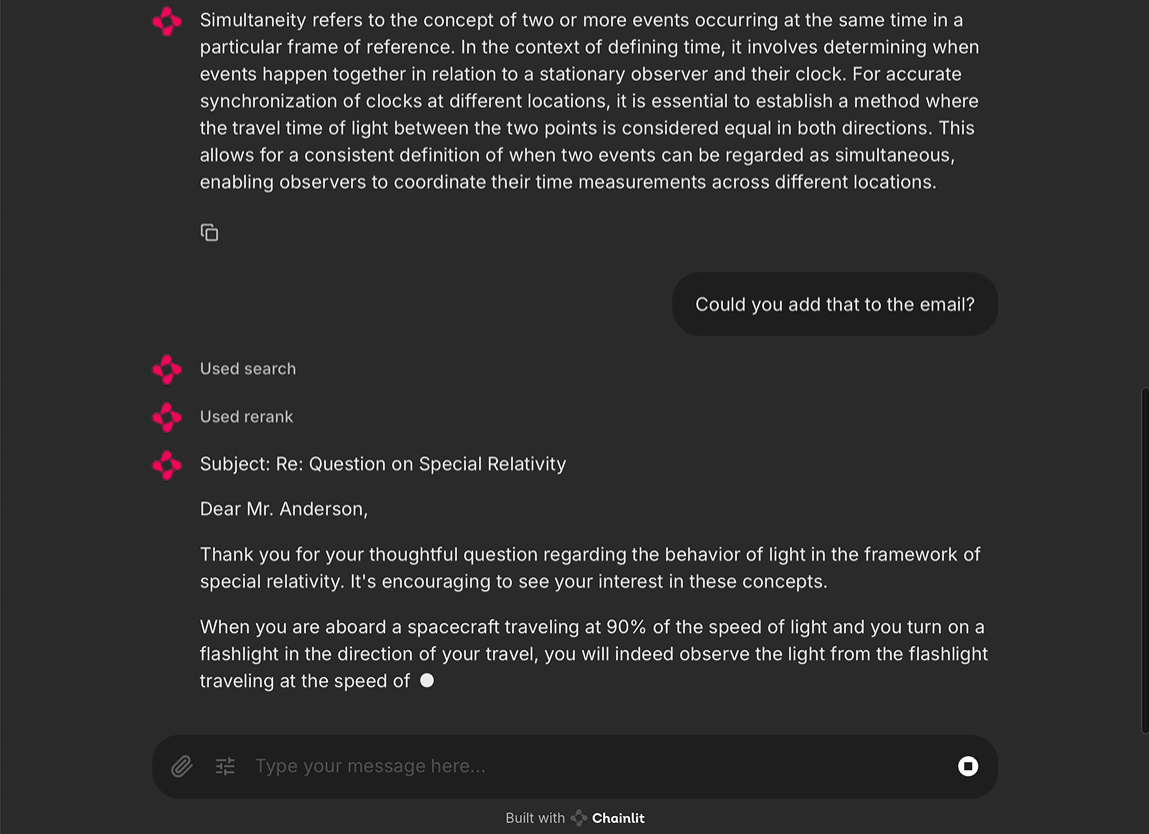

prompt.mdContent uploaded to Claude or ChatGPT, enter, "Suggest performance optimizations based on this code base." - Get optimizations returned by the model, such as loop optimizations, memory management recommendations, etc.

Caveats

- If copying to the clipboard fails on some systems (for example, Ubuntu 24.04), send the output to the terminal and pipe it into a clipboard tool yourself:

code2prompt /path/to/codebase -o /dev/stdout | xclip -selection clipboard

- Check your .gitignore file to make sure irrelevant files are ignored (this filtering can be disabled with --no-ignore).
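xclip only works with X11. On Wayland sessions, which recent Ubuntu releases use by default, wl-copy from the wl-clipboard package may work where xclip does not, and macOS provides pbcopy. The sketch below picks whichever of these is installed; the order of preference and the function names are assumptions for this example.

```shell
#!/usr/bin/env bash
# Print a clipboard command that reads stdin, preferring Wayland, then X11,
# then macOS. Returns 1 if none of them is installed.
clipboard_cmd() {
  if command -v wl-copy >/dev/null 2>&1; then
    echo "wl-copy"
  elif command -v xclip >/dev/null 2>&1; then
    echo "xclip -selection clipboard"
  elif command -v pbcopy >/dev/null 2>&1; then
    echo "pbcopy"
  else
    return 1
  fi
}

# Pipe stdin into whichever clipboard tool was found.
to_clipboard() {
  local cmd
  cmd="$(clipboard_cmd)" || { echo "no clipboard tool found" >&2; return 1; }
  # Left unquoted on purpose so "xclip -selection clipboard" splits into words.
  $cmd
}
```

Usage: code2prompt /path/to/codebase -o /dev/stdout | to_clipboard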

With these steps you can get started with code2prompt quickly and turn your codebase into prompts for large models, covering the whole workflow from analysis to optimization.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
