Learn how AI Coding works, starting with Cline!

Unexpectedly, AI has set off a half-changing sky in the programming field. From v0, bolt.new to all kinds of programming tools Cursor, Windsurf combined with Agant, AI Coding already has a huge potential of idea MVP. From the traditional AI-assisted coding, to today's direct project generation behind, in the end how to realize?

This article will look at open source products Cline Starting from there, we'll take a peek at some of the implementation ideas of AI Coding products at this stage. At the same time, you can understand the deeper principles and make better use of AI editors.

The final implementation may not be consistent across AI Coding editors. Also, this article will not go into the implementation details of Tool Use.

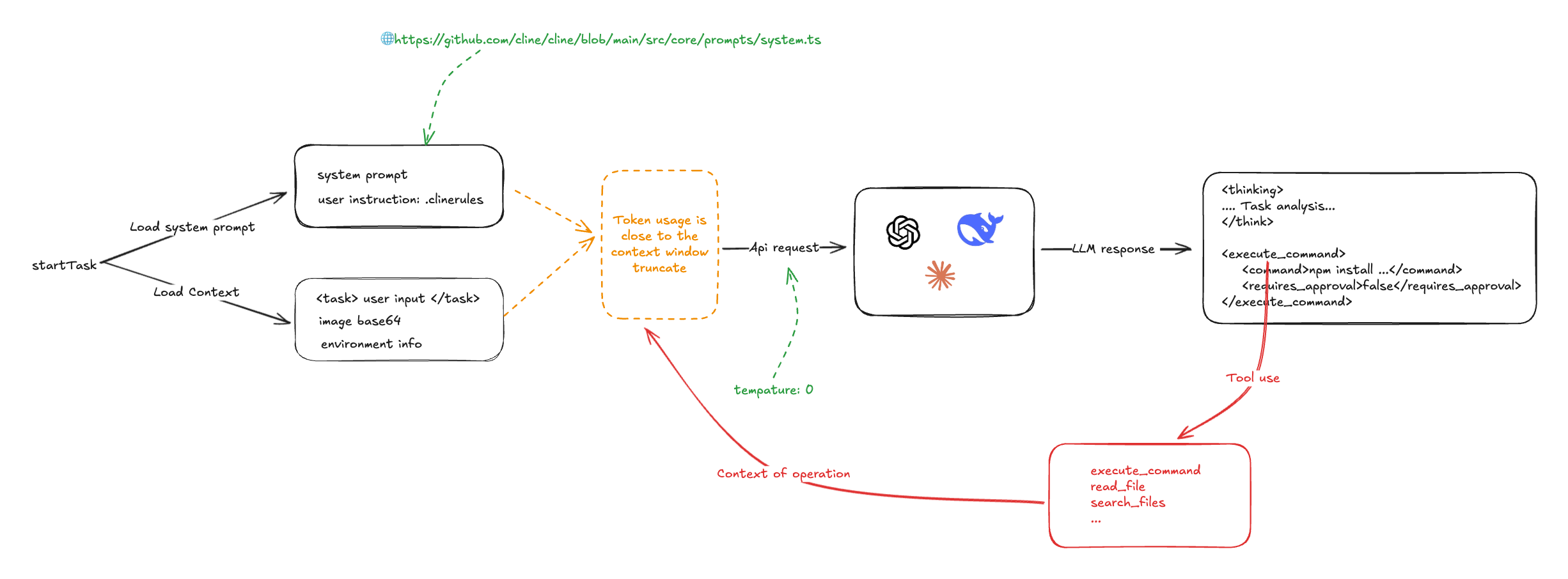

Cline I made a sketch of the overall process:

At its core, Cline relies on system prompts and the command following capabilities of the Big Language Model. At the start of a programming task, system prompts, user-defined prompts, user input, and information about the project's environment (which files, open tabs, etc.) are collected and submitted to the LLM, which outputs solutions and actions in accordance with the directives, and to the LLM, which outputs solutions and actions in accordance with the directives, and to the LLM, which outputs solutions and actions in accordance with the directives. <execute_command />,<read_file />Cline calls the written Tool Use capability to perform the processing and passes the results to the LLM for processing. Cline makes multiple calls to LLM to accomplish a single task.

system cue

Cline's system prompts are v0-like prompts, written in Markdown and XML. The LLM Tool Use rules and usage examples are defined in detail:

# Tool Use Formatting

Tool use is formatted using XML-style tags. The tool name is enclosed in opening and closing tags, and each parameter is similarly enclosed within its own set of tags. Here's the structure:

<tool_name>

<parameter1_name>value1</parameter1_name>

<parameter2_name>value2</parameter2_name>

...

</tool_name>

For example:

<read_file>

<path>src/main.js</path>

</read_file>

Always adhere to this format for the tool use to ensure proper parsing and execution.

# Tools

## execute_command

## write_to_file

...

## Example 4: Requesting to use an MCP tool

<use_mcp_tool>

<server_name>weather-server</server_name>

<tool_name>get_forecast</tool_name>

<arguments>

{

"city": "San Francisco",

"days": 5

}

</arguments>

</use_mcp_tool>

The MCP server is also injected into the system prompt word.

MCP SERVERS

The Model Context Protocol (MCP) enables communication between the system and locally running MCP servers that provide additional tools and resources to extend your capabilities.

# Connected MCP Servers

...

User commands can also be passed through the .clinerules injected into the system's system cue word.

From this we can venture to assume that Cursor and WindSurf Injecting .cursorrules is similar

It can be seen that Cline is at its core dependent on the LLM's ability to follow instructions, so the model's temperature is set to 0The

const stream = await this.client.chat.completions.create({

model: this.options.openAiModelId ?? "",

messages: openAiMessages,

temperature: 0, // 被设置成了 0

stream: true,

stream_options: { include_usage: true },

})

First input

Multiple inputs exist for the user, respectively:

- Directly typed copy with

<task />embody - pass (a bill or inspection etc)

@Input file directory, file and url

In Cline.@ There's not a lot of tech involved, for file directories, list the file directory structure; for files, read the contents of the file; and for url, read the contents directly from puppeteer. Then we take the content and user input and output it to LLM.

A sample input is as follows:

<task>实现一个太阳系的 3D 环绕效果 'app/page.tsx' (see below for file content) 'https://stackoverflow.com/questions/23673275/orbital-mechanics-for-a-solar-system-visualisation-in-three-js-x-y-z-for-planet' (see below for site content)

</task>

<file_content path="app/page.tsx">

import Image from "next/image";

export default function Home() {...}

</file_content

<site_content>

...

</site_content>

The user input also contains a type of information about the project environment, such as the list of files in the current working directory, the tabs that vscode opens, and so on.

A simple task gives the following input to the LLM:

<task>

实现一个太阳系的 3D 环绕效果

</task>

<environment_details>

# VSCode Visible Files

(No visible files)

# VSCode Open Tabs

(No open tabs)

# Current Time

1/23/2025, 4:41:03 PM (Asia/Shanghai, UTC+8:00)

# Current Working Directory (/Users/nazha/nazha-all/cline-dev/nazha) Files

.gitignore

eslint.config.mjs

next.config.ts

package-lock.json

package.json

postcss.config.mjs

README.md

tailwind.config.ts

tsconfig.json

app/

app/favicon.ico

app/globals.css

app/layout.tsx

app/page.tsx

public/

public/file.svg

public/globe.svg

public/next.svg

public/vercel.svg

public/window.svg

# Current Mode

ACT MODE

</environment_details>

As you can see here, other AI Coding editors (such as Cursor)it's possibleEmbedding the codebase, but cline is more brute-force and straightforward.

First round returns #

The LLM is returned as required by the command (temperature is set to 0) and typically contains <thinking /> and operation in two parts. For example:

<thinking>

分析任务需求:

1. 需要创建一个3D太阳系环绕效果

2. 当前项目是一个Next.js项目(根据next.config.ts和app目录结构判断)

3. 需要添加3D功能,可以使用Three.js库

4. 需要创建新的组件来展示3D效果

下一步行动:

1. 安装Three.js依赖

2. 创建一个新的组件来展示3D太阳系

3. 在页面中引入该组件

</thinking>

<execute_command>

<command>npm install three @types/three</command>

<requires_approval>false</requires_approval>

</execute_command>

In this example, Cline invokes various types of system operations by parsing the instructions output by LLM, including but not limited to:

- execute a command

- Read and write files

- Search Content

- MCP Operation

At the same time, Cline collects information about the operational status of various operations.

Second input

Next, Cline outputs the user's behavior, the output state and the result of the previous operation, including the previous system prompt and user input, to the LLM again, requesting the LLM to give guidance on the next step to be taken. And so on.

role: system

content: [...省略]

role: user

content: [

{

type: 'text',

text: '<task>\n实现一个太阳系的 3D 环绕效果\n</task>'

},

{

type: 'text',

text: "<environment_details>...</environment_details>"

}

]

role: 'assistant',

content: '<thinking>\n分析任务需求:\n1. 需要创建一个3D太阳系环绕效果\n2. 当前项目是一个Next.js项目(根据next.config.ts和app目录结构判断)\n3. 需要添加3D功能,可以使用Three.js库\n4. 需要创建新的组件来展示3D效果\n\n下一步行动:\n1. 安装Three.js依赖\n2. 创建一个新的组件来展示3D太阳系\n3. 在页面中引入该组件\n</thinking>\n\n<execute_command>\n<command>npm install three @types/three</command>\n<requires_approval>false</requires_approval>\n</execute_command>'

role: 'user',

content: [

{

type: 'text',

text: '[execute_command for 'npm install three @types/three'] Result:'

},

{

type: 'text',

text: 'Command executed.\nOutput:\n⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏⠋⠙⠹⠸⠼⠴⠦⠧⠇⠏\nadded 9 packages and audited 385 packages in 5s\n⠏\n⠏142 packages are looking for funding\n⠏ run `npm fund` for details\n⠏\nfound 0 vulnerabilities\n⠏'

},

{

type: 'text',

content: '<environment_details>\n# VSCode Visible Files\n(No visible files)\n\n# VSCode Open Tabs\n(No open tabs)\n\n# Current Time\n1/23/2025, 10:01:33 PM (Asia/Shanghai, UTC+8:00)\n\n# Current Mode\nACT MODE\n</environment_details>'

}]

As you can see, processing a single task requires looping back and forth to call LLM multiple times until the task is finished. The other thing is that Cline basically just shoves everything into the LLM, one task at a time. Token The usage is very high.

Another problem is that it's easy to trigger LLM's context window limit, and Cline's strategy for dealing with this is to violently truncate it.

This is presumably how other AI Coding editors handle it as well. When I was using windsurf before, I was curious as to why it wasn't limited by the LLM context window. However, the previous answer is often repeated in later Q&As.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related articles

No comments...