Clevrr Computer: A Desktop Automation Agent Built on the PyAutoGUI Library

General Introduction

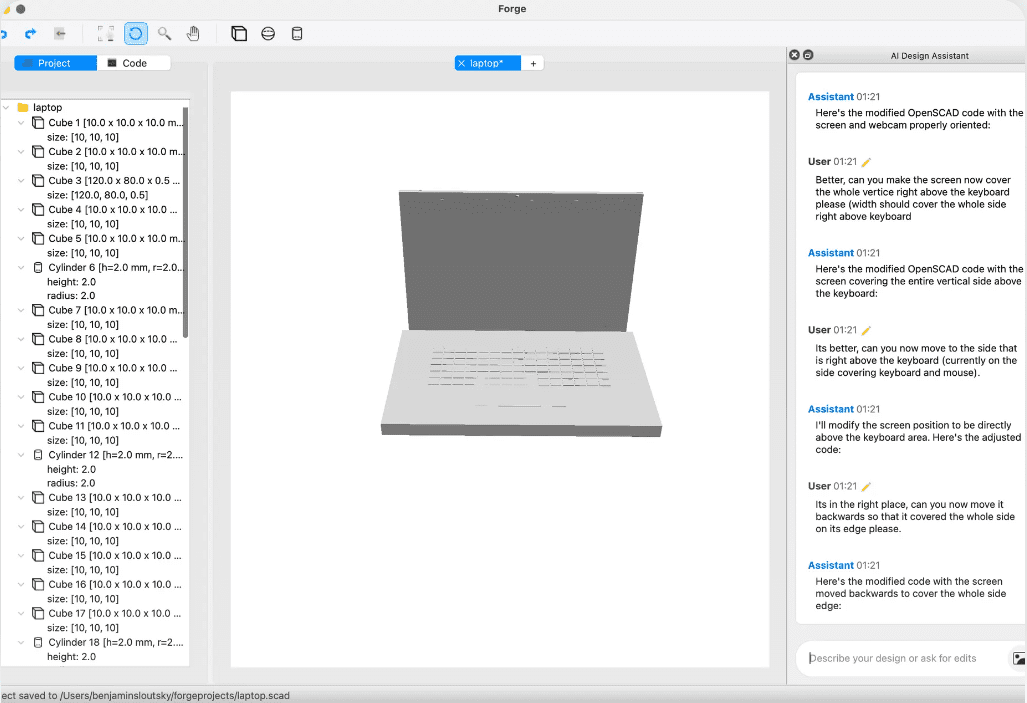

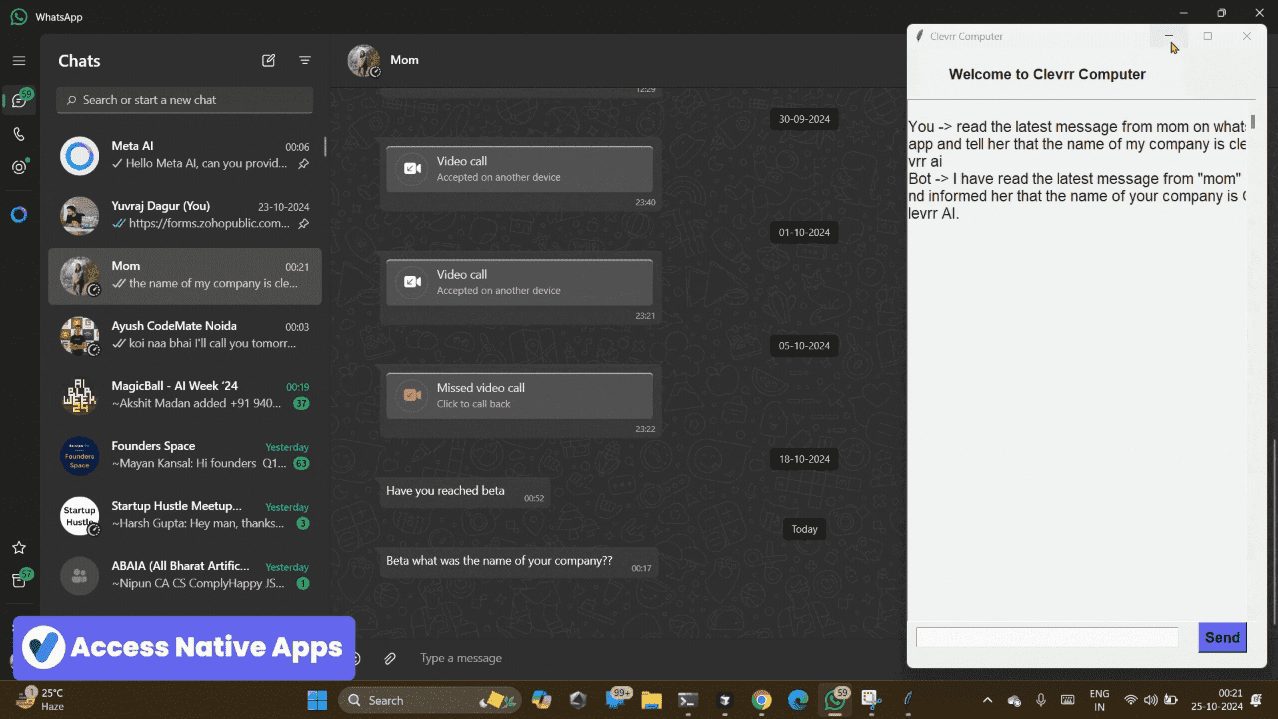

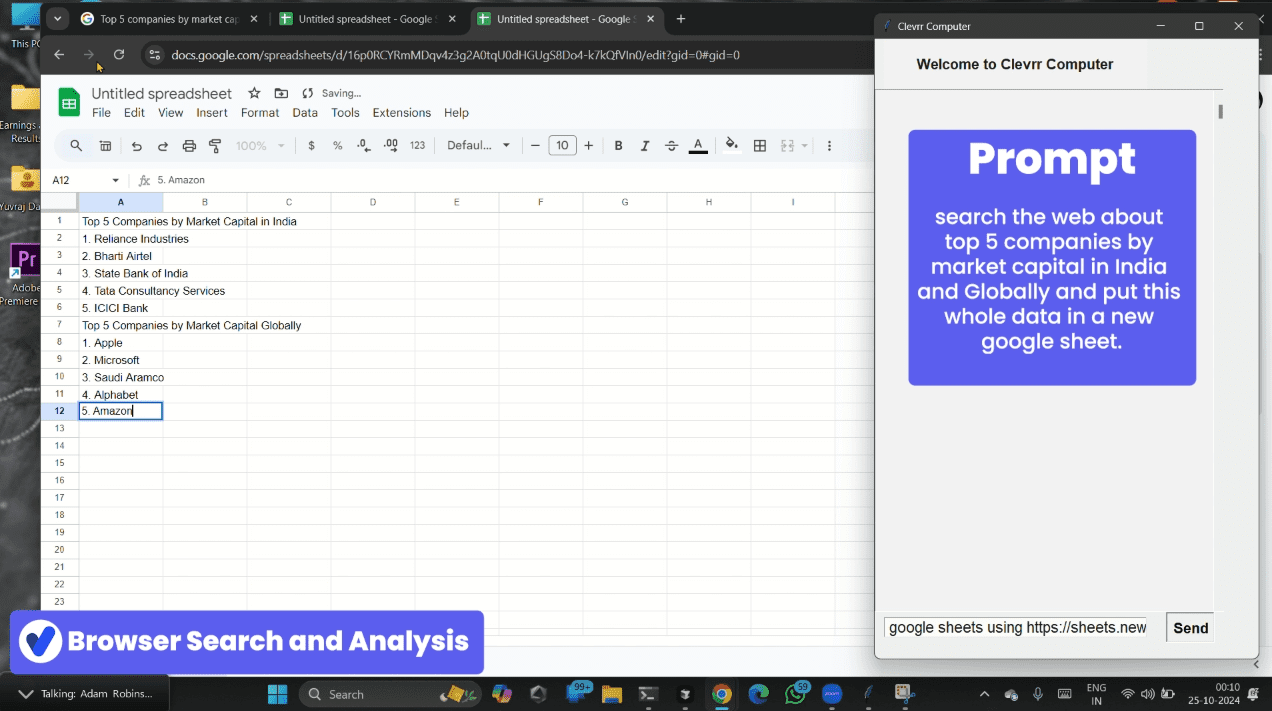

Clevrr Computer is an open-source project that automates system operations using the PyAutoGUI library. Inspired by Anthropic's Computer Use, the project provides an automated agent that carries out the user's system tasks accurately and efficiently. It can automate keyboard, mouse, and screen interactions while keeping each task safe and accurate. The project is currently in beta, and users should be aware of the risks associated with its use.


Function List

- Automate mouse movements, clicks and keyboard input

- Take screenshots and manage windows

- Handle errors gracefully and provide feedback

- Execute tasks with maximum precision and avoid unintentional operations
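As a rough illustration of the features listed above, the sketch below uses standard PyAutoGUI calls to move the mouse, click, type, and take a screenshot while keeping the library's fail-safe enabled. The helper names `clamp_point` and `safe_click_and_type`, the 5-pixel margin, and the output file name are assumptions made for this example and are not taken from the Clevrr Computer code base. PyAutoGUI must be installed and a graphical session must be available for the second function to run.

```python
from typing import Tuple


def clamp_point(x: int, y: int, width: int, height: int, margin: int = 5) -> Tuple[int, int]:
    """Keep a target point inside the screen, a few pixels away from the edges.

    PyAutoGUI's fail-safe aborts when the pointer reaches a screen corner,
    so staying inside the margin avoids tripping it by accident.
    """
    cx = min(max(x, margin), width - 1 - margin)
    cy = min(max(y, margin), height - 1 - margin)
    return cx, cy


def safe_click_and_type(x: int, y: int, text: str) -> bool:
    """Click a point, type some text, save a screenshot, and report success."""
    import pyautogui  # imported lazily because it needs a graphical session

    pyautogui.FAILSAFE = True  # moving the mouse into a corner aborts the run
    pyautogui.PAUSE = 0.3      # short pause after every PyAutoGUI call
    width, height = pyautogui.size()
    tx, ty = clamp_point(x, y, width, height)
    try:
        pyautogui.moveTo(tx, ty, duration=0.2)
        pyautogui.click()
        pyautogui.write(text, interval=0.05)
        pyautogui.screenshot("after_action.png")  # hypothetical file name
        return True
    except pyautogui.FailSafeException:
        # Graceful feedback instead of an unhandled traceback.
        print("Aborted: the pointer reached a screen corner.")
        return False
```

Clamping the target before moving is one simple way to reduce unintended operations; a real agent would likely add further checks.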

Usage Guide

Installation process

- Clone the repository:

git clone https://github.com/Clevrr-AI/Clevrr-Computer.git

cd Clevrr-Computer

- Install the dependencies:

pip install -r requirements.txt

- Set the environment variables: rename the .env_dev file to .env and add your API keys and other configuration:

AZURE_OPENAI_API_KEY=<YOUR_AZURE_API_KEY>
AZURE_OPENAI_ENDPOINT=<YOUR_AZURE_ENDPOINT_URL>
AZURE_OPENAI_API_VERSION=<YOUR_AZURE_API_VERSION>
AZURE_OPENAI_CHAT_DEPLOYMENT_NAME=<YOUR_AZURE_DEPLOYMENT_NAME>
GOOGLE_API_KEY=<YOUR_GEMINI_API_KEY>
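Before the first run it can help to confirm that the .env file actually contains real values. The following is a minimal sketch using only the Python standard library. The functions `parse_env` and `missing_keys` are hypothetical helpers written for this article, and the mapping of the `openai` model to the four Azure keys is an assumption based on the variable names, not something the project documents here.

```python
from typing import Dict, List

# Key names copied from the .env template shown in the installation steps.
AZURE_KEYS = [
    "AZURE_OPENAI_API_KEY",
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_VERSION",
    "AZURE_OPENAI_CHAT_DEPLOYMENT_NAME",
]
GEMINI_KEYS = ["GOOGLE_API_KEY"]


def parse_env(text: str) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: Dict[str, str], model: str) -> List[str]:
    """Return required keys that are absent or still hold a <YOUR_...> placeholder.

    Assumption: "gemini" needs GOOGLE_API_KEY and "openai" needs the Azure keys.
    """
    required = GEMINI_KEYS if model == "gemini" else AZURE_KEYS
    return [
        key for key in required
        if not values.get(key) or values[key].startswith("<YOUR_")
    ]


if __name__ == "__main__":
    with open(".env", encoding="utf-8") as handle:
        env = parse_env(handle.read())
    print("Missing for gemini:", missing_keys(env, "gemini"))
```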

Usage

- Run the application:

python main.py

By default, this uses the gemini model and enables the floating UI.

- Optional parameters:

  - Selecting a model: pass the --model parameter to specify the model to use. The accepted values are gemini or openai.

python main.py --model openai

  - Floating UI: by default, the TKinter UI floats and stays on top of the screen. Pass --float-ui 0 to disable this behavior.

python main.py --float-ui 0
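For readers curious how such flags are typically handled, here is a small `argparse` sketch that accepts the same two options with the same defaults described above. It is an illustration only; the actual argument handling in the project's main.py may differ, and `build_parser` is a name chosen for this example.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the options described in the article."""
    parser = argparse.ArgumentParser(description="Sketch of the documented CLI flags")
    parser.add_argument(
        "--model",
        choices=["gemini", "openai"],
        default="gemini",
        help="which model backend to use (default: gemini)",
    )
    parser.add_argument(
        "--float-ui",
        type=int,
        choices=[0, 1],
        default=1,
        help="1 keeps the UI floating on top, 0 disables it (default: 1)",
    )
    return parser


# Equivalent to: python main.py --model openai --float-ui 0
args = build_parser().parse_args(["--model", "openai", "--float-ui", "0"])
print(args.model, bool(args.float_ui))  # prints: openai False
```

Restricting both flags with `choices` makes a mistyped model name fail immediately with a usage message instead of surfacing later at runtime.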

Functional operation flow

Clevrr Computer runs a multimodal AI agent in the background that continuously takes screenshots to understand what is on the screen and performs the appropriate actions using the PyAutoGUI library. The agent builds a chain of thought for the task and calls the get_screen_info tool to obtain screen information. That tool takes a screenshot of the current screen and overlays a grid that marks the true screen coordinates. A multimodal LLM then interprets the screen content and answers the agent's questions about it. The chain of thought has access to two tools: get_screen_info and PythonREPLAst, the latter of which performs operations using the PyAutoGUI library.
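The grid idea can be sketched in a few lines of plain Python. The example below only computes where grid lines and their coordinate labels would sit, and how a point read off a resized screenshot maps back to real screen pixels. The function names, the grid spacing, and the assumption that the screenshot may be scaled before it reaches the model are all illustrative and are not taken from the project's get_screen_info implementation.

```python
from typing import List, Tuple


def grid_lines(size: int, step: int) -> List[int]:
    """Pixel offsets along one axis where grid lines and labels are drawn."""
    return list(range(0, size, step))


def scale_to_screen(
    x: int,
    y: int,
    shot_size: Tuple[int, int],
    screen_size: Tuple[int, int],
) -> Tuple[int, int]:
    """Map a point from screenshot pixels to real screen coordinates.

    Only needed if the screenshot was resized (assumption); with equal
    sizes the point is returned unchanged.
    """
    scale_x = screen_size[0] / shot_size[0]
    scale_y = screen_size[1] / shot_size[1]
    return round(x * scale_x), round(y * scale_y)


# Vertical grid lines for a 1000-pixel-wide image, one every 250 pixels.
print(grid_lines(1000, 250))  # prints: [0, 250, 500, 750]

# A point found at (400, 300) on a 960x540 screenshot of a 1920x1080 screen.
print(scale_to_screen(400, 300, (960, 540), (1920, 1080)))  # prints: (800, 600)
```

Labeling the grid with real screen coordinates lets the model read a target position straight from the image, and the agent can then pass that position to PyAutoGUI.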

Caveats

- Use dedicated virtual machines or containers that run with minimal privileges to prevent direct system attacks or accidents.

- Avoid providing sensitive data, such as account login information, to the model to prevent information leakage.

- Restrict Internet access to only whitelisted domains to minimize exposure to malicious content.

- Require manual confirmation for decisions that have real-world consequences and for tasks that need explicit consent, such as accepting cookies, executing financial transactions, or agreeing to terms of service.
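Two of the precautions above, domain whitelisting and manual confirmation, can be sketched as small guard functions. Everything in this example is hypothetical: the allowed domains, the keyword list, and the function names are placeholders, and the keyword match is deliberately naive. Clevrr Computer is not claimed to ship such guards.

```python
from typing import Callable, Iterable
from urllib.parse import urlparse

ALLOWED_DOMAINS = {"example.com", "docs.python.org"}  # placeholder whitelist
SENSITIVE_WORDS = ("pay", "purchase", "accept", "agree", "login")  # placeholder list


def is_allowed(url: str, allowed: Iterable[str] = ALLOWED_DOMAINS) -> bool:
    """True if the URL's host is a whitelisted domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == domain or host.endswith("." + domain) for domain in allowed)


def needs_confirmation(task: str) -> bool:
    """Naive keyword check for tasks that should not run unattended."""
    lowered = task.lower()
    return any(word in lowered for word in SENSITIVE_WORDS)


def run_guarded(task: str, action: Callable[[], None], ask: Callable[[str], str] = input) -> bool:
    """Run the action, asking the user first when the task looks sensitive."""
    if needs_confirmation(task):
        reply = ask(f"Run '{task}'? [y/N] ")
        if reply.strip().lower() != "y":
            return False  # the user declined, so nothing is executed
    action()
    return True
```

Passing `ask` as a parameter keeps the confirmation step testable and makes it easy to swap the console prompt for a dialog in the floating UI.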

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
