Claude 3.7 Sonnet and Claude Code: cutting-edge reasoning meets agentic coding

Anthropic today released Claude 3.7 Sonnet^1^, which is not only Anthropic's most intelligent model to date but also the first hybrid reasoning model on the market. Claude 3.7 Sonnet can produce near-instant responses or deeper, step-by-step thinking, and the thinking process is visible to the user. API users also have fine-grained control over how long the model thinks.

Claude 3.7 Sonnet shows particularly significant improvements in coding and front-end web development. The model is accompanied by Claude Code, a command-line tool for agentic coding, currently available in a limited research preview, which enables developers to delegate a wide range of engineering tasks to Claude directly from the terminal.

Claude 3.7 Sonnet is now available on all Claude plans (Free, Pro, Team, and Enterprise), as well as through the Anthropic API, Amazon Bedrock, and Google Cloud Vertex AI. Extended thinking mode is available on all platforms except the free version of Claude.

Pricing for Claude 3.7 Sonnet is the same as for its predecessor in both standard and extended thinking modes: $3 per million input tokens and $15 per million output tokens, with thinking tokens counted as output tokens.
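As a rough illustration of how this pricing adds up, the sketch below estimates the cost of a single request. Only the two per-million-token rates come from the text; the function name, the separate `thinking_tokens` argument, and the example token counts are hypothetical.

```python
# Rates quoted in the article (US dollars per million tokens).
INPUT_USD_PER_MTOK = 3.0
OUTPUT_USD_PER_MTOK = 15.0


def estimate_cost_usd(input_tokens: int, output_tokens: int, thinking_tokens: int = 0) -> float:
    """Estimate the cost of one request; thinking tokens are billed at the output rate."""
    if min(input_tokens, output_tokens, thinking_tokens) < 0:
        raise ValueError("token counts must be non-negative")
    billed_output = output_tokens + thinking_tokens
    total = input_tokens * INPUT_USD_PER_MTOK + billed_output * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


# Hypothetical request: 2,000 input tokens, 500 answer tokens, 4,000 thinking tokens.
print(f"${estimate_cost_usd(2_000, 500, 4_000):.4f}")  # $0.0735
```

In this example the thinking tokens dominate the bill, which is why the thinking budget matters for cost as well as latency.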

Claude 3.7 Sonnet: cutting-edge reasoning goes practical

Anthropic developed Claude 3.7 Sonnet with a different philosophy than other reasoning models. Just as humans use the same brain to react quickly and think deeply, Anthropic believes that reasoning should be an inherently integrated capability of a cutting-edge model, rather than a completely separate model. This unified approach also creates a smoother experience for users.

Claude 3.7 Sonnet embodies this idea in several ways. First, Claude 3.7 Sonnet is both an ordinary LLM and a reasoning model in one: the user can choose when the model responds quickly in standard mode and when it thinks longer before answering. In standard mode, Claude 3.7 Sonnet is an upgraded version of Claude 3.5 Sonnet. In extended thinking mode, the model self-reflects before answering, which improves its performance on math, physics, instruction following, coding, and many other tasks. Anthropic generally finds that prompting works in roughly the same way in both modes.

Second, when using Claude 3.7 Sonnet via the API, the user can also control the thinking budget: the user can tell Claude to think for no more than N tokens, where N can be any value up to the output limit of 128K tokens. This gives the user the flexibility to trade off speed (and cost) against answer quality as needed.
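The thinking budget can be pictured as one extra field on an API request. The sketch below only builds request parameters and makes no network call. The `thinking` and `budget_tokens` field names follow Anthropic's Messages API as commonly documented, while the model identifier, the default `max_tokens`, and the validation rules are assumptions made for illustration and should be checked against the current API reference.

```python
from typing import Any, Dict, Optional

# Assumptions for illustration: the 128K output limit quoted in the article
# and a placeholder model identifier.
OUTPUT_LIMIT_TOKENS = 128_000
MODEL_NAME = "claude-3-7-sonnet-20250219"  # assumed identifier


def build_request(prompt: str, thinking_budget: Optional[int], max_tokens: int = 16_000) -> Dict[str, Any]:
    """Build Messages-API-style parameters; thinking_budget=None selects standard mode."""
    if not 0 < max_tokens <= OUTPUT_LIMIT_TOKENS:
        raise ValueError("max_tokens must be within the output limit")
    params: Dict[str, Any] = {
        "model": MODEL_NAME,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget is not None:
        # The budget N caps thinking tokens and must leave room for the answer itself.
        if not 0 < thinking_budget < max_tokens:
            raise ValueError("thinking budget must be positive and below max_tokens")
        params["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return params


fast = build_request("Summarize this diff.", thinking_budget=None)
deep = build_request("Prove the lemma step by step.", thinking_budget=8_000)
```

Keeping both modes behind one function mirrors the point made above: the prompt stays the same, and only the budget changes.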

Third, in developing its reasoning models, Anthropic optimized somewhat less for math and computer science competition problems and instead shifted focus toward real-world tasks that better reflect how businesses actually use LLMs.

Early testing indicated that Claude leads across coding capabilities. Cursor noted that Claude is once again a leader in real-world coding tasks, with significant improvements in areas ranging from handling complex codebases to advanced tool use. Cognition found that Claude outperforms any other model at planning code changes and handling full-stack updates. Vercel emphasized Claude's superior accuracy in complex agent workflows. Replit has successfully deployed Claude to build complex web applications and dashboards from scratch, something other models struggle to achieve. Canva's evaluation showed that Claude consistently generates production-ready code that is not only more tastefully designed but also contains far fewer bugs.

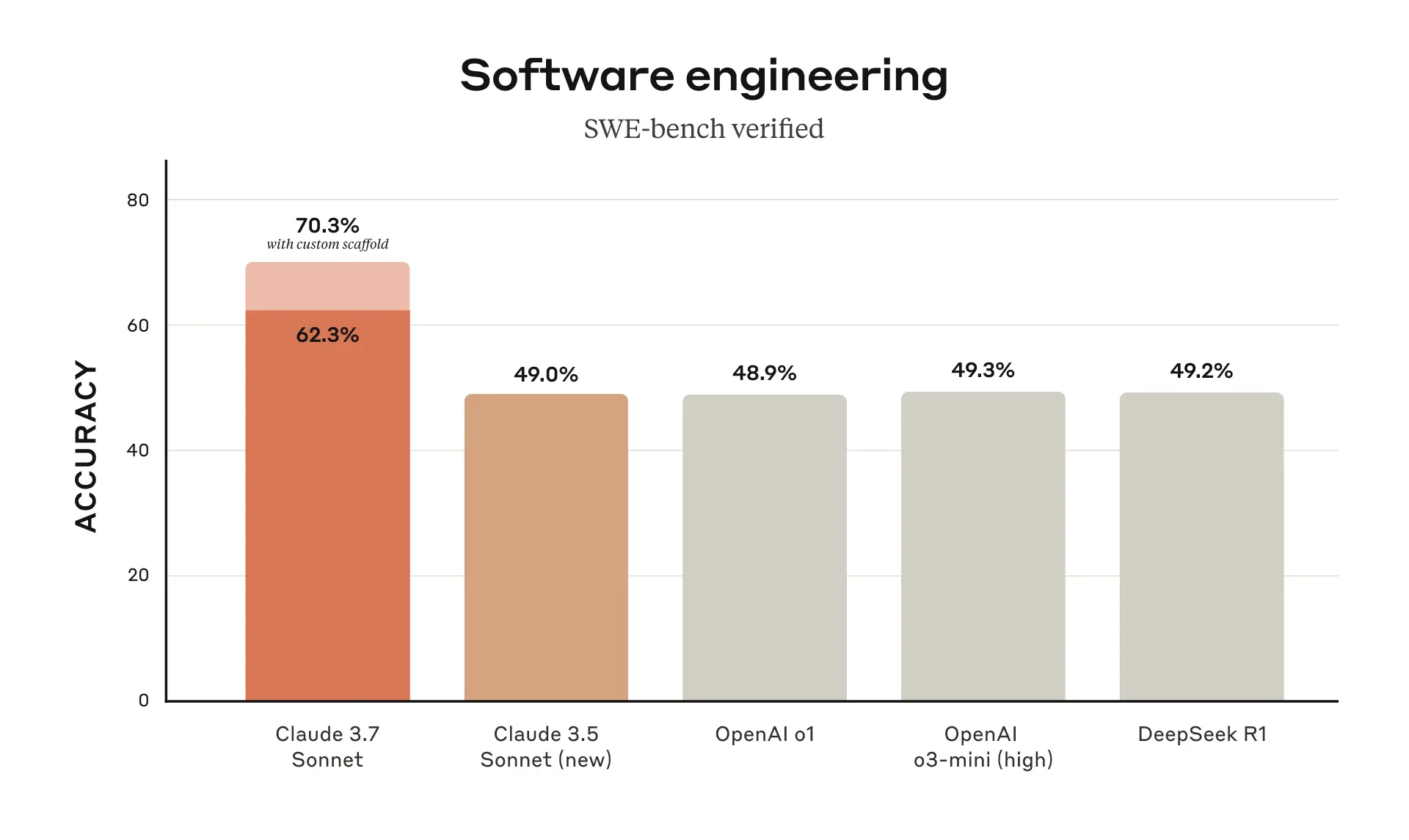

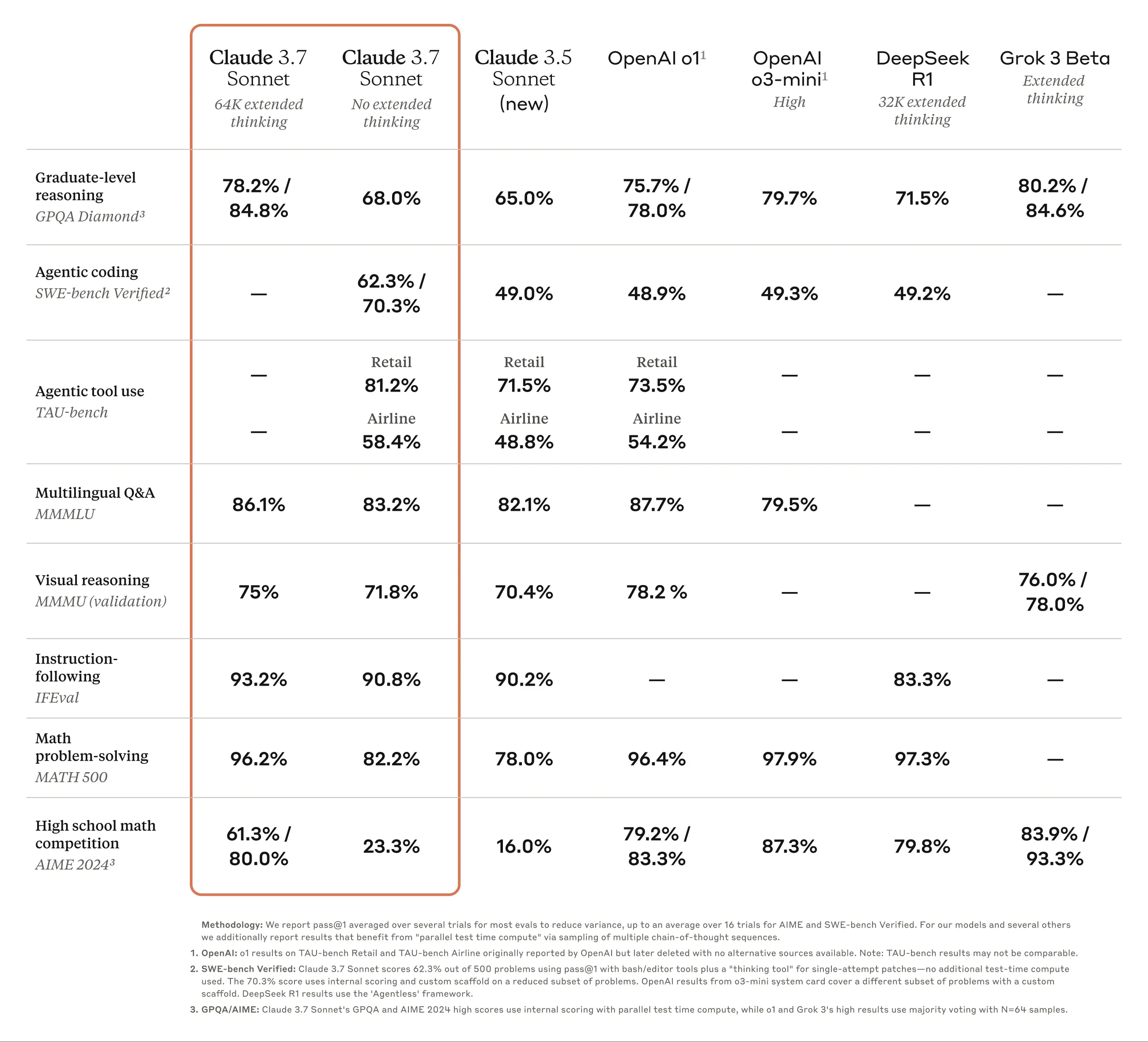

Claude 3.7 Sonnet achieved the best performance on SWE-bench Verified, a benchmark that rates the ability of AI models to solve real software problems. For more information on scaffolding, see the Appendix.

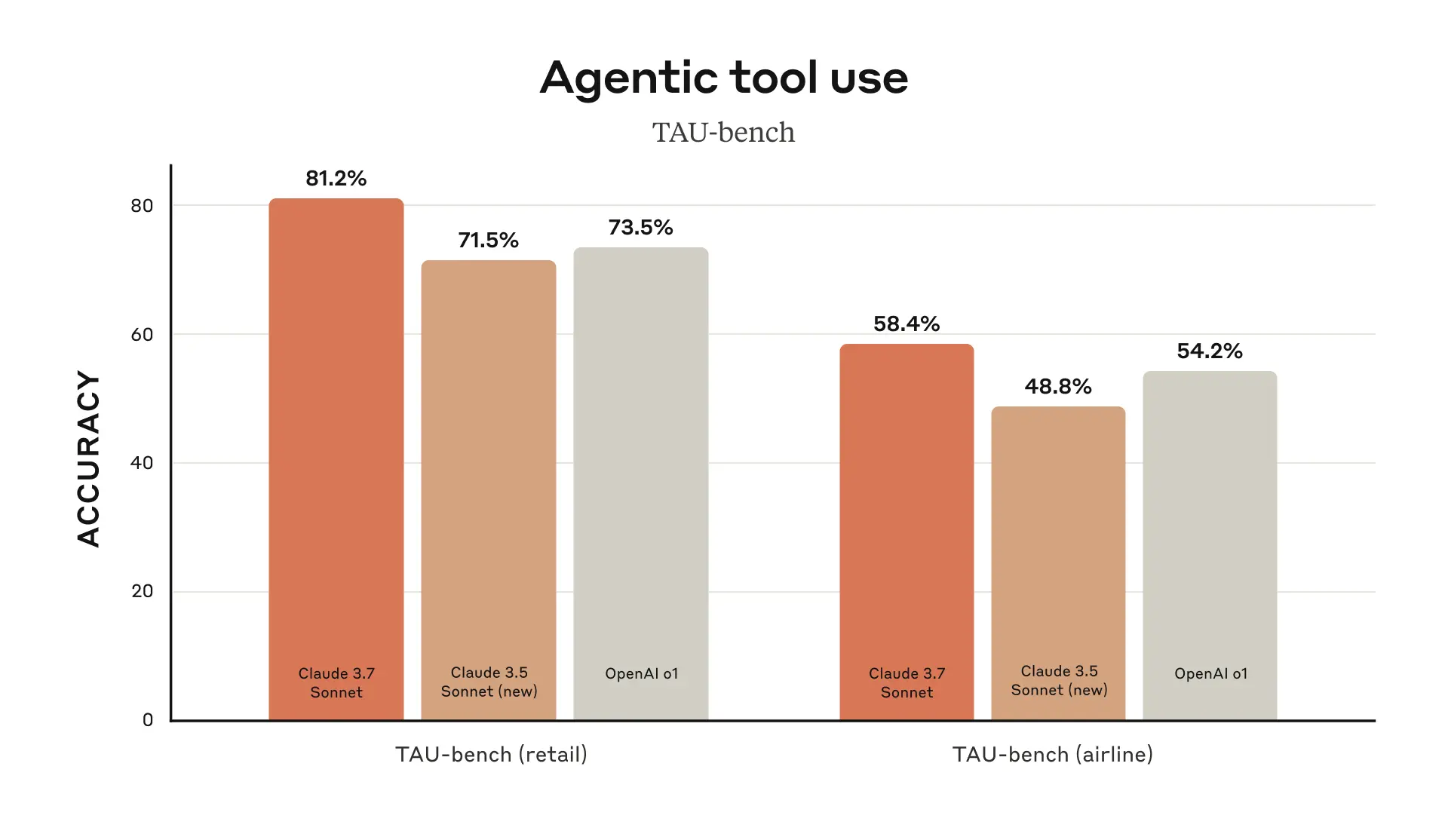

Claude 3.7 Sonnet achieved the best performance on TAU-bench, a framework for testing the performance of AI agents on complex real-world tasks involving user and tool interactions. For more information on scaffolding, see the Appendix.

Claude 3.7 Sonnet excels in instruction following, general reasoning, multimodal capabilities, and agentic coding, and extended thinking mode significantly improves its performance in math and science. Beyond traditional benchmarks, Claude 3.7 Sonnet even outperforms all previous models in Pokémon gameplay tests.

Claude Code: A New Assistant for Developers

Since June 2024, Sonnet has been the model of choice for developers worldwide. Now, Anthropic is further empowering developers by introducing Claude Code, its first agentic coding tool, in a limited research preview.

Claude Code is an active collaborator that can search and read code, edit files, write and run tests, commit and push code to GitHub, and use command-line tools, keeping users informed at every step.

Claude Code is still in its early stages, but it has already become an indispensable tool for the Anthropic team, especially for test-driven development, debugging complex problems, and large-scale refactoring. In early testing, Claude Code completed in a single pass tasks that would normally take more than 45 minutes of manual work, significantly reducing development time and overhead.

In the coming weeks, Anthropic plans to make ongoing improvements based on usage, including improving tool call reliability, adding support for long-running commands, improving in-app rendering, and enhancing Claude's own understanding of its capabilities.

Anthropic's goal in launching Claude Code is to better understand how developers code with Claude to inform future model improvements. By joining this preview, users will gain access to the same powerful tools Anthropic uses to build and improve Claude, and user feedback will directly influence its future development.

Working with Claude on the Codebase

Anthropic has also improved the coding experience on Claude.ai. Its GitHub integration is now available on all Claude plans, enabling developers to connect their code repositories directly to Claude.

Claude 3.7 Sonnet is Anthropic's best coding model to date. With a deeper understanding of users' personal, work, and open source projects, it becomes an even stronger partner for fixing bugs, developing new features, and building documentation for the most important GitHub projects.

Building responsibly

Anthropic conducted extensive testing and evaluation of Claude 3.7 Sonnet and worked with outside experts to ensure that it meets Anthropic's standards for security, reliability, and safety. Claude 3.7 Sonnet also distinguishes more carefully between harmful and benign requests than its predecessor, with 45% fewer unnecessary refusals.

The system card for this release covers the latest safety findings across multiple categories and details Anthropic's Responsible Scaling Policy evaluations, which other AI labs and researchers can apply to their own work. The system card also explores emerging risks posed by computer use, specifically prompt injection attacks, and explains how Anthropic assesses these vulnerabilities and trains Claude to resist and mitigate them. In addition, it examines the potential safety benefits that reasoning models can bring: the ability to understand how models make decisions, and whether model reasoning is truly trustworthy and reliable. Read the full system card for more information.

Looking forward

The release of Claude 3.7 Sonnet and Claude Code marks an important step in the direction of truly empowering humans with AI systems. With their ability to reason deeply, work autonomously, and collaborate effectively, they are leading us toward a future where AI can enrich and extend human achievement.

Anthropic is excited for users to explore these new capabilities and looks forward to seeing what they create. Anthropic always welcomes [feedback](mailto:feedback@anthropic.com) from users so that it can continue to improve and evolve its models.

Appendix

^1^ Lessons on naming.

Evaluation data sources

TAU-bench

Information about scaffolding

These scores were obtained by adding a prompt addendum to the Airline Agent Policy that instructs Claude to make better use of a "planning" tool. With this tool, the model is encouraged to write down its thoughts as it works through the problem across the multi-turn interaction, so that it can make full use of its reasoning abilities; this is distinct from Anthropic's usual thinking mode. To accommodate the additional steps and tokens Claude consumes when it thinks more, Anthropic increased the maximum number of steps (counted as model completions) from 30 to 100 (most interactions finished within 30 steps, and only one exceeded 50 steps).

In addition, the TAU-bench scores for Claude 3.5 Sonnet (new) differ from those Anthropic reported at the original release because of minor improvements made to the dataset since then. Anthropic reran the test on the updated dataset to allow a more accurate comparison with Claude 3.7 Sonnet.

SWE-bench Verified

Information about scaffolding

There are many approaches to solving open-ended agentic tasks like SWE-bench. Some approaches shift much of the complexity (e.g., deciding which files to investigate or edit, and which tests to run) to more traditional software, leaving the core language model only to generate code in predefined locations or to choose from a more limited set of actions. Agentless (Xia et al., 2024) is a popular framework used in the evaluation of Deepseek's R1 and other models; it augments an agent with prompt- and embedding-based file retrieval mechanisms, patch localization, and best-of-40 rejection sampling against regression tests. Other scaffolds (e.g., Aide) further augment the model with additional test-time compute in the form of retries, best-of-N, or Monte Carlo Tree Search (MCTS).

For Claude 3.7 Sonnet and Claude 3.5 Sonnet (new), Anthropic uses a simpler approach with minimal scaffolding. In this approach, the model decides which commands to run and which files to edit in a single session. Anthropic's main "no extended thinking" pass@1 result simply equips the model with the two tools described here, a bash tool and a file editing tool that operates via string replacements, as well as the "planning tool" mentioned in the TAU-bench results. Due to infrastructure limitations, only 489/500 problems are actually solvable on Anthropic's internal infrastructure (i.e., the gold solution passes the tests). For the vanilla pass@1 score, Anthropic counts the 11 unsolvable problems as failures to stay aligned with the official leaderboard. For transparency, Anthropic separately lists the test cases that did not work on its infrastructure.
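To make "a file editing tool that operates via string replacements" concrete, here is a minimal sketch of what such an edit operation might look like. It is not Anthropic's actual tool: the function name and the rule that the target string must match exactly once are assumptions chosen so that an edit can never land in the wrong place.

```python
def str_replace_edit(text: str, old: str, new: str) -> str:
    """Replace `old` with `new`, but only if `old` occurs exactly once in `text`."""
    if not old:
        raise ValueError("old string must be non-empty")
    count = text.count(old)
    if count == 0:
        raise ValueError("old string not found")
    if count > 1:
        raise ValueError(f"old string is ambiguous: found {count} matches")
    return text.replace(old, new, 1)


# Hypothetical edit: swap a hard-coded constant for math.pi.
source = "def area(r):\n    return 3.14 * r * r\n"
patched = str_replace_edit(source, "3.14", "math.pi")
print(patched)
```

Refusing ambiguous matches forces the caller to quote enough surrounding context to identify a single location, which is one plausible reason to prefer string replacement over line-number edits.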

For "high compute" numbers, Anthropic adds further complexity and parallel test-time compute, as follows:

- Anthropic samples multiple parallel attempts using the above scaffold.

- Anthropic discards patches that break the visible regression tests in the repository, similar to the rejection sampling method used by Agentless; note that Anthropic does not use hidden test information.

- Anthropic then ranks the remaining attempts with a scoring model, similar to the approach behind the GPQA and AIME results described in Anthropic's research post, and selects the best one for submission.
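The three steps above (sample in parallel, reject, rank) can be sketched as a small best-of-N pipeline. This is an illustrative outline under stated assumptions, not Anthropic's implementation: `sample_attempts`, `select_best_patch`, and the toy generator, test check, and scores are all hypothetical stand-ins for the real scaffold, regression tests, and scoring model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Optional, Sequence, TypeVar

Patch = TypeVar("Patch")


def sample_attempts(generate: Callable[[int], Patch], n: int) -> List[Patch]:
    """Run n independent attempts in parallel (n must be at least 1)."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        return list(pool.map(generate, range(n)))


def select_best_patch(
    candidates: Sequence[Patch],
    passes_visible_tests: Callable[[Patch], bool],
    score: Callable[[Patch], float],
) -> Optional[Patch]:
    """Reject candidates that break visible tests, then return the top-scored survivor."""
    survivors = [p for p in candidates if passes_visible_tests(p)]
    if not survivors:
        return None  # every attempt was rejected
    return max(survivors, key=score)


# Toy stand-ins: "patch-1" has the highest score but breaks a visible test.
attempts = sample_attempts(lambda seed: f"patch-{seed}", 4)
toy_scores = {"patch-0": 0.2, "patch-1": 0.9, "patch-2": 0.7, "patch-3": 0.4}
best = select_best_patch(attempts, lambda p: p != "patch-1", toy_scores.__getitem__)
print(best)  # patch-2
```

Filtering before ranking means the scoring model never has to decide between a high-scoring patch and a passing test suite.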

This yields a score of 70.3% on the subset of n=489 verified tasks that work on Anthropic's infrastructure. Without this scaffold, Claude 3.7 Sonnet scores 63.7% on SWE-bench Verified using the same subset. The 11 test cases that are incompatible with Anthropic's internal infrastructure are listed below:

- scikit-learn__scikit-learn-14710

- django__django-10097

- psf__requests-2317

- sphinx-doc__sphinx-10435

- sphinx-doc__sphinx-7985

- sphinx-doc__sphinx-8475

- matplotlib__matplotlib-20488

- astropy__astropy-8707

- astropy__astropy-8872

- sphinx-doc__sphinx-8595

- sphinx-doc__sphinx-9711
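Since the vanilla pass@1 score counts these 11 incompatible cases as failures, a pass rate measured on the 489-task subset can be rescaled to the full 500-task set. The sketch below shows only that arithmetic, applied to the 63.7% subset figure quoted above; the function name is hypothetical, and the printed value is a derived illustration rather than a score reported in this article.

```python
def rescale_to_full_set(subset_rate: float, subset_size: int, full_size: int) -> float:
    """Convert a pass rate on a subset to a full-set rate, counting excluded tasks as failures."""
    if not 0 < subset_size <= full_size:
        raise ValueError("subset_size must be positive and no larger than full_size")
    if not 0.0 <= subset_rate <= 1.0:
        raise ValueError("subset_rate must be a fraction between 0 and 1")
    return subset_rate * subset_size / full_size


full_rate = rescale_to_full_set(0.637, subset_size=489, full_size=500)
print(f"{full_rate:.1%}")  # 62.3%
```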

© Copyright notice

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
