ChatTTS: a speech generation model that sounds like a real person talking (plus a one-click acceleration package)

General Introduction

ChatTTS is a generative speech model designed for conversational scenarios. It produces natural, expressive speech and supports multiple languages and multiple speakers, which makes it well suited to interactive dialogue. According to the project, it surpasses most open-source text-to-speech models in prosody. It achieves this by predicting and controlling fine-grained prosodic features such as laughter, pauses and interjections. ChatTTS provides pre-trained models to support further research and development, intended primarily for academic use.

Feature List

- Multi-language support: Chinese and English are supported, and support for more languages is planned.

- Multi-speaker support: It can generate the voices of multiple speakers, making it suitable for interactive conversations.

- Fine-grained prosody control: Prosodic features such as laughter, pauses and interjections can be predicted and controlled.

- Pre-trained models: Provides a model pre-trained on 40,000 hours of audio data to support further research and development.

- Open source: The code is published on GitHub for academic and research use.
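Multi-speaker generation and seed-based voice selection both rest on one idea: a fixed integer seed makes a randomly sampled voice reproducible. The sketch below illustrates only that idea. `sample_speaker` is a hypothetical stand-in that returns a toy Gaussian vector. In ChatTTS itself the speaker is sampled by the model (recent releases expose `chat.sample_random_speaker()` for this). The seed values are arbitrary.

```python
import random


def sample_speaker(seed: int, dim: int = 8) -> list[float]:
    """Hypothetical stand-in for a model's random-speaker sampler.

    A private RNG seeded with `seed` draws a toy Gaussian "speaker vector",
    so the same seed always reproduces the same voice.
    """
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


# One reproducible voice per dialogue role (seed values are arbitrary examples).
voices = {"host": sample_speaker(2222), "guest": sample_speaker(7869)}

print(sample_speaker(2222) == voices["host"])  # True: same seed, same voice
print(voices["host"] == voices["guest"])       # False: different seeds, different voices
```

Keeping the seed, rather than the sampled vector, is what lets a voice be shared and recreated later.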

User Guide

Installation

- Clone the project code:

git clone https://github.com/2noise/ChatTTS.git

- Install the dependencies:

cd ChatTTS
pip install -r requirements.txt

- Download the pre-trained model: Download it from Hugging Face or ModelScope and place it in the specified directory.
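Before moving on, it can help to confirm that the dependency step actually succeeded. The helper below is a hypothetical convenience, not part of ChatTTS. It reads a pip requirements file and reports which listed distributions are not installed, using only the standard library. It checks names only and ignores version pins, extras and environment markers.

```python
import re
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path


def missing_requirements(req_file: str) -> list[str]:
    """Return the distribution names in req_file that are not installed."""
    missing = []
    for line in Path(req_file).read_text(encoding="utf-8").splitlines():
        line = line.split("#", 1)[0].strip()   # drop comments and surrounding whitespace
        if not line or line.startswith("-"):   # skip blank lines and pip options such as -r
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if not match:
            continue
        name = match.group(0)                  # name before any version specifier
        try:
            version(name)                      # raises if the distribution is absent
        except PackageNotFoundError:
            missing.append(name)
    return missing
```

Run from the `ChatTTS` directory, `missing_requirements("requirements.txt")` returning an empty list means every listed package is present.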

Usage

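- Load the model:

# API as shown in the ChatTTS README; method names may differ in older releases
import ChatTTS

chat = ChatTTS.Chat()
chat.load(compile=False)  # set compile=True for better performance

- Generate speech:

text = "你好,欢迎使用ChatTTS!"  # "Hello, welcome to ChatTTS!"
wavs = chat.infer([text])  # returns one waveform per input text

- Save the audio file:

import torch
import torchaudio

# ChatTTS output is sampled at 24 kHz
torchaudio.save("output.wav", torch.from_numpy(wavs[0]).unsqueeze(0), 24000)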
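The model's output is a waveform, and the ChatTTS README saves it at a 24 kHz sample rate. A playable .wav file also needs a header that describes that sample data. The sketch below assumes mono floating-point samples in [-1.0, 1.0] at 24 kHz. Under that assumption, the standard-library `wave` module can write a 16-bit PCM WAV file without extra dependencies. `save_wav` is a hypothetical helper name.

```python
import struct
import wave

SAMPLE_RATE = 24_000  # assumed model output rate (24 kHz, as in the ChatTTS README)


def save_wav(path: str, samples, sample_rate: int = SAMPLE_RATE) -> None:
    """Write mono float samples in [-1.0, 1.0] to `path` as a 16-bit PCM WAV file."""
    # Clip each sample to [-1.0, 1.0], then scale it to a signed 16-bit integer.
    pcm = [int(max(-1.0, min(1.0, float(s))) * 32767) for s in samples]
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)   # mono
        wf.setsampwidth(2)   # 2 bytes per sample = 16-bit
        wf.setframerate(sample_rate)
        wf.writeframes(struct.pack(f"<{len(pcm)}h", *pcm))
```

Call it as `save_wav("output.wav", waveform)`, where `waveform` is one 1-D waveform returned by the model. Iterating a 1-D NumPy array yields scalars that `float()` accepts.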

Detailed Function Operation

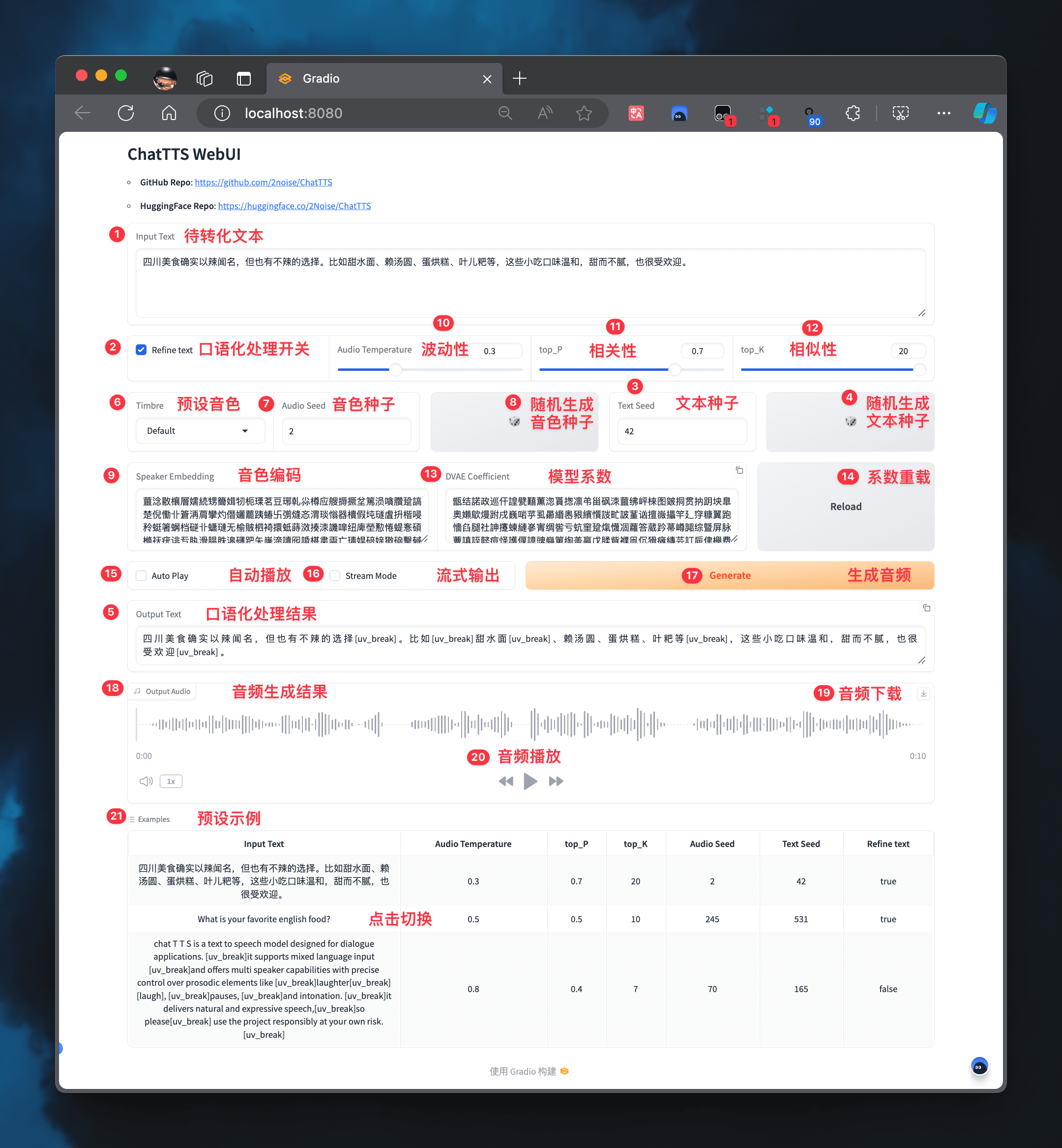

- Text input: Supports mixed Chinese and English text.

- Prosody control: Prosodic features such as laughter, pauses and interjections are controlled by setting parameters.

- Voice control: The voice (timbre) of the generated speech can be set with a preset voice seed value or a voice code.

- Emotion control: The emotional character of the generated speech is controlled by setting emotional-fluctuation and relevance parameters.

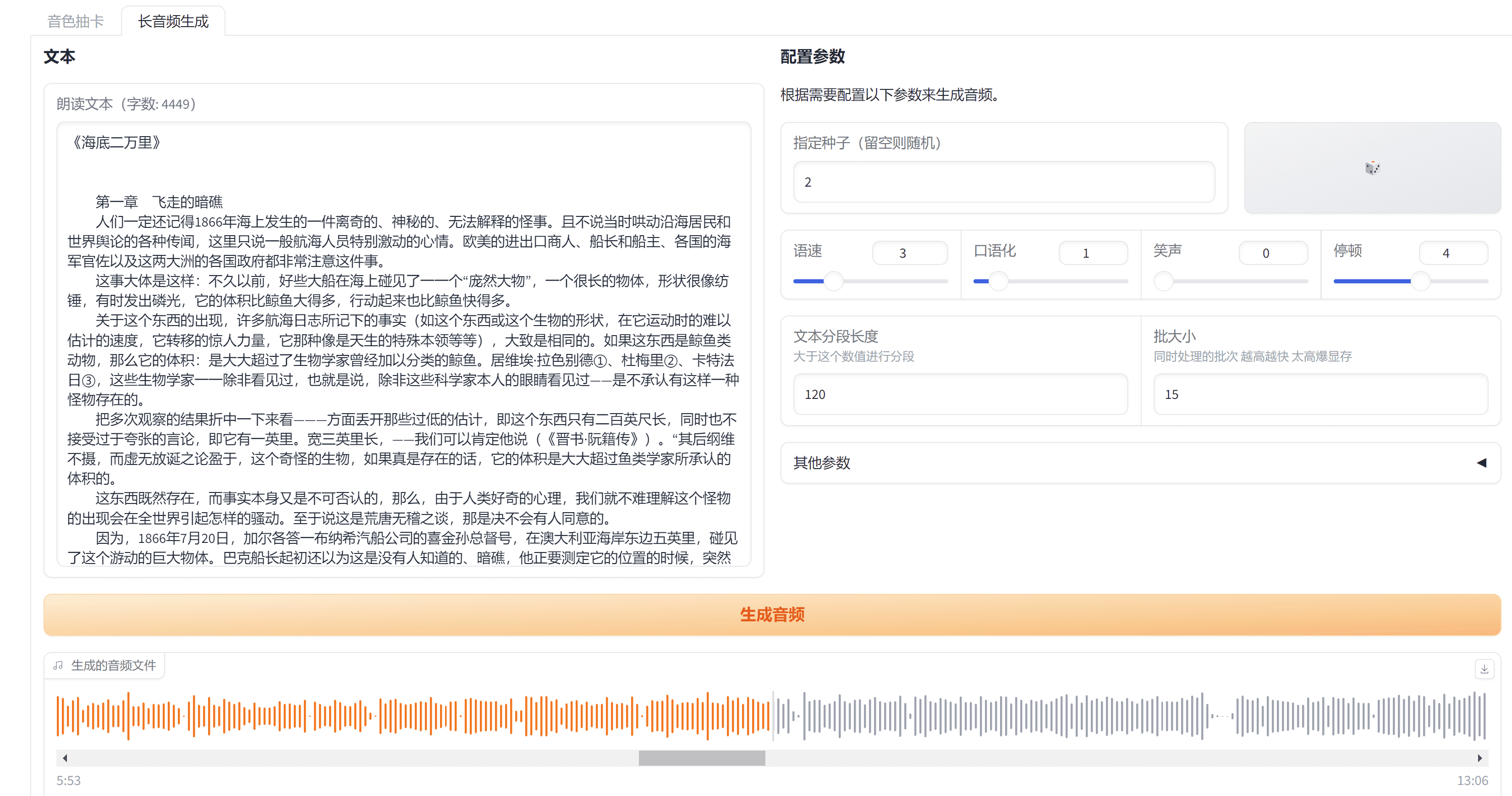

- Streaming output: Supports long-audio generation and multi-role reading for complex dialogue scenarios.
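Long-audio generation and multi-role reading are described here only at a high level. One common approach is sketched below as an assumption, not as any tool's actual code. Long text is cut into sentence-aligned chunks that can be synthesized, and played, one at a time. A `Role: line` script is parsed so that each line can be voiced with that role's speaker. Both helper names are hypothetical, and the sentence splitter is deliberately naive: a decimal point such as 3.5 also counts as a sentence end.

```python
import re


def split_long_text(text: str, max_chars: int = 100) -> list[str]:
    """Group sentences into chunks of at most max_chars characters.

    A single sentence longer than max_chars becomes its own chunk rather than being cut.
    """
    # Split right after Chinese or English sentence-ending punctuation.
    pieces = [p for p in re.split(r"(?<=[。！？.!?])", text) if p.strip()]
    chunks, current = [], ""
    for piece in pieces:
        if current and len(current) + len(piece) > max_chars:
            chunks.append(current.strip())
            current = piece
        else:
            current += piece
    if current.strip():
        chunks.append(current.strip())
    return chunks


def parse_script(script: str) -> list[tuple[str, str]]:
    """Turn 'Role: line' dialogue into (role, line) pairs, skipping other lines."""
    pairs = []
    for raw in script.splitlines():
        # Accept both the ASCII ':' and the full-width Chinese '：'.
        role, sep, line = raw.replace("：", ":", 1).partition(":")
        if sep and role.strip() and line.strip():
            pairs.append((role.strip(), line.strip()))
    return pairs


print(split_long_text("First sentence. Second one! Third?", max_chars=20))
# ['First sentence.', 'Second one! Third?']
print(parse_script("Host: Hello!\nGuest：你好。\n(music)"))
# [('Host', 'Hello!'), ('Guest', '你好。')]
```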

Sample Code

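import ChatTTS
import torch
import torchaudio

# Load the model (API as shown in the ChatTTS README; older releases may differ)
chat = ChatTTS.Chat()
chat.load(compile=False)

# Set the text and prosody parameters
text = "你好,欢迎使用ChatTTS!"  # "Hello, welcome to ChatTTS!"
# Control tokens: oral (colloquial fillers and interjections, 0-9), laugh (0-2), break (pauses, 0-7)
params_refine_text = ChatTTS.Chat.RefineTextParams(prompt="[oral_2][laugh_1][break_6]")

# Generate speech
wavs = chat.infer([text], params_refine_text=params_refine_text)

# Save the audio file (ChatTTS output is sampled at 24 kHz)
torchaudio.save("output.wav", torch.from_numpy(wavs[0]).unsqueeze(0), 24000)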
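In ChatTTS, sentence-level prosody is expressed as a prompt string of control tokens such as `[oral_2][laugh_0][break_6]`. The ChatTTS README gives the ranges oral 0-9, laugh 0-2 and break 0-7; treat these as an assumption and re-check them against your installed version. The hypothetical helper below assembles such a string and rejects out-of-range levels before anything reaches the model.

```python
# Allowed levels per control token, as listed in the ChatTTS README
# (an assumption: re-check against the version you have installed).
TOKEN_RANGES = {"oral": range(0, 10), "laugh": range(0, 3), "break": range(0, 8)}


def build_refine_prompt(oral: int = 2, laugh: int = 0, brk: int = 6) -> str:
    """Return a prompt such as '[oral_2][laugh_0][break_6]' after range-checking.

    The parameter is named `brk` because `break` is a reserved word in Python.
    """
    levels = {"oral": oral, "laugh": laugh, "break": brk}
    for name, level in levels.items():
        if level not in TOKEN_RANGES[name]:
            raise ValueError(f"{name} level {level} is outside {TOKEN_RANGES[name]}")
    # Dicts keep insertion order, so tokens come out as oral, laugh, break.
    return "".join(f"[{name}_{level}]" for name, level in levels.items())


print(build_refine_prompt())                 # [oral_2][laugh_0][break_6]
print(build_refine_prompt(oral=0, laugh=2))  # [oral_0][laugh_2][break_6]
```

In recent releases the resulting string is passed as `ChatTTS.Chat.RefineTextParams(prompt=...)`.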

ChatTTS Client

Quick Start

| Link | Type |
|---|---|
| Original Web | Original web UI demo |
| Forge Web | Forge-enhanced web UI demo |
| Linux | Python installer |
| Samples | Voice seed examples |
| Cloning | Voice cloning demo |

Feature Enhancements

| Project | Highlights |
|---|---|
| jianchang512/ChatTTS-ui | Provides an API that third-party applications can call. |
| 6drf21e/ChatTTS_colab | Provides streaming output, with support for long-audio generation and multi-role reading. |
| lenML/ChatTTS-Forge | Provides voice enhancement and background noise reduction, plus additional prompt options. |
| CCmahua/ChatTTS-Enhanced | Supports batch file processing and SRT subtitle export. |
| HKoon/ChatTTS-OpenVoice | Combines ChatTTS with OpenVoice for voice cloning. |
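SRT export, as listed for ChatTTS-Enhanced, comes down to pairing each synthesized segment with a start time and an end time. The sketch below makes two assumptions. First, segments are played back to back. Second, each segment's duration is known; for a waveform, that is the number of samples divided by the sample rate. The function names are hypothetical and are not taken from that project.

```python
def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def build_srt(segments: list[tuple[str, float]]) -> str:
    """Build SRT text from (text, duration_in_seconds) pairs played back to back."""
    blocks, start = [], 0.0
    for index, (text, duration) in enumerate(segments, start=1):
        end = start + duration
        # Each SRT entry: sequence number, time range, subtitle text.
        blocks.append(f"{index}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
        start = end
    # A blank line separates consecutive entries.
    return "\n".join(blocks)


print(build_srt([("你好。", 1.5), ("Welcome to ChatTTS!", 2.25)]))
```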

Feature Extensions

| Project | Highlights |
|---|---|
| 6drf21e/ChatTTS_Speaker | Voice character labeling and stability assessment |
| AIFSH/ComfyUI-ChatTTS | ComfyUI version that can be added to a workflow as a node |
| MaterialShadow/ChatTTS-manager | Provides a voice management system and a web UI. |

ChatTTSPlus Accelerated One-Click Installation Package

ChatTTSPlus is an extended version of ChatTTS that adds TensorRT acceleration and voice cloning and is working toward mobile deployment. It is easy to use and offers a one-click Windows installer. With TensorRT it raises throughput from 28 tokens/s to 110 tokens/s on an RTX 3060 GPU under Windows, roughly a 3.9x speedup. It supports voice cloning with LoRA, and model compression and acceleration techniques for mobile deployment are under development. ChatTTSPlus is a capable, easy-to-use speech synthesis tool for a wide range of scenarios. It is particularly well suited to applications that need high performance or voice cloning.
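The throughput figures quoted above (28 and 110 tokens/s) translate directly into a speedup and into wall-clock time. The snippet below works through that arithmetic. The 1,000-token job size is an arbitrary illustration, and real timings also depend on the text, the hardware and the settings.

```python
BASELINE_TPS = 28    # tokens/s without TensorRT, as quoted above
TENSORRT_TPS = 110   # tokens/s with TensorRT, as quoted above


def speedup(baseline_tps: float, accelerated_tps: float) -> float:
    """Ratio of accelerated throughput to baseline throughput."""
    return accelerated_tps / baseline_tps


def seconds_for(tokens: int, tokens_per_second: float) -> float:
    """Time needed to generate `tokens` at a constant throughput."""
    return tokens / tokens_per_second


print(f"speedup: {speedup(BASELINE_TPS, TENSORRT_TPS):.2f}x")  # speedup: 3.93x
print(f"1000 tokens: {seconds_for(1000, BASELINE_TPS):.1f} s -> "
      f"{seconds_for(1000, TENSORRT_TPS):.1f} s")              # 1000 tokens: 35.7 s -> 9.1 s
```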

Address: https://github.com/warmshao/ChatTTSPlus

© Copyright Notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
