Overshadowed by DeepSeek-R1, which was released at the same time, the Kimi k1.5 technical report improves long-context and multimodal reasoning

Kimi k1.5 Technical Report: Quick Read

1. Powerful multimodal reasoning:

The Kimi k1.5 model achieves state-of-the-art reasoning performance across multiple benchmarks and modalities, including mathematical, coding, textual, and visual reasoning tasks.

It handles not only plain text but also combinations of images and text, enabling true multimodal reasoning.

Both the long-CoT and short-CoT versions perform strongly.

It leads on benchmarks such as AIME, MATH 500, and Codeforces.

It also performs strongly on visual benchmarks such as MathVista.

2. Breakthroughs in long-context RL training:

- Scaling the context window to 128k yields continued performance gains, suggesting that context length is a key dimension for scaling reinforcement learning.

- A partial rollout technique substantially improves the efficiency of long-context RL training, making it possible to train on longer and more complex reasoning processes.

- Long contexts are shown to be critical for solving complex problems.

3. Effective long2short methods:

- An effective long2short method is proposed that transfers knowledge from the long-CoT model to the short-CoT model, improving both its performance and its token efficiency.

- Techniques such as model merging, shortest rejection sampling, and DPO improve short models, and do so more efficiently than training short models directly.

- It is shown that transferring the thinking priors of long-CoT models can improve the performance of short models.

4. Optimized RL training framework:

Improved policy optimization methods are proposed to achieve robust performance without relying on complex techniques.

Multiple sampling strategies, length penalties, and data recipe optimizations are explored to improve the efficiency and effectiveness of RL training.

The effectiveness of the proposed methodology and training techniques was verified through ablation studies.

5. Detailed training process and system design:

- A detailed training recipe for Kimi k1.5 is made public, covering the pre-training, fine-tuning, and reinforcement learning phases.

- Hybrid deployment strategies are presented to optimize resource utilization during training and inference.

- Data pipelines and quality control mechanisms are detailed to ensure the quality of the training data.

- A secure code sandbox is developed for executing and evaluating generated code.

6. Exploration of model size and context length:

The effects of model size and context length on performance are investigated. Larger models perform better, but smaller models with longer contexts can approach or even match the performance of larger ones, which shows how much RL training can improve model performance.

Abstract

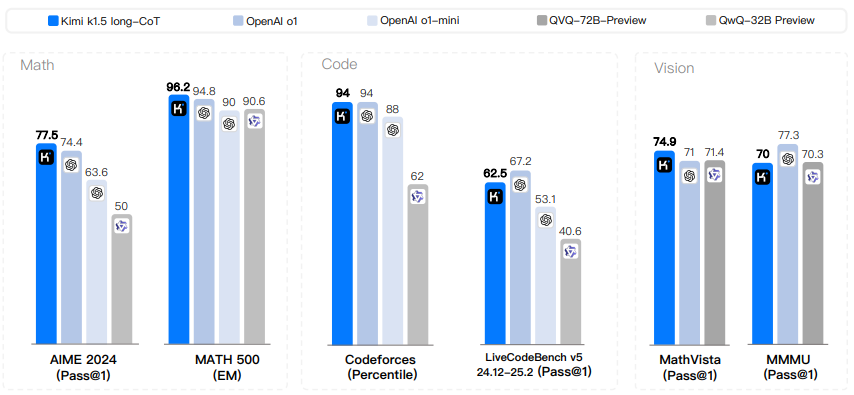

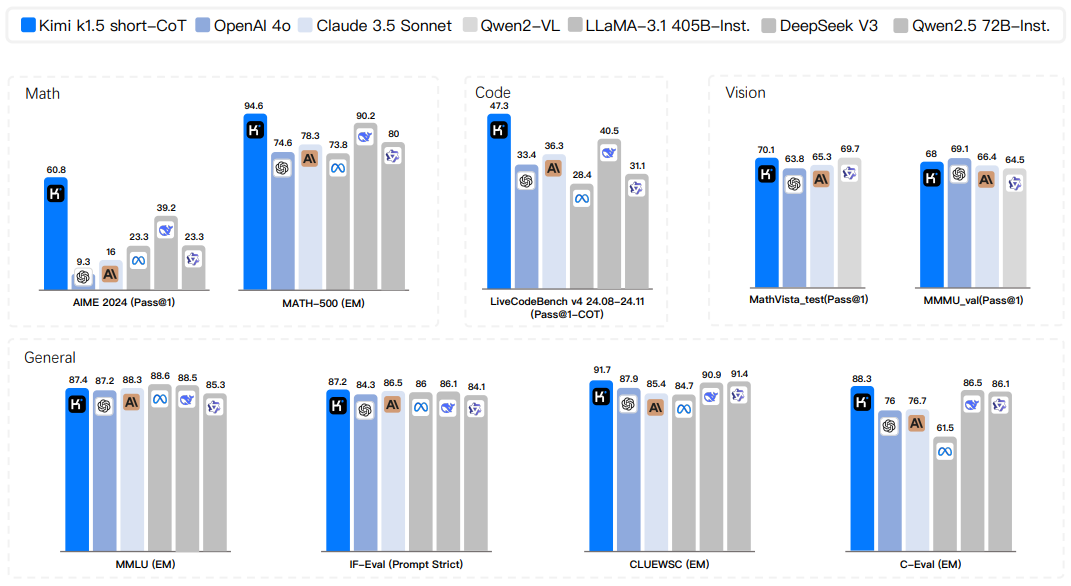

Language model pre-training with next-token prediction has proved effective for scaling compute but is limited by the amount of available training data. Scaling reinforcement learning (RL) opens a new axis for the continued improvement of artificial intelligence, with the promise that large language models (LLMs) can scale their training data by learning to explore with rewards. However, prior published work has not produced competitive results. In light of this, we report on the training practice of Kimi k1.5, our latest multimodal LLM trained with RL, including its RL training techniques, multimodal data recipes, and infrastructure optimization. Long-context scaling and improved policy optimization methods are key ingredients of our approach, which establishes a simple and effective RL framework without relying on more complex techniques such as Monte Carlo tree search, value functions, and process reward models. Notably, our system achieves state-of-the-art reasoning performance across multiple benchmarks and modalities (e.g., 77.5 on AIME, 96.2 on MATH 500, 94th percentile on Codeforces, and 74.9 on MathVista), matching OpenAI's o1. In addition, we present effective long2short methods that use long-CoT techniques to improve short-CoT models, yielding state-of-the-art short-CoT reasoning results (e.g., 60.8 on AIME, 94.6 on MATH500, and 47.3 on LiveCodeBench) that outperform existing short-CoT models such as GPT-4o and Claude Sonnet 3.5 by a large margin (up to +550%).

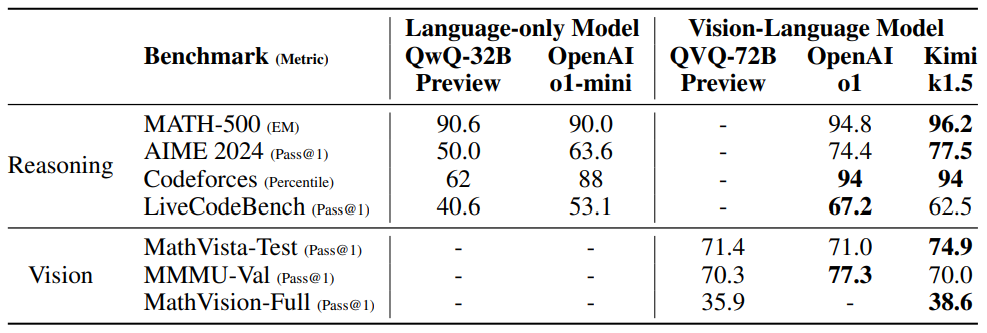

Figure 1: Kimi k1.5 long-CoT results.

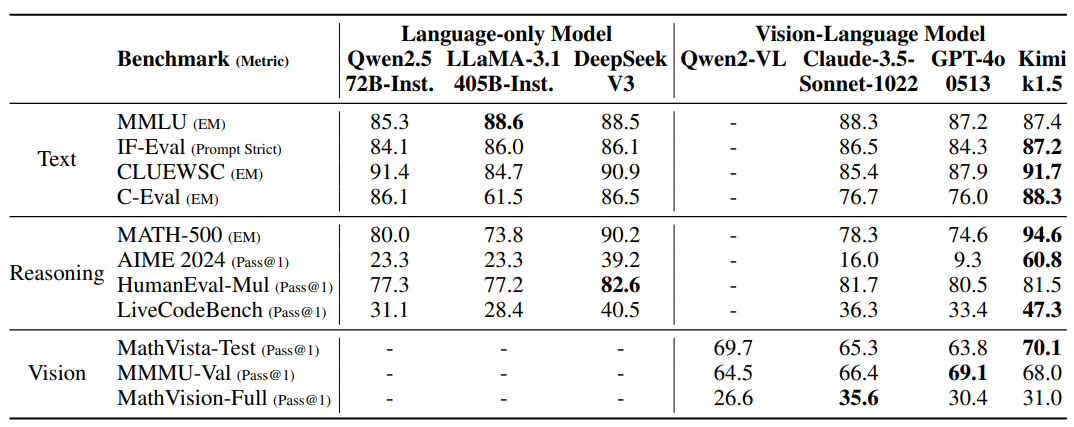

Figure 2: Kimi k1.5 short-CoT results.

1 Introduction

Language model pre-training with next-token prediction has been studied in the context of scaling laws, where scaling model parameters and data sizes leads to continued improvements in intelligence (Kaplan et al. 2020; Hoffmann et al. 2022). However, this approach is limited by the amount of available high-quality training data (Villalobos et al. 2024; Muennighoff et al. 2023). In this report, we present the training recipe of Kimi k1.5, our latest multimodal LLM trained with reinforcement learning (RL). The goal is to explore a possible new axis for continued scaling. With RL, an LLM learns to explore with rewards and is therefore not limited to a pre-existing static dataset.

The design and training of k1.5 rest on several key ingredients.

- Long-context scaling: We scale the context window of RL to 128k and observe continued performance improvements as the context length increases. A key idea behind our approach is to use partial rollouts to improve training efficiency. New trajectories are sampled by reusing a large chunk of a previous trajectory, which avoids the cost of regenerating them from scratch. Our observations identify context length as a key dimension for the continued scaling of RL with LLMs.

- Improved policy optimization: We derive an RL formulation with long-CoT and employ a variant of online mirror descent for robust policy optimization. The algorithm is further improved by our effective sampling strategy, length penalty, and optimization of the data recipe.

- Simplified framework: Combining long-context scaling with improved policy optimization methods yields a simplified RL framework for learning with LLMs. Because we are able to scale the context length, the learned CoTs exhibit the properties of planning, reflection, and correction. A longer context has the effect of increasing the number of search steps. As a result, we show that strong performance can be achieved without relying on more complex techniques such as Monte Carlo tree search, value functions, and process reward models.

- Multimodality: Our model is jointly trained on text and vision data, which gives it the ability to reason jointly over the two modalities.
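The partial-rollout idea from the first item can be illustrated with a toy loop. This is a minimal sketch, not the report's implementation. It assumes each iteration gives every active trajectory a fixed token budget. Trajectories that do not finish keep their generated prefix and are resumed in the next iteration, so they are never regenerated from scratch. `Trajectory`, `partial_rollout_step`, and the toy generator are hypothetical names. The generator stands in for an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Trajectory:
    prompt: str
    tokens: List[str] = field(default_factory=list)
    done: bool = False


# Hypothetical generator interface: (trajectory, budget) -> (new_tokens, finished)
Generator = Callable[[Trajectory, int], Tuple[List[str], bool]]


def partial_rollout_step(
    active: List[Trajectory], generate: Generator, budget: int
) -> Tuple[List[Trajectory], List[Trajectory]]:
    """Advance each trajectory by at most `budget` tokens.

    Returns (finished, carry_over). Unfinished trajectories keep their prefix
    and are resumed next iteration instead of being regenerated.
    """
    finished: List[Trajectory] = []
    carry_over: List[Trajectory] = []
    for traj in active:
        new_tokens, is_done = generate(traj, budget)
        traj.tokens.extend(new_tokens)  # append to the reused prefix
        traj.done = is_done
        (finished if is_done else carry_over).append(traj)
    return finished, carry_over


def make_toy_generator(target_len: Dict[str, int]) -> Generator:
    """Toy stand-in for an LLM: each prompt needs a fixed number of tokens."""
    def generate(traj: Trajectory, budget: int) -> Tuple[List[str], bool]:
        remaining = target_len[traj.prompt] - len(traj.tokens)
        n = min(budget, remaining)
        return ["tok"] * n, n == remaining
    return generate


gen = make_toy_generator({"short": 3, "long": 10})
active = [Trajectory("short"), Trajectory("long")]
iteration = 0
while active:
    iteration += 1
    finished, active = partial_rollout_step(active, gen, budget=4)
    for t in finished:
        print(iteration, t.prompt, len(t.tokens))
```

In this toy run, the long trajectory spans three iterations, and each iteration only appends to what was already generated. A real system would also add new prompts to the active set each iteration.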

In addition, we propose effective long2short methods that use long-CoT techniques to improve short-CoT models. Specifically, our approaches include applying a length penalty with long-CoT activations, and model merging.

Our long-CoT version achieves state-of-the-art reasoning performance across multiple benchmarks and modalities (e.g., 77.5 on AIME, 96.2 on MATH 500, 94th percentile on Codeforces, and 74.9 on MathVista), matching OpenAI's o1. Our model also achieves state-of-the-art short-CoT reasoning results (e.g., 60.8 on AIME, 94.6 on MATH500, and 47.3 on LiveCodeBench), outperforming existing short-CoT models such as GPT-4o and Claude Sonnet 3.5 by a large margin (up to +550%). The results are shown in Figures 1 and 2.

2 Method: Reinforcement Learning with LLMs

The development of Kimi k1.5 consists of several stages: pre-training, vanilla supervised fine-tuning (SFT), long-CoT supervised fine-tuning, and reinforcement learning (RL). This report focuses on RL. It begins with an overview of RL prompt set curation (Section 2.1) and long-CoT supervised fine-tuning (Section 2.2), followed by an in-depth discussion of RL training strategies in Section 2.3. More details on pre-training and vanilla supervised fine-tuning can be found in Section 2.5.

2.1 RL Prompt Set Curation

Through our preliminary experiments, we found that the quality and diversity of the RL prompt set play a critical role in ensuring the effectiveness of reinforcement learning. A well-constructed prompt set not only guides the model toward robust reasoning but also mitigates the risk of reward hacking and of overfitting to superficial patterns. Specifically, three key properties define a high-quality RL prompt set:

- Diverse coverage: Prompts should span a variety of disciplines, such as STEM, coding, and general reasoning, to enhance the model's adaptability and ensure broad applicability across domains.

- Balanced difficulty: The prompt set should include an evenly distributed mix of easy, moderate, and difficult questions to support gradual learning and prevent overfitting to a specific complexity level.

- Accurate evaluability: Prompts should allow verifiers to assess answers objectively and reliably, so that model performance is measured by sound reasoning rather than superficial patterns or random guessing.

To achieve diverse coverage in the prompt set, we use automatic filters to select questions that require rich reasoning and are easy to evaluate. Our dataset includes problems from various domains, such as STEM fields, competitions, and general reasoning tasks, and contains both text-only and image-text question-answering data. In addition, we developed a tagging system that categorizes prompts by domain and discipline to ensure balanced representation across subject areas (M. Li et al. 2023; W. Liu et al. 2023).

We adopt a model-based approach that uses the model's own capacity to adaptively assess the difficulty of each prompt. Specifically, for each prompt, the SFT model generates ten answers at a relatively high sampling temperature. The pass rate is then calculated and used as a proxy for the prompt's difficulty: the lower the pass rate, the higher the difficulty. This approach aligns the difficulty assessment with the model's intrinsic capabilities, which makes it highly effective for RL training. With this approach, we can prefilter most trivial cases and easily explore different sampling strategies during RL training.
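The pass-rate proxy for difficulty can be sketched as follows. This is a minimal illustration with several assumptions:

- `sample_and_check` is a hypothetical callback. It stands for "sample one answer from the SFT model at high temperature and verify it".
- Ten samples per prompt follow the text.
- Dropping prompts that pass every sample is an assumed cutoff for "trivial". The report does not give the threshold.

```python
from itertools import cycle
from typing import Callable, Dict, List


def estimate_pass_rates(
    prompts: List[str],
    sample_and_check: Callable[[str], bool],
    num_samples: int = 10,
) -> Dict[str, float]:
    """Pass rate per prompt; a lower pass rate means a harder prompt."""
    rates: Dict[str, float] = {}
    for prompt in prompts:
        passes = sum(sample_and_check(prompt) for _ in range(num_samples))
        rates[prompt] = passes / num_samples
    return rates


def drop_trivial(rates: Dict[str, float], max_rate: float = 1.0) -> List[str]:
    """Keep prompts whose pass rate is below `max_rate` (hypothetical cutoff)."""
    return [p for p, rate in rates.items() if rate < max_rate]


# Toy stand-in for "sample one answer at high temperature and verify it".
_medium_outcomes = cycle([True, False])


def toy_sample_and_check(prompt: str) -> bool:
    if prompt == "easy":
        return True
    if prompt == "hard":
        return False
    return next(_medium_outcomes)


rates = estimate_pass_rates(["easy", "medium", "hard"], toy_sample_and_check)
print(rates, drop_trivial(rates))
```

The resulting pass rates can also drive the sampling strategies discussed later, for example by weighting prompts with low pass rates more heavily.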

To avoid potential reward hacking (Everitt et al. 2021; Pan et al. 2022), we need to ensure that both the reasoning process and the final answer of each prompt can be accurately verified. Empirical observations show that some complex reasoning problems have relatively simple, easily guessable answers. This leads to false positive verification, where the model reaches the correct answer through an incorrect reasoning process. To address this issue, we exclude questions that are prone to such errors, such as multiple-choice, true/false, and proof-based questions. In addition, for general question-answering tasks, we propose a simple yet effective method to identify and remove easily hackable prompts. Specifically, we prompt the model to guess possible answers without any CoT reasoning steps. If the model predicts the correct answer within N attempts, the prompt is considered too easy to hack and is removed. We found that setting N = 8 removes most easily hackable prompts. Developing more advanced verification models remains an open direction for future research.
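The "guess without CoT" filter can be sketched as below. This is a minimal illustration. `guess_without_cot` and `matches` are hypothetical callbacks: one stands for a direct-answer model call, the other for an answer-equivalence check. The default N = 8 follows the text.

```python
from typing import Callable, List, Tuple


def is_easily_hackable(
    prompt: str,
    answer: str,
    guess_without_cot: Callable[[str], str],
    matches: Callable[[str, str], bool],
    n: int = 8,
) -> bool:
    """True if a direct guess (no CoT) hits the reference answer within n tries."""
    # any() stops at the first correct guess, so at most n guesses are made
    return any(matches(guess_without_cot(prompt), answer) for _ in range(n))


def filter_hackable(
    items: List[Tuple[str, str]],
    guess_without_cot: Callable[[str], str],
    matches: Callable[[str, str], bool],
    n: int = 8,
) -> List[Tuple[str, str]]:
    """Drop (prompt, answer) pairs that can be guessed without reasoning."""
    return [
        (p, a)
        for p, a in items
        if not is_easily_hackable(p, a, guess_without_cot, matches, n)
    ]


toy_guesses = {"Is 7 prime? Answer yes or no.": "yes", "Sum of 1..100?": "100"}
items = [("Is 7 prime? Answer yes or no.", "yes"), ("Sum of 1..100?", "5050")]
print(filter_hackable(items, lambda p: toy_guesses[p], lambda g, a: g == a))
```

With a real model, guesses are stochastic, so a prompt whose answer is hit on any of the N draws is removed.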

2.2 Long-CoT Supervised Fine-Tuning

With the refined RL prompt set, we use prompt engineering to construct a small but high-quality long-CoT warm-up dataset containing accurately verified reasoning paths for both text and image inputs. This approach resembles rejection sampling (RS) but focuses on generating long-CoT reasoning paths through prompt engineering. The resulting warm-up dataset is designed to encapsulate key cognitive processes that are fundamental to human-like reasoning:

- planning, where the model systematically outlines steps before execution;
- evaluation, which involves critical assessment of intermediate steps;
- reflection, which enables the model to reconsider and refine its approach;
- exploration, which encourages consideration of alternative solutions.

By performing lightweight SFT on this warm-up dataset, we effectively prime the model to internalize these reasoning strategies. As a result, the fine-tuned long-CoT model shows an improved ability to generate more detailed and logically coherent responses, which enhances its performance across diverse reasoning tasks.

2.3 Reinforcement Learning

2.3.1 Problem setting

Given a training dataset $\mathcal{D} = \{(x_i, y_i^*)\}_{i=1}^{n}$ of problems $x_i$ and corresponding ground-truth answers $y_i^*$, our goal is to train a policy model $\pi_\theta$ to accurately solve test problems. In the context of complex reasoning, the mapping from a problem $x$ to its solution $y$ is nontrivial. To tackle this challenge, the chain-of-thought (CoT) method uses a sequence of intermediate steps $z = (z_1, z_2, \ldots, z_m)$ to bridge $x$ and $y$. Each $z_i$ is a coherent sequence of tokens that acts as a significant intermediate step toward solving the problem (J. Wei et al. 2022). When solving problem $x$, thoughts $z_t \sim \pi_\theta(\cdot \mid x, z_1, \ldots, z_{t-1})$ are sampled autoregressively, followed by the final answer $y \sim \pi_\theta(\cdot \mid x, z_1, \ldots, z_m)$. We use $y, z \sim \pi_\theta$ to denote this sampling procedure. Note that both the thoughts and the final answer are sampled as language sequences.

To further enhance the model's reasoning abilities, planning algorithms can be employed to explore various thought processes and generate improved CoTs at inference time (Yao et al. 2024; Y. Wu et al. 2024; Snell et al. 2024). The core insight of these approaches is the explicit construction of a search tree of thoughts guided by value estimates. This allows the model to explore diverse continuations of a thought process, or to backtrack and investigate new directions when it reaches a dead end. In more detail, let $\mathcal{T}$ be a search tree where each node represents a partial solution $s = (x, z_{1:|s|})$. Here $s$ consists of the problem $x$ and a sequence of thoughts $z_{1:|s|} = (z_1, \ldots, z_{|s|})$ leading up to that node, where $|s|$ denotes the number of thoughts in the sequence. The planning algorithm uses a critic model $v$ to provide feedback $v(x, z_{1:|s|})$. This feedback helps evaluate the current progress toward solving the problem and identify any errors in the existing partial solution. We note that the feedback can be provided by either a discriminative score or a language sequence (L. Zhang et al. 2024). Guided by the feedback for all $s \in \mathcal{T}$, the planning algorithm selects the most promising node for expansion, thereby growing the search tree. This process repeats iteratively until a full solution is derived.

We can also approach planning algorithms from an algorithmic perspective. At the $t$-th iteration, the past search history $(s_1, v(s_1), \ldots, s_{t-1}, v(s_{t-1}))$ is available. Given this history, a planning algorithm $\mathcal{A}$ iteratively determines the next search direction $\mathcal{A}(s_t \mid s_1, v(s_1), \ldots, s_{t-1}, v(s_{t-1}))$. It also provides feedback on the current search progress, $\mathcal{A}(v(s_t) \mid s_1, v(s_1), \ldots, s_t)$. Both thoughts and feedback can be viewed as intermediate reasoning steps, and both can be represented as sequences of language tokens. We therefore replace $s$ and $v$ with $z$ to simplify the notation. Accordingly, we view a planning algorithm as a mapping that acts directly on a sequence of reasoning steps, $\mathcal{A}(\cdot \mid z_1, z_2, \ldots)$. In this framework, all information stored in the search tree used by the planning algorithm is flattened into the full context provided to the algorithm. This offers an intriguing perspective on generating high-quality CoTs: rather than explicitly constructing a search tree and implementing a planning algorithm, we can train a model to approximate this process. Here, the number of thoughts (i.e., language tokens) is analogous to the computational budget traditionally allocated to planning algorithms. Recent advances in long context windows allow this budget to scale seamlessly during both training and testing. If feasible, this method enables the model to run an implicit search over the reasoning space directly via autoregressive prediction. Consequently, the model not only learns to solve a set of training problems but also develops the ability to tackle individual problems effectively, leading to improved generalization to unseen test problems.

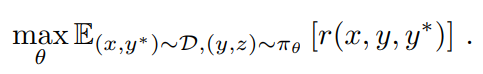

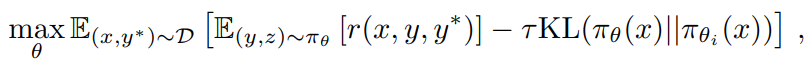

We therefore consider training the model to generate CoTs with reinforcement learning (RL) (OpenAI 2024). Let $r$ be a reward model that judges the correctness of the proposed answer $y$ to a problem $x$ against the ground-truth answer $y^*$, assigning a value $r(x, y, y^*) \in \{0, 1\}$. For verifiable problems, the reward is determined directly by predefined criteria or rules; for example, in coding problems we check whether the answer passes the test cases. For problems with free-form ground-truth answers, we train a reward model $r(x, y, y^*)$ that predicts whether the answer matches the ground truth. Given a problem $x$, the model $\pi_\theta$ generates a CoT and a final answer through the sampling procedure $z \sim \pi_\theta(\cdot \mid x)$, $y \sim \pi_\theta(\cdot \mid x, z)$. The quality of the generated CoT is judged by whether it leads to a correct final answer. In summary, we consider the following objective for optimizing the policy:

$$\max_{\theta} \; \mathbb{E}_{(x, y^*) \sim \mathcal{D},\, (y, z) \sim \pi_\theta} \left[ r(x, y, y^*) \right]$$

By scaling up RL training, we aim to train a model that combines the strengths of simple prompt-based CoT and planning-augmented CoT. The model still samples language sequences autoregressively during inference. This avoids the complex parallelization that advanced planning algorithms require during deployment. However, a key distinction from simple prompt-based methods is that the model should not merely follow a series of reasoning steps. Instead, it should also learn critical planning skills by using the entire set of explored thoughts as contextual information. These skills include error identification, backtracking, and solution refinement.

2.3.2 Policy Optimization

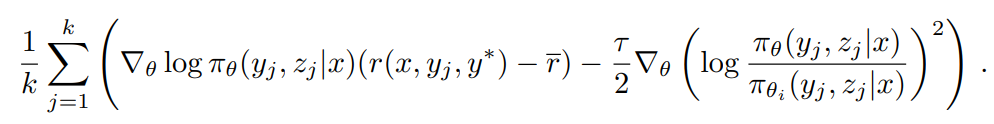

We apply a variant of online policy mirror descent as our training algorithm (Abbasi-Yadkori et al. 2019; Mei et al. 2019; Tomar et al. 2020). The algorithm runs iteratively. At the $i$-th iteration, we use the current model $\pi_{\theta_i}$ as the reference model and optimize the following relative-entropy-regularized policy optimization problem:

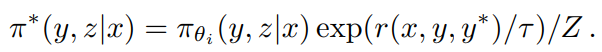

where $\tau > 0$ is a parameter controlling the degree of regularization. This objective has a closed-form solution:

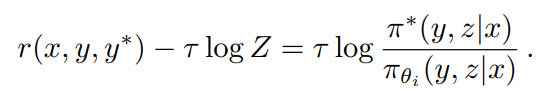

where $Z = \sum_{y', z'} \pi_{\theta_i}(y', z' \mid x) \exp(r(x, y', y^*)/\tau)$ is the normalization factor. Taking the logarithm of both sides, we find that the following constraint holds for any $(y, z)$. This constraint allows us to leverage off-policy data during optimization:

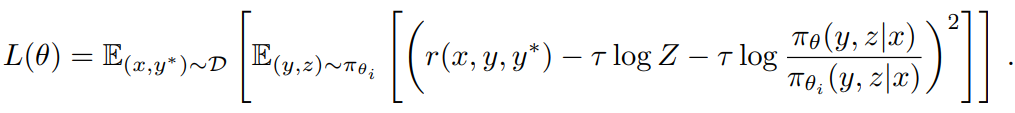
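The closed-form solution and the log constraint can be checked numerically on a toy problem. This is a minimal sketch under a simplifying assumption: instead of all token sequences, $\pi_{\theta_i}$ is a distribution over four candidate responses $(y, z)$ with binary rewards. The probabilities, rewards, and $\tau = 0.5$ are arbitrary illustrative values. `optimal_policy` is a hypothetical helper.

```python
import math
from typing import List, Tuple


def optimal_policy(
    ref_probs: List[float], rewards: List[float], tau: float
) -> Tuple[List[float], float]:
    """pi*(y) = pi_ref(y) * exp(r(y) / tau) / Z over a finite candidate set."""
    weights = [p * math.exp(r / tau) for p, r in zip(ref_probs, rewards)]
    z = sum(weights)  # normalization factor Z
    return [w / z for w in weights], z


ref = [0.4, 0.3, 0.2, 0.1]      # toy pi_theta_i over four candidate (y, z)
rewards = [0.0, 1.0, 0.0, 1.0]  # binary correctness rewards
tau = 0.5
pi_star, z = optimal_policy(ref, rewards, tau)

# Check r - tau * log Z == tau * log(pi* / pi_ref) for every candidate.
gap = max(
    abs((r - tau * math.log(z)) - tau * math.log(p_opt / p_ref))
    for p_ref, p_opt, r in zip(ref, pi_star, rewards)
)
print(pi_star, gap)
```

Probability mass shifts toward the correct responses, and a smaller $\tau$ makes the shift sharper. The printed `gap` is zero up to floating-point error, which is the identity the surrogate loss builds on.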

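This motivates the following surrogate loss:

$$L(\theta) = \mathbb{E}_{(x, y^*) \sim \mathcal{D}} \left[ \mathbb{E}_{(y, z) \sim \pi_{\theta_i}} \left[ \left( r(x, y, y^*) - \tau \log Z - \tau \log \frac{\pi_\theta(y, z \mid x)}{\pi_{\theta_i}(y, z \mid x)} \right)^2 \right] \right]$$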

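To approximate $\tau \log Z$, we use samples $(y_1, z_1), \ldots, (y_k, z_k) \sim \pi_{\theta_i}$:

$$\tau \log Z \approx \tau \log \frac{1}{k} \sum_{j=1}^{k} \exp(r(x, y_j, y^*)/\tau)$$

We also find that using the empirical mean of the sampled rewards, $\bar{r} = \mathrm{mean}(r(x, y_1, y^*), \ldots, r(x, y_k, y^*))$, yields effective practical results. This is reasonable because $\tau \log Z$ approaches the expected reward under $\pi_{\theta_i}$ as $\tau \to \infty$. Finally, we derive our learning algorithm by taking the gradient of the surrogate loss. For each problem $x$, $k$ responses are sampled using the reference policy $\pi_{\theta_i}$, and the gradient is given by

$$\frac{1}{k} \sum_{j=1}^{k} \left( \nabla_\theta \log \pi_\theta(y_j, z_j \mid x) \left( r(x, y_j, y^*) - \bar{r} \right) - \frac{\tau}{2} \nabla_\theta \left( \log \frac{\pi_\theta(y_j, z_j \mid x)}{\pi_{\theta_i}(y_j, z_j \mid x)} \right)^2 \right)$$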
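The claim that $\tau \log Z$ approaches the mean reward as $\tau$ grows can be checked directly. The sketch below evaluates the sample estimate $\tau \log \frac{1}{k}\sum_j \exp(r_j/\tau)$ for increasing $\tau$ on an arbitrary toy batch of binary rewards. For small $\tau$ the estimate approaches the maximum reward; for large $\tau$ it approaches the empirical mean $\bar{r}$. `tau_log_z` is a hypothetical helper name.

```python
import math
from statistics import mean
from typing import List


def tau_log_z(rewards: List[float], tau: float) -> float:
    """Sample estimate of tau * log Z: tau * log((1/k) * sum_j exp(r_j / tau))."""
    scaled = [r / tau for r in rewards]
    m = max(scaled)  # log-sum-exp shift to avoid overflow for small tau
    log_mean_exp = m + math.log(sum(math.exp(s - m) for s in scaled) / len(scaled))
    return tau * log_mean_exp


rewards = [1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0, 0.0]  # k = 8 toy samples
for tau in (0.1, 1.0, 10.0, 1000.0):
    print(f"tau={tau:>7}: tau*logZ ~ {tau_log_z(rewards, tau):.4f}  (mean = {mean(rewards):.4f})")
```

The estimate decreases monotonically toward $\bar{r} = 0.375$ as $\tau$ grows. This motivates substituting the simpler sample mean.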

For readers familiar with policy gradient methods, this gradient resembles the policy gradient of (2) with the mean of the sampled rewards as the baseline (Kool et al. 2019; Ahmadian et al. 2024). The main differences are that the responses are sampled from $\pi_{\theta_i}$ rather than on-policy, and that an $\ell_2$ regularization is applied. We can therefore view this as a natural extension of the usual on-policy regularized policy gradient algorithm to the off-policy case (Nachum et al. 2017). We sample a batch of problems from $\mathcal{D}$ and update the parameters to $\theta_{i+1}$, which then serves as the reference policy for the next iteration. Each iteration considers a different optimization problem because the reference policy changes. We therefore also reset the optimizer at the start of each iteration.
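The gradient above can be written as $\frac{1}{k}\sum_j c_j \nabla_\theta \log \pi_\theta(y_j, z_j \mid x)$. Here $c_j = (r_j - \bar{r}) - \tau \rho_j$ and $\rho_j = \log \pi_\theta - \log \pi_{\theta_i}$. This rewriting uses $\nabla_\theta \tfrac{1}{2}\rho_j^2 = \rho_j \nabla_\theta \log \pi_\theta$, which holds because $\pi_{\theta_i}$ is fixed. The sketch below computes only these scalar weights from per-sample log-probabilities. Wiring them into an autodiff framework is left as an assumption, for example by treating $c_j$ as a constant (stop-gradient) multiplier on the log-probability. `grad_coefficients` is a hypothetical name.

```python
from statistics import mean
from typing import List


def grad_coefficients(
    rewards: List[float],
    logp_policy: List[float],
    logp_ref: List[float],
    tau: float,
) -> List[float]:
    """Weights c_j with gradient = (1/k) * sum_j c_j * grad log pi_theta(y_j, z_j | x).

    c_j = (r_j - r_bar) - tau * rho_j, where rho_j = log pi_theta - log pi_theta_i.
    The second term comes from the l2 penalty: grad(rho_j**2 / 2) = rho_j * grad log pi_theta.
    """
    r_bar = mean(rewards)  # empirical mean used in place of tau * log Z
    return [
        (r - r_bar) - tau * (lp - lr)
        for r, lp, lr in zip(rewards, logp_policy, logp_ref)
    ]


rewards = [1.0, 0.0, 0.0, 1.0]
same = [-12.0, -15.0, -9.0, -20.0]   # policy == reference at iteration start
moved = [-11.5, -15.0, -9.0, -20.0]  # policy has drifted up on sample 0
print(grad_coefficients(rewards, same, same, tau=0.1))
print(grad_coefficients(rewards, moved, same, tau=0.1))
```

At the start of an iteration, the policy equals the reference and the weights reduce to the mean-baseline advantages. Once the policy drifts, the $\ell_2$ term pulls its log-probability back toward the reference.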

We exclude the value network from our training system, a choice also made in prior work (Ahmadian et al. 2024). This design choice significantly improves training efficiency. We also hypothesize that the conventional use of value functions for credit assignment in classical RL may not suit our context. Consider a scenario where the model has generated a partial CoT $(z_1, z_2, \ldots, z_t)$ and there are two potential next reasoning steps, $z_{t+1}$ and $z'_{t+1}$. Suppose that $z_{t+1}$ leads directly to the correct answer, while $z'_{t+1}$ contains some errors. If an oracle value function were accessible, it would indicate that $z_{t+1}$ has a higher value than $z'_{t+1}$. Under standard credit assignment principles, selecting $z'_{t+1}$ would be penalized because it has a negative advantage relative to the current policy. However, exploring $z'_{t+1}$ is valuable for training the model to generate long CoTs. The reward signal is the correctness of the final answer derived from the long CoT. The model can therefore learn from the trial-and-error pattern of taking $z'_{t+1}$, as long as it successfully recovers and reaches the correct answer. The key takeaway from this example is that we should encourage the model to explore diverse reasoning paths to enhance its ability to solve complex problems. This exploratory approach generates a wealth of experience that supports the development of critical planning skills. Rather than maximizing accuracy on training problems, our primary goal is to equip the model with effective problem-solving strategies that ultimately improve its performance on test problems.

2.3.3 Length penalties

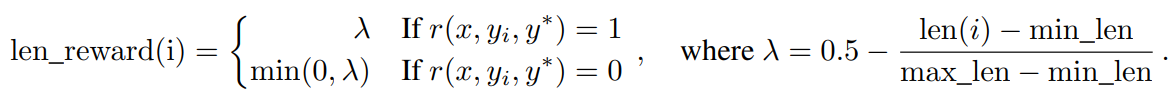

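We observe an overthinking phenomenon in which the model's response length increases significantly during RL training. Although this leads to better performance, an overly lengthy reasoning process is costly during training and inference, and overthinking is generally not preferred by humans. To address this issue, we introduce a length reward that restrains the rapid growth of token length and thereby improves the model's token efficiency. Consider $k$ sampled responses $(y_1, z_1), \ldots, (y_k, z_k)$ to a problem $x$ with ground-truth answer $y^*$. Let $\mathrm{len}(i)$ be the length of $(y_i, z_i)$, $\mathrm{min\_len} = \min_i \mathrm{len}(i)$, and $\mathrm{max\_len} = \max_i \mathrm{len}(i)$. If $\mathrm{max\_len} = \mathrm{min\_len}$, we set the length reward to zero for all responses, since they have the same length. Otherwise, the length reward is given by

$$\mathrm{len\_reward}(i) = \begin{cases} \lambda & \text{if } r(x, y_i, y^*) = 1 \\ \min(0, \lambda) & \text{if } r(x, y_i, y^*) = 0 \end{cases}, \quad \text{where } \lambda = 0.5 - \frac{\mathrm{len}(i) - \mathrm{min\_len}}{\mathrm{max\_len} - \mathrm{min\_len}}$$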

Essentially, among correct responses we promote shorter ones and penalize longer ones, while among incorrect responses we explicitly penalize the longer ones. This length-based reward is then added to the original reward with a weighting parameter.

In our preliminary experiments, we found that the length penalty can slow down training in its initial phase. To alleviate this issue, we propose to warm up the length penalty gradually. Specifically, we first use standard policy optimization without a length penalty, and then apply a constant length penalty for the rest of training.
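The length reward and its warm-up can be sketched as follows. This sketch assumes the length reward has the form λ = 0.5 − (len(i) − min_len)/(max_len − min_len). Correct responses receive λ and incorrect ones receive min(0, λ), which matches the behavior described above. The warm-up is modeled as a step switch from weight 0 to a constant weight. `warmup_steps` and `weight` are hypothetical parameters whose values the text does not give.

```python
from typing import List


def length_rewards(lengths: List[int], correct: List[bool]) -> List[float]:
    """Length reward for k sampled responses to one problem."""
    min_len, max_len = min(lengths), max(lengths)
    if max_len == min_len:  # all responses equally long: no length signal
        return [0.0] * len(lengths)
    rewards = []
    for length, is_correct in zip(lengths, correct):
        lam = 0.5 - (length - min_len) / (max_len - min_len)  # lies in [-0.5, 0.5]
        # Correct answers: shorter is rewarded, longer is penalized.
        # Incorrect answers: only the penalty part applies.
        rewards.append(lam if is_correct else min(0.0, lam))
    return rewards


def shaped_reward(
    base: float, len_reward: float, step: int, warmup_steps: int, weight: float
) -> float:
    """Add the weighted length reward only after the warm-up phase has ended."""
    w = weight if step >= warmup_steps else 0.0
    return base + w * len_reward


print(length_rewards([100, 200, 300], [True, True, False]))
```

A short incorrect response gets no bonus: it is clipped to 0 rather than receiving a positive λ. This keeps the length term from rewarding quick wrong answers.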

2.3.4 Sampling strategy

Although the RL algorithm itself has relatively good sampling properties (harder problems provide larger gradients), its training efficiency is limited. Consequently, well-designed prior sampling methods can yield larger performance gains. We use multiple signals to further improve the sampling strategy. First, the RL training data we collect naturally comes with different difficulty labels; for example, a math competition problem is harder than a primary school math problem. Second, because the RL training process samples the same problem multiple times, we can also track the success rate of each problem as a measure of difficulty. We propose two sampling methods that use these priors to improve training efficiency.

Curriculum Sampling We start by training on easier tasks and gradually progress to more challenging ones. The initial RL model has limited performance. Spending a restricted computation budget on very hard problems therefore often yields few correct samples, which lowers training efficiency. Meanwhile, the collected data naturally includes grade and difficulty labels, making difficulty-based sampling an intuitive and effective way to improve training efficiency.

Prioritized Sampling In addition to curriculum sampling, we use a prioritized sampling strategy to focus on problems where the model underperforms. We track the success rate $s_i$ of each problem $i$ and sample problems with probability proportional to $1 - s_i$, so that problems with lower success rates receive higher sampling probabilities. This directs the model's efforts toward its weakest areas, accelerating learning and improving overall performance.
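Both sampling schemes can be sketched with the Python standard library. This is a minimal illustration. Curriculum ordering simply sorts problems by an integer difficulty label, which is an assumed encoding. Prioritized sampling draws with weights $1 - s_i$, which stay finite even for problems that have never been solved. It uses `random.choices`, which raises `ValueError` if all weights are zero, that is, if every problem is always solved. The helper names are hypothetical.

```python
import random
from collections import Counter
from typing import Dict, List


def curriculum_order(difficulty: Dict[str, int]) -> List[str]:
    """Curriculum: order problems from easiest to hardest by difficulty label."""
    return sorted(difficulty, key=lambda p: difficulty[p])


def prioritized_sample(
    success_rate: Dict[str, float], n: int, rng: random.Random
) -> List[str]:
    """Draw n problems with probability proportional to 1 - success rate."""
    problems = list(success_rate)
    weights = [1.0 - success_rate[p] for p in problems]
    return rng.choices(problems, weights=weights, k=n)


print(curriculum_order({"olympiad": 3, "grade_school": 1, "high_school": 2}))
draws = prioritized_sample(
    {"solved": 1.0, "unsolved": 0.0, "flaky": 0.5}, 1000, random.Random(0)
)
print(Counter(draws))
```

A problem the model always solves gets weight 0 and is never drawn. In practice, success rates are updated as training proceeds, so the weights shift over time.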

2.3.5 More Details on the Training Recipe

Test Case Generation for Coding Many coding problems on the web have no available test cases. We therefore designed a method to automatically generate test cases that serve as rewards for training the model with RL. We focus mainly on problems that do not require a special judge. We also assume that ground-truth solutions are available for these problems, so that we can use them to generate higher-quality test cases.

We enhance our approach using CYaRon¹, a widely recognized test case generation library. We use our base Kimi k1.5 model to generate test cases from problem statements. The usage instructions for CYaRon and the problem description are provided as input to the generator. For each problem, we first use the generator to produce 50 test cases and randomly sample 10 ground-truth submissions for each test case. We then run the test cases against the submissions. A test case is deemed valid if at least 7 out of 10 submissions yield matching results. After this round of filtering, we obtain a set of selected test cases. A problem and its selected test cases are added to our training set if at least 9 out of 10 submissions pass the entire set of selected test cases.
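The two-stage filter can be sketched as follows, using the 7-of-10 and 9-of-10 thresholds above. This sketch interprets "matching results" as agreement with the majority output among the ground-truth submissions; that majority output then becomes the expected output. `run` is a hypothetical callback that executes a submission on one test input. Dropping a problem with no selected cases is also an assumption.

```python
from collections import Counter
from typing import Callable, Dict, List

Run = Callable[[str, str], str]  # hypothetical: (submission, test_input) -> output


def select_test_cases(
    inputs: List[str], submissions: List[str], run: Run, min_agree: int = 7
) -> Dict[str, str]:
    """Keep inputs on which at least min_agree submissions produce the same output."""
    selected: Dict[str, str] = {}
    for test_input in inputs:
        votes = Counter(run(sub, test_input) for sub in submissions)
        output, count = votes.most_common(1)[0]
        if count >= min_agree:
            selected[test_input] = output  # majority output becomes the expected output
    return selected


def keep_problem(
    selected: Dict[str, str], submissions: List[str], run: Run, min_pass: int = 9
) -> bool:
    """Keep the problem if at least min_pass submissions pass every selected case."""
    if not selected:
        return False
    passing = sum(
        all(run(sub, inp) == out for inp, out in selected.items())
        for sub in submissions
    )
    return passing >= min_pass


def toy_run(submission: str, test_input: str) -> str:
    # "good" submissions double the input; the "bad" one always prints 0
    return str(2 * int(test_input)) if submission.startswith("good") else "0"


subs = [f"good{i}" for i in range(9)] + ["bad"]
cases = select_test_cases(["1", "2", "3"], subs, toy_run)
print(cases, keep_problem(cases, subs, toy_run))
```

Running real submissions would require a sandbox with time and memory limits. Here `toy_run` only simulates that step.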

Statistically, from a sample of 1,000 online contest problems, approximately 614 do not require a special judge. We developed 463 test case generators that produced at least 40 valid test cases, resulting in 323 problems in our training set.

Reward Modeling for Mathematics One challenge in evaluating math solutions is that different written forms can represent the same underlying answer. For example, a² − 4 and (a + 2)(a − 2) may both be valid solutions to the same problem. We adopted two methods to improve the scoring accuracy of the reward model:

- Classical RM: Drawing inspiration from the InstructGPT approach (Ouyang et al. 2022), we implemented a value-head-based reward model and collected approximately 800,000 data points for fine-tuning. The model takes the "question", the "reference" answer, and the "response" as input and outputs a single scalar that indicates whether the response is correct.

- Chain-of-Thought RM: Recent studies (Ankner et al. 2024; McAleese et al. 2024) have shown that reward models augmented with chain-of-thought (CoT) reasoning can significantly outperform classical approaches, especially on tasks where nuanced correctness criteria matter, such as mathematics. We therefore collected an equally large dataset of approximately 800,000 CoT-labeled examples to fine-tune the Kimi model. Given the same inputs as the Classical RM, the chain-of-thought approach explicitly generates a step-by-step reasoning process in JSON format before providing a final correctness judgment. This yields more robust and interpretable reward signals.

In a manual spot check, the Classical RM achieved an accuracy of about 84.4%, while the Chain-of-Thought RM reached 98.5%. During RL training, we adopted the Chain-of-Thought RM to ensure more accurate feedback.

Vision Data To improve the model's real-world image reasoning capabilities and achieve more effective alignment between visual inputs and large language models (LLMs), we draw our vision reinforcement learning (Vision RL) data from three categories: real-world data, synthetic visual reasoning data, and text-rendered data.

- The real-world data covers several kinds of tasks:
  - science questions across grade levels that require graphical comprehension and reasoning;
  - location-guessing tasks that require visual perception and inference;
  - analysis tasks that involve understanding complex charts, among others.

  These datasets improve the model's ability to perform visual reasoning in real-world scenarios.

- Synthetic visual reasoning data is artificially generated, including procedurally created images and scenes designed to improve specific visual reasoning skills, such as understanding spatial relationships, geometric patterns, and object interactions. These synthetic datasets offer a controlled environment for testing the model's visual reasoning capabilities and provide an endless supply of training examples.

- Text-rendered data is created by converting textual content into a visual format, allowing the model to maintain consistency in processing text-based queries across different modalities. By converting text documents, code snippets, and structured data into images, we ensure that the model provides a consistent response regardless of whether the input is plain text or text rendered as an image (e.g., a screenshot or photo). This also helps to enhance the model's ability to handle text-heavy images.

Each type of data is essential to building a comprehensive vision-language model that can effectively handle a wide range of real-world applications while ensuring consistent performance across input modalities.

2.4 Long2short: context compression for Short-CoT models

Although long-CoT models achieve strong performance, they consume more test-time tokens than standard short-CoT LLMs. However, the thinking priors of long-CoT models can be transferred to short-CoT models to improve performance even under a limited test-time token budget. We present several approaches to this long2short problem, including model merging (Yang et al. 2024), shortest rejection sampling, DPO (Rafailov et al. 2024), and long2short RL. Detailed descriptions of these approaches are provided below:

Model Merging Model merging has been found to help maintain generalization ability. We also found it effective for improving token efficiency when merging a long-CoT model with a short-CoT model. This approach combines a long-CoT model with a shorter model to obtain a new model without additional training. Specifically, we merge the two models by simply averaging their weights.
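Weight averaging is straightforward once both models share the same architecture and parameter names. The sketch below uses plain Python lists as a toy stand-in for named weight tensors. With PyTorch, the same loop would average the tensors returned by each model's `state_dict()`. `average_weights` and the toy parameter values are hypothetical.

```python
from typing import Dict, List

Params = Dict[str, List[float]]  # toy stand-in for a model's named weights


def average_weights(models: List[Params]) -> Params:
    """Merge same-architecture models by elementwise averaging of each weight."""
    names = models[0].keys()
    if any(m.keys() != names for m in models):
        raise ValueError("all models must have the same parameter names")
    n = len(models)
    return {
        name: [sum(vals) / n for vals in zip(*(m[name] for m in models))]
        for name in names
    }


long_cot = {"w": [1.0, 3.0], "b": [0.0]}
short_cot = {"w": [3.0, 5.0], "b": [2.0]}
print(average_weights([long_cot, short_cot]))
```

Uniform averaging is the simplest choice and matches "simply averaging their weights". A weighted interpolation would be a variant not described in the text.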

Shortest Rejection Sampling We observed that our model generates responses of widely varying lengths for the same problem. Based on this, we designed the shortest rejection sampling method. This method samples the same question n times (n = 8 in our experiments) and selects the shortest correct response for supervised fine-tuning.
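Shortest rejection sampling reduces to "sample n, keep the correct ones, take the shortest". In this minimal sketch, `sample` and `is_correct` are hypothetical callbacks for the model and the verifier. Length is measured in characters for simplicity; a real pipeline would more likely count tokens.

```python
from itertools import cycle
from typing import Callable, Optional


def shortest_correct(
    problem: str,
    sample: Callable[[str], str],
    is_correct: Callable[[str, str], bool],
    n: int = 8,
) -> Optional[str]:
    """Sample n responses; return the shortest correct one, or None if none is correct."""
    candidates = [sample(problem) for _ in range(n)]
    correct = [c for c in candidates if is_correct(problem, c)]
    return min(correct, key=len) if correct else None


pool = cycle(["Let me check again... so the answer is 4", "The answer is 4", "The answer is 5"])
best = shortest_correct("2 + 2 = ?", lambda p: next(pool), lambda p, c: c.endswith("4"))
print(best)
```

Problems where none of the n samples is correct yield `None` and contribute no SFT example.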

DPO Similar to shortest rejection sampling, we use the long-CoT model to generate multiple response samples. The shortest correct solution is selected as the positive sample. Longer responses are treated as negative samples, including both longer incorrect responses and longer correct responses (1.5 times longer than the chosen positive sample). These positive-negative pairs form the pairwise preference data used for DPO training.
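Building the preference pairs can be sketched as follows. This sketch reads the rule as follows: every incorrect response longer than the chosen one is a negative, and a correct response is a negative only if it is at least 1.5 times longer. The "at least" reading and character-based length are assumptions. `build_dpo_pairs` is a hypothetical name.

```python
from typing import Callable, List, Sequence, Tuple


def build_dpo_pairs(
    responses: Sequence[str],
    is_correct: Callable[[str], bool],
    ratio: float = 1.5,
) -> List[Tuple[str, str]]:
    """Return (chosen, rejected) pairs: shortest correct vs. qualifying longer responses."""
    correct_idx = [i for i, r in enumerate(responses) if is_correct(r)]
    if not correct_idx:
        return []  # no positive sample for this problem
    best = min(correct_idx, key=lambda i: len(responses[i]))
    chosen = responses[best]
    pairs = []
    for i, r in enumerate(responses):
        if i == best or len(r) <= len(chosen):
            continue
        # Longer wrong answers always qualify; longer correct ones only if much longer.
        if not is_correct(r) or len(r) >= ratio * len(chosen):
            pairs.append((chosen, r))
    return pairs


samples = ["x=4", "xx=4", "so x=4", "x=5", "long x=5"]
print(build_dpo_pairs(samples, lambda r: r.endswith("=4")))
```

Correct responses only slightly longer than the chosen one (here "xx=4") are left out. This avoids pushing the model away from answers that are nearly as concise.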

Long2short RL After the standard RL training phase, we select the model that offers the best balance between performance and token efficiency as the base model and conduct a separate long2short RL training phase. In this phase, we apply the length penalty introduced in Section 2.3.3 and significantly reduce the maximum rollout length. This further penalizes responses that exceed the desired length, even if they may be correct.

2.5 Other training details

2.5.1 Pre-training

The Kimi k1.5 base model is trained on a diverse, high-quality multimodal corpus. The language data covers five domains: English, Chinese, code, mathematical reasoning, and knowledge. The multimodal data, including captioning, image-text interleaving, OCR, knowledge, and QA datasets, enables our model to acquire vision-language capabilities. Rigorous quality control ensures the relevance, diversity, and balance of the overall pretraining dataset. Our pretraining proceeded in three stages: (1) vision-language pretraining, in which a strong language foundation is established first, followed by gradual multimodal integration; (2) a cooldown stage, in which curated and synthetic data are used to consolidate capabilities, especially for reasoning and knowledge-based tasks; and (3) long-context activation, which extends sequence processing to 131,072 tokens. For more details about our pretraining, please see Appendix B.

2.5.2 Vanilla supervised fine-tuning

We created a vanilla SFT corpus covering multiple domains. For non-reasoning tasks, including question answering, writing, and text processing, we first constructed a seed dataset through human annotation. This seed dataset is used to train a seed model. We then collect a diverse set of prompts and use the seed model to generate multiple responses for each prompt. Annotators rank these responses and refine the top-ranked response to produce the final version. For reasoning tasks such as math and coding problems, where rule-based and reward-model-based verification is more accurate and efficient than human judgment, we expand the SFT dataset using rejection sampling.

Our vanilla SFT dataset contains approximately 1 million text examples: 500,000 for general question answering, 200,000 for coding, 200,000 for math and science, 5,000 for creative writing, and 20,000 for long-context tasks such as summarization, document QA, translation, and writing. In addition, we constructed 1 million text-vision examples covering a variety of categories, including diagram interpretation, OCR, image-grounded dialogue, visual coding, visual reasoning, and math/science problems with visual aids.

We first train the model for 1 epoch at a sequence length of 32k tokens, and then for another epoch at a sequence length of 128k tokens. In the first stage (32k), the learning rate decays from 2 × 10⁻⁵ to 2 × 10⁻⁶; in the second stage (128k), it re-warms to 1 × 10⁻⁵ and finally decays to 1 × 10⁻⁶. To improve training efficiency, we pack multiple training examples into each training sequence.
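Packing can be done in several ways. The sketch below uses greedy first-fit-decreasing bin packing, which is an assumption rather than the method reported here, and takes "32k" to mean 32,768 tokens. It groups example indices so that each packed sequence fits within `max_seq_len` tokens. In practice, packed examples usually also need attention masks or position-ID resets so they do not attend to one another; this sketch omits that.

```python
from typing import List


def pack_examples(lengths: List[int], max_seq_len: int) -> List[List[int]]:
    """Greedy first-fit-decreasing packing of example indices into sequences."""
    bins: List[List[int]] = []
    free: List[int] = []  # remaining token capacity of each packed sequence
    for idx in sorted(range(len(lengths)), key=lambda i: lengths[i], reverse=True):
        size = lengths[idx]
        if size > max_seq_len:
            raise ValueError(f"example {idx} ({size} tokens) exceeds max_seq_len")
        for b, capacity in enumerate(free):
            if size <= capacity:  # first existing sequence with enough room
                bins[b].append(idx)
                free[b] -= size
                break
        else:  # no room anywhere: open a new packed sequence
            bins.append([idx])
            free.append(max_seq_len - size)
    return bins


print(pack_examples([20_000, 10_000, 15_000, 5_000, 12_000], max_seq_len=32_768))
# [[0, 4], [2, 1, 3]]
```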

2.6 RL Infrastructure

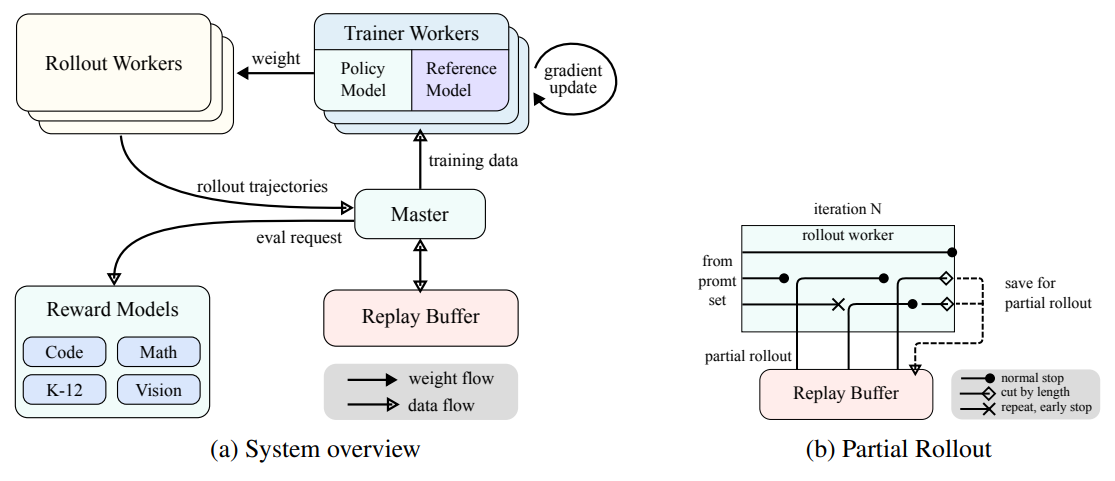

Figure 3: Large-scale reinforcement learning training system for LLMs

(a) System overview

(b) Partial rollout

2.6.1 Large Scale Reinforcement Learning Training System for LLMs

Reinforcement learning (RL) has emerged as a key method for training large language models (LLMs) (Ouyang et al., 2022) (Jaech et al., 2024), building on its success in mastering complex games such as Go, StarCraft II, and Dota 2 with systems like AlphaGo (Silver et al. 2017), AlphaStar (Vinyals et al. 2019), and OpenAI Five (Berner et al. 2019). Following this tradition, the Kimi k1.5 system adopts an iterative synchronous RL framework, carefully designed to strengthen the model's reasoning capabilities through continual learning and adaptation. A key innovation of this system is the partial rollout technique, designed to handle complex reasoning trajectories efficiently.

As shown in Fig. 3a, the RL training system operates in an iterative synchronous manner, where each iteration consists of a rollout phase and a training phase. During the rollout phase, rollout workers, coordinated by a central master, generate rollout trajectories by interacting with the model, producing sequences of responses to various inputs. These trajectories are stored in a replay buffer, which keeps the training data diverse and unbiased by breaking temporal correlations. In the subsequent training phase, trainer workers consume these experiences to update the model's weights. This cycle lets the model continuously learn from its own actions and adjust its policy over time to improve performance.
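The iterative synchronous loop can be sketched in a few lines. Everything here is hypothetical: `rollout`, `reward`, and `train_step` stand in for the rollout workers, the reward model, and the trainer workers, and a bounded `deque` plays the role of the replay buffer. Random sampling from the buffer is what breaks the temporal correlation between consecutive trajectories.

```python
import random
from collections import deque
from typing import Callable, Deque, List, Tuple

Trajectory = Tuple[str, str, float]  # (prompt, response, reward)


def run_iterations(
    prompts: List[str],
    rollout: Callable[[str], str],                    # hypothetical rollout worker
    reward: Callable[[str, str], float],              # hypothetical reward model
    train_step: Callable[[List[Trajectory]], None],   # hypothetical trainer worker
    num_iterations: int,
    batch_size: int,
    buffer_capacity: int = 1024,
    seed: int = 0,
) -> None:
    rng = random.Random(seed)
    replay_buffer: Deque[Trajectory] = deque(maxlen=buffer_capacity)
    for _ in range(num_iterations):
        # Rollout phase: generate trajectories, score them, store them in the buffer.
        for prompt in rng.sample(prompts, k=min(batch_size, len(prompts))):
            response = rollout(prompt)
            replay_buffer.append((prompt, response, reward(prompt, response)))
        # Training phase: a random minibatch from the buffer breaks temporal correlation.
        batch = rng.sample(list(replay_buffer), k=min(batch_size, len(replay_buffer)))
        train_step(batch)


batches: List[List[Trajectory]] = []
run_iterations(["a", "b", "c"], str.upper, lambda p, r: float(r == p.upper()),
               batches.append, num_iterations=3, batch_size=2)
```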

The central master acts as the central conductor, managing data flow and communication among the rollout workers, the trainer workers, reward-model evaluation, and the replay buffer. It keeps the system operating in a coordinated way, balances load, and enables efficient data processing.

The trainer workers access these rollout trajectories, whether completed within a single iteration or spread across multiple iterations, to compute gradient updates that refine the model's parameters and improve its performance. This process is supervised by the reward model, which evaluates the quality of the model's outputs and provides the feedback that guides training. The reward model's evaluations are critical for judging the effectiveness of the policy and steering the model toward optimal performance.

In addition, the system includes a code execution service, designed specifically for code-related problems and integral to the reward model. This service evaluates the model's outputs in realistic coding scenarios, keeping the model's learning closely aligned with real-world programming challenges. By validating the model's solutions against actual code execution, this feedback loop is essential for refining the policy and improving performance on code-related tasks.
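A bare-bones execution-based reward is shown below, assuming each problem comes with (stdin, expected stdout) test cases and solutions are Python programs. The function name and test format are hypothetical. It only runs the code in a subprocess with a timeout; it provides no isolation and should not be used on untrusted code, which is the job of the Sandbox described later in this section.

```python
import subprocess
import sys
from typing import List, Tuple


def execution_reward(source: str, tests: List[Tuple[str, str]], timeout_s: float = 5.0) -> float:
    """Binary reward: 1.0 if the program passes every (stdin, expected_stdout) case, else 0.0.

    WARNING: runs the code directly in a child process; this is not a sandbox.
    """
    for stdin_text, expected in tests:
        try:
            result = subprocess.run(
                [sys.executable, "-c", source],
                input=stdin_text,
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return 0.0  # treat timeouts as failures
        if result.returncode != 0 or result.stdout.strip() != expected.strip():
            return 0.0
    return 1.0


solution = "a, b = map(int, input().split())\nprint(a + b)"
cases = [("1 2\n", "3"), ("10 -4\n", "6")]
print(execution_reward(solution, cases))    # 1.0
print(execution_reward("print(0)", cases))  # 0.0
```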

2.6.2 Partial Rollout for Long-CoT RL

One of the main ideas of our work is to scale long-context RL training. Partial rollout is a key technique for handling the long-CoT setting: it manages the rollout of both long and short trajectories. The technique sets a fixed output-token budget that caps the length of each rollout trajectory. If a trajectory exceeds the token limit during the rollout phase, its unfinished portion is saved to the replay buffer and continued in the next iteration. This ensures that no single lengthy trajectory monopolizes the system's resources. Moreover, because rollout workers run asynchronously, some workers can process long trajectories while others independently handle new, shorter rollout tasks. This asynchronous operation maximizes computational efficiency by keeping every rollout worker actively contributing to training, optimizing the overall performance of the system.

As shown in Figure 3b, the partial rollout system breaks long responses into segments spread across iterations (from iteration n-m to iteration n). The replay buffer serves as the central store for these response segments, and only the current iteration (iteration n) requires online computation. Earlier segments (iterations n-m to n-1) are reused directly from the buffer, so they never need to be rolled out again. This segmented approach significantly reduces computational overhead: instead of rolling out an entire response at once, the system processes and stores segments incrementally, allowing much longer responses to be generated while keeping iteration times short. During training, certain segments can be excluded from the loss computation to further optimize learning, making the overall system both efficient and scalable.
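The following sketch simulates partial rollout under simplifying assumptions. `generate` is a hypothetical callable that continues a response from the tokens produced so far and reports whether it finished; trajectories are processed sequentially rather than by asynchronous workers; and the replay buffer is a plain list. Each trajectory records its segments tagged by iteration, so earlier segments are reused rather than regenerated, and the hypothetical `loss_mask` helper shows how chosen segments could be excluded from the loss.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set, Tuple

# Hypothetical model interface: (prompt, tokens so far, budget) -> (new tokens, finished?)
Generate = Callable[[str, List[str], int], Tuple[List[str], bool]]


@dataclass
class Trajectory:
    prompt: str
    segments: List[Tuple[int, List[str]]] = field(default_factory=list)  # (iteration, tokens)
    finished: bool = False

    def tokens(self) -> List[str]:
        return [tok for _, seg in self.segments for tok in seg]


def partial_rollout_iteration(
    iteration: int,
    new_prompts: List[str],
    replay_buffer: List[Trajectory],
    generate: Generate,
    token_budget: int,
) -> Tuple[List[Trajectory], List[Trajectory]]:
    """One rollout iteration. Returns (finished trajectories, unfinished ones to save)."""
    active = replay_buffer + [Trajectory(p) for p in new_prompts]
    finished: List[Trajectory] = []
    unfinished: List[Trajectory] = []
    for traj in active:
        # Earlier segments are reused from the buffer; only this segment is computed online.
        new_tokens, done = generate(traj.prompt, traj.tokens(), token_budget)
        segment = new_tokens[:token_budget]  # enforce the fixed output-token budget
        traj.segments.append((iteration, segment))
        traj.finished = done and len(new_tokens) <= token_budget
        (finished if traj.finished else unfinished).append(traj)
    return finished, unfinished


def loss_mask(traj: Trajectory, excluded_iterations: Set[int]) -> List[int]:
    """1 for tokens that count toward the loss, 0 for tokens in excluded segments."""
    return [0 if it in excluded_iterations else 1 for it, seg in traj.segments for _ in seg]


# Toy usage: each prompt has a fixed target response that the "model" emits in order.
targets = {"short": list("abc"), "long": list("abcdefgh")}


def toy_generate(prompt: str, so_far: List[str], budget: int) -> Tuple[List[str], bool]:
    rest = targets[prompt][len(so_far):]
    return rest[:budget], len(rest) <= budget


done0, buffer = partial_rollout_iteration(0, ["short", "long"], [], toy_generate, token_budget=4)
done1, buffer = partial_rollout_iteration(1, [], buffer, toy_generate, token_budget=4)
print([t.prompt for t in done0], "".join(done1[0].tokens()), loss_mask(done1[0], {0}))
# ['short'] abcdefgh [0, 0, 0, 0, 1, 1, 1, 1]
```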

The partial rollout implementation also provides repeat detection. The system identifies repeated sequences in the generated content and terminates them early, reducing unnecessary computation while preserving output quality. Detected repetitions can be assigned an additional penalty, effectively discouraging redundant content generation on the prompt set.
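Repeat detection can take many forms; the heuristic below is an assumption, not the system's actual rule. It flags a sequence whose tail consists of the same block of 1 to `max_period` tokens repeated at least `min_repeats` times in a row, a check a rollout loop could run to stop generation early and attach a penalty.

```python
from typing import Sequence


def has_tail_repetition(tokens: Sequence[str], max_period: int = 50, min_repeats: int = 3) -> bool:
    """True if the sequence ends with a block of 1..max_period tokens repeated min_repeats+ times."""
    n = len(tokens)
    for period in range(1, max_period + 1):
        span = period * min_repeats
        if span > n:
            break  # longer periods cannot fit either
        tail = tokens[n - span:]
        # Every position in the tail must match the corresponding token of the first block.
        if all(tail[i] == tail[i % period] for i in range(span)):
            return True
    return False


looping = "the answer is 4 . wait , ".split() * 3
print(has_tail_repetition(looping))            # True
print(has_tail_repetition("a b c d".split()))  # False
```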

2.6.3 Hybrid deployment of training and inference

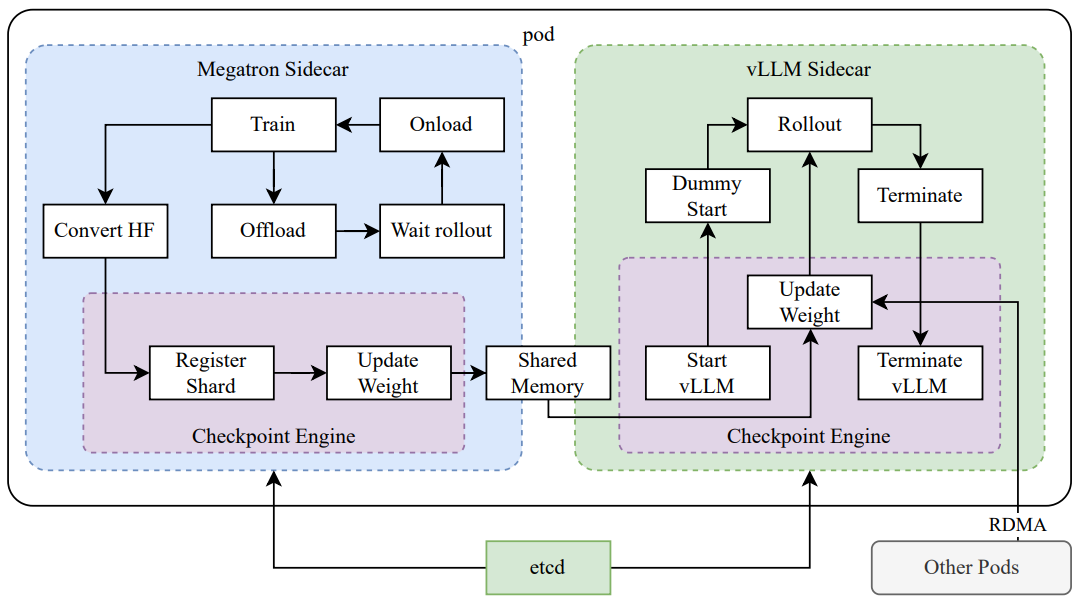

The RL training process consists of the following stages:

Figure 4: Hybrid deployment framework

- Training phase: At the start, Megatron (Shoeybi et al. 2020) and vLLM (Kwon et al. 2023) run in separate containers, each wrapped by a shim process called the checkpoint engine (Section 2.6.3). Megatron starts the training procedure. Once training is complete, Megatron offloads its GPU memory and prepares to transfer the current weights to vLLM.

- Inference phase: After Megatron has offloaded, vLLM starts with dummy model weights and updates them with the latest weights transferred from Megatron via Mooncake (Qin et al. 2024). When the rollout is complete, the checkpoint engine stops all vLLM processes.

- Subsequent training phase: Once the memory allocated to vLLM is released, Megatron reloads its memory onto the GPUs and starts another round of training.

We have found it difficult to support all of the following features at the same time with existing work.

- Complex parallel strategies: Megatron may have a different parallelization strategy than vLLM. Training weights distributed across multiple nodes in Megatron may be difficult to share with vLLM.

- Minimize idle GPU resources: For on-policy RL, recent systems (e.g., SGLang (L. Zheng et al. 2024) and vLLM) may reserve some GPUs during training, which in turn can leave training GPUs idle. It is more efficient to share the same devices between training and inference.

- Dynamic scalability: In some cases, a significant speedup can be achieved by increasing the number of inference nodes while keeping the training process unchanged. Our system can efficiently make use of the required number of idle GPU nodes.

As shown in Figure 4, we implemented this hybrid deployment framework on top of Megatron and vLLM (Section 2.6.3), achieving a transition from the training phase to the inference phase in under one minute and the reverse in about ten seconds.

Hybrid deployment strategy. We propose a hybrid deployment strategy for training and inference tasks that uses Kubernetes sidecar containers sharing all available GPUs, colocating both workloads in a single pod. The main advantages of this strategy are:

- It facilitates efficient resource sharing and management and prevents training nodes from being idle while waiting for inference nodes (when both are deployed on separate nodes).

- With separate deployment images, training and inference can each be iterated on independently for better performance.

- The architecture is not limited to vLLM and other frameworks can be easily integrated.

Checkpoint engine. The checkpoint engine manages the lifecycle of the vLLM process and exposes HTTP APIs that trigger various operations on vLLM. To ensure overall consistency and reliability, we use a global metadata system managed by the etcd service to broadcast operations and status.

When vLLM is offloaded, it can be difficult to fully free up GPU memory, mainly due to CUDA graphs, NCCL buffers, and NVIDIA drivers. To minimize modifications to vLLM, we terminate and restart it when needed for better GPU utilization and fault tolerance.

A worker in Megatron converts its owned checkpoints into the Hugging Face format in shared memory. This conversion also resolves pipeline parallelism and expert parallelism, so that only tensor parallelism remains in these checkpoints. The checkpoints in shared memory are then split into shards and registered in the global metadata system. We use Mooncake to transfer the checkpoints between peer nodes over RDMA. Some modifications to vLLM are required to load the weight files and perform the tensor-parallelism conversion.
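The conversion step can be pictured with plain lists. The sketch assumes each parameter is sharded along a single dimension in contiguous, rank-ordered slices, and that pipeline stages (and, by the same logic, expert groups) own disjoint sets of parameters; `merge_pipeline_stages` and `reshard` are hypothetical helpers. Real checkpoints are more involved (for example, fused QKV weights or row- versus column-parallel layouts), so treat this only as an illustration of gathering shards and re-splitting them for a different tensor-parallel degree.

```python
from typing import Dict, List, Sequence

Shards = List[List[float]]  # one entry per tensor-parallel rank


def merge_pipeline_stages(stages: Sequence[Dict[str, Shards]]) -> Dict[str, Shards]:
    """Collapse pipeline stages: each stage owns a disjoint set of parameters."""
    merged: Dict[str, Shards] = {}
    for stage in stages:
        for name, shards in stage.items():
            if name in merged:
                raise ValueError(f"parameter {name!r} appears in two pipeline stages")
            merged[name] = shards
    return merged


def reshard(shards: Shards, dst_tp: int) -> Shards:
    """Gather rank-ordered shards and split them evenly across dst_tp ranks."""
    full = [x for shard in shards for x in shard]
    if len(full) % dst_tp != 0:
        raise ValueError("parameter size must be divisible by the target TP degree")
    size = len(full) // dst_tp
    return [full[r * size:(r + 1) * size] for r in range(dst_tp)]


stages = [
    {"layers.0.mlp": [[0.0, 1.0], [2.0, 3.0]]},  # pipeline stage 0, TP=2
    {"layers.1.mlp": [[4.0, 5.0], [6.0, 7.0]]},  # pipeline stage 1, TP=2
]
checkpoint = merge_pipeline_stages(stages)
print(reshard(checkpoint["layers.1.mlp"], dst_tp=4))
# [[4.0], [5.0], [6.0], [7.0]]
```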

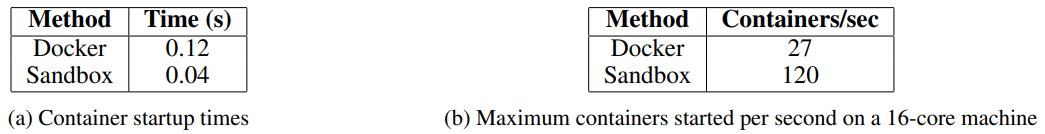

We developed the Sandbox as a secure environment for executing user-submitted code, optimized for code execution and code benchmarking. By dynamically switching container images, the sandbox supports different use cases through images such as MultiPL-E (Cassano, Gouwar, D. Nguyen, S. Nguyen, et al. 2023), DMOJ Judge Server², Lean, Jupyter Notebook, and others.

For RL in coding tasks, Sandbox ensures the reliability of training data judgments by providing a consistent and repeatable evaluation mechanism. Its feedback system supports multi-stage evaluations, such as code execution feedback and repo-level edits, while maintaining a uniform context to ensure fair and unbiased benchmark comparisons across programming languages.

We deployed the service on Kubernetes for scalability and resilience, exposing it through HTTP endpoints for external integration. Kubernetes features such as automatic restarts and rolling updates ensure availability and fault tolerance.

To optimize performance and support RL environments, we have integrated several technologies into our code execution services to improve efficiency, speed, and reliability. These include:

- Using Crun: We use crun as the container runtime instead of Docker, which significantly reduces container startup time.

- Cgroup Reuse: We pre-create cgroups for use by containers, which is critical in highly concurrent scenarios where creating and destroying cgroups for each container can be a bottleneck.

- Disk usage optimization: We use an overlay filesystem whose upper layer is mounted as tmpfs to control disk writes, providing a fixed-size, high-speed storage space. This approach is well suited to ephemeral workloads.
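The cgroup-reuse idea amounts to an object pool: pay the creation cost once up front, then hand out pre-created resources on demand. The sketch below uses a thread-safe `queue.Queue`; the `ResourcePool` class and the string names standing in for cgroups are hypothetical, and no real cgroup is created.

```python
import queue
from contextlib import contextmanager
from typing import Callable, Iterator


class ResourcePool:
    """Create `size` resources once, then lend them out and take them back for reuse."""

    def __init__(self, size: int, create: Callable[[int], str]) -> None:
        self._free: "queue.Queue[str]" = queue.Queue()
        for i in range(size):
            self._free.put(create(i))  # creation cost is paid once, up front

    @contextmanager
    def lease(self, timeout: float = 10.0) -> Iterator[str]:
        # Waits for a free resource; raises queue.Empty if none frees up in time.
        item = self._free.get(timeout=timeout)
        try:
            yield item
        finally:
            self._free.put(item)  # return it to the pool instead of destroying it


pool = ResourcePool(2, lambda i: f"sandbox-cgroup-{i}")  # hypothetical names
with pool.lease() as cg:
    print(cg)  # sandbox-cgroup-0
```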

These optimizations improve RL efficiency in code execution, thus providing a consistently reliable environment for evaluating RL-generated code, which is critical for iterative training and model improvement.

3 Experiments

3.1 Evaluation

Since k1.5 is a multimodal model, we conducted a comprehensive evaluation across modalities on a range of benchmarks. The detailed evaluation setup can be found in Appendix C. Our benchmarks fall into three main categories:

- Text benchmarks: MMLU (Hendrycks et al., 2020), IF-Eval (J. Zhou et al., 2023), CLUEWSC (L. Xu et al., 2020), C-EVAL (Y. Huang et al., 2023).

- Reasoning benchmarks: HumanEval-Mul, LiveCodeBench (Jain et al. 2024), Codeforces, AIME 2024, MATH-500 (Lightman et al. 2023).

- Vision benchmarks: MMMU (Yue, Ni, et al., 2024), MATH-Vision (K. Wang et al., 2024), MathVista (Lu et al., 2023).

3.2 Main results

k1.5 long-CoT model. The performance of the Kimi k1.5 long-CoT model is shown in Table 2. Long-CoT supervised fine-tuning (described in Section 2.2) and vision-text joint reinforcement learning (discussed in Section 2.3) significantly enhance the model's long-horizon reasoning capability. Test-time compute scaling further improves its performance, enabling the model to achieve state-of-the-art results across a range of modalities. Our evaluation shows marked improvements in the model's ability to reason, comprehend, and synthesize information over extended contexts, representing an advance in multimodal AI capabilities.

k1.5 short-CoT model. The performance of the Kimi k1.5 short-CoT model is shown in Table 3. This model integrates several techniques, including traditional supervised fine-tuning (discussed in Section 2.5.2), reinforcement learning (explored in Section 2.3), and long2short methods (outlined in Section 2.4). The results show that the k1.5 short-CoT model delivers competitive or superior performance compared with leading open-source and proprietary models across many tasks, spanning text, vision, and reasoning challenges, with notable strengths in natural language understanding, math, coding, and logical reasoning.

Table 2: Performance of Kimi k1.5 long-CoT and flagship open source and proprietary models.

Table 3: Performance of Kimi k1.5 short-CoT and flagship open-source and proprietary models. VLM results were obtained from the OpenCompass benchmarking platform (https://opencompass.org.cn/).

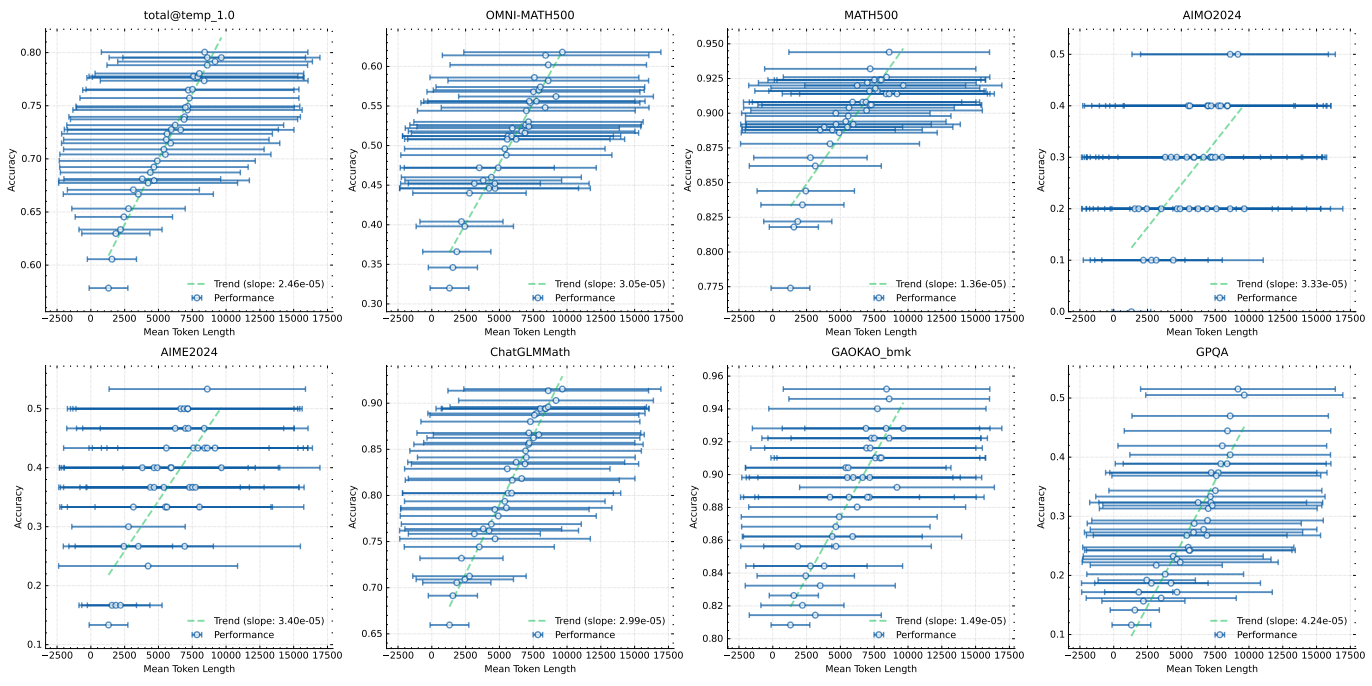

3.3 Long context scaling

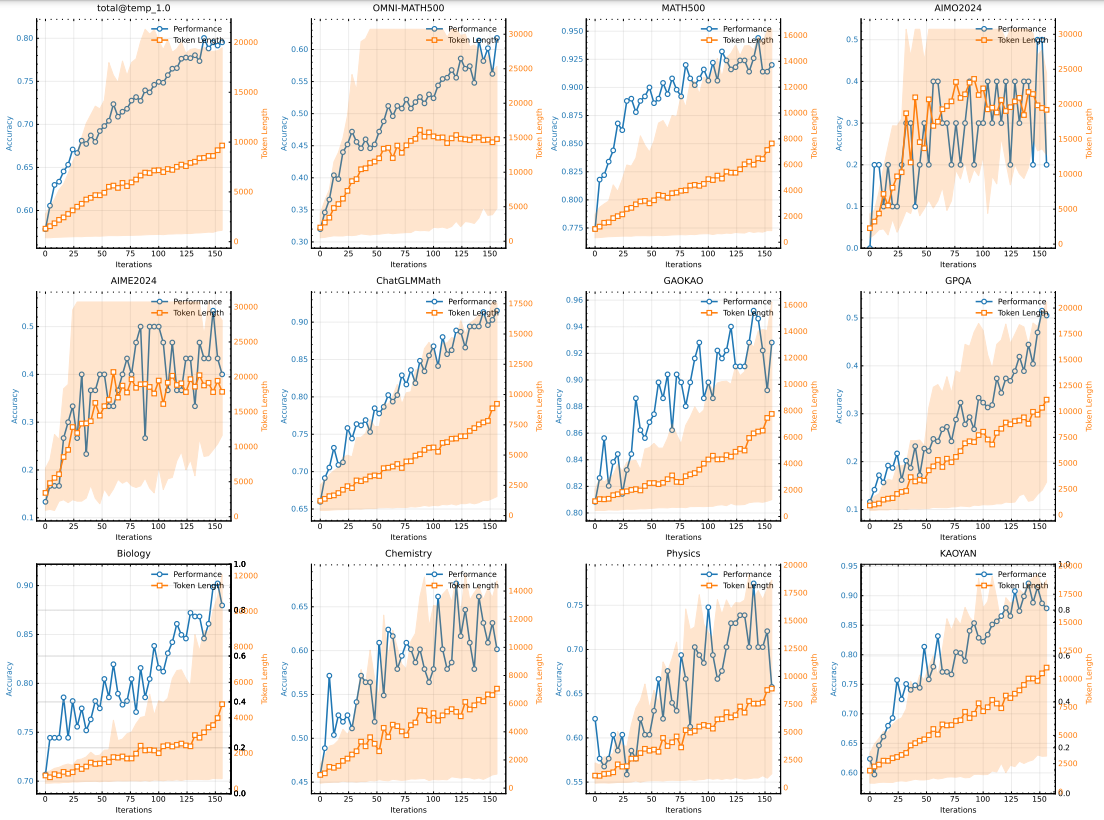

We use a medium-sized model to study the scaling properties of RL with LLMs. Figure 5 shows how training accuracy and response length evolve over training iterations for the small model variant trained on the math prompt set. As training proceeds, we observe a concurrent increase in both response length and accuracy. Notably, response length grows faster on the more challenging benchmarks, indicating that the model learns to generate more elaborate solutions for complex problems. Figure 6 shows a strong correlation between the model's output context length and its problem-solving ability. Our final k1.5 run scales the context length to 128k and shows continued improvement on hard reasoning benchmarks.

Figure 5: Changes in training accuracy and response length as the number of training iterations increases. Note that these scores come from an internal long-CoT model that is much smaller than the k1.5 long-CoT model. The shaded area indicates the 95th percentile of response length.

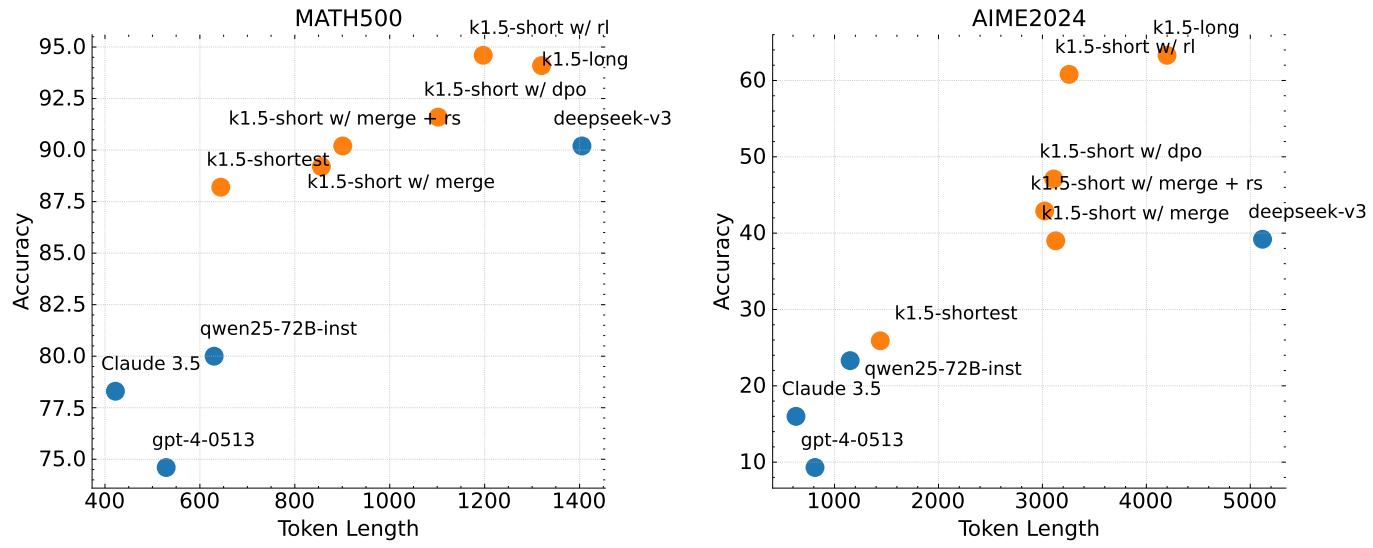

3.4 Long2short

We compare the proposed long2short RL algorithm with the DPO, shortest rejection sampling, and model merging methods introduced in Section 2.4, focusing on token efficiency for the long2short problem (X. Chen et al., 2024), in particular on how the obtained long-CoT model can benefit a short model. In Fig. 7, k1.5-long denotes the long-CoT model we selected for long2short training. k1.5-short w/ rl denotes the short model obtained with long2short RL training. k1.5-short w/ dpo denotes the short model whose token efficiency was improved through DPO training. k1.5-short w/ merge denotes the model after model merging, while k1.5-short w/ merge + rs denotes the short model obtained by applying shortest rejection sampling to the merged model. k1.5-shortest denotes the shortest model we obtained during long2short training. As shown in Fig. 7, the proposed long2short RL algorithm achieves the highest token efficiency among the methods compared, including DPO and model merging. Notably, all models in the k1.5 family (marked in orange) show better token efficiency than the other models (marked in blue). For example, k1.5-short w/ rl achieves a Pass@1 score of 60.8 on AIME2024 (averaged over 8 runs) while using only 3,272 tokens on average. Similarly, k1.5-shortest achieves a Pass@1 score of 88.2 on MATH500 while consuming roughly the same number of tokens as other short models.
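For readers comparing numbers like these, the sketch below shows one straightforward way to compute Pass@1 averaged over several runs, together with the average response length. The input layout (`correct[run][problem]`, `tokens[run][problem]`) and the function names are assumptions, and the sample data are made up for illustration rather than taken from the report.

```python
from statistics import mean
from typing import List


def averaged_pass_at_1(correct: List[List[bool]]) -> float:
    """Per-run accuracy (one sample per problem), averaged over runs, as a percentage."""
    return 100 * mean(mean(1.0 if ok else 0.0 for ok in run) for run in correct)


def average_tokens(tokens: List[List[int]]) -> float:
    """Mean response length in tokens over all runs and problems."""
    return float(mean(n for run in tokens for n in run))


runs = [[True, True, False, False], [True, False, False, False]]  # 2 runs x 4 problems
lengths = [[1000, 3000, 2500, 1500], [2000, 2000, 2200, 1800]]
print(averaged_pass_at_1(runs), average_tokens(lengths))  # 37.5 2000.0
```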

Figure 6: Model performance improves with increasing response length

Figure 7: Long2short performance. All models in the k1.5 family show better token efficiency than the other models.

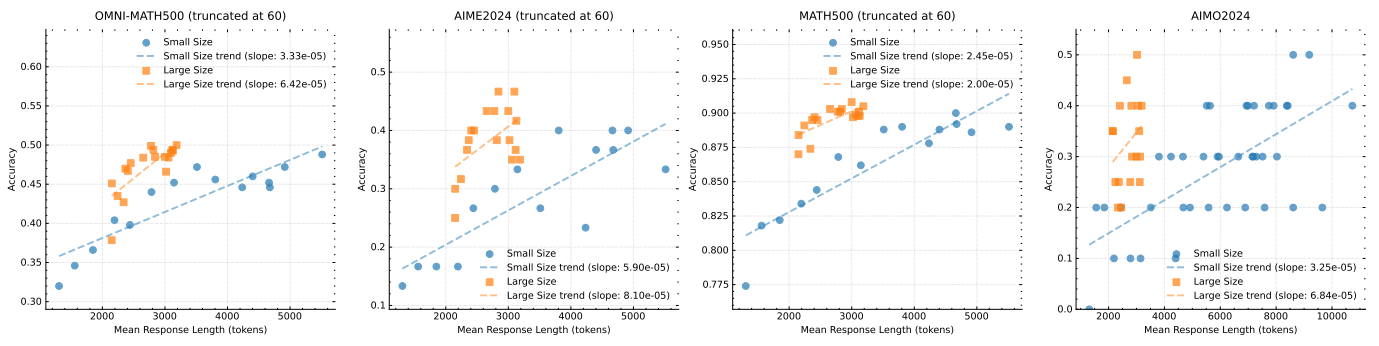

3.5 Ablation studies

Scaling of model size and context length. Our main contribution is applying RL to enhance the model's ability to generate extended CoTs, thereby improving its reasoning. A natural question is how this compares with simply increasing model size. To examine the effectiveness of our approach, we trained two models of different sizes on the same dataset and recorded the evaluation results and average inference lengths at every checkpoint during RL training. The results are shown in Fig. 8. Notably, although the larger model initially outperforms the smaller one, the smaller model can reach comparable performance by exploiting longer CoTs optimized through RL. However, the larger model generally shows better token efficiency than the smaller one. This also suggests that if the goal is the best possible performance, scaling the context length of a larger model has a higher upper bound and is more token efficient. If, however, test-time compute is budgeted, training smaller models with longer context lengths may be a viable solution.

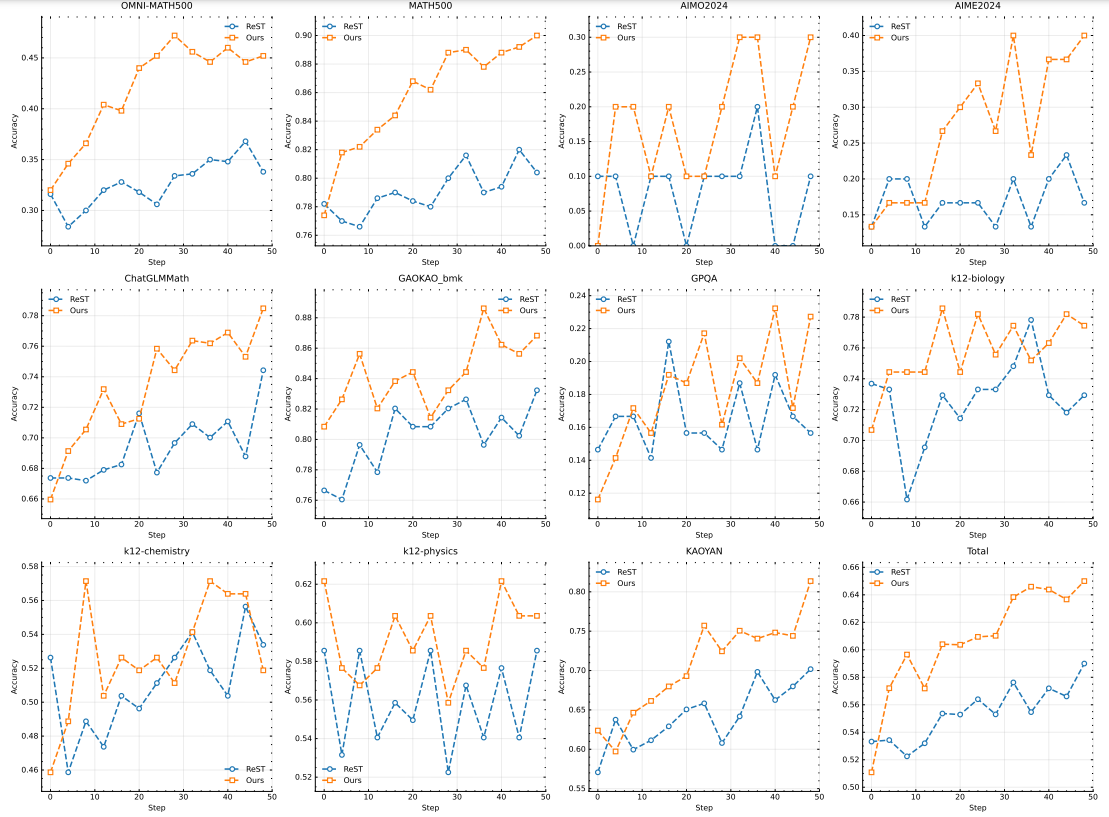

Effects of using negative gradients. We investigate the effectiveness of using ReST (Gulcehre et al. 2023) as the policy optimization algorithm in our setting. The main difference between ReST and other RL-based methods, including ours, is that ReST iteratively refines the model by fitting the best responses sampled from the current model, without applying negative gradients to penalize incorrect responses. As shown in Fig. 10, our method has better sample complexity than ReST, indicating that incorporating negative gradients markedly improves the model's efficiency in generating long CoTs. Our approach not only improves reasoning quality but also streamlines training, yielding robust performance with fewer training samples. This finding suggests that the choice of policy optimization algorithm is crucial in our setting, whereas in other domains the performance gap between ReST and other RL-based methods is less pronounced (Gulcehre et al. 2023). Our results therefore underline the importance of choosing an appropriate optimization strategy to maximize the efficiency of long-CoT generation.
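To make the distinction concrete, the sketch below compares per-response loss weights under a simplified ReST-style rule and a policy-gradient rule with a mean-reward baseline. Both are simplifications introduced for illustration, not the paper's exact objectives; the surrogate loss is -(1/n) * sum_i w_i * log p(y_i | x). With binary rewards, ReST gives incorrect responses zero weight, whereas the baseline rule gives them negative weight, which is the negative gradient that lowers their probability.

```python
from statistics import mean
from typing import List


def rest_weights(rewards: List[float], threshold: float = 1.0) -> List[float]:
    """ReST-style filtering: imitate responses at or above the threshold, ignore the rest."""
    return [1.0 if r >= threshold else 0.0 for r in rewards]


def baseline_weights(rewards: List[float]) -> List[float]:
    """Policy gradient with the mean sampled reward as baseline: below-average responses get negative weight."""
    b = mean(rewards)
    return [r - b for r in rewards]


def surrogate_loss(weights: List[float], logprobs: List[float]) -> float:
    """Minimizing this raises log-probs of positively weighted responses and lowers negatively weighted ones."""
    return -sum(w * lp for w, lp in zip(weights, logprobs)) / len(weights)


rewards = [1.0, 0.0, 0.0, 1.0]
print(rest_weights(rewards))      # [1.0, 0.0, 0.0, 1.0]
print(baseline_weights(rewards))  # [0.5, -0.5, -0.5, 0.5]
```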

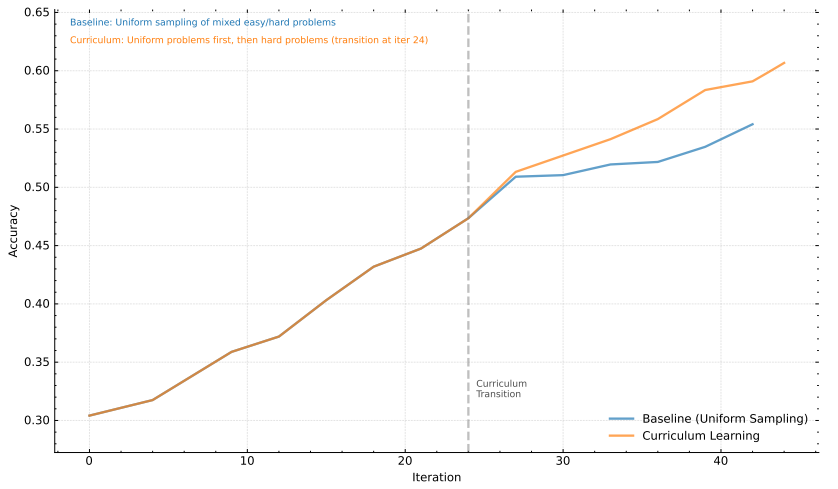

Sampling strategy. We further demonstrate the effectiveness of the curriculum sampling strategy introduced in Section 2.3.4. Our training dataset D contains problems at various difficulty levels. With curriculum sampling, we first use all of D for a warm-up phase and then train the model only on hard problems. We compare this against a baseline that uses uniform sampling without any curriculum adjustment. As shown in Fig. 9, the proposed curriculum sampling approach clearly improves performance. We attribute this improvement to the method's ability to challenge the model progressively, helping it build a stronger understanding of, and ability to handle, complex problems. By concentrating training on harder problems after an initial general warm-up, the model can better strengthen its reasoning and problem-solving capabilities.
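A minimal sketch of curriculum sampling as described: uniform sampling over the whole dataset during warm-up, then sampling only from hard problems. The difficulty scores, the `hard_threshold` cut-off, sampling with replacement, and the `curriculum_batches` function are all assumptions made for illustration; Section 2.3.4 may define difficulty differently.

```python
import random
from typing import Dict, List


def curriculum_batches(
    problems: List[str],
    difficulty: Dict[str, float],  # hypothetical score, higher = harder
    warmup_steps: int,
    total_steps: int,
    batch_size: int,
    hard_threshold: float,
    seed: int = 0,
) -> List[List[str]]:
    """Uniform sampling over all problems during warm-up, then hard problems only."""
    rng = random.Random(seed)
    hard = [p for p in problems if difficulty[p] >= hard_threshold]
    if not hard:
        raise ValueError("no problem meets the hard threshold")
    batches: List[List[str]] = []
    for step in range(total_steps):
        pool = problems if step < warmup_steps else hard
        batches.append(rng.choices(pool, k=batch_size))  # sampling with replacement
    return batches


problems = ["easy-1", "easy-2", "hard-1", "hard-2"]
scores = {"easy-1": 0.1, "easy-2": 0.2, "hard-1": 0.8, "hard-2": 0.9}
schedule = curriculum_batches(problems, scores, warmup_steps=2, total_steps=5,
                              batch_size=3, hard_threshold=0.5)
```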

Figure 8: Model performance versus response length for different model sizes

Figure 9: Analysis of the curriculum learning approach on model performance.

4 Conclusion

We present the training recipe and system design of k1.5, our latest multimodal LLM trained with RL. A key insight from our practice is that scaling context length is crucial for the continued improvement of LLMs. We use optimized learning algorithms and infrastructure optimizations such as partial rollout to achieve efficient long-context RL training. How to further improve the efficiency and scalability of long-context RL training remains an important direction for future work.

Figure 10: Comparison with ReST for policy optimization.

Another contribution is combining several techniques to improve policy optimization. Specifically, we formulate long-CoT RL with LLMs and derive a variant of online mirror descent for robust optimization. We also experimented with sampling strategies, length penalties, and optimized data recipes to achieve strong RL performance.

We show that strong performance can be achieved through long-context scaling and improved policy optimization, even without more sophisticated techniques such as Monte Carlo tree search, value functions, or process reward models. In the future, it would also be interesting to study how to improve credit assignment and reduce overthinking without compromising the model's ability to explore.

We also observed the potential of long2short methods, which substantially improve the performance of short-CoT models. Moreover, long2short methods can be combined with long-CoT RL in an iterative manner to further improve token efficiency and extract the best performance under a given context length budget.

© Copyright notes

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
