BrowseComp: OpenAI Launches a New Benchmark for Evaluating AI Agents' Web Information Retrieval

OpenAI recently released BrowseComp, a new benchmark designed to assess how well AI agents can browse the Internet. It consists of 1,266 questions spanning a wide range of domains, from scientific discovery to pop culture, and requires an agent to navigate the open web persistently in search of answers that are hard to find and depend on several entangled pieces of information.

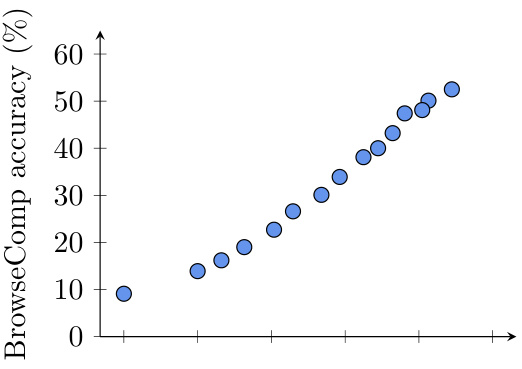

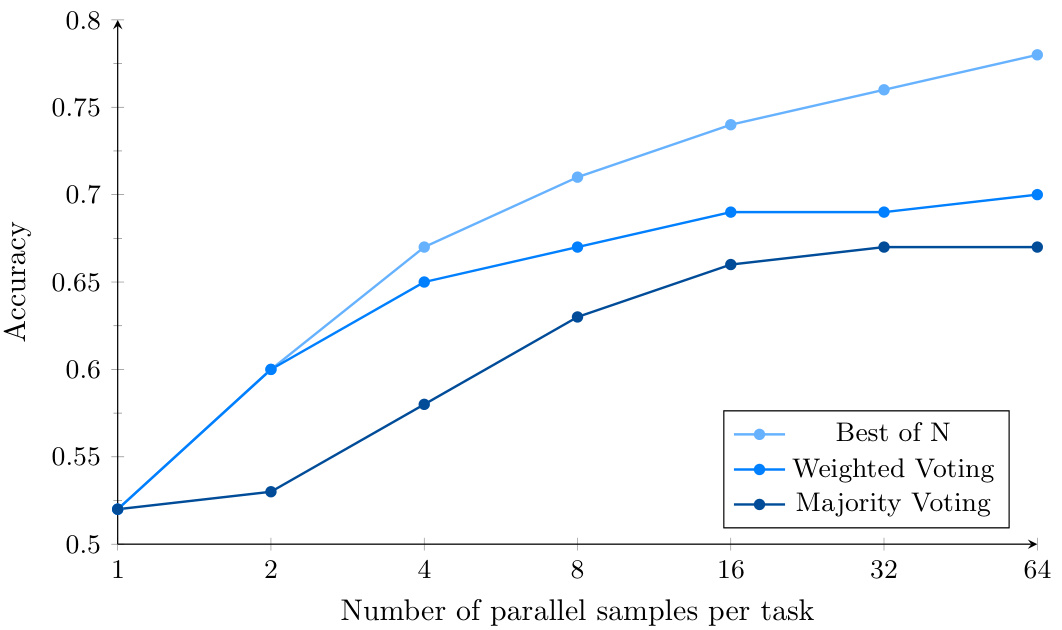

Figure 1: BrowseComp performance of an earlier version of OpenAI Deep Research at different levels of browsing effort. Accuracy improves smoothly as test-time compute increases.

Existing Benchmarks Are "Saturated": Why BrowseComp Was Developed

Some popular benchmarks, such as SimpleQA, focus on a model's ability to retrieve isolated, simple facts. For advanced models such as GPT-4o equipped with browsing tools, these tasks have become too easy and performance is approaching saturation. As a result, benchmarks like SimpleQA can no longer distinguish the true capabilities of models on more complex information that requires deep exploration of the web to find.

OpenAI developed BrowseComp (short for "Browsing Competition") to bridge this gap. The benchmark consists of 1,266 challenging questions and is designed to assess an AI agent's ability to locate information that is hard to find, depends on several entangled facts, and may require visiting dozens or even hundreds of websites. The team has published the benchmark in OpenAI's simple-evals GitHub repository, along with a detailed research paper.

BrowseComp's Design Concept

BrowseComp was designed to fill a gap in existing benchmarks. Earlier benchmarks for information retrieval mostly asked relatively simple questions that current language models can answer easily. BrowseComp instead focuses on complex questions that require in-depth searching and creative reasoning to answer.

Here are some sample questions from BrowseComp:

- Sample question 1: Between 1990 and 1994, which soccer teams played a match with a Brazilian referee in which there were four yellow cards (two per team), three of them issued in the second half, and four substitutions, one of them due to an injury in the first 25 minutes of the match?

- Reference answer: Ireland vs Romania

- Sample question 2: Please identify a fictional character who occasionally breaks the fourth wall to interact with the audience, is known for his humor, and had a television show that aired between the 1960s and 1980s with fewer than 50 episodes.

- Reference answer: Plastic Man

- Sample question 3: Please identify the title of a scientific paper presented at an EMNLP conference between 2018 and 2023 in which the first author had an undergraduate degree from Dartmouth College and the fourth author had an undergraduate degree from the University of Pennsylvania.

- Reference answer: Frequency Effects on Syntactic Rule Learning in Transformers

Unique features of BrowseComp

- Challenging: BrowseComp's questions are carefully designed so that existing models cannot solve them quickly. Human trainers performed multiple rounds of validation when writing the questions to ensure they are difficult. The criteria used to assess difficulty were:

  - Existing models cannot solve them: Trainers were asked to verify that GPT-4o (with and without browsing), OpenAI o1, and an earlier version of the deep research model all failed to answer the question.

  - Not available in search results: Trainers were asked to perform five simple Google searches and check that the answer did not appear on the first few pages of results.

  - Humans cannot solve them in ten minutes: Trainers were asked to write questions hard enough that another person could not solve them within ten minutes. For some questions, a second trainer tried to find the answer. Trainers whose questions were solved more than 40% of the time were asked to revise them.

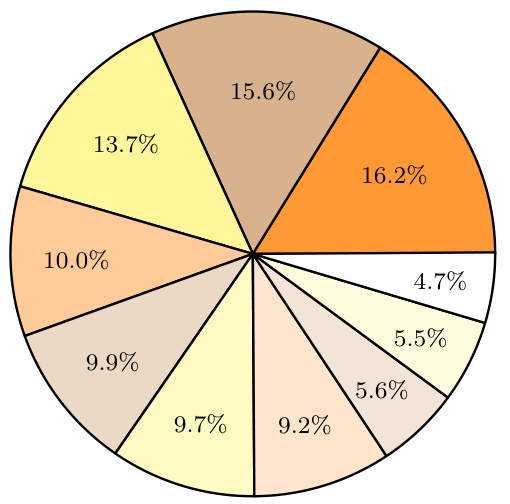

Figure 2: Distribution of topics in BrowseComp. A prompted ChatGPT model categorized the topic of each question after the fact.

- Easy to verify: Despite the difficulty of the questions, the answers are usually short and unambiguous, and can easily be checked against the reference answers. This design makes the benchmark challenging without being unfair.

- Diverse: BrowseComp questions cover a wide range of areas, including TV and movies, science and technology, art, history, sports, music, video games, geography, and politics. This diversity makes the test more comprehensive.
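Because the reference answers are short strings, scoring a run can be reduced to comparing each predicted answer with its reference. The following is a minimal sketch of that idea, assuming a simple normalized exact match; the function names (`normalize`, `is_correct`, `accuracy`) are hypothetical, and the official evaluation may grade answers differently, for example by judging semantic equivalence.

```python
import re
import string


def normalize(answer: str) -> str:
    """Lowercase, strip ASCII punctuation, and collapse whitespace."""
    answer = answer.lower()
    answer = answer.translate(str.maketrans("", "", string.punctuation))
    return re.sub(r"\s+", " ", answer).strip()


def is_correct(predicted: str, reference: str) -> bool:
    """Hypothetical grader: exact match after normalization."""
    return normalize(predicted) == normalize(reference)


def accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that match their reference answer."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have equal length")
    if not references:
        return 0.0
    correct = sum(is_correct(p, r) for p, r in zip(predictions, references))
    return correct / len(references)
```

An exact-match rule like this is strict: a correct answer phrased differently from the reference would be marked wrong, which is one reason a real grader might be more lenient.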

Model performance evaluation

Tests on BrowseComp show that the performance of existing models varies widely:

- GPT-4o and GPT-4.5: Without browsing, accuracy is close to zero. Even with browsing enabled, GPT-4o's accuracy only improves from 0.6% to 1.9%, indicating that browsing alone is not enough to solve complex questions.

- OpenAI o1: The model has no browsing capability but reaches 9.9% accuracy thanks to its strong reasoning ability, which indicates that some answers can be reached by reasoning over internal knowledge.

- OpenAI Deep Research: The model was the top performer, with an accuracy of 51.5%. It autonomously searches the web, evaluates and synthesizes information from multiple sources, and adapts its search strategy, which lets it handle questions that could not otherwise be solved.

In-depth analysis

1. Calibration errors

Although the Deep Research model performs well in terms of accuracy, it has a high calibration error. In other words, the model often gives incorrect answers with high confidence, so its stated confidence is not an accurate estimate of its own uncertainty. This is particularly evident in models with browsing capabilities, which suggests that access to web tools may increase a model's confidence in incorrect answers.
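To make "calibration error" concrete, here is a minimal sketch of one common way to measure it, the expected calibration error (ECE) over equal-width confidence bins. This is an illustration under stated assumptions: the article does not specify which calibration metric or binning was used, and the function name and `n_bins` parameter are hypothetical.

```python
def expected_calibration_error(
    confidences: list[float], correct: list[bool], n_bins: int = 10
) -> float:
    """Weighted average gap between stated confidence and actual accuracy.

    confidences: model-reported confidence per answer, each in [0, 1].
    correct: whether each corresponding answer was right.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have equal length")
    if any(not 0.0 <= c <= 1.0 for c in confidences):
        raise ValueError("confidences must lie in [0, 1]")
    n = len(confidences)
    if n == 0:
        return 0.0

    # Group answers into equal-width confidence bins; 1.0 goes in the last bin.
    bins: list[list[tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        acc = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(avg_conf - acc)
    return ece
```

For instance, a model that reports 90% confidence on four answers but gets only one right has a gap of 0.9 - 0.25 = 0.65 in that bin, which is the kind of overconfidence the paragraph above describes.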

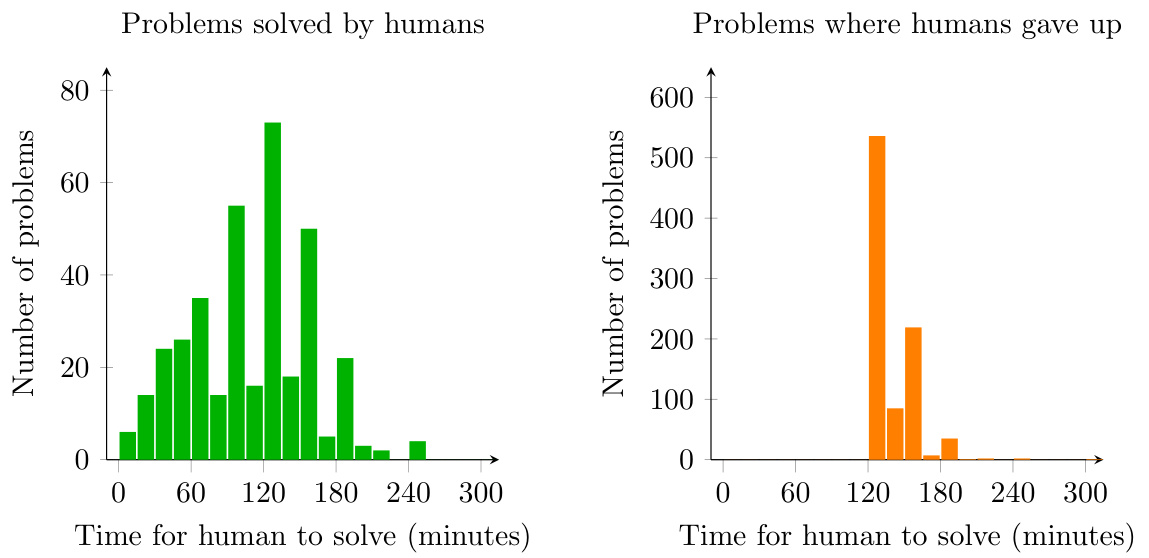

Figure 3: Histogram showing how long it takes a human to solve a BrowseComp problem or give up. Trainers were only allowed to give up after attempting to solve the problem for at least two hours.

2. Impact of computing resources

The test results show that model performance improves steadily as test-time compute increases. This suggests that BrowseComp questions demand a large amount of searching and reasoning, and that additional compute can significantly improve a model's performance.

Figure 4: BrowseComp performance of Deep Research when using parallel sampling and confidence-based voting. The additional compute further improves model performance, with Best-of-N performing best.

3. Aggregation strategies

Running multiple attempts and combining them with an aggregation strategy (e.g., majority voting, weighted voting, or best-of-N) improves performance by a further 15% to 25%. Best-of-N performs best, suggesting that the Deep Research model is often able to identify which of its answers is correct.
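The three aggregation strategies can be sketched as follows. This is a minimal illustration, assuming each attempt yields an answer string plus a model-reported confidence in [0, 1], with best-of-N (the "best choice" strategy) taken to mean picking the single most confident attempt; the `Sample` type and function names are hypothetical, and the exact weighting used in the original evaluation is not given in this article.

```python
from collections import Counter, defaultdict

# Hypothetical representation of one attempt: (answer, confidence in [0, 1]).
Sample = tuple[str, float]


def _require_samples(samples: list[Sample]) -> None:
    if not samples:
        raise ValueError("at least one sample is required")


def majority_vote(samples: list[Sample]) -> str:
    """Return the most frequent answer, ignoring confidence."""
    _require_samples(samples)
    counts = Counter(answer for answer, _ in samples)
    return counts.most_common(1)[0][0]


def weighted_vote(samples: list[Sample]) -> str:
    """Return the answer with the largest summed confidence."""
    _require_samples(samples)
    totals: defaultdict[str, float] = defaultdict(float)
    for answer, confidence in samples:
        totals[answer] += confidence
    return max(totals, key=lambda answer: totals[answer])


def best_of_n(samples: list[Sample]) -> str:
    """Return the answer from the single most confident attempt."""
    _require_samples(samples)
    return max(samples, key=lambda sample: sample[1])[0]


attempts = [("A", 0.2), ("A", 0.2), ("A", 0.2), ("B", 0.5), ("B", 0.5), ("C", 0.9)]
print(majority_vote(attempts), weighted_vote(attempts), best_of_n(attempts))
```

The sample data is chosen so the strategies disagree: "A" is most frequent, "B" has the largest total confidence, and "C" comes from the single most confident attempt. Best-of-N only helps when high confidence tends to coincide with correct answers.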

Conclusion

The release of BrowseComp adds a new dimension to the evaluation of AI agents. It tests not only a model's information retrieval ability but also its persistence and creativity on complex problems. Existing models still have considerable room for improvement on BrowseComp, and the benchmark gives researchers a concrete target for progress on browsing agents.

As more models are evaluated and the technology advances, we can expect the performance of AI agents on BrowseComp to keep improving, ultimately leading to more reliable and trustworthy AI agents.

© Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
