Boosting of Thoughts (BoT): Trial-and-Error Problem Solving with Large Language Models

Abstract

The reasoning performance of Large Language Models (LLMs) on a wide range of problems relies heavily on chain-of-thought prompting, which provides a few chain-of-thought demonstrations as exemplars in the prompt. Recent work, e.g., Tree of Thoughts, has pointed out the importance of exploration and self-evaluation in selecting reasoning steps for complex problem solving. In this paper, we present Boosting of Thoughts (BoT), an automated prompting framework that iteratively explores and self-evaluates many trees of thoughts to acquire an ensemble of trial-and-error reasoning experiences, which serve as a new form of prompting for solving complex problems. Starting from a simple prompt that requires no exemplars, BoT iteratively explores and evaluates a large collection of reasoning steps and, more importantly, uses the LLM's error analysis of those steps to explicitly revise the prompt, which in turn improves reasoning-step generation until a final answer is reached. Our experiments with GPT-4 and Llama2 on a wide range of complex mathematical problems show that BoT consistently achieves higher or comparable problem-solving rates than other state-of-the-art prompting approaches. The source code is available in the examples/BoTReasoning folder of https://github.com/iQua/llmpebase.

1. Introduction

Large Language Models (LLMs) with the autoregressive paradigm have achieved significant performance on a variety of tasks owing to their potential reasoning ability. Securing this ability on complex tasks relies heavily on Chain-of-Thought (CoT) prompting, which provides step-by-step reasoning examples. This approach suggests that reasoning ability can be elicited through a series of thoughts, where each thought serves as an intermediate step toward solving the problem.

Consequently, follow-up studies, especially Tree of Thoughts (ToT), have proposed ways to improve CoT. To guarantee effectiveness, the prompts of these approaches generally include human annotations for specific tasks. This reliance limits their scalability. Recent work that either uses LLMs to double-check and improve answers or refines prompts based on feedback has shown significant promise. Existing literature generally favors discarding invalid thoughts from the prompt. However, humans can typically learn continuously by carefully analyzing their errors, thereby gaining experience and gradually improving their performance. We therefore ask: can the thought generation of LLMs be freed from human annotations and mimic human problem solving to reason effectively across a variety of tasks?

In this paper, we propose a novel framework, shown in Fig. 1, called Boosting of Thoughts (BoT), which implements a boosting mechanism, comprising aggregation and experience, to progressively refine unreliable reasoning steps (weak thoughts) by learning from errors and eventually solve various problems. Starting from a simple prompt without human annotations, BoT may obtain weak thoughts. Through aggregation, BoT derives from them a more logical and effective thought chain that guides subsequent refinement. This guidance is achieved by adjusting the prompt with experience, i.e., detailed error reports, suggestions, and guidance for each reasoning step, obtained by having the LLM analyze the aggregated chain. As such experience accumulates in the prompt, it gradually leads to stronger thoughts.

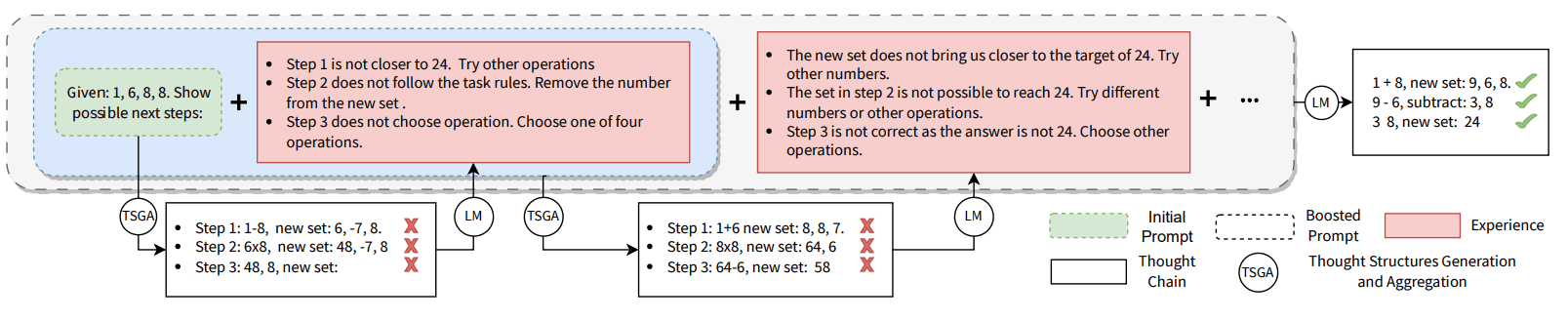

Figure 1: Boosting the prompt by progressively augmenting it with experience, which consists of a large language model's (LLM or LM) analysis of the generated thought chains. The experience explicitly contains the thought chain itself, the corresponding error reports, and detailed suggestions for revising each reasoning step. Thus, even invalid thoughts, marked with a red cross, can contribute to refining the prompt. By accumulating experience in the prompt, BoT eventually generates correct thought chains starting from a simple prompt. The example shown here is obtained by applying GPT-4 with BoT to the Game of 24 task.

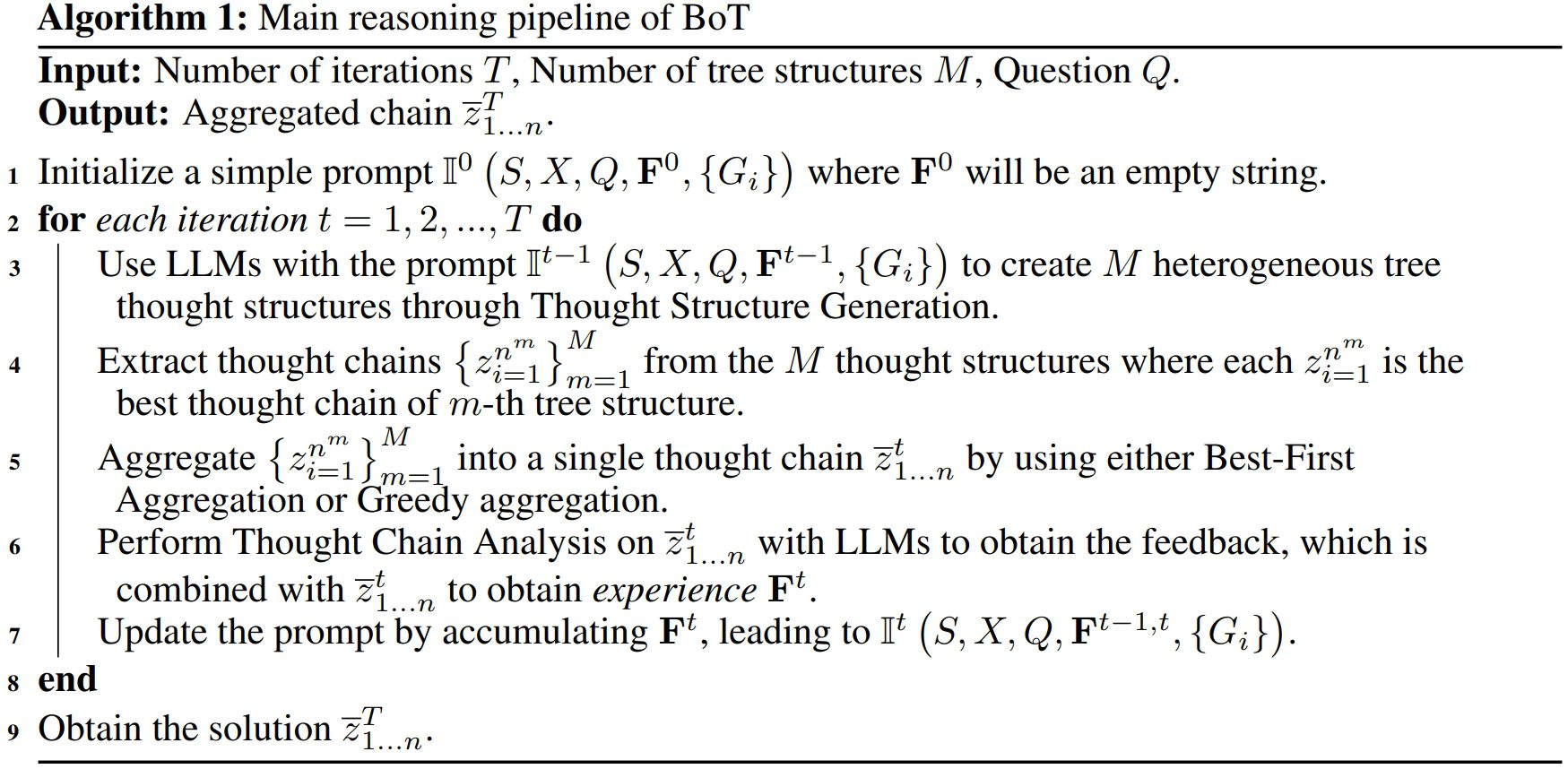

Specifically, BoT implements this boosting mechanism as an experience-driven iterative process, as shown in Figure 1. In each iteration, given a prompt, BoT uses the LLM to build a large number of simple thought structures in parallel. We choose the tree structure, as in ToT, but significantly modify it for our boosting purpose, making it a weighted binary tree with various growth strategies. After extracting the highest-scoring root-to-leaf branch of each tree, BoT's aggregation component aggregates them into a single thought chain. The same LLM then evaluates this chain to derive experience, which is added to the prompt as guidance for thought generation in the next iteration.

Our contributions can be summarized in three ways. First, instead of generating more complex thought structures with elaborate prompts, this paper shows that, relying only on a simple initial prompt, weak thoughts can be progressively refined based on previous experience to solve problems. Second, to realize this boosting mechanism, we propose Boosting of Thoughts (BoT), a novel framework that performs an experience-driven iterative process. Because it starts from a simple prompt, BoT can be scaled to a variety of tasks. While guaranteeing effectiveness, BoT is fast because it builds simple thought structures in parallel and converges to a solution after a few iterations. Finally, we evaluate BoT on complex mathematical problems using GPT-4 (OpenAI, 2023) and Llama2 (Touvron et al., 2023). The problem-solving rates show that BoT, which employs binary-tree thought structures, significantly outperforms the current state of the art on GSM8K and AQuA while achieving the second-best results on the other datasets. In particular, BoT outperforms the leading approach, ToT, by 9.7% on the new and challenging Game of 24 task. BoT thus demonstrates that, by boosting the prompt with accumulated error analyses of ineffective thought chains and the corresponding suggestions, LLMs can maintain high performance across a wide range of tasks even without human annotations.

2. Related work

Multi-step reasoning. Prominent work on Chain-of-Thought (CoT) prompting suggests that the step-by-step reasoning behavior of LLMs can be elicited by providing intermediate reasoning steps for each question in the prompt. Recent work, Tree of Thoughts (ToT), converts the sequential reasoning process into a tree structure in which each thought (node) may consider previous reasoning paths to generate multiple next thoughts. With backtracking and expanded exploration during reasoning, ToT performs well on problems that challenge even GPT-4. Given its strong capability, the underlying thought structure of BoT largely adopts the tree structure of ToT. However, owing to the boosting framework, the tree that BoT generates in each iteration is binary and shallow, rather than ToT's complex tree in which each node has a large number of child nodes. Moreover, the underlying structure is not limited to ToT: BoT is flexible, since its underlying thought structure can be ToT, GoT (Besta et al., 2023), or CR (Zhang et al., 2023b), where Graph of Thoughts (GoT) is the most recent work that extends the thought structure to a graph format. In this paper, we focus only on ToT as the underlying thought structure and leave the use of GoT to future work.

Automatic prompting. Freeing humans from writing task-specific prompts has attracted much attention. To ensure the reasoning ability of LLMs, conventional CoT relies on human prior knowledge to manually create task-specific demonstrations as prompts. However, Zero-shot CoT showed that, even without hand-crafted exemplars, LLMs can reason step by step to reach accurate answers simply by adding "Let's think step by step" to the prompt. This insight spawned a series of follow-up studies. Auto-CoT eliminates manual effort by retrieving usable reasoning chains generated by Zero-shot CoT. Active-Prompt first measures the uncertainty of a set of questions and then selects only the uncertain ones to be annotated by humans. ToT also reduces manual effort, but for each task it still requires experts to provide possible next thoughts in the prompt. Our paper introduces a novel boosting approach that requires no manually written prompt exemplars. Starting from a simple prompt, BoT iteratively enhances it based on the LLM's analysis of its thoughts.

Prompt engineering via feedback. Much attention has been paid to using LLMs' responses to an input prompt as feedback for further prompt revision. Approaches that continuously revise a given prompt based on the LLM's evaluative description of its output aim to obtain accurate answers. Sharing a high-level idea with our paper, SELF-REFINE proposes an iterative self-refinement algorithm in which the LLM generates feedback to further refine its output. PHP simplifies this process by directly adding previously generated answers as hints to subsequent prompts. REFINER, also related to our paper, evaluates each reasoning step as feedback to produce more reasonable steps. Another line of studies explores ensembles, specifically using the boosting mechanism (Freund et al., 1996) to refine prompts with feedback from a set of examples. These methods adjust the prompt by adding a few uncertain examples from the previous iteration, or rely on a feedback-reflect-refine process. APO (Pryzant et al., 2023) iteratively refines prompts, using the performance of the previous prompt to form an optimized natural-language prompt. These works demonstrate the effectiveness of boosting mechanisms in prompt engineering. However, our work is the first to highlight the importance of error analysis in boosting prompts to generate effective reasoning chains. The proposed BoT extends this insight into an automated prompting framework that iteratively accumulates an ensemble of trial-and-error reasoning experiences.

3. Boosting of Thoughts

3.1 Background

The goal of prompt engineering is to design a prompt I, containing multiple language sequences, such that when this prompt is given as input, a pre-trained LLM $p_\theta$, parameterized by $\theta$, produces the desired language sequence y. Standard input-output (IO) prompting can thus be expressed as $y \sim p_\theta(y \mid I(X, Q))$, where $I(\cdot)$ denotes the prompt that wraps the task instruction X and the corresponding question Q.

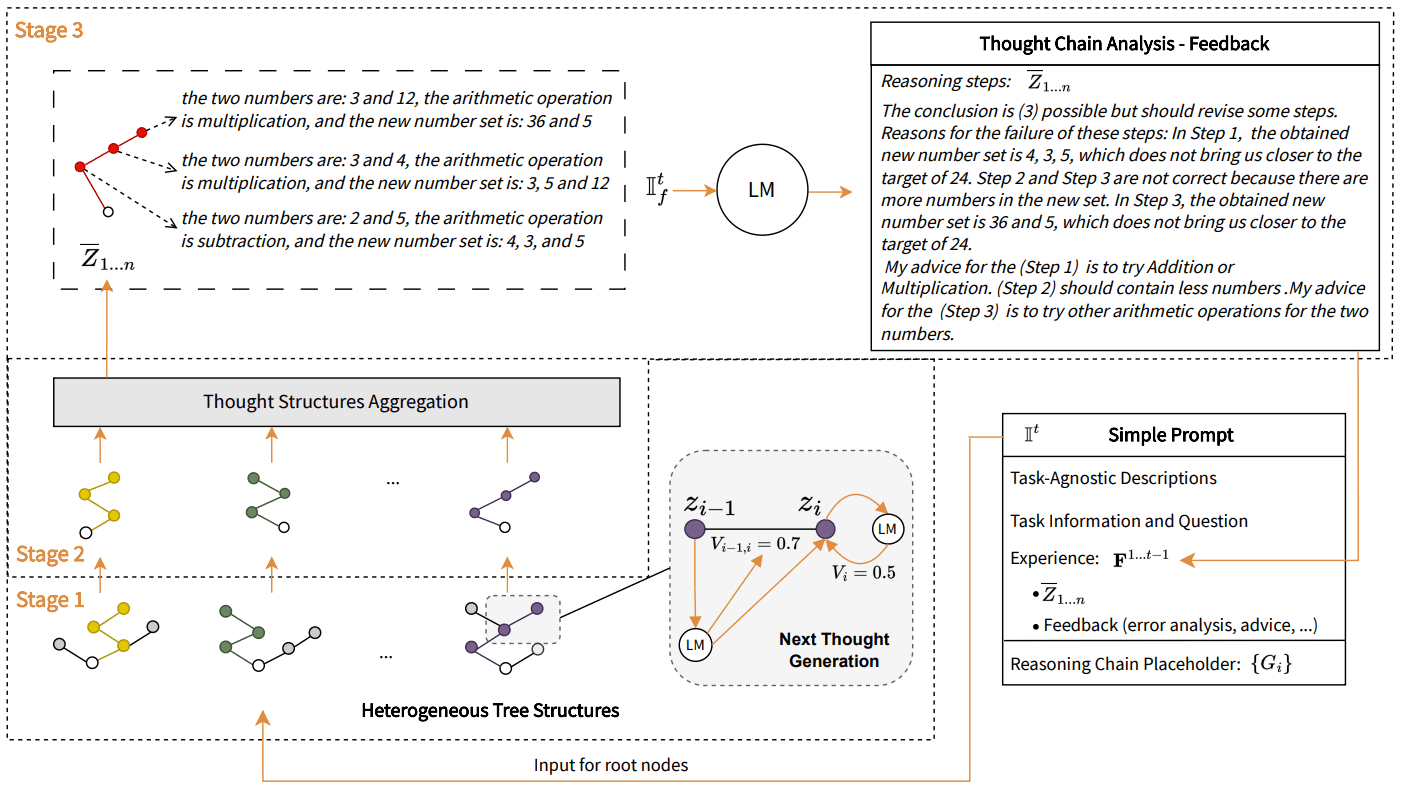

[Figure 2 panels: Thought Structure Aggregation; Next Thought Generation (tree nodes $z_i$ with weights $V_i$); Thought Chain Analysis (feedback per step, e.g., "R1: -3, try +"); Simple Prompt (task-unspecific description, task information and question, experience, and the previous thought chain $G_i$).]

Figure 2: Overview of the BoT process in each iteration. To show how effectiveness is improved through this experience-driven iterative process, we present the detailed intermediate results of one experiment with GPT-4 on the Game of 24 dataset. Given the question Q: "The four numbers provided are: 2, 4, 5, 5", BoT proceeds through three successive stages. With the simple prompt $I^t$ as input, Thought Structure Generation (Stage 1) outputs a diverse set of heterogeneous tree-shaped thought structures. In Thought Structure Aggregation (Stage 2), they are aggregated into a thought chain $z_{1 \dots n}$, which is then analyzed in Stage 3 to produce experience that further boosts the prompt.

The prompt can be designed more elaborately to guide the LLM to solve the problem step by step. Each intermediate reasoning step is denoted $z_i$ (also called a thought). CoT provides a few exemplars, each of whose answers contains a thought chain $z_{1 \dots n}$. This leads to $y \sim p_\theta(y \mid I([z_{1 \dots n}]_N, X, Q))$, where N is the number of exemplars included in the prompt.

Instead of preparing exemplars in the prompt, a more flexible approach is to design the prompt to guide the LLM to generate thoughts $z_i$ incrementally during reasoning. This can be formalized as $z_i \sim p_\theta(z_i \mid I(z_{1 \dots i-1}, X, Q))$. The final solution is then $y \sim p_\theta(y \mid I(z_{1 \dots n}, X, Q))$.

Representatively, ToT (Yao et al., 2024) further extends this sequential reasoning into a tree structure in which C next thoughts can be generated from each thought. Thus, a thought structure can be either a chain or a tree.

3.2 Framework

Existing literature that aims to produce prompts with correct CoT exemplars or to design fine-grained thought generation structures suffers from three limitations. First, invalid thoughts are generally ignored or discarded in these approaches. However, humans, especially non-experts in a given domain, rely on analyzing previous errors to accumulate experience so that they perform correctly in the next attempt. Second, these approaches are less scalable because, for each task, exemplars for generating the next thought, e.g., $I(z_1 \mid z_0, X, Q)$, must be provided in the prompt. Finally, generating thought structures (e.g., the tree structure of Yao et al. (2024)) is too complex to explore more reasoning steps in pursuit of a better solution. This is mainly because the solutions obtained may not be revised further.

In this paper, we argue that a prompt can be boosted by continuously collecting the LLM's analyses of invalid thoughts (faulty reasoning steps in a thought chain). Thus, even a simple prompt such as $I(X, Q)$, which may lead to invalid thoughts, can be progressively refined through such analyses to obtain strong thoughts that move closer to a solution.

We propose an automated prompting framework called Boosting of Thoughts (BoT), which boosts the prompt through an experience-driven iterative process starting from a simple prompt. As summarized in Fig. 2, each iteration t of BoT consists of three stages. The thought structure generation stage fully explores the reasoning chains that the LLM generates with $I^t$ as the input prompt. In the second stage, these thought structures are aggregated into a single reasoning chain, which the LLM analyzes in the third stage to produce feedback containing error reports and detailed revision suggestions. The aggregated reasoning chain combined with this feedback forms a new experience, denoted $F^t$. By accumulating these experiences $F^{1, \dots, t}$ over iterations, the prompt is boosted.

Simple prompt. For any task, at iteration t = 0, we create a simple initial prompt $I^0 \equiv \{S, X, Q, F^0, \{G_i\}\}$, where S denotes the task-unspecific description, and X and Q denote the task information and the question, respectively. The experience part of the prompt is denoted $F^0$ and is empty at the beginning. $\{G_i\}$ is a placeholder that is filled in when constructing thought structures: when generating the next thought $z_i$, $\{G_i\}$ is replaced with the preceding thought chain $z_{1 \dots i-1}$.
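A minimal sketch of how $I^0$ and its $\{G_i\}$ placeholder might be assembled, assuming a plain-text template. The section labels in `TEMPLATE` and the helpers `build_prompt` and `fill_chain` are hypothetical illustrations, not the prompts actually used by BoT.

```python
from typing import List

# Hypothetical plain-text layout of I = {S, X, Q, F, {G_i}}; labels are illustrative.
TEMPLATE = (
    "{S}\n\n"
    "Task information: {X}\n"
    "Question: {Q}\n\n"
    "Experience:\n{F}\n\n"
    "Previous reasoning steps:\n{G}\n"
)
PLACEHOLDER = "{G}"


def build_prompt(S: str, X: str, Q: str, experience: List[str]) -> str:
    """Fill S, X, Q and the experience F, leaving the {G} placeholder in place."""
    F = "\n".join(experience) if experience else "(none yet)"
    # Passing G=PLACEHOLDER keeps the literal text "{G}" in the result.
    return TEMPLATE.format(S=S, X=X, Q=Q, F=F, G=PLACEHOLDER)


def fill_chain(prompt: str, previous_thoughts: List[str]) -> str:
    """Replace {G} with the preceding thought chain z_1..z_{i-1}."""
    chain = "\n".join(previous_thoughts) if previous_thoughts else "(start)"
    return prompt.replace(PLACEHOLDER, chain)


# I^0: the experience F^0 is empty at the beginning.
I0 = build_prompt(
    S="Play a number game.",
    X="Use the numbers 2, 4, 5, 5 once each with +, -, *, / to obtain 24.",
    Q="The four numbers provided are: 2, 4, 5, 5",
    experience=[],
)
prompt_for_z2 = fill_chain(I0, ["Step 1: 5 + 5 = 10, new set: [2, 4, 10]"])
```

Keeping the placeholder as literal text lets the same $I^t$ be reused for every node of every tree, with only the preceding chain substituted per call.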

Thought structure generation. After collecting the experience $F^{t-1}$, the prompt for iteration t is $I^t \equiv \{S, X, Q, F^{1, \dots, t-1}, \{G_i\}\}$. Based on this prompt, BoT generates M thought structures in parallel. BoT can in principle incorporate any thought structure, e.g., the chain structure of Wei et al. or the tree structure of Yao et al. Considering the exploration of reasoning steps and the experimental results, we investigate tree-shaped thought structures. However, BoT introduces two novel modifications that make them better suited to the boosting framework.

- Weighted binary tree. With a simple prompt in each iteration, BoT constructs weak thoughts with simple structures and low complexity, because these can be further revised by the boosting mechanism. Thus, each thought structure in BoT is a shallow weighted binary tree. For simplicity, we keep the notation $z_{1 \dots i-1}$ for the thoughts from the root node to the parent of node i. In addition to assigning each node i a thought $z_i$ and its thought evaluation score $V_i \sim p_\theta(z_{1 \dots i}, I_a, X, Q)$, we also include an edge score $V_{i-1,i} \sim p_\theta(z_{i-1}, z_i, I_e, X, Q)$ between a child node and its parent, where $I_a$ and $I_e$ are the guiding descriptions for thought evaluation and edge evaluation, respectively. $V_{i-1,i}$ represents the LLM's confidence in generating this reasoning step. The next-thought generation of BoT in this tree structure is thus formalized as $p_\theta(z_i \mid (V_{i-1,i}, V_i, I^t, X, Q))$.

- Tree heterogeneity. Unlike ToT, which seeks a solution within one large, complex tree, BoT aims to build highly heterogeneous tree-shaped thought structures. To increase heterogeneity, thought structure generation uses different tree growth strategies, such as level-wise growth and leaf-wise growth. The former emphasizes exploration but exploits less (Chen & Guestrin, 2016), while the latter does the opposite (Ke et al., 2017). Thus, compared with level-wise growth, the leaf-wise strategy tends to continue reasoning from the current best thought toward a better final thought, but it also tends to produce monotonous reasoning chains. In addition, the LLM is applied with different temperature and top-p settings. Finally, we use a small maximum depth in BoT and mark a node as a leaf when its $V_{i-1,i}$ and $V_i$ values fall outside the range [0.3, 0.8].
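The two modifications above can be sketched as follows, assuming that each child's thought text and its two scores come from a caller-supplied `expand` function standing in for the LLM calls that produce $z_i$, $V_i$ and $V_{i-1,i}$. The `Node` class, the `max_nodes` budget, and the toy scorer are hypothetical, and we read the leaf rule as "either score outside [0.3, 0.8]", which is only one possible interpretation. Level-wise growth expands nodes in breadth-first order, whereas leaf-wise growth always expands the frontier node with the highest $V_i + V_{i-1,i}$.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterator, List, Optional, Tuple

LOW, HIGH = 0.3, 0.8  # assumed leaf rule: a score outside [LOW, HIGH] stops growth


@dataclass
class Node:
    thought: str    # z_i
    v_node: float   # V_i, LLM evaluation of z_1..z_i
    v_edge: float   # V_{i-1,i}, LLM confidence in this step
    depth: int = 0
    parent: Optional["Node"] = field(default=None, repr=False, compare=False)
    children: List["Node"] = field(default_factory=list)


# expand(node) returns candidate children as (thought, V_i, V_{i-1,i}) tuples.
Expand = Callable[[Node], List[Tuple[str, float, float]]]


def is_leaf(node: Node, max_depth: int) -> bool:
    in_range = LOW <= node.v_node <= HIGH and LOW <= node.v_edge <= HIGH
    return node.depth >= max_depth or not in_range


def grow(root: Node, expand: Expand, max_depth: int, max_nodes: int, strategy: str) -> int:
    """Grow a weighted binary tree; strategy is "level" or "leaf". Returns node count."""
    frontier: List[Node] = [root]
    n_nodes = 1
    while frontier and n_nodes < max_nodes:
        if strategy == "leaf":
            # Leaf-wise: expand the best-scoring frontier node (more exploitation).
            k = max(range(len(frontier)),
                    key=lambda idx: frontier[idx].v_node + frontier[idx].v_edge)
            node = frontier.pop(k)
        else:
            # Level-wise: expand nodes in breadth-first order (more exploration).
            node = frontier.pop(0)
        if is_leaf(node, max_depth):
            continue
        for thought, v_node, v_edge in expand(node)[:2]:  # binary: at most 2 children
            if n_nodes >= max_nodes:
                break
            child = Node(thought, v_node, v_edge, node.depth + 1, parent=node)
            node.children.append(child)
            frontier.append(child)
            n_nodes += 1
    return n_nodes


def iter_nodes(node: Node) -> Iterator[Node]:
    yield node
    for child in node.children:
        yield from iter_nodes(child)


def max_tree_depth(node: Node) -> int:
    return max(n.depth for n in iter_nodes(node))


def toy_expand(node: Node) -> List[Tuple[str, float, float]]:
    # Deterministic stand-in for LLM generation and evaluation.
    return [(f"{node.thought}.{k}", 0.4 + 0.1 * k + 0.05 * node.depth, 0.6) for k in (1, 2)]


level_root = Node("root", 0.5, 0.5)
leaf_root = Node("root", 0.5, 0.5)
grow(level_root, toy_expand, max_depth=3, max_nodes=7, strategy="level")
grow(leaf_root, toy_expand, max_depth=3, max_nodes=7, strategy="leaf")
```

With a budget of 7 nodes and a maximum depth of 3, this toy run grows a tree of depth 2 level-wise but reaches depth 3 leaf-wise, which illustrates the exploration/exploitation trade-off described above.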

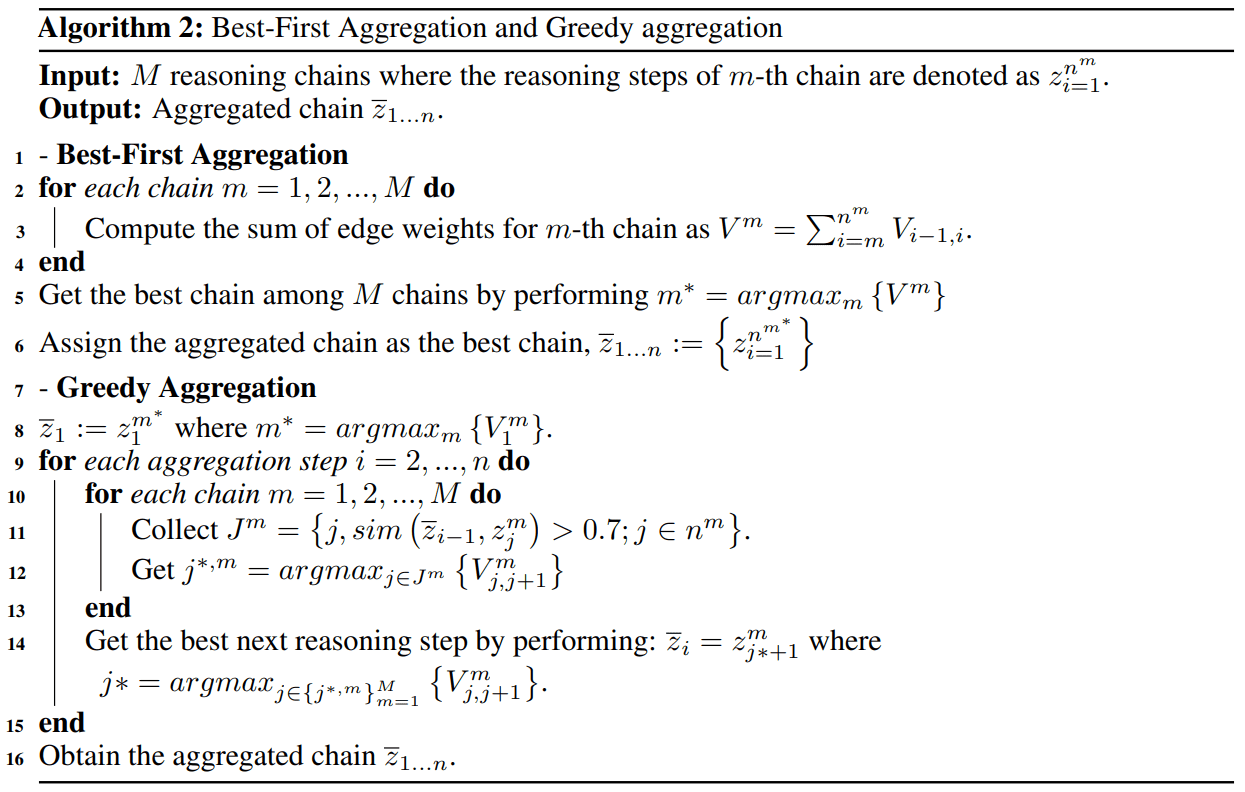

Thought structure aggregation. After obtaining M thought structures, BoT aggregates them into one thought chain, denoted $z_{1 \dots n}$. To do so, for each thought structure index m, BoT first selects the chain with the highest evaluation score, i.e., $z^m_{1 \dots n_m} := \arg\max_{z_{1 \dots n} \in Z^m} \sum_{i=1}^{n} (V_i + V_{i-1,i})$, where $Z^m$ denotes the set of all thought chains of the m-th tree. Two strategies then exist to obtain $z_{1 \dots n}$.
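The per-tree selection rule can be written directly, assuming each chain is stored as a list of (thought, $V_i$, $V_{i-1,i}$) tuples; this representation and the function names are hypothetical. Because the scores are summed rather than averaged, longer chains tend to accumulate higher totals.

```python
from typing import List, Tuple

Step = Tuple[str, float, float]  # (z_i, V_i, V_{i-1,i}); assumed representation
Chain = List[Step]


def chain_score(chain: Chain) -> float:
    """Sum over i of V_i + V_{i-1,i}."""
    return sum(v_node + v_edge for _, v_node, v_edge in chain)


def best_chain_of_tree(chains_of_tree: List[Chain]) -> Chain:
    """z^m_{1..n_m}: the highest-scoring root-to-leaf chain in Z^m."""
    return max(chains_of_tree, key=chain_score)


Z_m = [
    [("5 + 5 = 10", 0.6, 0.7), ("10 * 2 = 20", 0.5, 0.6)],  # score 2.4
    [("5 * 5 = 25", 0.7, 0.7)],                             # score 1.4
]
best = best_chain_of_tree(Z_m)
```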

- Best-first aggregation. BoT relies on $\arg\max_{z_{1 \dots n} \in \{Z^m\}_{m=1}^{M}} \sum_{i=1}^{n} (V_i + V_{i-1,i})$ to select the best chain among the M thought structures as $z_{1 \dots n}$. This algorithm is fast but may yield an illogical chain that can hardly guide subsequent refinement.

- Greedy aggregation. BoT is allowed to perform a greedy search on $\{Z^m\}_{m=1}^{M}$ to assemble a new thought chain that may not exist in any tree but may be globally optimal. Starting from the initial thought, generally the root node of a tree, BoT obtains $z_1 = \arg\max_{z_j \in \{z^m_1\}_{m=1}^{M}} (V_j + V_{j-1,j})$. Then, to obtain $z_i$, BoT searches all thoughts in $\{Z^m\}_{m=1}^{M}$ whose previous step is $z_{i-1}$.
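Both strategies are sketched below under stated assumptions: chains use the same hypothetical (thought, $V_i$, $V_{i-1,i}$) representation, thoughts from different trees are matched by identical text, and at each later step the greedy search picks the highest-scoring candidate, mirroring the rule for $z_1$ (the text above does not spell out this selection). The `max_len` cap is our addition to guard against cycles.

```python
from typing import List, Tuple

Step = Tuple[str, float, float]  # (z_i, V_i, V_{i-1,i}); assumed representation
Chain = List[Step]


def step_score(step: Step) -> float:
    return step[1] + step[2]  # V_i + V_{i-1,i}


def best_first(chains: List[Chain]) -> Chain:
    """Select the single highest-scoring chain among all trees."""
    return max(chains, key=lambda c: sum(step_score(s) for s in c))


def greedy(chains: List[Chain], max_len: int = 10) -> Chain:
    """Assemble a chain step by step, possibly mixing steps from different trees."""
    firsts = [c[0] for c in chains if c]
    assembled = [max(firsts, key=step_score)]  # z_1
    while len(assembled) < max_len:
        prev = assembled[-1][0]
        # Candidates: every step whose predecessor has the same text as z_{i-1}.
        candidates = [c[j + 1] for c in chains
                      for j in range(len(c) - 1) if c[j][0] == prev]
        if not candidates:
            break
        assembled.append(max(candidates, key=step_score))
    return assembled


chain1 = [("a", 0.8, 0.8), ("b", 0.3, 0.3)]
chain2 = [("c", 0.6, 0.6), ("a", 0.7, 0.7), ("d", 0.9, 0.9)]
```

In this toy example, best-first aggregation returns chain2 (c, a, d), while greedy aggregation starts from the higher-scoring first step a and then follows d, yielding the chain a, d, which exists in neither input.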

Thought chain analysis. To gain insight into what should be adjusted so that the boosted prompt generates better thoughts, BoT uses the LLM's self-evaluation ability to assess $z_{1 \dots n}$. Specifically, with the prompt $I^t_f(z_{1 \dots n}, X, Q)$ as input, the LLM outputs a feedback paragraph about this thought chain $z_{1 \dots n}$ containing an issue report and detailed suggestions. This feedback is added to $F^{1, \dots, t-1}$ as new experience for thought generation, yielding $F^{1, \dots, t}$.

Iterative refinement. Through the boosting mechanism, $F^{1, \dots, t}$ is used to iteratively boost the prompt, yielding the prompt for the (t+1)-th iteration, $I^{t+1} \equiv \{S, X, Q, F^{1, \dots, t}, \{G_i\}\}$. As iterations proceed, $F^{1, \dots, t}$ may contain many typical, illogical thought chains as well as chains closer to the solution, all accompanied by well-defined analysis results. Thus, even when starting from a simple prompt, BoT iteratively refines it to produce correct reasoning steps that lead to an accurate solution. After T iterations, we use $I^{T+1}$ as the input prompt to the LLM to obtain the final answer.
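The overall experience-driven loop can be summarized in a short skeleton. The callables `generate_structures`, `aggregate`, and `analyze` are hypothetical stand-ins for the LLM-backed stages (thought structure generation, aggregation, and thought chain analysis), and the prompt layout in `make_prompt` is an assumption; the defaults T = 10 and M = 15 follow the experimental setup.

```python
from typing import Callable, List

Chain = List[str]        # a thought chain z_1..z_n (thought texts only)
Structure = List[Chain]  # a thought structure, viewed as its root-to-leaf chains


def make_prompt(S: str, X: str, Q: str, experience: List[str]) -> str:
    # I^t = {S, X, Q, F^{1..t-1}, {G_i}}; "{G}" is left for next-thought generation.
    F = "\n\n".join(experience) if experience else "(none yet)"
    return f"{S}\nTask: {X}\nQuestion: {Q}\nExperience:\n{F}\nReasoning so far:\n{{G}}"


def boosting_of_thoughts(
    S: str, X: str, Q: str,
    generate_structures: Callable[[str, int], List[Structure]],
    aggregate: Callable[[List[Structure]], Chain],
    analyze: Callable[[Chain, str, str], str],
    T: int = 10,
    M: int = 15,
) -> str:
    """Run T boosting iterations and return the final prompt I^{T+1}."""
    experience: List[str] = []  # F^0 is empty
    for t in range(1, T + 1):
        prompt = make_prompt(S, X, Q, experience)     # I^t
        structures = generate_structures(prompt, M)  # stage 1: M structures
        chain = aggregate(structures)                # stage 2: z_1..z_n
        feedback = analyze(chain, X, Q)              # stage 3: issues + suggestions
        # F^t combines the aggregated chain with its feedback.
        experience.append(f"Iteration {t} chain:\n" + "\n".join(chain)
                          + f"\nFeedback: {feedback}")
    return make_prompt(S, X, Q, experience)          # I^{T+1}
```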

4. Experiments

Datasets. Experiments are conducted on benchmark datasets covering a variety of mathematical problems, including MMLU, SVAMP, GSM8K, AQuA, and MATH. In addition, we include a challenging mathematical reasoning task, Game of 24, whose goal is to reach 24 with a single equation using four given numbers and the basic arithmetic operations (addition, subtraction, multiplication, and division). Because each step combines two numbers into one, a solution consists of 3 intermediate steps.
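To make the step structure concrete, the brute-force solver below (an illustration of the task, not part of BoT) mirrors the step format: each step picks two numbers from the current set, applies one operation, and puts the result back, so four numbers reduce to one after exactly three steps. Exact `Fraction` arithmetic avoids floating-point errors with division.

```python
from fractions import Fraction
from itertools import combinations
from typing import List, Optional


def solve24(numbers: List[int], target: int = 24) -> Optional[List[str]]:
    """Return one solution as a list of steps, or None if none exists."""

    def search(nums: List[Fraction], steps: List[str]) -> Optional[List[str]]:
        if len(nums) == 1:
            return steps if nums[0] == target else None
        for i, j in combinations(range(len(nums)), 2):
            a, b = nums[i], nums[j]
            rest = [nums[k] for k in range(len(nums)) if k not in (i, j)]
            sa, sb = str(a), str(b)
            options = [(a + b, f"{sa} + {sb}"), (a * b, f"{sa} * {sb}"),
                       (a - b, f"{sa} - {sb}"), (b - a, f"{sb} - {sa}")]
            if b != 0:
                options.append((a / b, f"{sa} / {sb}"))
            if a != 0:
                options.append((b / a, f"{sb} / {sa}"))
            for value, expr in options:
                # Put the new number back into the set and continue.
                found = search(rest + [value], steps + [f"{expr} = {str(value)}"])
                if found is not None:
                    return found
        return None

    return search([Fraction(n) for n in numbers], [])
```

For the numbers 2, 7, 8, 9 used in Table 3, one valid solution is (9 + 7) × 2 − 8 = 24; the solver returns whichever solution its search order finds first.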

Competitors. Besides the standard input-output (IO) baseline, the compared methods include Chain-of-Thought (CoT), CoT-SC, and Complex CoT, whose input prompts contain a few (8) exemplars with human annotations. BoT is also compared with related work such as Tree of Thoughts (ToT), Progressive-Hint Prompting (PHP), and the state-of-the-art CSV. We conduct experiments with GPT-4 and Llama2. GPT-4 is accessed through the OpenAI API, while the llama2-13b-chat model is downloaded from Meta AI to run experiments locally. To construct heterogeneous tree-shaped thought structures, BoT randomly selects the temperature from {0.2, 0.4, 0.6, 0.7, 0.9, 1.1, 1.5} and top-p from {0.1, 0.3, 0.5, 0.7, 0.9}.
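A minimal sketch of drawing per-tree decoding settings from the value sets above. Uniform sampling with Python's `random.Random`, the seed, and the function name `sample_decoding_configs` are assumptions made for illustration.

```python
import random
from typing import Dict, List

TEMPERATURES = [0.2, 0.4, 0.6, 0.7, 0.9, 1.1, 1.5]
TOP_PS = [0.1, 0.3, 0.5, 0.7, 0.9]


def sample_decoding_configs(M: int, seed: int = 0) -> List[Dict[str, float]]:
    """Draw one (temperature, top_p) pair per thought structure."""
    rng = random.Random(seed)  # seeded for reproducibility
    return [
        {"temperature": rng.choice(TEMPERATURES), "top_p": rng.choice(TOP_PS)}
        for _ in range(M)
    ]


configs = sample_decoding_configs(M=15)
```

Each dictionary could then supply the `temperature` and `top_p` sampling parameters of the requests used to grow one tree.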

Setup. Unless stated otherwise, in all experiments BoT performs T = 10 iterations and builds M = 15 thought structures, each a weighted binary tree, as this tends to yield the best results. For the benchmark datasets, the tree depth is set to 5, while for Game of 24 it is 3. BoT+CoT means that our simple prompt includes 5 CoT exemplars. In the ablation studies, when BoT has no accumulated experience, 8 CoT exemplars are provided in the prompt.

Metric. We report the results of all experiments as the task solve rate (%). To extract the target answers from BoT's output $z^T_{1 \dots n}$, we specify formatted answer descriptions for the LLM. For the common datasets, the answer format is "The answer is:". For Game of 24, we use "Step index, current set:, two numbers selected:, operation:, new number from operation:, remaining numbers:, new set of numbers:". We then compare the numbers in the new set of numbers directly with the ground truth. Following ToT (Yao et al., 2024), we use the solve rate over 100 hard games as the metric.
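The answer formats above lend themselves to simple pattern matching. The sketch below assumes the LLM writes the field labels verbatim and lists each new set on one line, optionally in square brackets; the regular expressions and helper names are hypothetical, not BoT's actual parser.

```python
import re
from typing import List, Optional

ANSWER_RE = re.compile(r"The answer is:[ \t]*(.+)")
NEW_SET_RE = re.compile(r"new set of numbers:[ \t]*\[?([-\d., \t]+)\]?", re.IGNORECASE)
NUMBER_RE = re.compile(r"-?\d+(?:\.\d+)?")


def extract_answer(output: str) -> Optional[str]:
    """Text after the last 'The answer is:' marker, or None."""
    matches = ANSWER_RE.findall(output)
    return matches[-1].strip() if matches else None


def final_number_set(output: str) -> Optional[List[float]]:
    """Numbers listed in the last 'new set of numbers:' field, or None."""
    matches = NEW_SET_RE.findall(output)
    if not matches:
        return None
    return [float(x) for x in NUMBER_RE.findall(matches[-1])]


def solves_24(output: str) -> bool:
    """A game counts as solved if the final set is exactly {24}."""
    numbers = final_number_set(output)
    return numbers is not None and len(numbers) == 1 and abs(numbers[0] - 24) < 1e-9


game_output = (
    "Step 1, current set: [2, 7, 8, 9], two numbers selected: 9, 7, operation: +, "
    "new number from operation: 16, remaining numbers: [2, 8], new set of numbers: [16, 2, 8]\n"
    "Step 2, current set: [16, 2, 8], two numbers selected: 16, 2, operation: *, "
    "new number from operation: 32, remaining numbers: [8], new set of numbers: [32, 8]\n"
    "Step 3, current set: [32, 8], two numbers selected: 32, 8, operation: -, "
    "new number from operation: 24, remaining numbers: [], new set of numbers: [24]"
)
```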

4.1 Main results

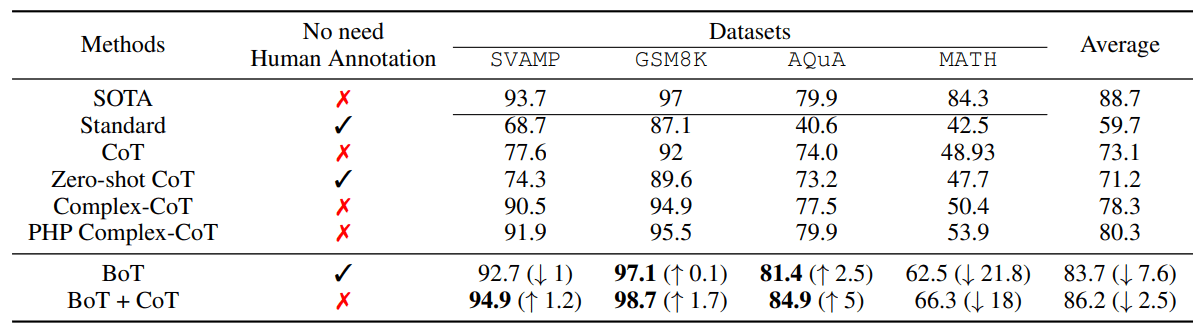

The main experimental results are summarized in Table 1 and Figure 3, which provide insight into the overall performance of BoT. Our findings show that, through the boosting mechanism, the proposed BoT 1) obtains competitive problem-solving rates on most datasets without relying on human annotations, and 2) achieves a new state of the art when CoT exemplars are provided. However, the experimental results also show that BoT relies heavily on experience and is therefore highly sensitive to the capability of the LLM.

Specifically, in Table 1, BoT starts from a simple initial prompt and eventually surpasses the current state-of-the-art CSV, which relies heavily on the GPT-4 Code Interpreter, by 0.1% in solve rate on GSM8K. On AQuA, BoT surpasses the SOTA by 2.5%. This shows that, by adding error analyses and suggestions to the prompt without relying on human annotations, LLMs can perform well on complex reasoning. The main reason is that a simple prompt can be progressively refined by accumulating previous experience until problems are solved accurately. After CoT exemplars are included in the prompt, BoT+CoT is 1.3% higher than the SOTA. We argue that CoT exemplars can be regarded as success cases in the experience that directly guide the generation of subsequent thought structures in BoT. Thus, through iterative refinement, BoT+CoT reaches a new SOTA. Meanwhile, BoT and BoT+CoT, especially BoT, are at least 18% lower than the SOTA on MATH. This observation implies that weak LLMs may not work well with BoT, owing to their limited ability to analyze reasoning chains for effective experience.

Table 1: Using BoT in conjunction with GPT-4 significantly improves performance even without manual labeling.

When BoT's simple initial prompt includes CoT exemplars, the corresponding BoT+CoT approach exhibits even higher solve rates. Our framework is also compared with leading approaches, namely Model Selection (Zhao et al., 2023), PHP (Zheng et al., 2023), and CSV (Zhou et al., 2023), which achieve state-of-the-art (SOTA) performance on SVAMP, AQuA, and GSM8K & MATH, respectively.

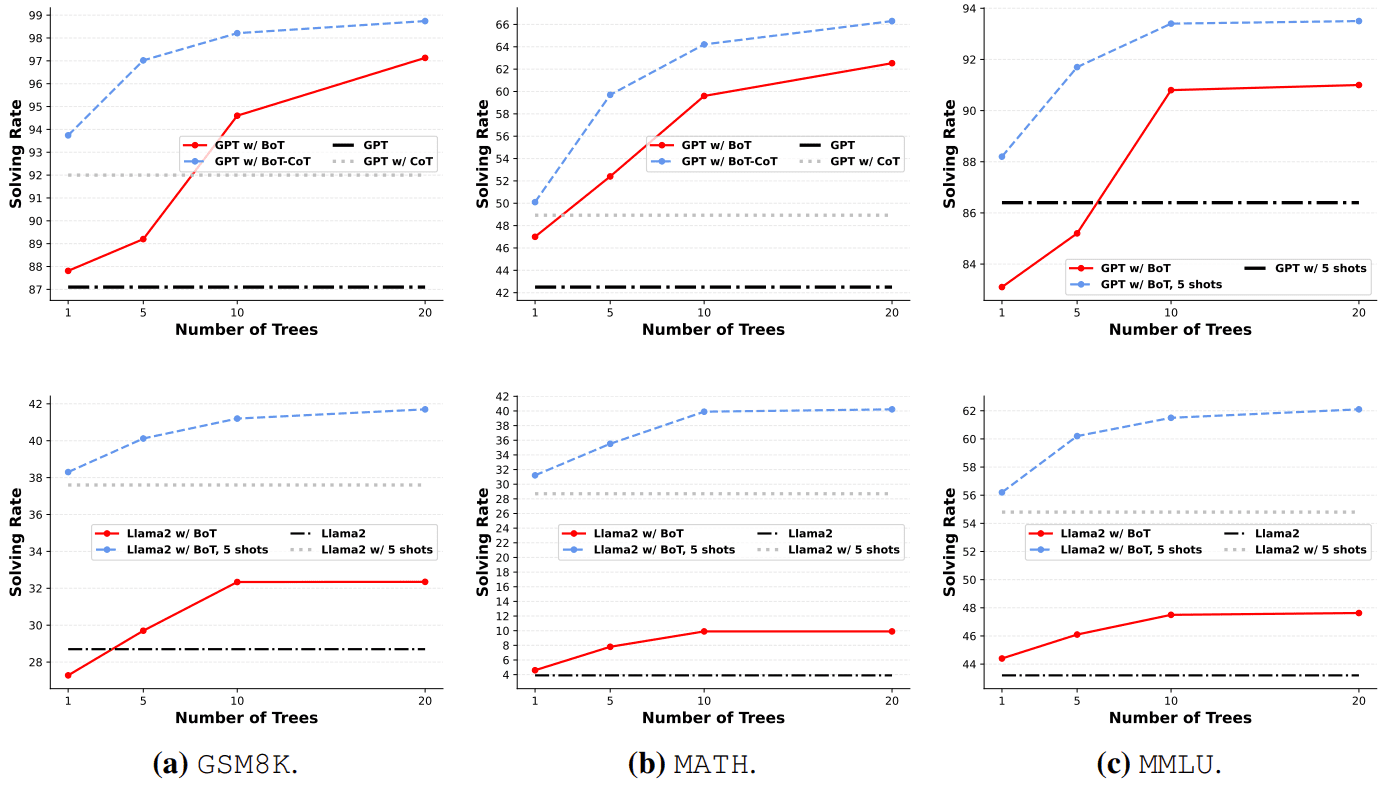

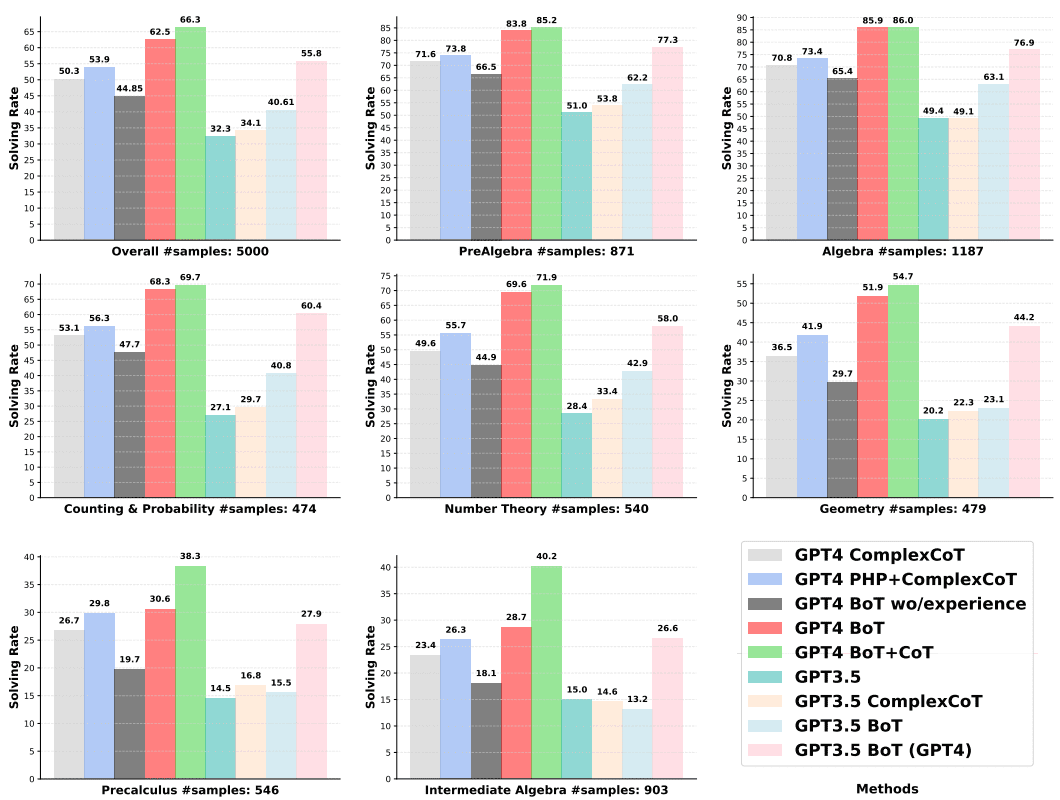

Figure 3: Problem-solving rates of GPT-4 (OpenAI, 2023) and Llama2 (Touvron et al., 2023) with BoT and BoT+CoT.

As shown in Fig. 3, under BoT, GPT-4 and Llama2 obtain average performance improvements of 11.6% and 4.4%, respectively, across the three datasets. The two panels show a clear trend: when the LLM is more powerful, BoT and BoT+CoT perform similarly. In addition, both show a similar upward trend as the number of trees increases from 1 to 20. Because Llama2 is weaker, BoT cannot benefit from its analyses to carry out the experience-driven iterative process, as shown particularly in Fig. 3(a). When provided with valid success cases, i.e., 5 shots, BoT can still help Llama2 solve more problems than the baseline through progressive refinement, albeit with limited gains.

4.2 Game of 24

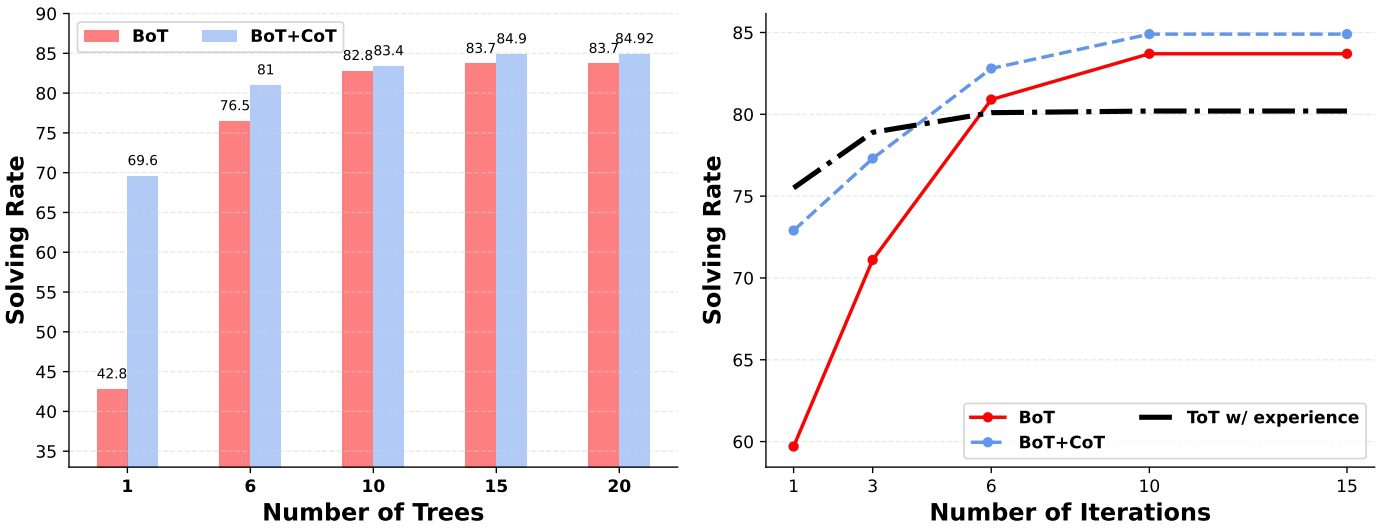

Figure 4: Comparison of the three methods under different numbers of trees and iterations.

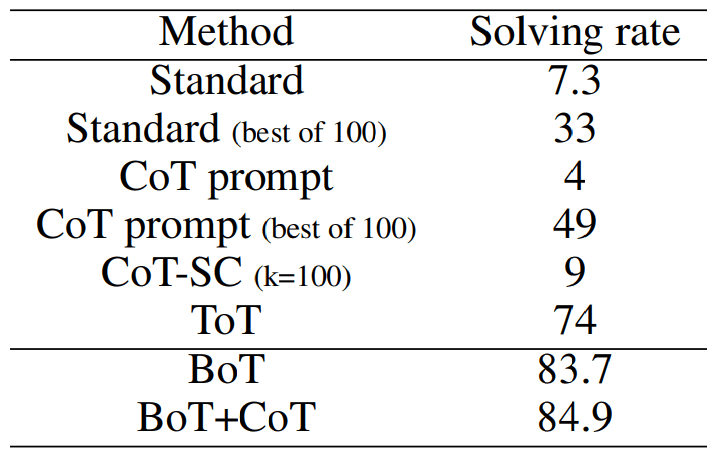

Table 2: Solve rates of different methods on Game of 24 under the setup of ToT (Yao et al., 2024), whose reported results are included here.

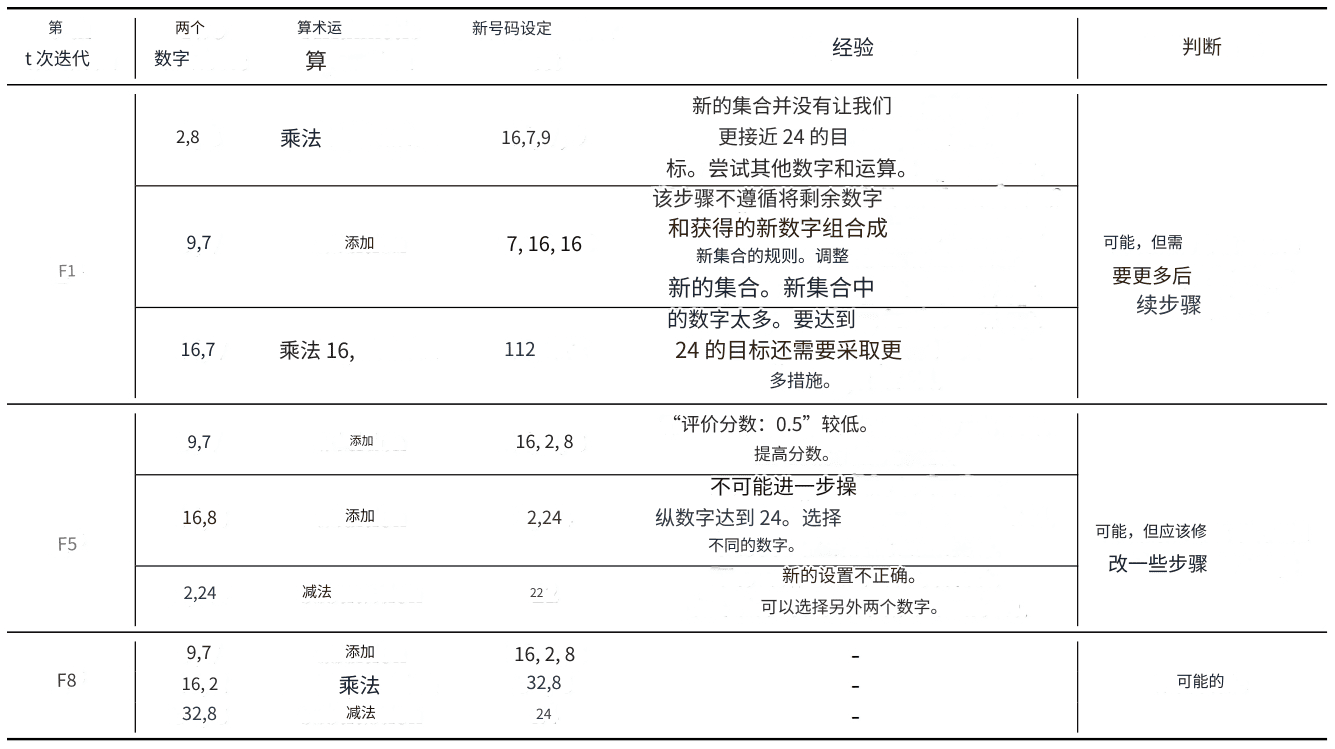

Table 3: The thought chains accumulated and the experience gained in the 1st, 5th, and 8th iterations. The four given numbers are 2, 7, 8, and 9.

Owing to the difficulty of Game of 24, both GPT-4 and Llama2 perform poorly on this task, even with CoT and CoT-SC. Llama2 cannot even follow the rules of the game correctly, making its solve rate even lower. In particular, when BoT, which relies on experience, is applied, all results of Llama2 remain below 5% without significant improvement. Therefore, we report the performance of BoT only with GPT-4. For a fair comparison, we follow the setup proposed by ToT (Yao et al., 2024).

As shown in Table 2, BoT without human annotations outperforms ToT, which relies on an exemplar showing all possible next steps, by 9.7%. Moreover, BoT+CoT, which contains 5 CoT shots, outperforms BoT by 1.2%. The close performance of BoT and BoT+CoT is attributed to the boosting mechanism, which progressively revises weak thoughts, as discussed in Section 4.1. Through the experience-driven iterative process, BoT performs better as the number of trees M and iterations T increase. As shown in Fig. 4, BoT depends more on M and T than BoT+CoT does, because it needs to gather experience from better thought chains or over more iterations. Another observation is that when ToT is allowed to work with experience-boosted prompts, its solve rate increases from 72.5% in the first iteration to 80.2% in the 10th iteration. This suggests that experience, i.e., the analysis of previous reasoning chains, can be used by LLMs to significantly improve the solve rate. However, the score obtained by ToT is still 3.5% lower than that of BoT. We attribute this to BoT's aggregation stage, which produces the most representative reasoning chain in the current iteration and thereby channels more meaningful experience into the prompt. We verify this in the ablation studies.

To better show how BoT learns from mistakes and previous suggestions, Table 3 shows that GPT-4 avoids previous mistakes and generates more specific suggestions as the number of iterations increases, eventually reaching the correct solution. In the first iteration, owing to the simple prompt, the LLM even makes a mistake in following the task rules, as the new set in step 3 is wrong. After analyzing it, the LLM gives a correct suggestion for this error. However, the analysis in the first iteration is vague, e.g., "try other numbers and operations". After five iterations, BoT has aggregated multiple such analyses, yielding a more effective prompt that leads the LLM to choose the correct numbers 9 and 7. In addition, the suggestions become more specific and useful; for this correct choice, the suggestion is to raise the evaluation score accordingly. By accumulating such experience, BoT gradually refines the prompt and finally generates the correct solution directly in the 8th iteration.

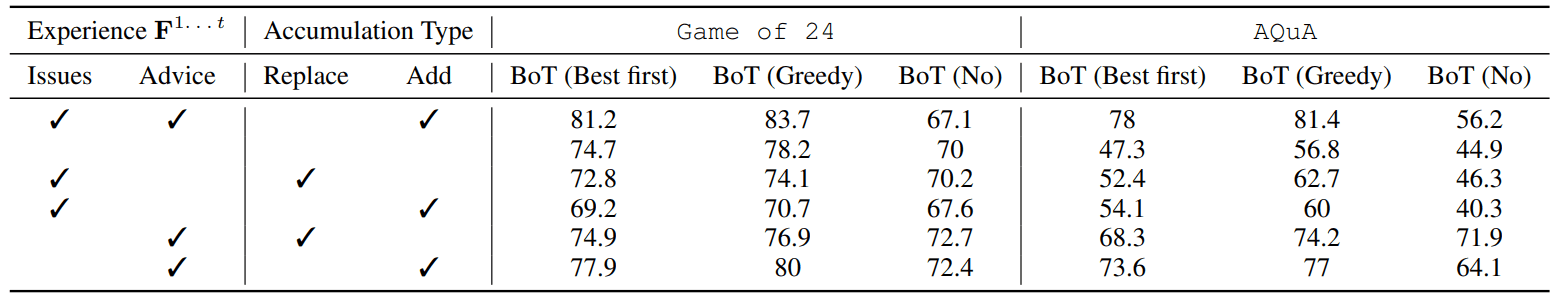

4.3 Ablation studies

Table 4: Comparison of GPT-4 with different BoT variants on Game of 24 and AQuA.

All aggregation strategies produce high solve rates when issues and suggestions are accumulated in the "additive" fashion. Maintaining the complete experience is particularly important for revising thoughts, especially on AQuA, which covers a wider range of mathematical reasoning problems. However, BoT (No), which directly uses all reasoning chains of the generated trees without aggregation, performs worst in all settings, especially when the experience type is "cumulative". BoT builds 15 trees per iteration, and putting all of them into the prompt may obscure the important information, not to mention that much of this information is not useful in the prompt. Such experience may be ineffective or even harmful.

Suggestions are more critical for triggering better thoughts than any other part of the experience. In all settings reported in Table 4, the BoT variants that treat suggestions as experience achieve the highest solving rates. For example, under the same "additive" setting, removing suggestions from the experience reduces performance by more than 10% on the Game of 24 and by more than 20% on AQuA. On the other hand, including problems in the experience serves as a complementary means of improving performance: BoT with suggestions achieves its best solving rates only when the suggestions are paired with problems. For example, on AQuA, the solving rate of BoT (greedy aggregation) increases by 4.4%.

For performance, greedy aggregation may be the necessary choice. Unlike the best-first approach, which selects one existing thought chain, or the approach that keeps all of them, greedy aggregation can adaptively merge the tree structures into a better thought chain that may not exist in the current iteration. The LLM can therefore analyze a stronger chain of thoughts and, as a result, generate important lessons for enhancing the prompt. On AQuA, which contains more math problems, this gain even reaches 10%. Furthermore, as discussed for Figure 4, although ToT with a similar experience-driven boosting mechanism reaches 80%, it still lags behind BoT. This may be because ToT cannot perform greedy aggregation within its single tree structure.

5. Conclusion

In this paper, we validate that a simple prompt can solve a complex task by gradually accumulating error analyses of the thoughts it generates. We propose a novel framework, Boosting of Thoughts (BoT), that realizes this gradual prompt augmentation through an experience-driven iterative process to generate effective chains of thoughts. Lightweight tree structures of thoughts, generated through iterative exploration and self-evaluation, allow a simple initial prompt to be incrementally augmented with a series of trial-and-error reasoning experiences that lead to an accurate solution. Our extensive experiments show that BoT achieves state-of-the-art performance on multiple benchmark datasets and outperforms other leading methods on the challenging mathematical reasoning task Game of 24.

A Basic prompts and reasoning process of BoT

A1 BoT prompts for the thought generation component

This section presents the basic prompts used for thought generation in the proposed Boosting of Thoughts (BoT). For details, see the example file in the source code: /examples/BoostingOfThought/BoT reasoner.py.

System prompt S.

You are an expert on mathematical problems. Perform step-by-step reasoning toward problem solving by first learning from an ensemble of trial-and-error reasoning experiences. Such trial-and-error reasoning experience specifically contains error reports and detailed advice on how to revise historical reasoning steps. Always recall these listed experiences before generating a new reasoning step, thereby avoiding making the same mistakes and reusing correct steps to generate better reasoning steps to solve the task.

Next thought generation prompt:

f"""{task_prompt}. \n First of all, Recall historical reasoning experience: \n\n {experiences} \n\n Please make one step of reasoning to generate only one next possible reasoning step. This next reasoning step is the subsequential step from the following ordered previous steps, accompanied by their evaluated scores (A higher score means the reasoning step is more likely to complete the task.): \n\t{chain_prompt}\n\n Based on listed previous reasoning steps (ignore them when the above space is empty), generate one single next possible step following the Task rule. (Emphasize: Please generate only one single next possible reasoning step of the given steps.)"""

Here, the task prompt contains the task description X and the question Q, and the experiences part holds the accumulated experience $F^{1,\dots,t}$. The chain prompt is the placeholder $\{G_i\}$: when the current thought $z_i$ is generated, it is replaced by the preceding thoughts $z_{1,\dots,i-1}$.
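As a minimal sketch of how the placeholders could be filled, assuming the previous thoughts are available as (thought, score) pairs, the code below renders $z_{1,\dots,i-1}$ into the chain prompt and substitutes it together with the task prompt and the experience. The placeholder names are written with underscores so they are valid Python identifiers, and the "Step k: ... (Evaluation score: ...)" line format and the helper names are hypothetical choices, not taken from the BoT source.

```python
NEXT_THOUGHT_TEMPLATE = (
    "{task_prompt}. \n First of all, Recall historical reasoning experience: \n\n"
    " {experiences} \n\n Please make one step of reasoning to generate only one next"
    " possible reasoning step. This next reasoning step is the subsequential step from"
    " the following ordered previous steps, accompanied by their evaluated scores (A"
    " higher score means the reasoning step is more likely to complete the task.): \n\t"
    "{chain_prompt}\n\n Based on listed previous reasoning steps (ignore them when the"
    " above space is empty), generate one single next possible step following the Task"
    " rule. (Emphasize: Please generate only one single next possible reasoning step of"
    " the given steps.)"
)


def format_chain(previous_steps):
    """Render z_1, ..., z_{i-1} with their scores; empty when generating z_1."""
    return "\n\t".join(
        f"Step {idx}: {thought} (Evaluation score: {score})"
        for idx, (thought, score) in enumerate(previous_steps, start=1)
    )


def build_next_thought_prompt(task_prompt, experiences, previous_steps):
    """Fill the next-thought prompt for reasoning step z_i (hypothetical helper)."""
    return NEXT_THOUGHT_TEMPLATE.format(
        task_prompt=task_prompt,
        experiences=experiences,
        chain_prompt=format_chain(previous_steps),
    )
```

For the first step, previous_steps is empty, so the chain prompt is an empty string, which the prompt explicitly tells the LLM to ignore.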

Thought evaluation prompt:

f"""{task_prompt}. \n\n Below are the generated reasoning steps, presented in order, accompanied by their evaluated scores (A higher score means the reasoning step is more likely to complete the task.):\n{chain_prompt}\n{thought}\n\nWhat is your evaluation score for the logic, correctness, and benefit to reaching a final solution for these reasoning steps? Please select one value from [0.1, 0.3, 0.5, 0.7, 0.9, 1.0] as the score, where a higher score means better reasoning steps. The score should be placed after 'Evaluation score:' for users to read."""

Here, {thought} is the current thought $z_i$ being evaluated.
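Because the evaluation prompt asks the LLM to put its score after 'Evaluation score:', the score has to be extracted from free text before it can serve as an edge weight. A minimal sketch, assuming a regular expression is sufficient: off-list answers are snapped to the nearest allowed value, and the default returned when no score is found is a hypothetical fallback, not behavior documented for BoT.

```python
import re

ALLOWED_SCORES = (0.1, 0.3, 0.5, 0.7, 0.9, 1.0)
# Tolerates the "Evaluation score :" spacing used in the prompt text.
_SCORE_PATTERN = re.compile(r"Evaluation score\s*:\s*([0-9]*\.?[0-9]+)", re.IGNORECASE)


def parse_evaluation_score(response, default=0.1):
    """Return the score after 'Evaluation score:', snapped to the allowed values.

    `default` is a hypothetical fallback for responses that omit the score.
    """
    match = _SCORE_PATTERN.search(response)
    if match is None:
        return default
    value = float(match.group(1))
    # Snap off-list answers (e.g. 0.92) to the nearest allowed score.
    return min(ALLOWED_SCORES, key=lambda s: abs(s - value))
```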

A2 BoT prompts for the experience generation component

To generate feedback on aggregated chains, the LLM uses the following basic prompts. For details, see the source code in examples/BoostingOfThought/BoT commenter.py.

System prompt S.

You are an expert AI checker for math answers, dedicated to evaluating the reasoning chain generated towards addressing the mathematical problem. Judge each reasoning step of this reasoning chain by providing detailed analyses on whether the current step is a logical inference of the previous step and whether the reasoning step is beneficial to the correct solution. Provide advice and suggestions for each reasoning step with errors. Provide recommendation or rejection descriptions for each correct reasoning step.

Feedback prompt:

f"""Given task:{task_prompt}. \n{chain_prompt}\n\n Please evaluate this reasoning chain by giving detailed comments containing the following content. \n 1.{chain_feedback_format}. 2.{step_feedback_format}. 3.{confidence_feedback_format}. \n\n Notice: {Notice}."""

Here, the chain prompt contains the aggregated reasoning chain $\bar{z}_{1,\dots,n}$.

Chain feedback format: Can this chain of reasoning correctly accomplish the task and reach the goal by performing its reasoning steps? Why? Write a concluding analysis under "Analysis Report:".

Step feedback format: For each reasoning step, provide a detailed analysis of whether the current step is a logical deduction from the previous step and whether it contributes to the correct solution. For each incorrect reasoning step, provide an error report and corresponding suggestions for revision. For each correct reasoning step, provide a recommendation or rejection description. Comments should be concise and follow this format: Reasoning step ⟨idx⟩: ... Analysis ⟨idx⟩: ... Recommendation: ... Recommendation or Rejection Description: ...

Confidence feedback format: What is your confidence score for these evaluations and comments? Please select a value from [0.1, 0.3, 0.5, 0.7, 0.9, 1.0] as the score for the user to read.

With the feedback prompt, the LLM generates the experience $F^{t}$, which contains conclusions and analyses of the reasoning chain and of each reasoning step.

A3 Reasoning process

To facilitate understanding of the proposed Boosting of Thoughts, we summarize its reasoning flow in Algorithm 1. The source code of this flow can be found in examples/BoostingOfThought/BoT core.py.

Algorithm 1: The main reasoning process of BoT

Input: number of iterations $T$, number of tree structures $M$, question $Q$.

Output: aggregated chain $\bar{z}^{T}_{1,\dots,n}$.

1. Initialize a simple prompt $I^{0}(S, X, Q, F^{0}, \{G_i\})$, where $F^{0}$ is an empty string.
2. for each iteration $t = 1, 2, \dots, T$ do
3. Thought structure generation: use the prompt $I^{t-1}(S, X, Q, F^{1,\dots,t-1}, \{G_i\})$ and the LLM to build $M$ heterogeneous tree structures.
4. Extract the thought chains $\{\bar{z}^{m}_{1,\dots,n}\}_{m=1}^{M}$, where $\bar{z}^{m}_{1,\dots,n}$ is the best thought chain of the $m$-th tree structure.
5. Thought structure aggregation: combine $\{\bar{z}^{m}_{1,\dots,n}\}_{m=1}^{M}$ into a single thought chain $\bar{z}^{t}_{1,\dots,n}$ using best-first aggregation or greedy aggregation.
6. Thought chain analysis: use the LLM to analyze $\bar{z}^{t}_{1,\dots,n}$, and combine the resulting feedback with $\bar{z}^{t}_{1,\dots,n}$ to obtain the experience $F^{t}$.
7. Update the prompt by accumulating $F^{t}$ into $I^{t}(S, X, Q, F^{1,\dots,t}, \{G_i\})$.
8. end for
9. Return the solution $\bar{z}^{T}_{1,\dots,n}$.
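The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions: tree building with best-chain extraction, aggregation, and chain analysis are passed in as hypothetical callables standing in for the LLM-backed components, and the way the experience text is formatted is an illustrative choice rather than the format used in the BoT source.

```python
from typing import Callable, List, Tuple

Chain = List[Tuple[str, float]]  # (thought z_i, edge weight V_{i-1,i})


def boosting_of_thoughts(
    question: str,
    T: int,
    M: int,
    grow_tree_best_chain: Callable[[str, str, int], Chain],
    aggregate: Callable[[List[Chain]], Chain],
    analyze_chain: Callable[[str, Chain], str],
) -> Chain:
    """Sketch of Algorithm 1 with the LLM-backed components passed in as callables."""
    experiences: List[str] = []  # F^1, ..., F^t (F^0 is the empty string)
    aggregated: Chain = []
    for t in range(1, T + 1):
        # Experience F^{1..t-1} that the prompt I^{t-1} exposes to the LLM.
        experience_text = "\n\n".join(experiences)
        # Steps 3-4: build M trees and keep the best chain of each.
        chains = [grow_tree_best_chain(question, experience_text, m) for m in range(M)]
        # Step 5: best-first or greedy aggregation into a single chain.
        aggregated = aggregate(chains)
        # Step 6: analyze the aggregated chain; F^t combines the chain and its feedback.
        feedback = analyze_chain(question, aggregated)
        chain_text = "\n".join(f"{z} (score {v})" for z, v in aggregated)
        # Step 7: accumulate F^t so that iteration t + 1 sees F^{1..t}.
        experiences.append(f"Iteration {t} chain:\n{chain_text}\nFeedback:\n{feedback}")
    # Step 9: the last aggregated chain is the solution.
    return aggregated
```

Passing the components as callables keeps the sketch runnable with toy stubs and makes the accumulation of experience across iterations explicit.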

B Insights behind Boosting of Thoughts

Boosting of Thoughts stems from our insight that the reasoning ability of large language models (LLMs) for solving mathematical problems can be drawn directly from experience that contains analyses of and suggestions for earlier errors. Once prompts contain valid historical reasoning experience for LLMs to recall before reasoning, the resulting reasoning steps are typically more logical and rational, as the comparison of Tables 5 and 6 shows. This insight also led us to consider that LLMs need not rely heavily on well-prepared prompts for each task (i.e., prompts with several thought chain demonstrations as examples). Instead, because LLMs can learn from experience, we can start from a simple prompt that requires no exemplars or manually designed content and gradually gather experience while reasoning. Ultimately, by accumulating experience in the prompt, LLMs achieve robust reasoning for solving complex problems. Based on this insight, Boosting of Thoughts is designed as an automated prompting framework that solves problems by iteratively collecting trial-and-error reasoning experiences. We argue that BoT is not merely an application of LLMs to a specific task; rather, it builds on the insight that the reasoning power of LLMs can be obtained directly from error analyses of incorrect reasoning chains, without relying on human prior knowledge.

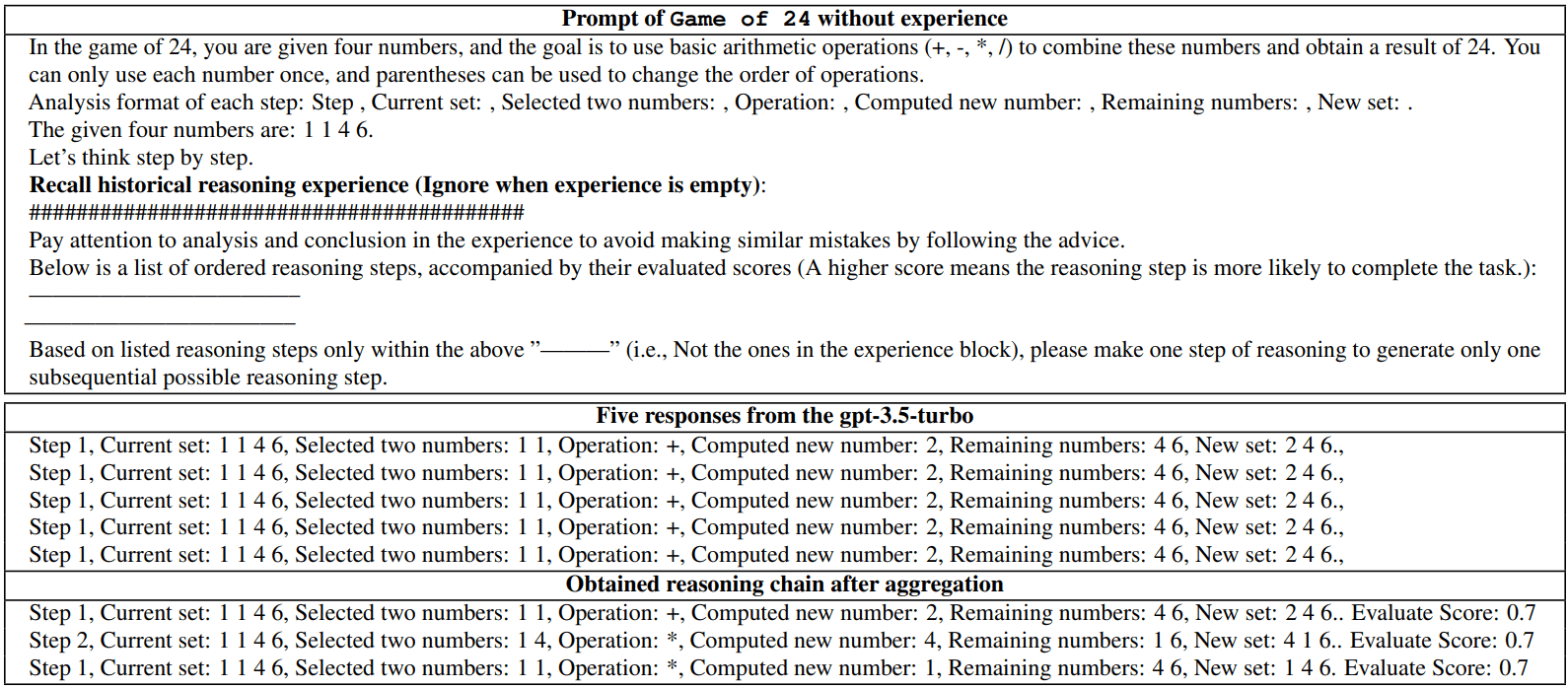

To emphasize our insights, we share the following three key observations, obtained on the Game of 24 dataset using gpt-3.5-turbo with a temperature of 0.7 and a top-p value of 0.7.

The experience in the prompt encourages LLMs to explore more diverse logic in their responses. As shown in Table 5, the model generated the same reasoning step five times when no experience was included in the prompt. This reveals a common problem of LLMs: a lack of self-motivation to explore different reasoning logic. Thus, despite their strong reasoning potential, LLMs may get stuck in a cycle that starts from the simplest reasoning step, which may never lead to a final solution. The "Acquired Reasoning Chains" section of Table 5 shows such an erroneous reasoning chain. We believe that in Tree of Thoughts Yao et al. (2024), where tree nodes are constructed by generating multiple responses as thoughts, such repetitive reasoning steps may cause the algorithm to fail in some cases. In contrast, in the second iteration of BoT, introducing experience into the prompt led to diverse initial reasoning steps, as shown in Table 6. By starting from a wider range of potential logic, the final reasoning chain eventually arrives at the correct solution Weng et al. (2023).

LLMs avoid repeating the mistakes highlighted in the experience. Without experience containing error analysis in the prompt, LLMs make many mistakes, such as deviating from the task rules and falling back to the initial reasoning step at the end of the process, as shown in the "Acquired Reasoning Chain" section of Table 5. After this reasoning chain is analyzed and its feedback is incorporated as experience into the prompt for the second iteration of BoT, Table 6 shows that the LLM fully learns from the experience before reasoning. First, as the diverse initial reasoning steps under "Five responses from gpt-3.5-turbo" show, none of the responses repeats the earlier erroneous reasoning steps. Second, the LLM avoids all previously identified errors by strictly following the task rules, eliminating incorrect reasoning, and performing logical reasoning steps. Third, this ultimately leads to the correct solution for the "1 1 4 6" Game of 24 task. Other work, such as Weng et al. (2023); Madaan et al. (2023); Zheng et al. (2023), has also emphasized the importance of augmenting prompts with feedback, i.e., self-evaluation of previous answers. However, BoT is the pioneering work built on the insight that experience containing error analysis can lead to strong reasoning in LLMs.

Without human annotations, LLMs automatically organize effective reasoning chains for complex problems based on experience. BoT is the pioneering initiative to propose an automated prompting framework built on the insight that LLMs can acquire effective problem-solving reasoning skills solely from experience containing error analysis and guidance, without human annotation. Table 5 shows the first iteration of BoT, in which the initial prompt contains only basic task guidance and the question, without in-context examples like those in CoT. Even though the reasoning chains obtained with such prompts contain many errors and invalid reasoning steps, their error analyses and suggestions can be included as experience in the input prompt to benefit the second iteration, as shown in Table 6. This shows that, without human guidance on the correct reasoning procedure, LLMs can gain knowledge from experience, namely error analyses and guidance derived from previously generated reasoning chains, and thereby incrementally improve at solving reasoning problems.

Thus, BoT offers long-term guidance for research, as it demonstrates the importance of recalling error analyses and suggestions when enabling LLMs to generate effective reasoning processes for complex tasks. In light of these insights, prompt-engineering research on inducing reasoning abilities in LLMs can focus on how to generate experience rather than on introducing more human prior knowledge.

C Thought Structure Generation

BoT is an automated prompting framework that iteratively accumulates experience gained from reasoning chain analysis. Thus, BoT generalizes to various thought generation methods and to any LLM capable of generating and evaluating reasoning steps. Moreover, the performance of BoT depends on the effectiveness of its thought generation structure. Therefore, BoT uses the recent Tree of Thoughts (ToT) Yao et al. (2024) as its base model for generating reasoning chains in each iteration. As mentioned in the main paper, the base thought generation model could also be Graph of Thoughts (GoT) Besta et al. (2023), i.e., BoT with GoT. However, due to time constraints and because GoT has not yet been applied to mathematical problems, the BoT design employs only ToT. Furthermore, when used as the base model in the boosting mechanism, the thought structure in each iteration can be kept lightweight. Ultimately, BoT generates heterogeneous tree structures, each of which is a lightweight binary tree.

C1 Next Thought Generation and Edge Weight Calculation

Using the next thought generation prompt discussed in Section A, the LLM builds its prompt by inserting the experience $F^{1,\dots,t}$ and replacing $\{G_i\}$ with $z_{1,\dots,i-1}$, and then generates the next possible thought for reasoning step $z_i$. For a reasoning step $z_i$, the LLM uses the thought evaluation prompt to generate an evaluation score that serves as the edge weight between $z_{i-1}$ and $z_i$. For the detailed procedure, see the source code in examples/BoostingOfThought/BoT reasoner.py. As a concrete example of BoT applied to '3 5 6 8' in Game of 24 with gpt-3.5-turbo, Tables 7 and 8 show thought generation, while Table 9 shows how the edge weights are calculated.

C2 The Need for Heterogeneous Tree Structures

In each iteration of BoT, heterogeneous tree structures are constructed to explore a wider reasoning search space and improve robustness. As seen in the source code under examples/BoostingOfThought/BoT core.py, the temperature and top-p values of the LLM in each tree are selected from [0.2, 0.4, 0.6, 0.7, 0.9, 1.1, 1.5] and [0.1, 0.3, 0.5, 0.7, 0.9], respectively. The tree growth strategy can be layer-by-layer or leaf-by-leaf. We observed the following two benefits of ensuring this heterogeneity.
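One way to obtain such heterogeneous settings is to draw a temperature, a top-p value, and a growth strategy independently for each tree from the value sets given above. A minimal sketch: uniform random choice and the TreeConfig type are assumptions for illustration; the text only specifies the candidate values, not how they are assigned to trees.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

# Value sets listed in the text.
TEMPERATURES = [0.2, 0.4, 0.6, 0.7, 0.9, 1.1, 1.5]
TOP_PS = [0.1, 0.3, 0.5, 0.7, 0.9]
GROWTH_STRATEGIES = ["layer-by-layer", "leaf-by-leaf"]


@dataclass(frozen=True)
class TreeConfig:
    temperature: float
    top_p: float
    growth: str


def sample_tree_configs(num_trees: int, seed: Optional[int] = None) -> List[TreeConfig]:
    """Draw one (temperature, top-p, growth strategy) setting per tree.

    Uniform sampling is an assumption; only the value sets come from the text.
    """
    rng = random.Random(seed)
    return [
        TreeConfig(
            temperature=rng.choice(TEMPERATURES),
            top_p=rng.choice(TOP_PS),
            growth=rng.choice(GROWTH_STRATEGIES),
        )
        for _ in range(num_trees)
    ]
```

For example, sample_tree_configs(15, seed=0) yields a reproducible set of settings for the 15 trees built per iteration.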

Heterogeneity extends the reasoning search space and thus speeds up convergence. When trees are constructed for different purposes, e.g., exploration with a layer-by-layer strategy or exploitation with a leaf-by-leaf strategy, and with more random or more deterministic LLM settings, the generated reasoning steps and the resulting reasoning chains can differ significantly, effectively covering a wider range of reasoning possibilities. For example, in one iteration, an LLM that generates the next thought with more confidence keeps exploring similar thoughts, whereas an LLM with more randomness tends to generate diverse thoughts. It is often difficult to predict whether deterministic or random reasoning will contribute to a solution. Therefore, ensuring heterogeneity by mixing different types of reasoning steps allows BoT to explore the reasoning space fully in a single iteration, which ultimately benefits subsequent iterations. In the ablation study, we compare BoT's performance with heterogeneous and homogeneous tree structures.
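The difference between the two growth strategies can be illustrated on a binary tree. In this hypothetical sketch, expand stands in for the LLM generating and scoring candidate next thoughts; layer-by-layer growth expands the shallowest pending node first, while leaf-by-leaf growth always expands the leaf with the largest summed edge weight. Returning the root-to-leaf chain with the largest summed edge weight as the tree's best chain is an assumption that mirrors the best-first aggregation criterion.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Chain = List[Tuple[str, float]]


@dataclass(eq=False)  # identity equality, so list.remove() targets the exact node
class Node:
    thought: str
    weight: float  # edge weight V_{i-1,i} from the parent
    cumulative: float  # sum of edge weights from the root
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)

    def chain(self) -> Chain:
        steps, node = [], self
        while node.parent is not None:
            steps.append((node.thought, node.weight))
            node = node.parent
        return steps[::-1]


def grow_tree(expand: Callable[[Chain], Chain], num_expansions: int,
              strategy: str, branching: int = 2) -> Chain:
    """Grow a tree with `expand` (a stub for LLM generation plus evaluation)
    and return the root-to-leaf chain with the largest summed edge weight."""
    root = Node("", 0.0, 0.0)
    nodes, frontier = [root], [root]
    for _ in range(num_expansions):
        if not frontier:
            break
        if strategy == "layer-by-layer":
            node = frontier.pop(0)  # shallowest pending node first (breadth-first)
        elif strategy == "leaf-by-leaf":
            node = max(frontier, key=lambda n: n.cumulative)  # most promising leaf
            frontier.remove(node)
        else:
            raise ValueError(f"unknown strategy {strategy!r}")
        for thought, weight in expand(node.chain())[:branching]:
            child = Node(thought, weight, node.cumulative + weight, parent=node)
            node.children.append(child)
            nodes.append(child)
            frontier.append(child)
    leaves = [n for n in nodes if not n.children]
    return max(leaves, key=lambda n: n.cumulative).chain()
```

With the same expansion budget, the leaf-by-leaf strategy tends to produce deeper chains along high-scoring branches, whereas the layer-by-layer strategy spreads the budget across alternatives, which is the exploration and exploitation contrast described above.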

Heterogeneity reduces the likelihood of generating invalid or erroneous reasoning chains, thus enhancing robustness. In a homogeneous setup, by contrast, individual trees tend to follow the same logic when generating thoughts and build reasoning chains with the same tree structure. Then, when that logic is wrong or the underlying structure does not suit the problem at hand, the reasoning chains of all trees obtained by BoT in an iteration may contain only noisy and incorrect reasoning steps. Even if they are aggregated into a more refined reasoning chain for evaluation, the resulting experience may still fall far short of providing appropriate problem-solving advice. Designing the tree structures to be heterogeneous therefore reduces the likelihood that no valid reasoning chain is available for evaluation in subsequent BoT iterations. This enhanced robustness allows BoT to solve problems of varying difficulty.

Algorithm: Best-first aggregation and greedy aggregation

Input: $M$ reasoning chains $\{\bar{z}^{m}_{1,\dots,n_m}\}_{m=1}^{M}$, where $\bar{z}^{m}_{i}$ denotes the $i$-th reasoning step of the $m$-th chain.

Output: aggregated chain $\bar{z}_{1,\dots,n}$.

Best-first aggregation
1. for each chain $m = 1, 2, \dots, M$ do
2. Compute the sum of edge weights of the $m$-th chain, $V^{m} = \sum_{i=1}^{n_m} V^{m}_{i-1,i}$.
3. end for
4. Obtain the best chain among the $M$ chains, $m^{*} = \arg\max_{m} \{V^{m}\}$.
5. Set the aggregated chain to the best one, $\bar{z}_{1,\dots,n} := \bar{z}^{m^{*}}_{1,\dots,n_{m^{*}}}$.

Greedy aggregation
6. $\bar{z}_{1} := \bar{z}^{m^{*}}_{1}$, where $m^{*} = \arg\max_{m} \{V^{m}_{0,1}\}$.
7. for each aggregation step $i = 2, \dots, n$ do
8. for each chain $m = 1, 2, \dots, M$ do
9. Collect $J^{m} = \{\, j \mid \mathrm{sim}(\bar{z}_{i-1}, \bar{z}^{m}_{j}) > 0.7,\ j \in \{1,\dots,n_m\} \,\}$.
10. Obtain $j^{*,m} = \arg\max_{j \in J^{m}} \{V^{m}_{j,j+1}\}$.
11. end for
12. Obtain the best next reasoning step, $\bar{z}_{i} := \bar{z}^{m^{*}}_{j^{*}+1}$, where $m^{*} = \arg\max_{m} \{V^{m}_{j^{*,m},\,j^{*,m}+1}\}$ and $j^{*} = j^{*,m^{*}}$.
13. end for
14. Return the aggregated chain $\bar{z}_{1,\dots,n}$.

D Thought structure aggregation

After reasoning in the heterogeneous tree structures, BoT's aggregation process first extracts the best reasoning chain from each tree and then combines them into a single reasoning chain using either best-first or greedy aggregation. More details on these two aggregation methods can be found in the source code examples/BoostingOfThought/BoT aggregator.py.

As shown in the best-first block of the aggregation algorithm above, best-first aggregation is a straightforward method that directly extracts the chain with the highest sum of edge weights. This method is fast and stable, and it usually guarantees competitive performance because subsequent experience can be generated by analyzing the obtained best chain. However, it can only select an existing chain and cannot refine it. Greedy aggregation is more advanced because it combines reasoning steps from different chains to produce a new, better reasoning chain with the highest edge weights. The greedy aggregation process consists of two steps. First, it collects the reasoning steps that are similar to the previously aggregated step $\bar{z}_{i-1}$. Then, the next aggregated step is selected from the successors of the collected steps by maximizing the edge weight. Here, sim is a similarity function that uses the LLM to evaluate the percentage of identical words and mathematical numbers between two passages, and 0.7 is an empirical threshold obtained from experiments.
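Both aggregation methods can be sketched in a few lines, assuming each chain is a list of (reasoning step, weight of the edge into that step) pairs. The text describes sim as an LLM-based measure of shared words and numbers; the token-overlap (Jaccard) function below is a hypothetical stand-in so the sketch runs offline. Stopping early when no similar step has a successor is also an assumption.

```python
from typing import Callable, List, Optional, Tuple

Chain = List[Tuple[str, float]]  # (reasoning step, weight of the edge into that step)


def best_first_aggregation(chains: List[Chain]) -> Chain:
    """Return the chain whose edge weights have the largest sum."""
    return max(chains, key=lambda chain: sum(w for _, w in chain))


def token_overlap(a: str, b: str) -> float:
    """Hypothetical stand-in for the LLM-based sim(): Jaccard overlap of tokens."""
    ta, tb = set(a.split()), set(b.split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def greedy_aggregation(chains: List[Chain], n: Optional[int] = None,
                       sim: Callable[[str, str], float] = token_overlap,
                       threshold: float = 0.7) -> Chain:
    chains = [c for c in chains if c]
    n = n if n is not None else max(len(c) for c in chains)
    # First step: the one whose first edge weight is largest across chains.
    aggregated = [max(chains, key=lambda c: c[0][1])[0]]
    for _ in range(1, n):
        prev_step = aggregated[-1][0]
        best = None
        for chain in chains:
            # J^m: positions similar to the previous aggregated step that have a successor.
            similar = [j for j in range(len(chain) - 1)
                       if sim(prev_step, chain[j][0]) > threshold]
            for j in similar:
                # Global max over (m, j) equals the per-chain argmax followed by the max over m.
                if best is None or chain[j + 1][1] > best[1]:
                    best = chain[j + 1]
        if best is None:
            break  # no similar step has a successor (early stop is an assumption)
        aggregated.append(best)
    return aggregated
```

In a Game of 24 example with two chains that share the first step, greedy aggregation can splice the high-scoring first step of one chain onto the better continuation of the other, producing a chain whose summed weight exceeds that of either input, which best-first aggregation cannot do.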

E Impact of erroneous feedback

Feedback obtained by evaluating the aggregated reasoning chain may contain analyses of limited use and even completely wrong conclusions and error reports. This problem usually stems from the nature of LLMs: as language models, they do not inherently verify the accuracy of the text they generate. In addition, the capabilities of LLMs such as gpt-3.5-turbo are limited when they are used as verifiers for mathematical problems.

A straightforward example is presented in Table 7. The analysis report concludes that "the final result obtained in step 3 is 80, which is mathematically equal to 24." Worse, the experience further states that "the chain of reasoning is correct" and that "no errors were found in the reasoning steps." With this experience as input in the prompt, BoT is misled in the first iteration into generating wrong reasoning steps; the corresponding aggregated chain is shown at the beginning of Table 8. This aggregated chain is clearly logically incorrect and violates the rules of Game of 24.

However, we argue that erroneous feedback is not amplified; rather, its negative impact on the generated reasoning steps can be mitigated or even fully corrected in subsequent iterations through BoT's iterative mechanism. The main reason is that the erroneous reasoning steps are themselves analyzed to generate new experience, which is added to the prompt. Specifically, because these reasoning steps contain obvious, easily recognizable errors, LLMs tend to produce correct error analyses and effective revision suggestions. With the new experience included in the prompt, BoT is able to generate correct reasoning steps. As the experience in Table 8 shows, BoT generated detailed error reports and revision suggestions, resulting in a rational thought generation process.

The advantages of BoT in utilizing iterations to mitigate the detrimental effects of error feedback are evident in Figure 4. Notably, BoT's performance shows consistent enhancement as the number of iterations increases. This emphasizes the importance of cumulative experience and the ability of subsequent experience to correct prior errors.

F More results on MATH

Fig. 5: Solving rates of different strategies on all problem categories of the MATH dataset. The categories are prealgebra, algebra, counting and probability, number theory, geometry, precalculus, and intermediate algebra. The subplot labeled "overall" shows the overall solving rate across all problems in all categories.

In Figure 5, we report the solving rates of the different methods on each category of the MATH dataset. The complexity and diversity of the problems in these categories make MATH a more challenging benchmark for mathematical reasoning, requiring a wide range of reasoning skills. A detailed examination of our approach and its comparison with other approaches in this setting can therefore provide valuable insights.

LLMs. Experiments on the MATH dataset used well-known large language models (LLMs), namely GPT-3.5-Turbo, hereafter GPT3.5, and GPT-4, hereafter GPT4. We directly used the API published by OpenAI.

Compared methods.

- GPT4 ComplexCoT. GPT4 with the Complex CoT Fu et al. (2022) prompting method. The reasoning examples in the prompt are taken from the corresponding Complex CoT publication Fu et al. (2022). Since greedy decoding is used, we do not follow the self-consistency approach Wang et al. (2022) of sampling reasoning paths.

- GPT3.5. Standard prompting is used with the GPT3.5 model to generate answers.

- GPT3.5 ComplexCoT. Similar to GPT4 ComplexCoT, but with the model changed to GPT3.5.

- GPT4 PHP+ComplexCoT. GPT4 with PHP Zheng et al. (2023) combined with Complex CoT Fu et al. (2022). Specifically, in the PHP Zheng et al. (2023) framework, Complex CoT prompts are used to generate initial base answers, from which PHP+Complex CoT develops subsequent answer-generation prompts. Thus, at the beginning of an interaction, base answers are generated by passing the Complex CoT base prompt and the current question to the LLM. Then, using the Complex CoT prompt revised into the PHP version with additional hint sentences, the progressive-hint prompting framework is executed on this base answer to generate the final answer. This setup corresponds to PHP-Complex CoT in the original work. The Complex CoT prompt uses 8 shots.

- GPT4 BoT wo/ experience. GPT4 performs reasoning within the BoT framework without accumulating experience. The basic setup of BoT follows that presented in the main paper. Thus, the aggregated chain obtained after one iteration is used as the solution.

- GPT4 BoT. GPT4 is used to perform the full version of the BoT shown in the main paper.

- GPT4 BoT + CoT. In addition to the BoT framework, the prompts include 5 reasoning examples from the CoT Wei et al. (2022) publication. Thus, in each iteration, the prompt contains not only the experience but also these 5 CoT reasoning examples.

- GPT3.5 BoT. Similar to GPT4 BoT, but with the model changed to GPT3.5.

- GPT3.5 BoT (GPT4). In this experiment, GPT3.5 performs the reasoning that generates chains of thoughts during thought structure generation, whereas GPT4 provides the evaluation and analysis feedback during thought evaluation and during experience generation in the aggregated thought chain analysis.

We make the following additional observations from the results in Figure 5.

BoT's outstanding performance on more challenging problems stems largely from experience. BoT-based methods such as GPT4 BoT and GPT4 BoT + CoT consistently achieve the highest solving rates across the MATH subcategories. Specifically, GPT4 BoT leads the best competing method, GPT4 PHP+ComplexCoT, by 8.6%, while GPT4 BoT + CoT leads it by 12.4%. Across all seven categories, GPT4 BoT outperforms GPT4 PHP+ComplexCoT by at least 0.8%, with a particularly clear advantage on algebra problems. The same holds for GPT3.5 BoT and GPT3.5 BoT + CoT. However, without accumulated experience in the BoT framework, performance drops drastically across all mathematical problems, as shown by GPT4 BoT wo/ experience.

In addition to error analysis experience, correct examples, such as simple CoT examples, are critical to improving BoT's effectiveness on challenging mathematical problems. GPT4 BoT significantly outperforms GPT4 PHP+ComplexCoT on the first five subcategories of MATH. However, in precalculus and intermediate algebra, which demand considerably more complex reasoning and multi-step logic, BoT's improvements are only 0.8% and 2.4%, respectively. These gains are relatively limited compared with the significant improvements observed on the simpler problem categories. However, when five correct CoT examples are added directly to the input prompt, GPT4 BoT + CoT greatly improves its performance on precalculus and intermediate algebra, outperforming GPT4 BoT by 7.7% and 11.5%, respectively. These observations suggest that, for BoT to achieve its best performance on complex mathematical problems, learning to reason from trial-and-error analysis alone is not sufficient; correct examples should also be provided to the LLM in the input prompt.

Although GPT3.5 with BoT initially falls slightly short of GPT4 CoT, using GPT4 to evaluate and analyze the reasoning chains, and thus to generate the experience, enables GPT3.5 BoT (GPT4) to outperform GPT4 ComplexCoT. When GPT3.5, a less capable model than GPT4, serves as the LLM, BoT's solve rate is at least 7.7% lower than that of GPT4 ComplexCoT, especially in algebra. This indicates that BoT cannot outperform GPT4 ComplexCoT when a weaker LLM produces lower-quality trial-and-error analyses. Accordingly, when GPT3.5 generates only the reasoning steps while GPT4 generates the experience, GPT3.5 BoT (GPT4) improves markedly in all categories, reaching a solve rate of 55.8%, which is 5.5% higher than GPT4 ComplexCoT and even 1.9% higher than the current state of the art, GPT4 PHP + ComplexCoT. These observations further suggest that the experience accumulated by iteratively revising the prompt is the main reason for the success of the BoT framework.

G Game of 24 reasoning results

First, Tables 5 through 9 show the detailed prompts BoT uses during reasoning, giving a comprehensive view of what BoT does in each iteration. Then, starting with Table 10, we show concrete examples that cover the entire reasoning process of BoT. Following the basic setup described in the Experiments section, these experiments use BoT with the GPT-3.5-turbo model.

Table 5: Reasoning steps generated by gpt-3.5-turbo when no experience is included in the input prompt. We first have the model generate five reasoning steps to check their diversity, and then present the final reasoning chain obtained after completing the first iteration of BoT.

Prompt for the Game of 24, no experience

In the Game of 24, you are given four numbers, and the goal is to combine them using basic arithmetic operations (+, -, *, /) to obtain 24. Each number can be used only once, and you can use parentheses to change the order of operations.

Format for analyzing each step:

Step X, current set: XXXX, two numbers selected: XX XX, operation: XX, new number obtained by calculation: XX, remaining numbers: XXXX, new set: XXXX.

The four numbers given are: 1 1 4 6.

Let's think step by step.

Recall historical reasoning experience (ignore when experience is null):


Pay attention to the analysis and conclusions in the experience and avoid making similar mistakes by following the recommendations.

The following is a series of ordered reasoning steps, accompanied by their evaluation scores (a higher score means the reasoning step is more likely to complete the task):

Based on the reasoning steps listed within "---" above (i.e., not the steps in the experience block), please perform one step of reasoning to generate only one possible next reasoning step.

Five Responses from gpt-3.5-turbo

Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: +, new number calculated: 2, remaining numbers: 4 6, new set: 2 4 6. Evaluation score: 0.7

Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: +, new number calculated: 2, remaining numbers: 4 6, new set: 2 4 6. Evaluation score: 0.7

Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: +, new number calculated: 2, remaining numbers: 4 6, new set: 2 4 6. Evaluation score: 0.7

Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: +, new number calculated: 2, remaining numbers: 4 6, new set: 2 4 6. Evaluation score: 0.7

Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: *, new number calculated: 1, remaining numbers: 4 6, new set: 1 4 6. Evaluation score: 0.7

Reasoning chain obtained after aggregation

Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: +, new number calculated: 2, remaining numbers: 4 6, new set: 2 4 6. Evaluation score: 0.7

Step 2, current set: 1 1 4 6, two numbers chosen: 1 4, operation: *, new number calculated: 4, remaining numbers: 1 6, new set: 4 1 6. Evaluation score: 0.7

Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: *, new number calculated: 1, remaining numbers: 4 6, new set: 1 4 6. Evaluation score: 0.7
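The aggregated chain in Table 5 shows a failure that a simple bookkeeping check can catch: its second and third steps start again from 1 1 4 6 instead of the set produced by the preceding step. The sketch below parses the step format used in these tables and checks each step's arithmetic, its remaining numbers, its new set, and its continuity with the previous step. It is a hypothetical helper for illustration only: the regular expression, the name `check_chain`, and the messages are our own, and it is not the LLM-based evaluation that BoT performs.

```python
import re
from collections import Counter
from fractions import Fraction

# Hypothetical parser for the step format used in these tables. Field labels vary
# slightly between responses ("chosen"/"selected", "calculated"/"computed").
NUM = r"\d+(?:\.\d+)?"
LIST = rf"{NUM}(?:\s+{NUM})*"
STEP_RE = re.compile(
    rf"current set:\s*(?P<current>{LIST}),\s*"
    rf"two numbers (?:chosen|selected):\s*(?P<pair>{LIST}),\s*"
    rf"operation:\s*(?P<op>[-+*/]),\s*"
    rf"new number (?:calculated|computed):\s*(?P<new>{NUM}),\s*"
    rf"remaining numbers:\s*(?P<rest>(?:{LIST})?)(?:\s*none)?,\s*"
    rf"new set:\s*(?P<new_set>{LIST})",
    re.IGNORECASE,
)
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b if b != 0 else None,  # division by zero is never valid
}


def as_multiset(text: str) -> Counter:
    """Turn '1 1 4 6' into a multiset of exact fractions (duplicates matter)."""
    return Counter(Fraction(tok) for tok in text.split())


def check_chain(lines: list[str]) -> list[tuple[int, str]]:
    """Return (position in chain, problem) pairs; an empty list means no problem found."""
    problems = []
    previous_new_set = None
    for idx, line in enumerate(lines, start=1):
        m = STEP_RE.search(line)
        if m is None:
            problems.append((idx, "step does not follow the expected format"))
            continue
        current, pair = as_multiset(m["current"]), as_multiset(m["pair"])
        rest, new_set = as_multiset(m["rest"]), as_multiset(m["new_set"])
        claimed = Fraction(m["new"])
        # Continuity: each step must start from the set the previous step produced.
        if previous_new_set is not None and current != previous_new_set:
            problems.append((idx, "current set does not match the previous new set"))
        if pair - current:
            problems.append((idx, "chosen numbers are not in the current set"))
        tokens = m["pair"].split()
        if len(tokens) != 2:
            problems.append((idx, "exactly two numbers must be chosen"))
        elif OPS[m["op"]](Fraction(tokens[0]), Fraction(tokens[1])) != claimed:
            problems.append((idx, "arithmetic error"))
        if rest != current - pair:
            problems.append((idx, "remaining numbers are wrong"))
        if new_set != rest + Counter([claimed]):
            problems.append((idx, "new set is not the remaining numbers plus the new number"))
        previous_new_set = new_set
    return problems


table5_chain = [
    "Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: +, new number calculated: 2, remaining numbers: 4 6, new set: 2 4 6.",
    "Step 2, current set: 1 1 4 6, two numbers chosen: 1 4, operation: *, new number calculated: 4, remaining numbers: 1 6, new set: 4 1 6.",
    "Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: *, new number calculated: 1, remaining numbers: 4 6, new set: 1 4 6.",
]
for position, problem in check_chain(table5_chain):
    print(position, problem)
```

On this chain the check flags the second and third entries for not continuing from the previous step's new set, while each step's own arithmetic is consistent. Exact `Fraction` values are used so that results such as 1 / 4 = 0.25 compare without rounding issues.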

H Reasoning results on GSM8K

BoT uses the same basic prompts and formats as those shown in Tables 5 through 9; only the task prompt changes, as shown in Table 15. Starting from Table 16, we provide several detailed examples covering the complete reasoning process of BoT. These experiments follow the basic setup described in the Experiments section and use BoT with the GPT-3.5-turbo model.
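Because only the task prompt changes between the Game of 24 and GSM8K, the prompt can be viewed as a fixed template with a swappable task section. The sketch below assumes the layout of the Table 5 prompt (task, experience block, scored steps, instruction); the function name `build_prompt` is hypothetical, and `GSM8K_TASK` is a placeholder rather than the actual task prompt from Table 15.

```python
# Hypothetical reconstruction of the prompt layout in Table 5; only `task_prompt`
# changes between tasks such as the Game of 24 and GSM8K.
def build_prompt(task_prompt: str, experience: str, steps: list[tuple[str, float]]) -> str:
    """Assemble a next-step prompt from a task description, experience, and scored steps."""
    step_block = "\n".join(f"{text} Evaluation score: {score}" for text, score in steps)
    parts = [
        task_prompt,
        "Let's think step by step.",
        "Recall historical reasoning experience (ignore when experience is null):",
        experience if experience else "null",  # first iteration: no experience yet
        "Pay attention to the analysis and conclusions in the experience and avoid "
        "making similar mistakes by following the recommendations.",
        "The following is a series of ordered reasoning steps, accompanied by their "
        "evaluation scores (a higher score means the reasoning step is more likely "
        "to complete the task):",
        f"---\n{step_block}\n---",
        'Based on the reasoning steps listed within "---" above (i.e., not the steps '
        "in the experience block), please perform one step of reasoning to generate "
        "only one possible next reasoning step.",
    ]
    return "\n\n".join(parts)


GAME24_TASK = (
    "In the Game of 24, you are given four numbers, and the goal is to combine them "
    "using basic arithmetic operations (+, -, *, /) to obtain 24. Each number can be "
    "used only once, and you can use parentheses to change the order of operations. "
    "The four numbers given are: 1 1 4 6."
)
GSM8K_TASK = "<GSM8K task prompt, e.g. the one in Table 15>"  # hypothetical placeholder

first_iteration = build_prompt(GAME24_TASK, experience="", steps=[])
print(first_iteration)
```

The first BoT iteration corresponds to an empty experience string; later iterations would pass the accumulated analyses instead, and switching to GSM8K would only replace `GAME24_TASK` with `GSM8K_TASK`.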

# Reasoning Prompt with Experience for the Game of 24

## First, review the historical reasoning experience:

### Reasoning chain 1 and its comments

Below is a chain of reasoning steps shown in order:

---

### Step 1

Current set: 1 1 4 6, two numbers chosen: 1 1, operation: +, new number calculated: 2, remaining numbers: 4 6, new set: 2 4 6. Evaluation score: 0.7

### Step 2

Current set: 1 1 4 6, two numbers chosen: 1 4, operation: *, new number calculated: 4, remaining numbers: 1 6, new set: 4 1 6. Evaluation score: 0.7

### Step 3

Current set: 1 1 4 6, two numbers chosen: 1 1, operation: *, new number calculated: 1, remaining numbers: 4 6, new set: 1 4 6. Evaluation score: 0.7

---

## Analysis

This reasoning chain fails to complete the task and reach the goal correctly. Errors in its reasoning steps prevented the correct solution from being found.

### Reasoning Step 1

This step is a logical deduction from the previous step, as it follows the rule of selecting two numbers, performing the operation, and updating the set accordingly. However, this step does not contribute to the correct solution: the new number 2 obtained from the computation does not lead to the desired result of 24.

**Suggestion**: Try different operations or combinations of numbers to get a new number closer to 24.

**Recommendation or Rejection Description**: Rejected.

### Reasoning Step 2

There is an error in the description of the reasoning step: it is incorrectly labeled "reasoning step 2" twice. However, assuming it is the only step, it is a logical deduction from the previous step. The multiplication (*) operation is correctly applied to the selected numbers and yields the new number 4. The remaining numbers are updated accordingly.

**Recommendation or Rejection Description**: Recommended.

### Reasoning Step 3

There is an error in the description of the reasoning step. It is incorrectly called "Reasoning Step 1" instead of "Reasoning Step 3". This step is not a logical deduction from the previous step. The multiplication (*) operation is applied to the same numbers as in Reasoning Step 1, but the new number 1 is calculated differently. The remaining numbers are updated accordingly.

**Error Report**: The calculation in this step is wrong. The correct calculation should be 1 * 1 = 1.

**Suggestion**: Double-check the calculations to ensure that they are accurate.

**Recommendation or Rejection Description**: Rejected.

**Confidence score**: 0.7

---

## Five Responses from gpt-3.5-turbo

---

### Step 1

Current set: 1 1 4 6, two numbers selected: 1 4, operation: *, new number computed: 4, remaining numbers: 1 6, new set: 1 4 6.

### Step 1

Current set: 1 1 4 6, two numbers selected: 1 6, operation: +, new number computed: 7, remaining numbers: 1 4, new set: 1 4 7.

### Step 1

Current set: 1 1 4 6, two numbers chosen: 1 1, operation: *, new number computed: 1, remaining numbers: 4 6, new set: 1 4 6.

### Step 1

Current set: 1 1 4 6, two numbers chosen: 1 4, operation: /, new number computed: 0.25, remaining numbers: 1 6, new set: 0.25 1 6.

### Step 1

Current set: 1 1 4 6, two numbers chosen: 1 1, operation: -, new number computed: 0, remaining numbers: 4 6, new set: 0 4 6.

---

## Reasoning chain after aggregation

---

### Step 1

Current set: 1 1 4 6, two numbers chosen: 1 1, operation: *, new number calculated: 1, remaining numbers: 4 6, new set: 1 4 6. Evaluation score: 0.9

### Step 2

Current set: 1 4 6, two numbers chosen: 1 4, operation: *, new number calculated: 4, remaining numbers: 6, new set: 4 6. Evaluation score: 0.7

### Step 3

Current set: 4 6, two numbers chosen: 4 6, operation: *, new number calculated: 1, remaining numbers:, new set: 24. Evaluation score: 0.9

---

Table 6: Reasoning steps generated by gpt-3.5-turbo when experience is incorporated into the input prompt. This experience is an analysis of the reasoning chain in Table 5. With this experience included in the prompt, we show the model's five responses and the final reasoning chain formed after completing the second iteration of BoT.
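Table 6 shows the analysis of the Table 5 chain being fed back as experience in the next iteration. The sketch below assumes that the experience is simply every analyzed chain, formatted as in the Table 6 prompt and concatenated in order, and shows how such a block could accumulate across iterations. The classes `ChainFeedback` and `ExperienceBuffer` are hypothetical names, and the sketch does not call an LLM; the analysis text would come from the model.

```python
from dataclasses import dataclass, field


@dataclass
class ChainFeedback:
    """One reasoning chain plus the LLM's analysis of it (hypothetical record)."""
    steps: list[str]
    analysis: str


@dataclass
class ExperienceBuffer:
    """Accumulates chain feedback across BoT iterations (illustrative sketch)."""
    history: list[ChainFeedback] = field(default_factory=list)

    def add(self, steps: list[str], analysis: str) -> None:
        self.history.append(ChainFeedback(steps, analysis))

    def render(self) -> str:
        """Format all feedback in the style of the experience block in Table 6."""
        if not self.history:
            return ""  # null experience, as in the first iteration (Table 5)
        blocks = ["## First, review the historical reasoning experience:"]
        for k, fb in enumerate(self.history, start=1):
            blocks += [
                f"### Reasoning chain {k} and its comments",
                "Below is a chain of reasoning steps shown in order:",
                "---\n" + "\n".join(fb.steps) + "\n---",
                fb.analysis,
            ]
        return "\n\n".join(blocks)


buffer = ExperienceBuffer()
buffer.add(
    ["Step 1, current set: 1 1 4 6, two numbers chosen: 1 1, operation: +, "
     "new number calculated: 2, remaining numbers: 4 6, new set: 2 4 6."],
    "Analysis: This reasoning chain fails to complete the task.",
)
print(buffer.render())
```

Keeping every earlier chain, rather than only the latest one, reflects the idea that experience accumulates over iterations; a real system would likely need to bound the block's length to fit the context window.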

# Next Thought Generation Prompts

In the Game of 24, you are given four numbers, and the goal is to combine them using basic arithmetic operations (+, -, *, /) to obtain 24. Each number can be used only once, and you can use parentheses to change the order of operations.

Analysis format for each step: step X, current set: XXXX, two numbers selected: XX XX, operation: XX, new number computed: XX, remaining numbers: XXXX, new set: XXXX.

The four numbers given are: 3 5 6 8.

Let's think step by step.

## First, review the historical reasoning experience:

### 1st chain of reasoning and comments

The following is a chain of reasoning containing the reasoning steps presented in order:

---

Step 1, current set: 3 5 6 8, two numbers chosen: 5 6, operation: *, new number calculated: 30, remaining numbers: 3 8, new set: 30 3 8. Evaluation score: 0.9

Step 2, current set: 30 3 8, two numbers chosen: 30 3, operation: /, new number calculated: 10, remaining numbers: 8, new set: 10 8. Evaluation score: 0.9

Step 3, current set: 10 8, two numbers selected: 10 8, operation: *, new number calculated: 80, remaining numbers: none, new set: 80. Evaluation score: 0.9

---

## Analysis Report

This chain of reasoning allows the task to be completed correctly and the goal to be reached by performing its reasoning steps. The final result obtained in step 3 is 80, which is mathematically equal to 24.

### Reasoning Step 1

This reasoning step is a logical derivation from the previous step. It selects the numbers 5 and 6 from the current set (3 5 6 8) and multiplies them to obtain the new number 30. The remaining numbers are 3 and 8. This reasoning step contributes to the correct solution because it reduces the number of elements in the set and generates a new number.

### Reasoning Step 2

This reasoning step is a logical derivation from the previous step. It selects the numbers 30 and 3 from the current set (30 3 8) and divides them to obtain the new number 10. The remaining number is 8. This reasoning step contributes to the correct solution because it further reduces the number of elements in the set and generates a new number.

### Reasoning Step 3

This reasoning step is a logical derivation from the previous step. It selects the numbers 10 and 8 from the current set (10 8) and multiplies them to obtain the new number 80. Since no numbers remain, the result of the reasoning chain is 80. This reasoning step contributes to the correct solution because it generates the final result.

Suggestion: No errors were found in the reasoning steps.

Recommendation or rejection description: All reasoning steps are recommended because they are correct and help reach the correct solution.

Confidence score: 0.9

### 2nd chain of reasoning and comments

To be continued in Table 8.

### Analysis and conclusions to consider

Avoid making similar mistakes by following recommendations based on analysis and conclusions from experience.

The following is a list of ordered reasoning steps with their evaluation scores (a higher score means the reasoning step is more likely to accomplish the task):

---

Step 1, current set: 3 5 6 8, two numbers chosen: 3 8, operation: +, new number calculated: 11, remaining numbers: 5 6, new set: 11 5 6. Evaluation score: 0.7

Step 2, current set: 11 5 6, two numbers chosen: 5 6, operation: *, new number calculated: 30, remaining numbers: 11, new set: 30 11. Evaluation score: 0.9

---

Based on the reasoning steps listed within "---" above (i.e., not the steps in the experience block), please perform one step of reasoning to generate only one possible next reasoning step.

## Generated Next Reasoning

Step 3, Current set: 30 11, Two numbers selected: 30 11, Operation: -, New number calculated: 19, Remaining numbers:, New set: 19.

Table 7: Examples of possible reflections of BoT when generating the corresponding reasoning step $z_i$.
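In Table 7, the model's own evaluation gives Step 2 a score of 0.9 even though the resulting set 30 11 can no longer produce 24 (30 + 11 = 41, 30 - 11 = 19, 30 * 11 = 330, and neither quotient is 24), and the experience even treats 80 as equal to 24. A deterministic check of whether a set can still reach 24 makes such dead ends explicit. The sketch below is an exhaustive search over pairwise operations with exact fractions; the names `can_reach` and `_reach` are our own, and the check is an illustration, not the LLM-based evaluation used by BoT.

```python
from fractions import Fraction
from itertools import combinations


def can_reach(values, target=24) -> bool:
    """Return True if the numbers in `values` can still be combined into `target`."""
    return _reach([Fraction(v) for v in values], Fraction(target))


def _reach(nums: list[Fraction], target: Fraction) -> bool:
    if len(nums) == 1:
        return nums[0] == target
    # Try every pair, every operation (both orders for - and /), then recurse.
    for i, j in combinations(range(len(nums)), 2):
        a, b = nums[i], nums[j]
        rest = [n for k, n in enumerate(nums) if k not in (i, j)]
        results = {a + b, a - b, b - a, a * b}
        if b != 0:
            results.add(a / b)
        if a != 0:
            results.add(b / a)
        if any(_reach(rest + [r], target) for r in results):
            return True
    return False


# States from Table 7: the start, after Step 1 (3 + 8 = 11), after Step 2 (5 * 6 = 30).
for state in ([3, 5, 6, 8], [11, 5, 6], [30, 11]):
    print(state, can_reach(state))
```

Under this check, the starting set 3 5 6 8 is solvable, but the set 11 5 6 left after Step 1 already is not, so the chain in Table 7 is a dead end from its first step regardless of what Step 3 does.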

## 2nd chain of reasoning and comments

The following is a chain of reasoning containing the reasoning steps presented in order:

---

### Step 1

Current set: 10 8, two numbers chosen: 10 8, operation: /, new number calculated: 1.25, remaining numbers: none, new set: 1.25. Evaluation score: 0.8

### Step 2

Current set: 10 8, two numbers selected: 10 8, operation: /, new number calculated: 1.25, remaining numbers: none, new set: 1.25. Evaluation score: 0.8

### Step 3

Current set: 10 8, two numbers selected: 10 8, operation: /, new number calculated: 1.25, remaining numbers: none, new set: 1.25. Evaluation score: 0.8

---

## Analysis Report