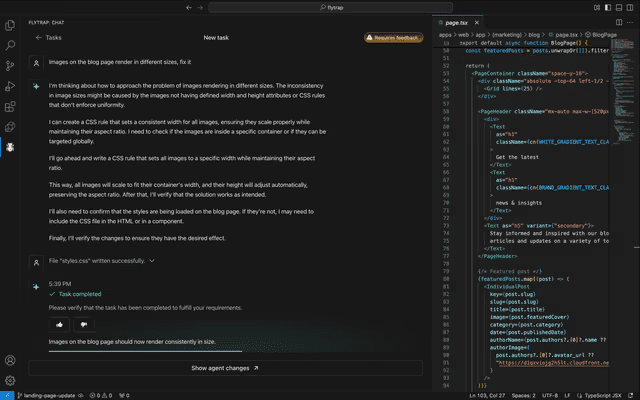

Auto-Deep-Research: Multi-Agent Collaboration to Execute Literature Queries and Generate Research Reports

General Introduction

Auto-Deep-Research is an open-source AI tool from the Data Intelligence Lab at the University of Hong Kong (HKUDS) that automates deep research tasks. It is built on the AutoAgent framework and supports a range of large language models (LLMs), including models from OpenAI, Anthropic, DeepSeek, and xAI (Grok). It can handle complex interactions with file data as well as web searches. Unlike subscription-based tools such as OpenAI's Deep Research, Auto-Deep-Research is free to use: users only need to supply their own LLM API key, and API usage is billed by the model provider. The tool has performed well on the GAIA benchmark and suits researchers, developers, and anyone who needs an efficient research solution.

Features

- Automated deep research: Automatically searches for and organizes information on a user-supplied topic, then generates a detailed report.

- Multi-model support: Compatible with a variety of large language models, so users can choose the model that fits their needs.

- File data interaction: Supports uploading and processing images, PDFs, text files, and more to enrich the research data sources.

- One-click start: No complex configuration is required; a single command gets the tool up and running.

- Web search: Combines web resources and social media data (e.g., from the X platform) to provide more comprehensive information.

- Open source and free: The full source code is available, so users can customize functionality or deploy the tool locally.

Usage Guide

Installation

Installing Auto-Deep-Research is straightforward; it relies mainly on Python and Docker. The steps are as follows:

1. Environment preparation

- Install Python: Make sure Python 3.10 or later is installed. Using conda to create a virtual environment is recommended:

conda create -n auto_deep_research python=3.10
conda activate auto_deep_research

- Install Docker: The tool runs inside a Docker container, so download and install Docker Desktop first. There is no need to pull images manually; the tool handles this automatically.
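You can also confirm both prerequisites from a script before continuing. The following is a minimal sketch and is not part of Auto-Deep-Research. It checks the interpreter version with sys.version_info. It treats Docker as available if the docker CLI is on PATH and `docker info` exits successfully; a non-zero exit usually means the daemon (e.g. Docker Desktop) is not running, but that reading is an assumption. The names python_ok and docker_ok are hypothetical.

```python
import shutil
import subprocess
import sys


def python_ok(min_version=(3, 10)):
    """Return True if the running interpreter is at least min_version."""
    return sys.version_info[:2] >= tuple(min_version)


def docker_ok(timeout=30):
    """Return True if the docker CLI is on PATH and `docker info` succeeds."""
    if shutil.which("docker") is None:
        return False  # Docker (Desktop) is not installed or not on PATH
    try:
        result = subprocess.run(
            ["docker", "info"], capture_output=True, text=True, timeout=timeout
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    # Assumption: a non-zero exit usually means the daemon is not running.
    return result.returncode == 0


if __name__ == "__main__":
    print("Python 3.10+:", python_ok())
    print("Docker daemon reachable:", docker_ok())
```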

2. Download the source code

- Clone the GitHub repository locally:

git clone https://github.com/HKUDS/Auto-Deep-Research.git
cd Auto-Deep-Research

3. Install dependencies

- Install the required Python packages by running the following command in the project directory:

pip install -e .

4. Configure the API keys

- In the project root directory, copy the template file:

cp .env.template .env

- Open the .env file in a text editor and fill in the API keys for the LLMs you plan to use, for example:

OPENAI_API_KEY=your_openai_key
DEEPSEEK_API_KEY=your_deepseek_key
XAI_API_KEY=your_xai_key

Note: You do not need to fill in every key; configure only the keys for the models you plan to use.
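To see which keys you have actually filled in, you can parse the .env file yourself. The sketch below is a simplified, hypothetical helper that assumes plain KEY=VALUE lines. It treats the example placeholder values above as unset. It does not reproduce how Auto-Deep-Research itself loads the file.

```python
from pathlib import Path

# Keys named in this article; extend the tuple for other providers you use.
KNOWN_KEYS = ("OPENAI_API_KEY", "DEEPSEEK_API_KEY", "XAI_API_KEY")
# Placeholder values from the example above count as "not filled in".
PLACEHOLDERS = {"", "your_openai_key", "your_deepseek_key", "your_xai_key"}


def parse_env(text):
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes, e.g. KEY="abc".
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def configured_keys(env):
    """Return the known keys that hold a real (non-placeholder) value."""
    return [k for k in KNOWN_KEYS if env.get(k, "") not in PLACEHOLDERS]


if __name__ == "__main__":
    env_file = Path(".env")
    if env_file.exists():
        found = configured_keys(parse_env(env_file.read_text()))
        print("Configured keys:", ", ".join(found) or "none")
    else:
        print(".env not found; copy it from .env.template first")
```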

5. Start the tool

- Run the following command to start Auto-Deep-Research:

auto deep-research

- Optionally, pass parameters such as a container name or a model:

auto deep-research --container_name myresearch --COMPLETION_MODEL grok
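If you launch the tool from your own scripts, you can build the command as an argument list rather than a shell string. This sketch uses only the --container_name and --COMPLETION_MODEL parameters shown above. The names build_command and launch are hypothetical. By default, launch performs a dry run that only prints the command.

```python
import shlex
import subprocess


def build_command(container_name=None, model=None):
    """Assemble the `auto deep-research` argv from the parameters shown above."""
    cmd = ["auto", "deep-research"]
    if container_name:
        cmd += ["--container_name", container_name]
    if model:
        cmd += ["--COMPLETION_MODEL", model]
    return cmd


def launch(container_name=None, model=None, dry_run=True):
    """Print the command; only execute it when dry_run is False."""
    cmd = build_command(container_name, model)
    print(shlex.join(cmd))
    if not dry_run:
        # The tool is interactive and prompts for a topic in this terminal.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    launch(container_name="myresearch", model="grok")
```

Passing a list to subprocess.run avoids shell quoting problems when a container name or model string comes from user input.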

Main Workflows

Automated deep research

- Enter a research topic: On startup, the tool prompts for a research topic, such as "Artificial Intelligence in Healthcare".

- Automatic execution: The tool gathers and analyzes relevant information through web searches and the selected LLM, without user intervention.

- Report generation: When finished, the results are printed to the terminal in Markdown format or saved to a file, including sources and a detailed analysis.

File Data Interaction

- Uploading files: Specify the file path on the command line, for example:

auto deep-research --file_path ./my_paper.pdf

- Processing data: The tool parses the PDF, image, or text content and incorporates it into the research.

- Combined analysis: Data from uploaded files is merged with web search results to produce more comprehensive conclusions.
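A bad path only surfaces once the tool is already running, so you may want to validate a file before passing it. This is a hypothetical pre-check. The set of accepted suffixes is an assumption based on the file types this article mentions. The tool's real list may be broader or narrower.

```python
from pathlib import Path

# Assumed set of accepted suffixes, based on the file types mentioned in
# this article (PDFs, images, text files); the tool's real list may differ.
SUPPORTED_SUFFIXES = {".pdf", ".png", ".jpg", ".jpeg", ".txt", ".md"}


def check_upload(path):
    """Return the resolved path if it is an existing file with an expected suffix."""
    p = Path(path).expanduser()
    if not p.is_file():
        raise FileNotFoundError(f"No such file: {p}")
    if p.suffix.lower() not in SUPPORTED_SUFFIXES:
        raise ValueError(f"Unexpected file type: {p.suffix or '(no suffix)'}")
    return p.resolve()


def upload_command(path):
    """Build the --file_path command from the example above after validating."""
    return ["auto", "deep-research", "--file_path", str(check_upload(path))]


if __name__ == "__main__":
    try:
        print(" ".join(upload_command("./my_paper.pdf")))
    except (FileNotFoundError, ValueError) as err:
        print("Not uploading:", err)
```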

Selecting a Large Language Model

- Supported models: Models from OpenAI, xAI (Grok), DeepSeek, and others are supported. Model names follow LiteLLM conventions; see the LiteLLM documentation for the exact names.

- Specifying a model: Add the parameter to the startup command, for example:

auto deep-research --COMPLETION_MODEL deepseek

- Testing: Try different models and adjust your choice based on output quality and API responsiveness.
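Each provider needs its own key in .env, so a mismatch between the chosen model and the configured keys is an easy mistake. The sketch below assumes LiteLLM-style names of the form provider/model, with a fallback to the bare names used in this article. It also relies on a hand-written mapping from provider to environment variable. Both the mapping and the helper names are illustrative and do not come from the project. By default it checks os.environ, so it only sees keys that have been exported or loaded into the environment.

```python
import os

# Hypothetical mapping from provider names to the .env variables in this
# article; adjust it to the providers and names you actually use.
REQUIRED_KEY = {
    "openai": "OPENAI_API_KEY",
    "deepseek": "DEEPSEEK_API_KEY",
    "grok": "XAI_API_KEY",
    "xai": "XAI_API_KEY",
}


def provider_of(model):
    """'deepseek/deepseek-chat' -> 'deepseek'; bare names like 'grok' pass through."""
    return model.split("/", 1)[0].strip().lower()


def missing_key(model, env=None):
    """Return the variable the model needs if it is unset, else None."""
    env = os.environ if env is None else env
    key = REQUIRED_KEY.get(provider_of(model))
    if key is None:
        return None  # unknown provider: nothing to check here
    return None if env.get(key) else key


if __name__ == "__main__":
    model = "deepseek"
    key = missing_key(model)
    print(f"Set {key} before using {model}" if key else f"{model}: key found")
```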

Key Features

One-click start

- There is no need to configure a Docker image or complex parameters by hand. Simply run auto deep-research, and the tool automatically pulls the required environment and launches it.

- If customization is needed, the --container_name parameter lets you name containers, making it easy to manage multiple instances.
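When several instances run side by side, deriving each container name from its research topic keeps them easy to tell apart. This sketch is a hypothetical helper. It relies only on Docker's rule that container names must match [a-zA-Z0-9][a-zA-Z0-9_.-]+. The "research" prefix is an arbitrary choice.

```python
import re

# Docker container names must match [a-zA-Z0-9][a-zA-Z0-9_.-]+
VALID_NAME = re.compile(r"[a-zA-Z0-9][a-zA-Z0-9_.-]+")
INVALID_RUN = re.compile(r"[^a-zA-Z0-9_.-]+")


def container_name_for(topic, prefix="research"):
    """Turn a research topic into a readable, Docker-valid container name."""
    # Collapse each run of disallowed characters into a single hyphen.
    slug = INVALID_RUN.sub("-", topic.strip().lower()).strip("-._")
    name = f"{prefix}-{slug}" if slug else prefix
    if not VALID_NAME.fullmatch(name):
        raise ValueError(f"cannot build a valid name from {topic!r}")
    return name


def commands_for(topics):
    """One launch command per topic, each with its own --container_name."""
    return [
        ["auto", "deep-research", "--container_name", container_name_for(t)]
        for t in topics
    ]


if __name__ == "__main__":
    for cmd in commands_for(["AI in Healthcare", "LLM agents / 2025"]):
        print(" ".join(cmd))
```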

Web Search and Social Media Integration

- The tool has a built-in web search function that automatically crawls web content. To include data from the X platform, mention relevant keywords in the research topic, and the tool will try to find related posts.

- Example: For the topic "latest AI research trends", the results may include links to discussions by X users and to tech blogs.

Caveats

- API key security: Do not upload the .env file to a public repository, or your keys will be exposed.

- Network environment: Make sure Docker and your network connection are working properly; problems with either can break image pulls or web searches.

- Performance: If local hardware is limited, use a cloud-hosted LLM rather than running a large model locally, which can cause lag.
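To check that .env will not be committed by accident, you can ask Git directly with git check-ignore, which applies the repository's full ignore rules. Below is a minimal sketch. It assumes Git is installed and the project directory is a Git checkout; env_is_ignored is a hypothetical name. By default, git check-ignore does not report an already-tracked file as ignored, so a False result can also mean .env has been committed and should be removed from the index.

```python
import shutil
import subprocess


def env_is_ignored(path=".env", repo="."):
    """Return True if Git's ignore rules in `repo` cover `path`."""
    if shutil.which("git") is None:
        return False  # cannot verify without Git; treat as not ignored
    result = subprocess.run(
        ["git", "-C", repo, "check-ignore", "-q", path],
        capture_output=True,
    )
    # git check-ignore exits 0 if the path is ignored, 1 if it is not,
    # and 128 on errors such as "not a git repository".
    return result.returncode == 0


if __name__ == "__main__":
    if env_is_ignored():
        print(".env is ignored by Git")
    else:
        print("Warning: .env is not ignored (or is already tracked)")
```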

With these steps, users can quickly get started with Auto-Deep-Research, a tool that efficiently supports both academic research and technical exploration.

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
