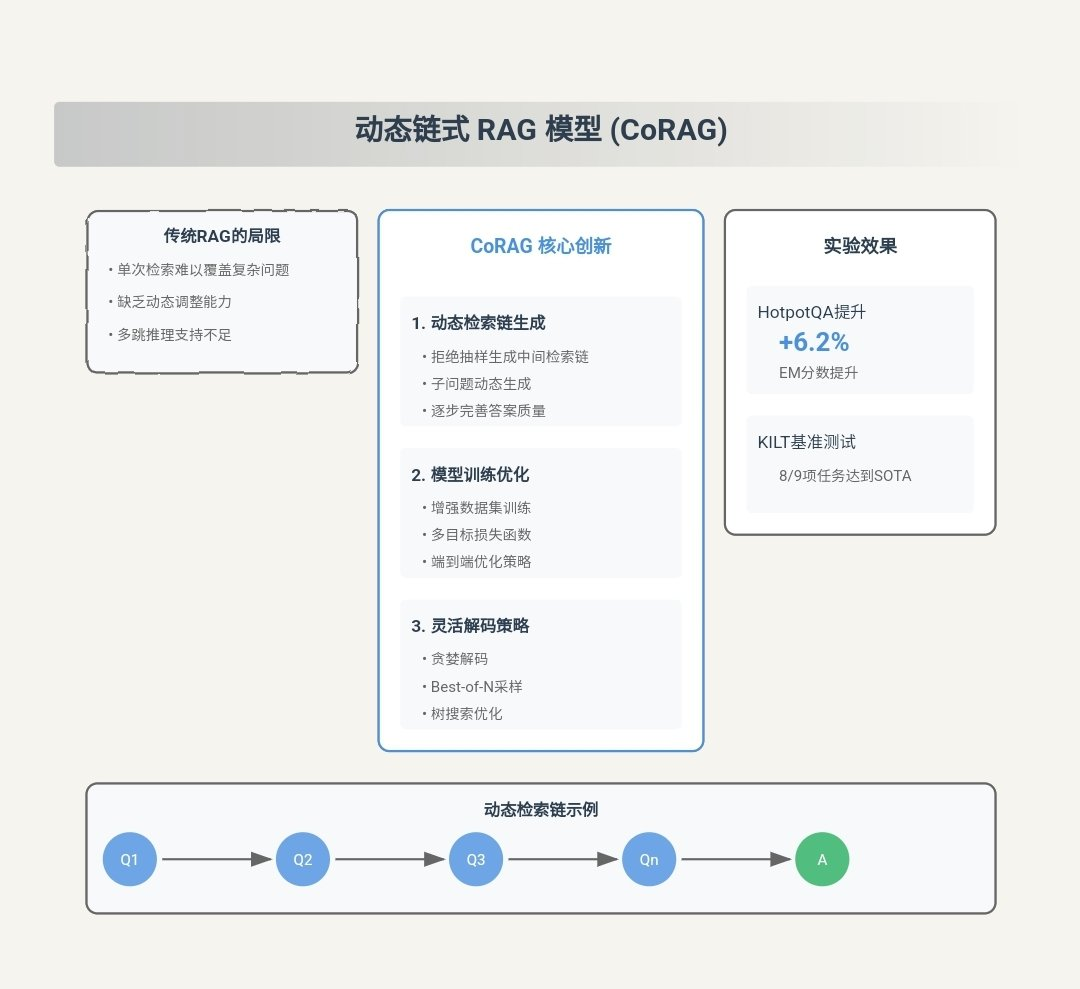

CoRAG: Dynamic chained RAG modeling using MCTS (Monte Carlo Tree Search)

Summary of the main contributions of CORAG: CORAG (Cost-Constrained Retrieval Optimization for Retrieval-Augmented Generation) is a...

Float: a cross-language intelligent search engine for retrieving knowledge written in other languages using your native language

Comprehensive Introduction: FloatSearch AI is an AI-based cross-language intelligent search engine designed to give users a more accurate and efficient search experience. It understands natural-language queries and returns relevant, accurate answers based on semantic analysis. FloatS...

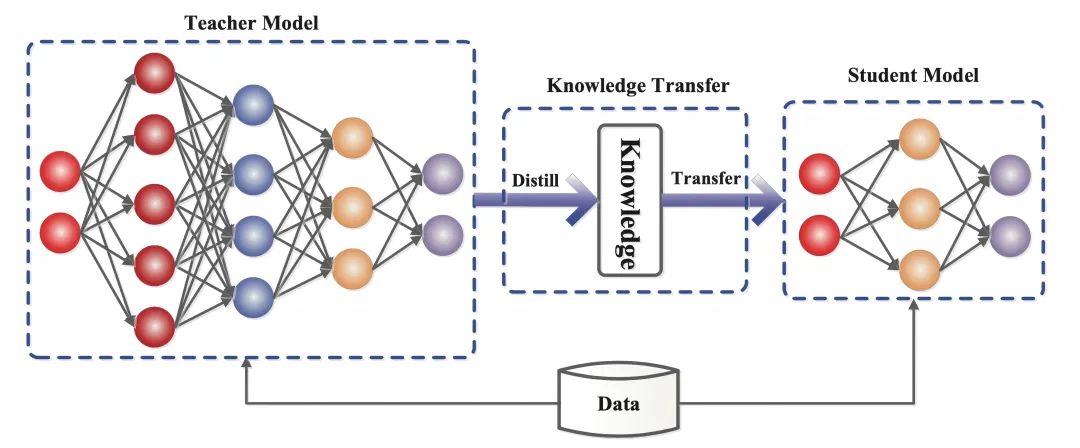

Knowledge distillation explained in one article: letting a "small model" have "big wisdom" too

Knowledge distillation is a machine learning technique that transfers knowledge from a large pre-trained model (the "teacher model") to a smaller "student model". Distillation can help us build lighter-weight generative models for intelligent conversation, content creation and other domains. Recently ...
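To make the teacher/student idea concrete, here is a minimal sketch of the commonly used soft-target distillation loss, written in plain Python so it runs without a deep-learning framework. The student is trained to match the teacher's temperature-softened output distribution (a KL-divergence term) while still fitting the true label (a cross-entropy term). The function names, the temperature of 2.0 and the weighting `alpha` of 0.5 are illustrative assumptions, not details taken from the article.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for discrete distributions; terms where p_i == 0 contribute 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    true_label: int,
    temperature: float = 2.0,  # assumed value, for illustration only
    alpha: float = 0.5,  # assumed weight between soft and hard terms
) -> float:
    # Soft-target term: match the teacher's softened distribution.
    # Multiplying by T^2 keeps its gradient scale comparable as T changes.
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student_soft) * temperature ** 2
    # Hard-label term: ordinary cross-entropy against the true class at T = 1.
    p_student = softmax(student_logits, 1.0)
    hard = -math.log(p_student[true_label])
    return alpha * soft + (1 - alpha) * hard


teacher = [4.0, 1.0, 0.5]
print(distillation_loss([3.5, 1.0, 0.6], teacher, true_label=0))  # student close to teacher
print(distillation_loss([0.5, 1.0, 4.0], teacher, true_label=0))  # student far from teacher
```

The second call yields a noticeably larger loss, because the student both disagrees with the teacher's soft distribution and assigns low probability to the true class.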

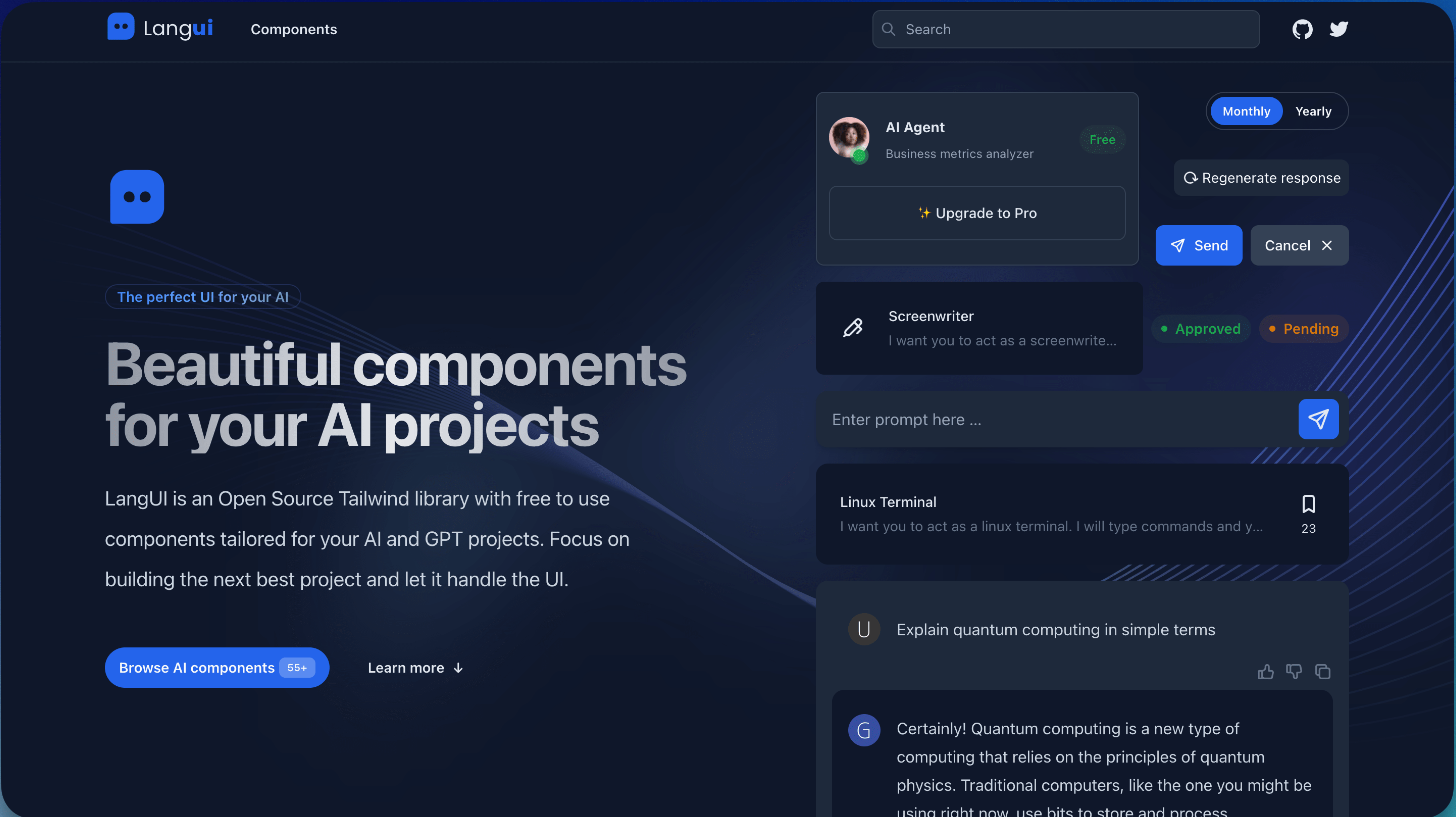

Langui: an open-source library of AI user interface components

Comprehensive Introduction: LangbaseInc's Langui is an open-source user interface component library designed for generative AI and Large Language Model (LLM) projects. The library is based on Tailwind CSS and provides a collection of pre-built UI components to help developers quickly construct...

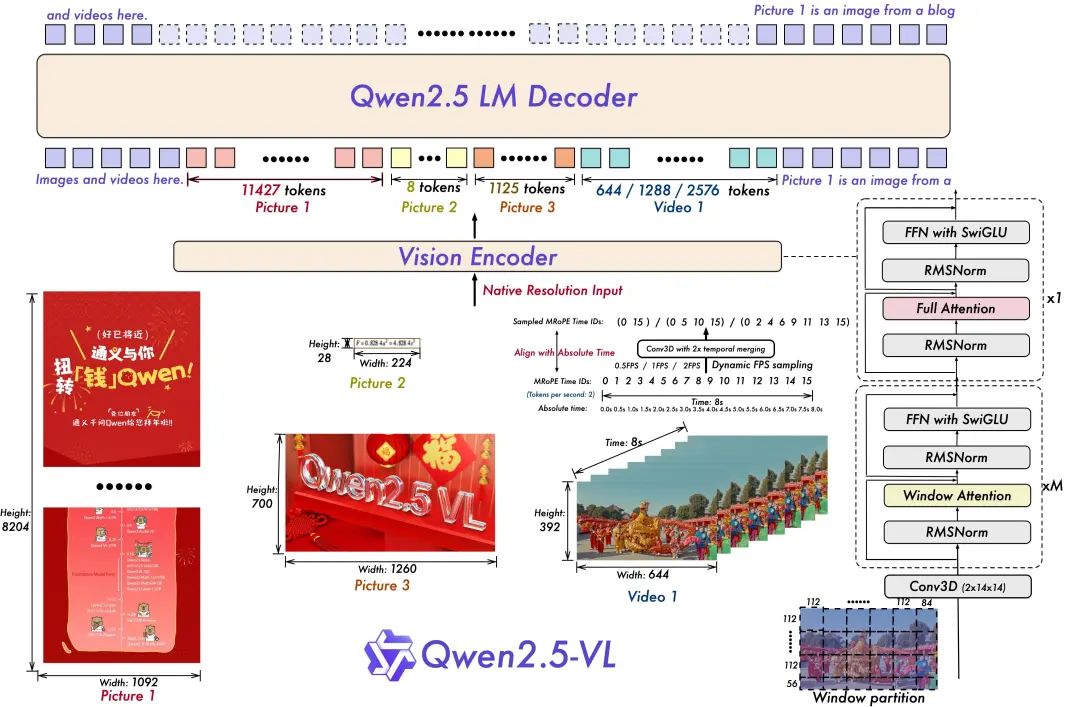

Qwen2.5-VL Released: Supports Long Video Understanding, Visual Localization and Structured Output; Open-Source and Fine-Tunable

1. Introduction to the Model: In the five months since the release of Qwen2-VL, numerous developers have built new models on top of the Qwen2-VL visual language model and provided valuable feedback to the Qwen team. During this time, the Qwen team has focused on building more useful visual language models...

How to calculate the number of parameters for a large model, and what do 7B, 13B and 65B stand for?

Recently, many people working on large-model training and inference have been discussing the relationship between a model's parameter count and its size. For example, the well-known LLaMA family of large models includes LLaMA-7B, LLaMA-13B, LLaMA-33B and LLaMA...
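As a worked example of where a figure like "7B" comes from, the sketch below counts the weights of a LLaMA-style decoder-only Transformer. It assumes the publicly released LLaMA-7B configuration (hidden size 4096, 32 layers, feed-forward size 11008, vocabulary of 32,000 tokens), untied input and output embeddings, no bias terms, a SwiGLU feed-forward block with three projection matrices, and two RMSNorm weight vectors per layer. `DecoderConfig`, `count_params` and `weight_memory_gb` are hypothetical helper names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class DecoderConfig:
    """Hypothetical container for the hyperparameters that determine parameter count."""
    vocab_size: int
    hidden_size: int
    num_layers: int
    intermediate_size: int
    tied_embeddings: bool = False


def count_params(cfg: DecoderConfig) -> int:
    d, v = cfg.hidden_size, cfg.vocab_size
    embedding = v * d  # token embedding table
    attention = 4 * d * d  # Q, K, V and output projections (assumes no biases)
    mlp = 3 * d * cfg.intermediate_size  # gate, up and down projections (SwiGLU)
    norms = 2 * d  # two RMSNorm weight vectors per layer
    per_layer = attention + mlp + norms
    final_norm = d
    lm_head = 0 if cfg.tied_embeddings else v * d  # output projection to vocabulary
    return embedding + cfg.num_layers * per_layer + final_norm + lm_head


def weight_memory_gb(num_params: int, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone; 2 bytes per parameter corresponds to fp16/bf16."""
    return num_params * bytes_per_param / 1e9


llama_7b = DecoderConfig(vocab_size=32000, hidden_size=4096, num_layers=32, intermediate_size=11008)
n = count_params(llama_7b)
print(n)  # 6738415616
print(f"{weight_memory_gb(n):.1f} GB in fp16")  # 13.5 GB in fp16
```

The total of roughly 6.74 billion parameters is what gets rounded to "7B" in the model name. Under these assumptions, storing the weights at 2 bytes per parameter takes about 13.5 GB, before counting activations or other runtime memory.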

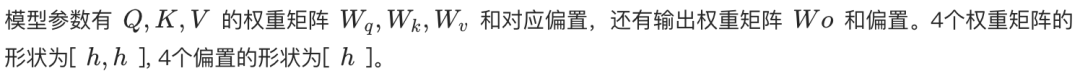

DeepSeek Releases Unified Multimodal Understanding and Generative Models: from JanusFlow to Janus-Pro

JanusFlow Quick Read: The DeepSeek team is back with a new model. In the early morning of the 28th, it launched the innovative multimodal framework Janus-Pro, a unified model that can handle both multimodal understanding and generation tasks. The model is based on the DeepSeek-LLM...

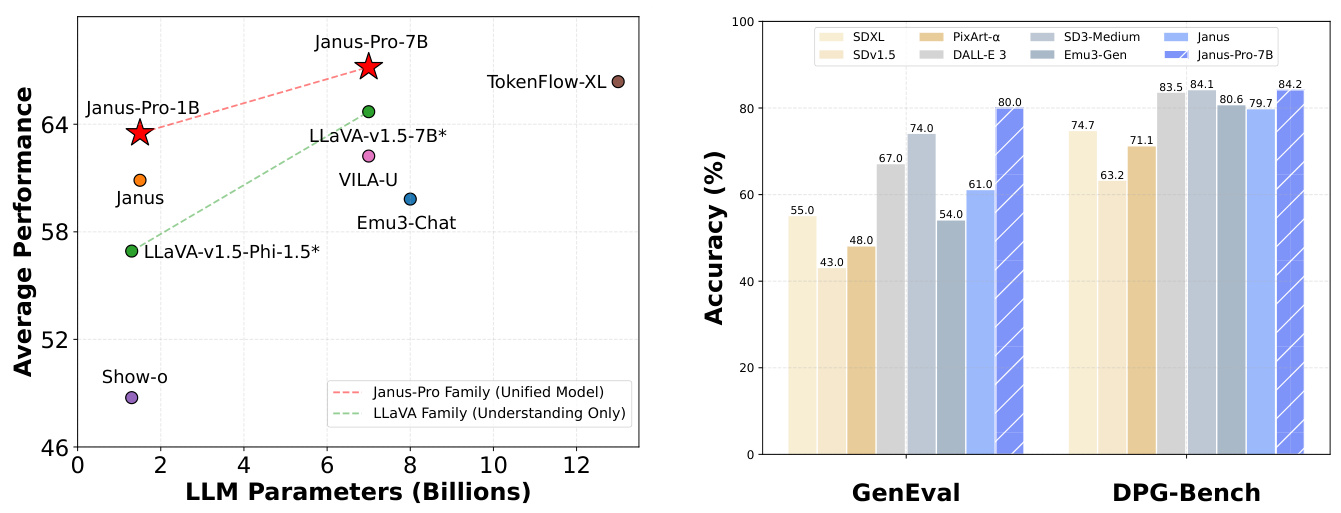

Baichuan Intelligence Releases Baichuan-Omni-1.5 Omnimodal Large Model, Surpassing GPT-4o Mini on Several Benchmarks

As the year draws to a close, China's large-model field is once again delivering good news. Baichuan Intelligence has recently released a series of large-model products: following the all-scenario deep reasoning model Baichuan-M1-preview and the medically enhanced open-source model Baichuan-M1-14B, it has now launched the omnimodal...

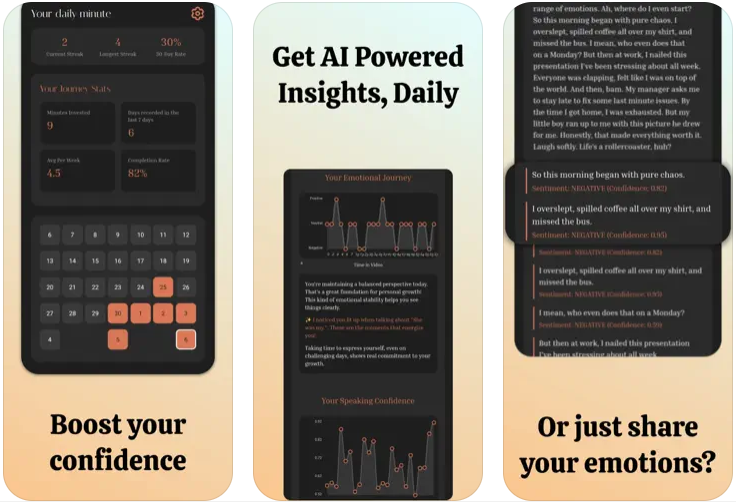

Your Daily Minute: Improving Emotional Awareness and Self-Reflection Through AI Video Journaling

General Description: Your Daily Minute is an innovative video diary app that uses AI technology to help users record and understand their daily emotions. Users can record a one-minute video reflection each day, and the app automatically transcribes and analyzes its emotional content to provide instant insight into their emotional state. The ...

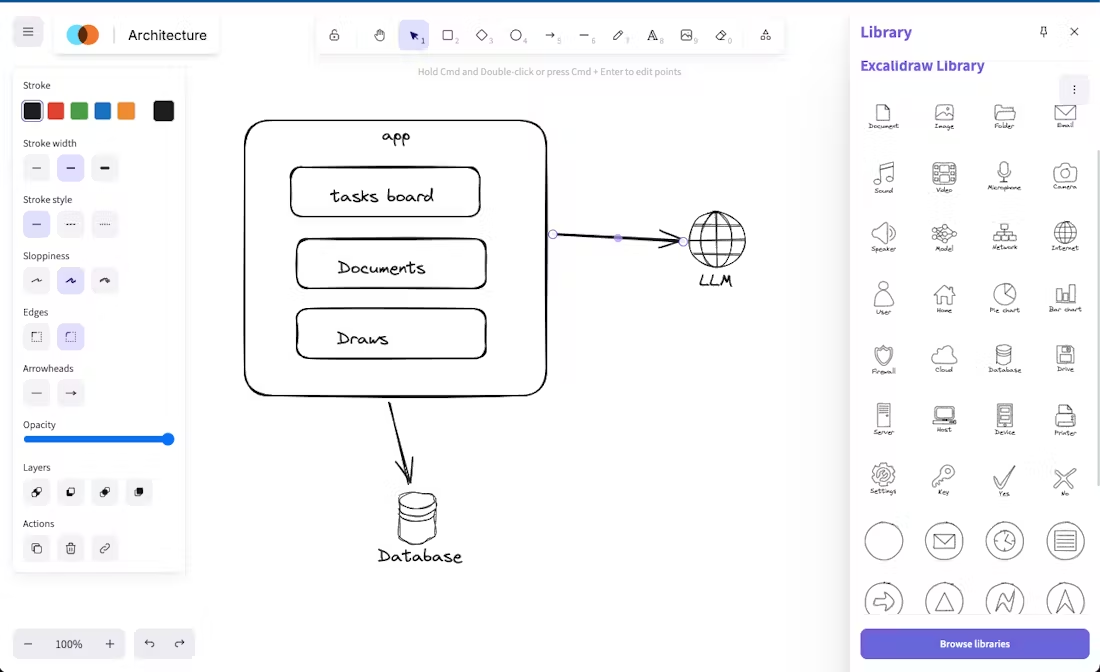

Taskek: AI-powered team collaboration and project management tool

General Description: Taskek is an AI-driven productivity tool that integrates Trello, Google Docs and Miro functionality for all types of work environments, from office towers to home offices. It allows teams to start with simple drawings and quickly turn them into specific tasks, raising...