Audio2Face: NVIDIA's open-source AI model for generating 3D facial animation

What is Audio2Face?

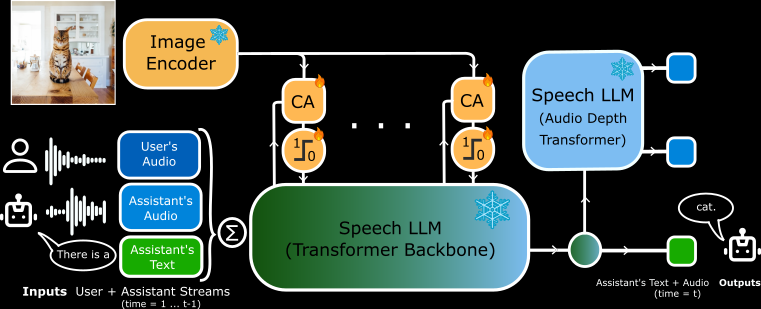

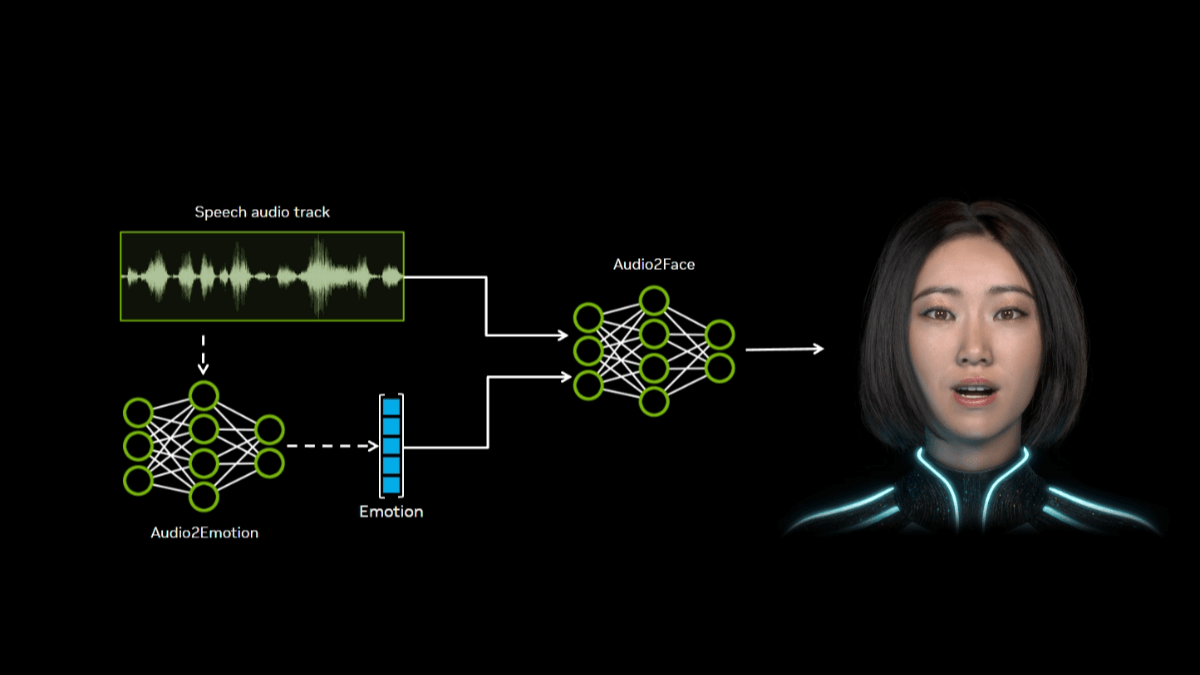

Audio2Face is NVIDIA's open-source AI tool that turns audio input into realistic 3D facial animation. By analyzing speech features in the audio, such as phonemes and intonation, it generates accurate lip sync and subtle emotional expressions, giving virtual characters lifelike faces. Developers can access the Audio2Face models and SDK free of charge and integrate them into games, 3D applications, and other projects to produce high-fidelity character animation quickly.

NVIDIA has also open-sourced the Audio2Face training framework, which lets developers fine-tune and customize the models on their own data. For example, a developer can train a model to match a particular character's acting style, language, or emotional range. Audio2Face also provides plug-ins for Autodesk Maya and Unreal Engine 5, so users can apply the technology directly in these mainstream 3D tools.

Features of Audio2Face

- Audio-driven animation: Automatically generates realistic 3D facial animation from audio input, including lip sync and changes in expression.

- Real-time performance: Accepts streaming audio input and generates facial animation on the fly, making it suitable for interactive applications and real-time rendering.

- Multi-language support: Works with audio in multiple languages and generates matching facial expressions and mouth shapes for each, so it can be used across different language environments.

- Emotional expression: Infers emotion from the intonation and other emotional cues in the audio and generates matching expressions, making character performances more vivid and natural.

- Model customization: Provides a training framework that lets users fine-tune and customize the model on their own data to fit a particular character or scenario.

- Broad integration: Supports 3D software and platforms such as Autodesk Maya and Unreal Engine 5, making it easy for developers to use the technology in different environments.

Audio2Face's core strengths

- Efficient production: Generates high-quality facial animation quickly, saving much of the time and effort spent on manual frame-by-frame adjustment in traditional animation pipelines.

- Natural, realistic results: The generated animation is smooth and natural, with precise lip sync and nuanced emotional expression, making virtual characters more believable and engaging.

- Easy integration: Provides SDKs and plug-ins that let developers integrate it into various 3D software and game engines without complex setup or development.

- Powerful customization: With the training framework, users can customize the model to animate different characters, styles, and languages.

- Real-time interaction: Supports real-time audio input and animation generation for interactive applications that need immediate feedback, such as virtual reality and real-time games.

- Lower production costs: Open-source models and tools lower the barrier to entry and reduce reliance on professional animators, cutting production costs.

- Multi-platform support: Compatible with a range of operating systems and hardware platforms, which makes the technology more versatile and widely applicable.

Where can I find Audio2Face?

- Announcement on the NVIDIA developer blog: https://developer.nvidia.com/blog/nvidia-open-sources-audio2face-animation-model/

- GitHub repository: https://github.com/NVIDIA/Audio2Face-3D

Who is Audio2Face for?

- Game developers: Can quickly generate facial animation for in-game characters, enhancing character expressiveness and player immersion.

- Film and video animators: Can produce pre-rendered content or real-time animation more efficiently, with more natural expressions and lip sync.

- Virtual reality (VR) and augmented reality (AR) developers: Can make user experiences more realistic and interactive by giving virtual characters vivid expressions.

- 3D artists and designers: Can quickly generate facial animation prototypes and explore different expressions and emotional effects while creating.

- Technical directors and heads of animation technology: Can evaluate the technology and integrate it into existing production pipelines to strengthen their team's animation capabilities.

- Educators and students: Can use it as a teaching tool for learning 3D animation and AI techniques, fostering creativity and hands-on skills.

© Copyright notice

This article is copyrighted by AI Sharing Circle. All rights reserved; please do not reproduce it without permission.
