Snowflake Releases Arctic Embed 2.0, a Multilingual Embedding Model for High-Quality Chinese Retrieval

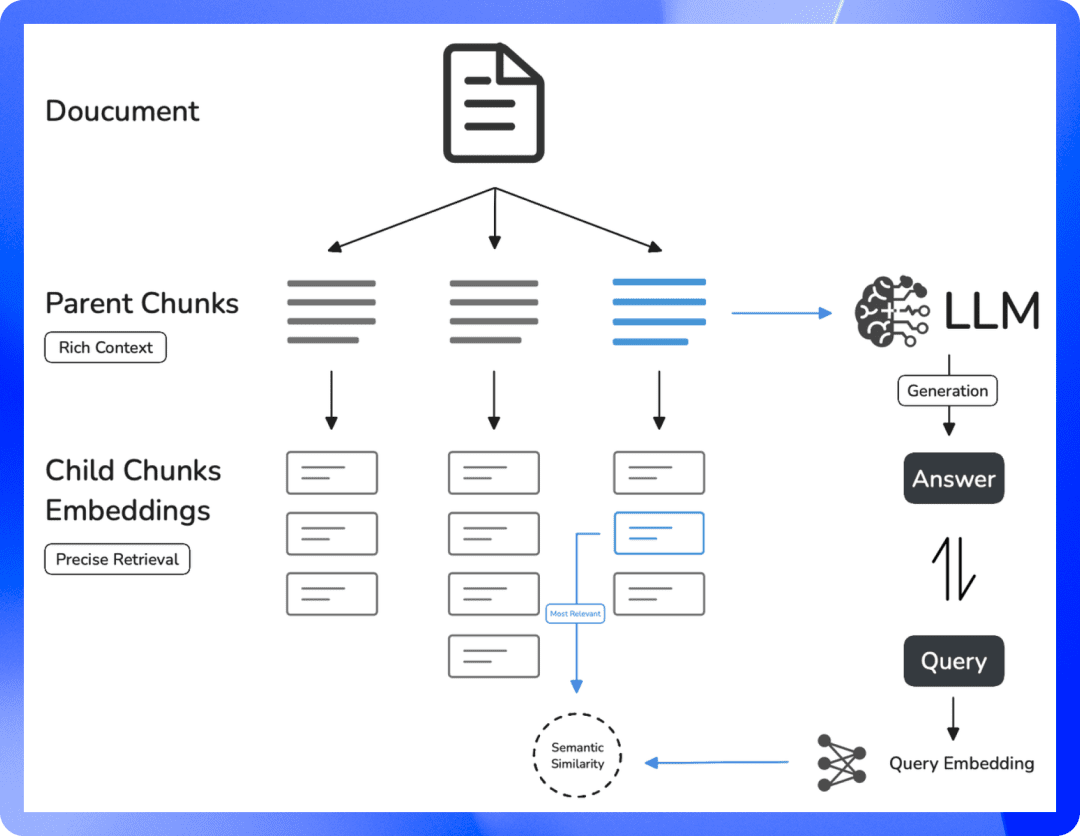

Snowflake is pleased to announce the release of Arctic Embed L 2.0 and Arctic Embed M 2.0, the next iteration of our cutting-edge embedding models, which now support multilingual search. Our previous releases have been well received by customers, partners, and the open source community and have been downloaded millions of times, yet we kept receiving one request: can you make this model multilingual? Arctic Embed 2.0 builds on the strong foundation of our previous releases, adding multilingual support without sacrificing English-language performance or scalability, to meet the needs of a broader user community that spans a wide range of languages and applications.

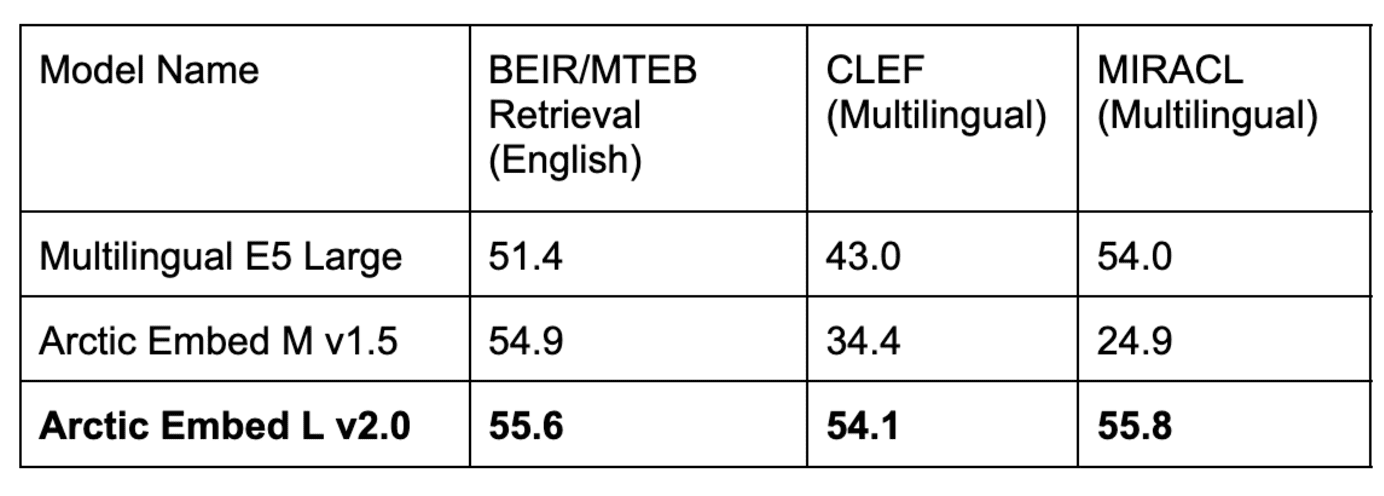

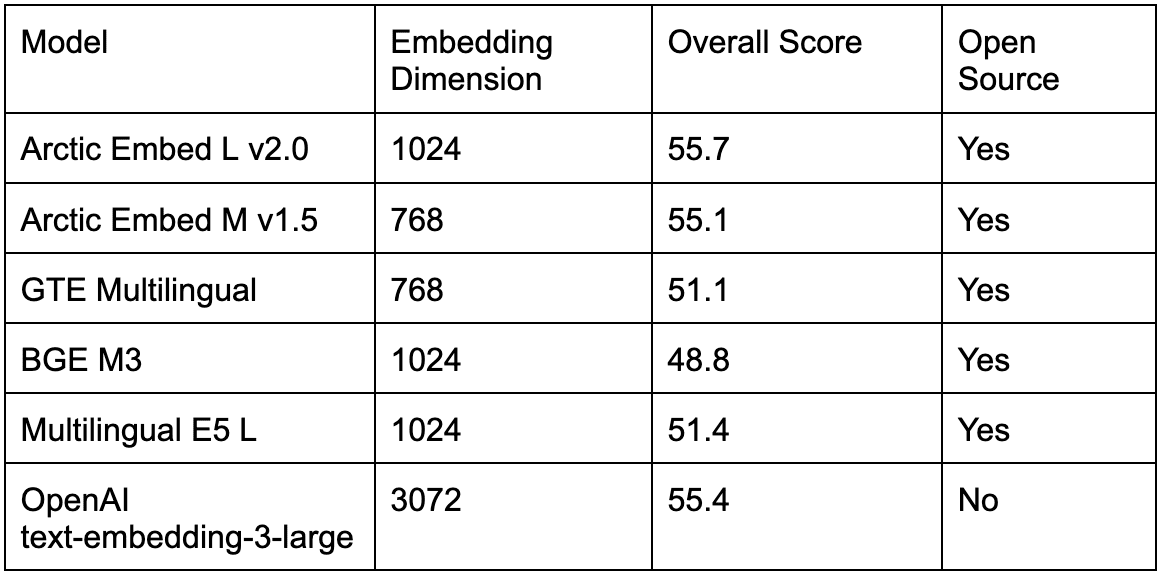

Fig. 1. Single-vector dense retrieval performance of open-source multilingual embedding models with fewer than 1 billion parameters. Scores are the average nDCG@10 on MTEB Retrieval and on the CLEF (ELRA, 2006) subset covering English, French, Spanish, Italian, and German.

Multilingualism without compromise

In this Arctic Embed 2.0 release, we are offering two variants. The medium variant, focused on inference efficiency, is built on top of Alibaba's GTE-multilingual and has 305 million parameters (113 million of them non-embedding). The large variant, focused on retrieval quality, is built on top of a long-context variant of Facebook's XLM-R Large and has 568 million parameters (303 million of them non-embedding). Both sizes support a context length of up to 8,192 tokens. In building Arctic Embed 2.0, we recognized a challenge faced by many existing multilingual models: optimizing for multiple languages often ends up sacrificing English retrieval quality. This has led many models in the field to ship two variants of each release, one English and one multilingual. Arctic Embed 2.0 models are different. They offer top performance in non-English languages such as German, Spanish, and French, while also outperforming their English-only predecessor, Arctic Embed M 1.5, in English retrieval.

By carefully balancing the need for multilingualism with Snowflake's commitment to excellence in English-language retrieval, we have built Arctic Embed 2.0 to be a general-purpose workhorse model for a wide range of global use cases. Throughout this article, all quality scores refer to average NDCG@10 across tasks unless otherwise noted.
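Because every score in this article is an average NDCG@10, a small sketch of the metric may help when reading the tables. This is a minimal illustration assuming the linear-gain form (gain equals the graded relevance, discounted by log2(rank + 1)); some toolkits use 2^rel - 1 gains instead, which gives the same result for binary relevance. The function names are hypothetical and not taken from any benchmark's code.

```python
import math

def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the first k ranked results.

    relevances[i] is the graded relevance of the document at rank i + 1,
    so the discount for position i is log2(i + 2).
    """
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))

def ndcg_at_k(ranked_relevances, all_relevances, k=10):
    """NDCG@k: DCG of the system ranking divided by DCG of the ideal ranking.

    all_relevances holds the judged relevance of every relevant document for
    the query, including ones the system failed to retrieve.
    """
    idcg = dcg_at_k(sorted(all_relevances, reverse=True), k)
    return dcg_at_k(ranked_relevances, k) / idcg if idcg > 0 else 0.0

def mean_ndcg_at_10(queries):
    """Average NDCG@10 over (ranked_relevances, all_relevances) pairs, one per query."""
    scores = [ndcg_at_k(ranked, judged, 10) for ranked, judged in queries]
    return sum(scores) / len(scores)
```

A perfect ranking scores 1.0 (for example `ndcg_at_k([1, 1], [1, 1])`), and a benchmark's reported number is the mean of these per-query values.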

Table 1. Our Arctic Embed L 2.0 model achieves high scores on the popular English MTEB Retrieval benchmark as well as high retrieval quality on several multilingual benchmarks. Previous iterations of Arctic Embed performed well in English but poorly on multilingual tasks, while popular open-source multilingual models show degraded English performance. All model and dataset scores are average NDCG@10. CLEF and MIRACL scores are averaged over German (DE), English (EN), Spanish (ES), French (FR), and Italian (IT).

Arctic Embed 2.0's Diverse and Powerful Feature Set

- Enterprise-class throughput and efficiency: The Arctic Embed 2.0 models are built for the needs of large organizations. Even our "large" model has well under a billion parameters and delivers fast, high-throughput embedding. In internal testing, it easily processes over 100 documents per second (on average) on NVIDIA A10 GPUs and achieves query-embedding latency under 10 milliseconds, enabling practical deployment on affordable hardware.

- Uncompromising English and non-English retrieval quality: Despite their compact size, both Arctic Embed 2.0 models achieve strong NDCG@10 scores on a variety of English and non-English benchmark datasets, demonstrating good generalization even to languages not covered by the training recipe. These benchmark scores make Arctic Embed L 2.0 a leader among cutting-edge retrieval models.

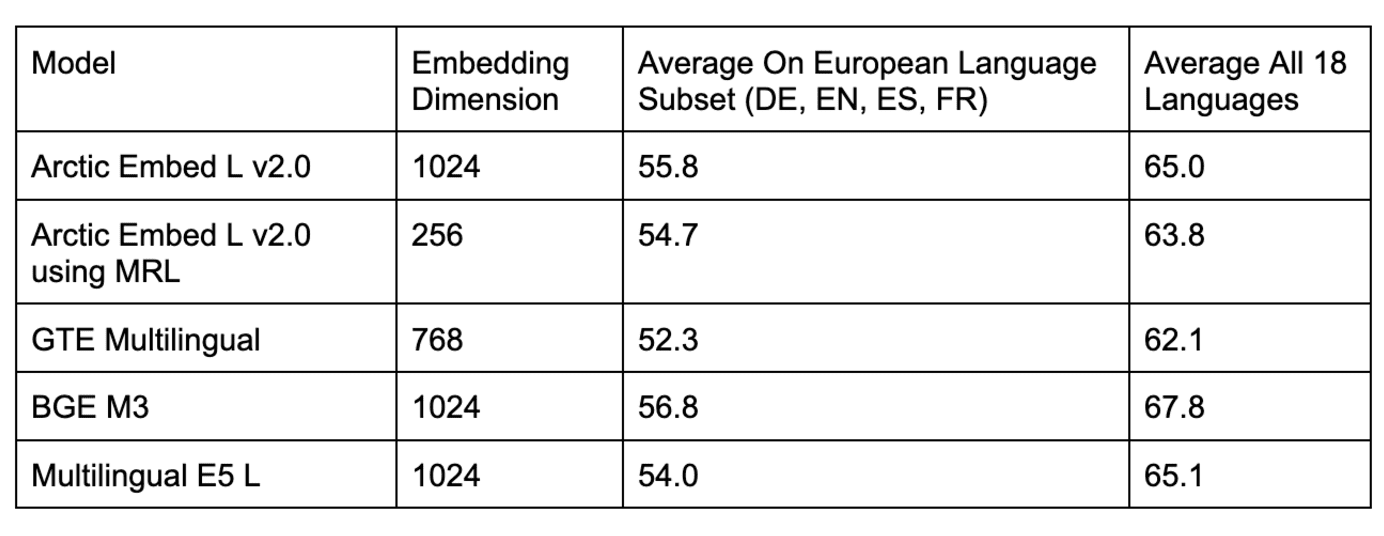

- Scalable retrieval via Matryoshka Representation Learning (MRL): Arctic Embed 2.0 includes the same quantization-friendly MRL functionality introduced in Arctic Embed 1.5, allowing users to cut costs and shrink index size when searching large datasets. With both model sizes, users can achieve high-quality retrieval using only 128 bytes per vector (96 times smaller than an uncompressed embedding from OpenAI's popular text-embedding-3-large model ^1^). As with Arctic Embed 1.5, the Arctic Embed 2.0 models outperform several of their MRL-enabled peers when compressed, with significantly less quality degradation and higher benchmark scores.

- Truly open source: The Arctic Embed 2.0 model is released under the generous Apache 2.0 license.
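The MRL-plus-quantization idea behind the 128-bytes-per-vector figure can be sketched in plain Python: keep the leading 256 coordinates, re-normalize them, map each coordinate to a signed 4-bit code, and pack two codes per byte. This is a minimal sketch, not Snowflake's published recipe: it assumes a uniform scalar quantizer with a hypothetical clipping range (`clip=0.18` is an illustrative value, not a documented setting), and all function names are hypothetical.

```python
import math

def mrl_truncate(vec, dims=256):
    """Keep the first `dims` coordinates and re-normalize to unit length.

    MRL training packs the most information into the leading coordinates,
    so a prefix of the vector is itself a usable, smaller embedding.
    """
    head = list(vec[:dims])
    norm = math.sqrt(sum(x * x for x in head))
    return [x / norm for x in head] if norm > 0 else head

def quantize_int4(vec, clip=0.18):
    """Uniformly map coordinates in [-clip, clip] to signed 4-bit codes in [-8, 7].

    `clip` is a hypothetical calibration value; values outside it saturate.
    """
    step = clip / 7
    return [max(-8, min(7, round(x / step))) for x in vec]

def pack_int4(codes):
    """Pack signed 4-bit codes two per byte (low nibble first, two's complement)."""
    codes = list(codes)
    if len(codes) % 2:
        codes.append(0)  # pad to an even count
    return bytes((lo & 0x0F) | ((hi & 0x0F) << 4)
                 for lo, hi in zip(codes[0::2], codes[1::2]))

def unpack_int4(data, n):
    """Inverse of pack_int4: recover the first n signed 4-bit codes."""
    codes = []
    for byte in data:
        for nibble in (byte & 0x0F, byte >> 4):
            codes.append(nibble - 16 if nibble >= 8 else nibble)
    return codes[:n]
```

For any input with at least 256 dimensions, `pack_int4(quantize_int4(mrl_truncate(vec)))` yields 256 codes × 4 bits = 128 bytes, which is the storage footprint the bullet above describes.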

Open Source Flexibility Meets Enterprise-Class Reliability

Like their predecessors, Arctic Embed 2.0 models are released under the permissive Apache 2.0 license, enabling organizations to modify, deploy, and extend them under familiar terms. The models work out of the box, support applications across industries through reliable multilingual embeddings, and generalize well.

Clément Delangue, CEO of Hugging Face, said, "Multilingual embedding models are critical to enabling people around the globe, not just English speakers, to become AI builders. By releasing these state-of-the-art models as open source on Hugging Face, Snowflake is making a huge contribution to AI and the world."

In fact, especially among open-source options, the Arctic Embed 2.0 family deserves special attention for the generalization it shows on multilingual retrieval benchmarks. Using the licensed 2000-2003 Cross-Language Evaluation Forum (CLEF) test suite, our team measured the out-of-domain retrieval quality of various open-source models and found an unfortunate trend: their CLEF performance was poor compared with the scores they obtained on the in-domain MIRACL evaluation set. We hypothesize that some earlier open-source model developers may have inadvertently over-tuned their training recipes toward improving MIRACL performance, and thus may have overfit to the MIRACL training data. Stay tuned for an upcoming technical report on how the Arctic Embed 2.0 models were trained and what we learned in the process.

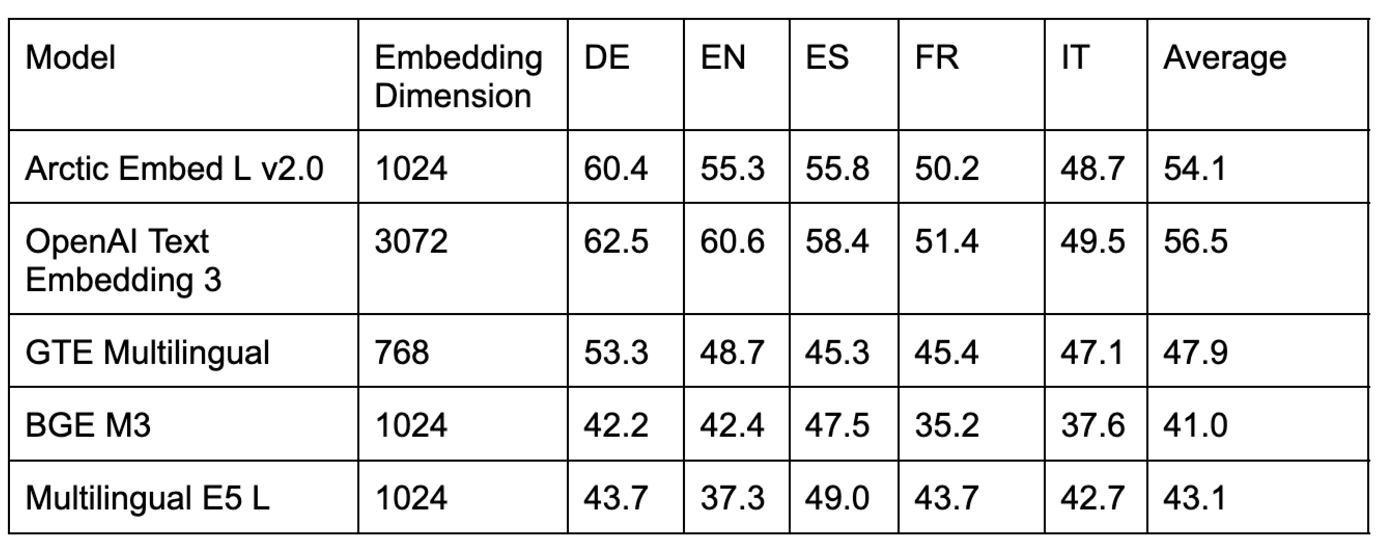

Table 2. Comparison of multilingual retrieval models on several datasets from the out-of-domain CLEF benchmark.

Table 3. Comparison of several open-source multilingual retrieval models on the in-domain MIRACL benchmark.

As shown in Tables 2 and 3, several popular open-source models score comparably to Arctic Embed L 2.0 on the in-domain MIRACL evaluation but perform poorly on the out-of-domain CLEF evaluation. We also benchmarked popular closed-source models (e.g., OpenAI's text-embedding-3-large) and found that Arctic Embed L 2.0 performs on par with leading proprietary models.

As shown in Table 4, existing open-source multilingual models also score worse than Arctic Embed L 2.0 on the popular English MTEB Retrieval benchmark, forcing users who want to support multiple languages to choose between lower English retrieval quality and the added operational complexity of running a second model for English-only retrieval. With the release of Arctic Embed 2.0, practitioners can switch to a single open-source model without sacrificing English retrieval quality.

Table 4. Comparison of several top open-source and closed-source multilingual retrieval models on the in-domain English MTEB Retrieval benchmark.
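With a single multilingual model, English and non-English documents can share one index, and every query goes through the same single-vector dense retrieval step: rank documents by cosine similarity to the query embedding. The sketch below shows only that ranking step, assuming embeddings have already been computed; the toy two-dimensional vectors stand in for real model output, and the function names are hypothetical.

```python
import heapq
import math

def normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n > 0 else list(v)

def top_k(query_vec, doc_vecs, k=10):
    """Return up to k (cosine_score, doc_index) pairs, highest score first.

    One index serves every language because one model embeds all of them.
    """
    q = normalize(query_vec)
    scored = (
        (sum(a * b for a, b in zip(q, normalize(d))), i)
        for i, d in enumerate(doc_vecs)
    )
    return heapq.nlargest(k, scored)
```

A production system would replace the linear scan with an approximate nearest-neighbor index, but the scoring function stays the same.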

Compression and efficiency without trade-offs

Snowflake continues to prioritize efficiency and scale in its embedding model design. Arctic Embed L 2.0 lets users compress the quality of a large model into compact embeddings that require only 128 bytes of storage per vector, making it possible to serve retrieval over millions of documents at low cost on low-end hardware. We also kept embedding throughput efficient by packing Arctic Embed 2.0's retrieval quality into roughly 100 million and 300 million non-embedding parameters for the medium and large sizes, respectively, only a slight increase over our earlier English-only versions.
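To make "millions of documents at low cost" concrete, here is a back-of-the-envelope sketch of raw index size. It assumes a flat index that stores only the vectors (a real ANN index adds graph, ID, and metadata overhead), and the 10-million-document corpus is a hypothetical example; the two vector formats are the ones compared in the article's footnote.

```python
def index_bytes(num_vectors, dims, bits_per_value):
    """Raw storage for a flat vector index, excluding any ANN or metadata overhead.

    Each vector is rounded up to a whole number of bytes.
    """
    bytes_per_vector = (dims * bits_per_value + 7) // 8
    return num_vectors * bytes_per_vector

NUM_DOCS = 10_000_000  # hypothetical corpus size

# Compressed setting: 256 MRL-truncated dimensions stored as int4 (128 bytes each).
compact = index_bytes(NUM_DOCS, 256, 4)      # 1,280,000,000 bytes (about 1.28 GB)

# Uncompressed baseline: 3,072 float32 dimensions (12,288 bytes each).
baseline = index_bytes(NUM_DOCS, 3072, 32)   # 122,880,000,000 bytes (about 122.88 GB)

ratio = baseline // compact                  # 96
```

The 96x ratio holds at any corpus size, since both formats scale linearly with the number of vectors.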

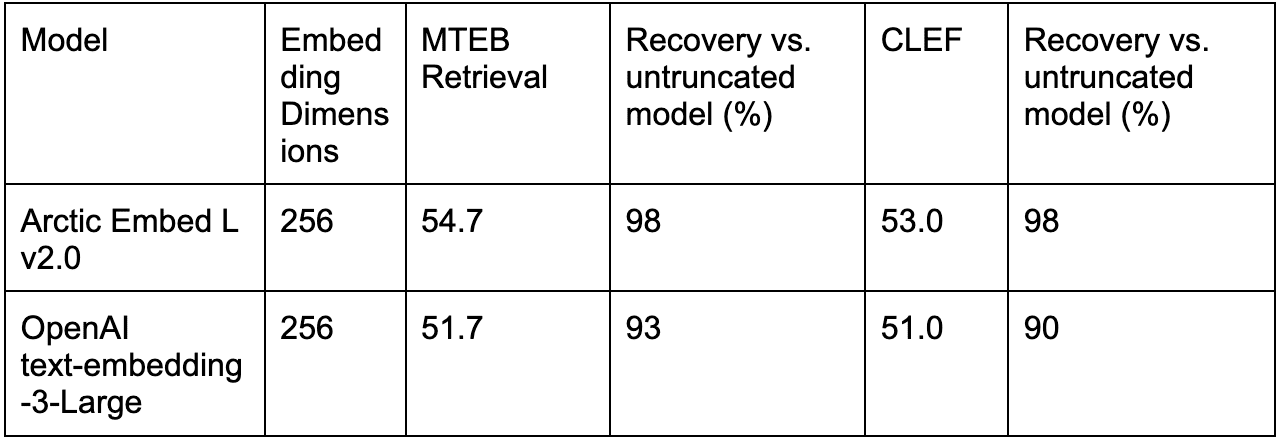

Indeed, scale-focused efficiency is where Arctic Embed L 2.0 really shines: it retains better quality under compression than other MRL-trained models such as OpenAI's text-embedding-3-large.

Table 5. Comparison of OpenAI's text-embedding-3-large and Arctic Embed L 2.0 with truncated embeddings on English-only (MTEB Retrieval) and multilingual (CLEF) benchmarks.

Conclusion: A New Standard for Multilingual, Efficient Retrieval

With Arctic Embed 2.0, Snowflake has set a new standard for multilingual, efficient embedding models. We have also made frontier text-embedding quality both efficient and openly available under a permissive open-source license. Whether your goal is to reach multilingual users, reduce storage costs, or embed documents on accessible hardware, Arctic Embed 2.0 provides the features and flexibility to meet your needs.

Our upcoming technical reports will delve deeper into the innovations behind Arctic Embed 2.0. In the meantime, we invite you to start embedding with Snowflake today.

^1^ This calculation uses float32 for the uncompressed baseline, i.e., 3,072 values of 32 bits each, for a total of 98,304 bits per vector. That is exactly 96 times the 1,024 bits per vector (128 bytes) used when Arctic Embed 2.0's MRL-truncated 256-dimensional vectors are stored in int4 format.

© Copyright notice: article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
