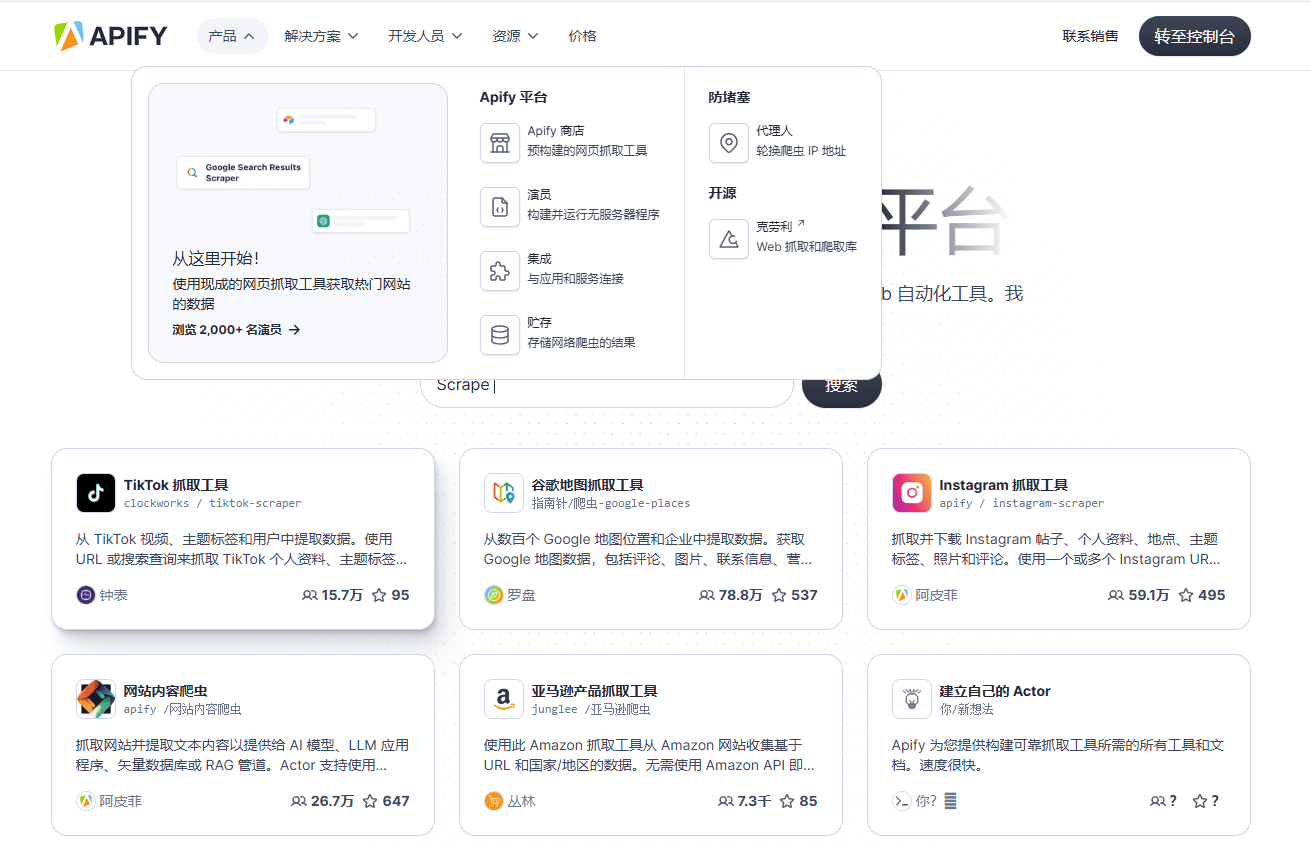

Apify: a full-stack web crawling and data extraction platform for automating data collection, building custom crawlers, and integrating with multiple APIs

General Introduction

Apify is a full-stack web crawling and data extraction platform that provides tools and services for automating data extraction from websites. Users can run off-the-shelf crawling tools or build and distribute their own. Apify supports multiple programming languages and frameworks, and offers an API and a range of integrations for data collection and automation.

It is a capable data collection tool that integrates readily with mainstream workflow tools and can be used to build a knowledge base quickly.

Function List

- Web crawler: Crawl web pages using Chrome, with support for recursive crawling and URL lists.

- Data extraction: Extract structured data from web pages and export it as JSON, XML, CSV, and other formats.

- Custom crawler: Build and publish custom data extraction tools, called Actors.

- API integration: Integrate with a wide range of third-party services and tools, such as Zapier, Google Sheets, and Slack.

- Professional Services: Customized web crawling solutions, designed and implemented by a team of professionals.

- Open-source tools: Support for a wide range of open-source tools and libraries, such as Puppeteer, Playwright, and Selenium.

- Data processing: Data cleaning, format conversion, and other functions that support large-scale data processing.

- Monitoring and scheduling: Real-time monitoring of capture tasks, support for timed scheduling and automation.
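As a rough illustration of the data processing item above (cleaning and format conversion), here is a minimal Python sketch that uses only the standard library. It does not use or represent Apify's own processing features; the record fields (`url`, `title`) and the helper names `clean_records` and `to_csv` are hypothetical.

```python
import csv
import io
import json


def clean_records(records: list[dict]) -> list[dict]:
    """Strip whitespace from string values and drop records whose URL was already seen."""
    seen = set()
    cleaned = []
    for record in records:
        row = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}
        key = row.get("url")
        if key is not None and key in seen:
            continue  # duplicate URL: keep only the first occurrence
        seen.add(key)
        cleaned.append(row)
    return cleaned


def to_csv(records: list[dict]) -> str:
    """Serialize records to CSV text, using the first record's keys as the header."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Made-up sample records standing in for scraped results.
raw = [
    {"url": "https://example.com/a", "title": "  Page A "},
    {"url": "https://example.com/a", "title": "Page A"},
    {"url": "https://example.com/b", "title": "Page B\n"},
]
rows = clean_records(raw)
print(json.dumps(rows, indent=2))  # JSON form of the cleaned records
print(to_csv(rows))                # CSV form of the same records
```

The same cleaned records feed both output formats, which mirrors the idea of extracting once and converting to whichever format a downstream system needs.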

Usage Guide

Installation and use

- Register and log in: Visit the official Apify website, register an account, and log in.

- Select a tool: Browse the Apify Store for ready-made crawling tools and choose one that fits your task.

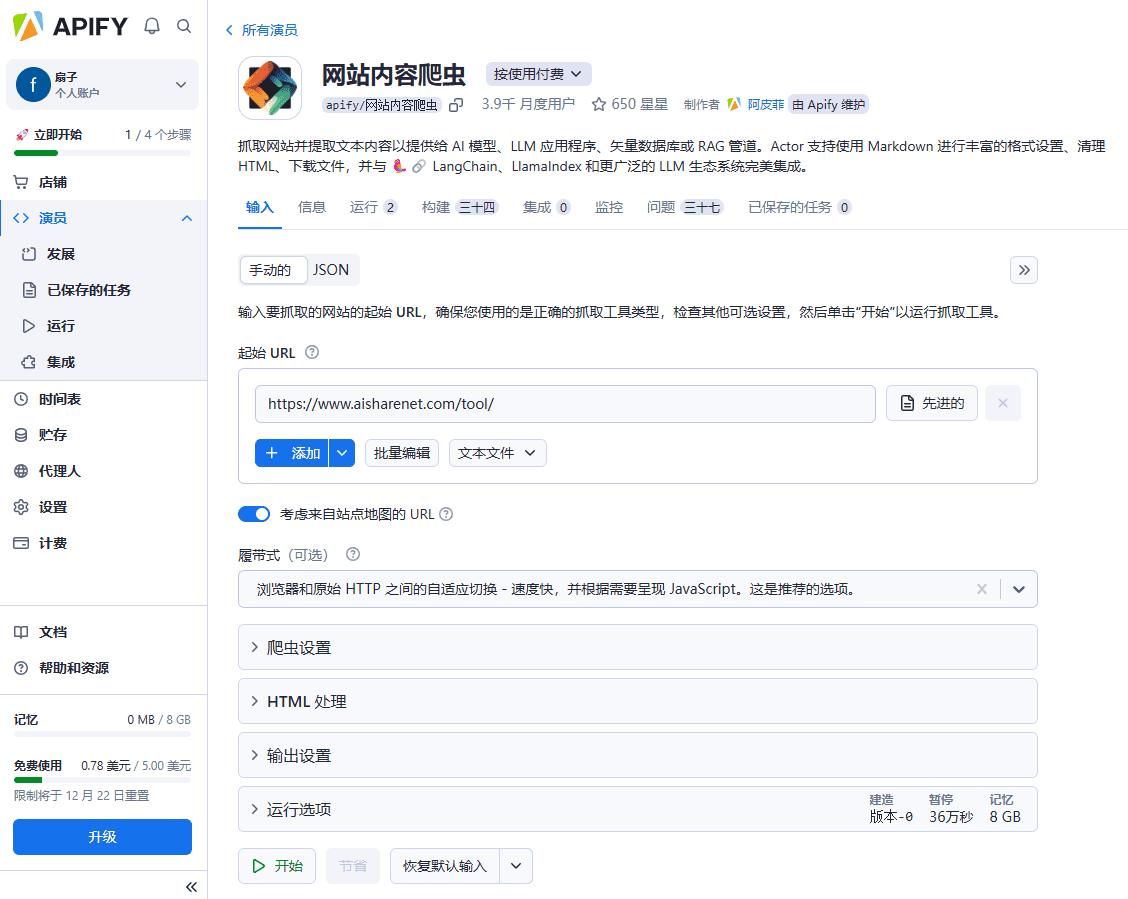

- Configure the crawl task:

- Enter URL: Enter the URL of the web page to crawl on the tool's configuration page.

- Set crawl rules: Set crawling rules as needed, such as recursive crawling and the data fields to extract.

- Run the task: Click the "Run" button to start the crawl and watch its progress and results in real time.

- Export data: After the crawl completes, export the data as JSON, XML, CSV, or another format, then download it locally or import it directly into other systems.

- Build a custom crawler:

- Create an Actor: Create a new Actor on the Apify platform and write your custom crawling and data extraction logic.

- Test and debug: Use the development tools and debugging features provided by Apify to test the crawl logic and fix errors.

- Publish and run: Publish the Actor to the Apify Store, then run it manually or on a schedule.

- API integration:

- Get the API token: Copy your API token (key) from your account settings; it authenticates calls to the Apify API.

- Call the API: Integrate the Apify API into your own applications, starting from the sample code in the API documentation, to automate data extraction and processing.

- Professional Services:

- Contact the Apify team: If you need a customized solution, contact Apify's Professional Services team and describe your requirements.

- Project implementation: The Apify team designs and implements a customized crawling solution based on those requirements, aiming for accurate and efficient data extraction.
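The configure, run, and API steps above can be sketched in Python with only the standard library. This is a minimal sketch, not Apify's official sample code. It assumes the public REST endpoint `https://api.apify.com/v2/acts/{actorId}/runs`, the Actor ID `apify~web-scraper`, and the Web Scraper input fields `startUrls`, `linkSelector`, and `pageFunction`; check the Actor's input schema and the API reference before relying on any of them. The token and the start URL are placeholders, and the request is only built here, not sent.

```python
import json
import urllib.request

API_BASE = "https://api.apify.com/v2"  # assumed public API base URL


def build_run_request(actor_id: str, token: str, run_input: dict) -> urllib.request.Request:
    """Build (but do not send) a POST request that would start an Actor run."""
    return urllib.request.Request(
        url=f"{API_BASE}/acts/{actor_id}/runs",
        data=json.dumps(run_input).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",  # API token from account settings
        },
        method="POST",
    )


# Hypothetical input: field names follow the Web Scraper Actor as an assumption.
web_scraper_input = {
    "startUrls": [{"url": "https://example.com"}],  # page(s) to start crawling from
    "linkSelector": "a[href]",                      # links to follow for recursive crawling
    "pageFunction": "async function pageFunction(context) {"
                    " return { url: context.request.url }; }",
}

request = build_run_request("apify~web-scraper", "YOUR_API_TOKEN", web_scraper_input)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` with a real token would actually start a run. Keeping the build step separate from the send step makes the payload easy to inspect before any crawl is triggered.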

Detailed workflow for each function

- Web crawler:

- Select the scraper: Select the "Web Scraper" tool in the Apify Store.

- Configure the crawl task: Enter the URL of the web page to crawl and set the recursive crawling and data extraction rules.

- Run the crawl task: Click the "Run" button and watch the progress and results of the crawl in real time.

- Export data: After the crawl completes, export the data in the desired format.

- Data extraction:

- Select a data extraction tool: Select an appropriate data extraction tool in the Apify Store, such as "Google Maps Scraper".

- Configure the extraction task: Enter the URL of the web page you want to extract data from and set the extraction fields and rules.

- Run the extraction task: Click the "Run" button and watch the extraction progress and results in real time.

- Export data: After the extraction completes, export the data in the desired format.

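The "Export data" steps above can also be done programmatically. The sketch below builds the download URL for a run's results in a chosen format. It assumes the dataset items endpoint `https://api.apify.com/v2/datasets/{datasetId}/items` with `format` and `clean` query parameters; the dataset ID `abc123` is a placeholder and the helper name `dataset_items_url` is hypothetical. Verify the parameters against the API reference before use.

```python
from urllib.parse import urlencode

API_BASE = "https://api.apify.com/v2"  # assumed public API base URL
EXPORT_FORMATS = {"json", "jsonl", "csv", "xml", "xlsx", "html", "rss"}


def dataset_items_url(dataset_id: str, fmt: str = "json", clean: bool = True) -> str:
    """Return the URL for downloading a dataset's items in the given format."""
    if fmt not in EXPORT_FORMATS:
        raise ValueError(f"unsupported export format: {fmt}")
    query = urlencode({"format": fmt, "clean": "true" if clean else "false"})
    return f"{API_BASE}/datasets/{dataset_id}/items?{query}"


# "abc123" is a placeholder dataset ID, not a real one.
for fmt in ("json", "csv", "xml"):
    print(dataset_items_url("abc123", fmt))
```

Validating the format up front turns a typo into an immediate local error instead of a failed HTTP request. Private datasets would also need the API token, for example in an `Authorization: Bearer` header.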

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
