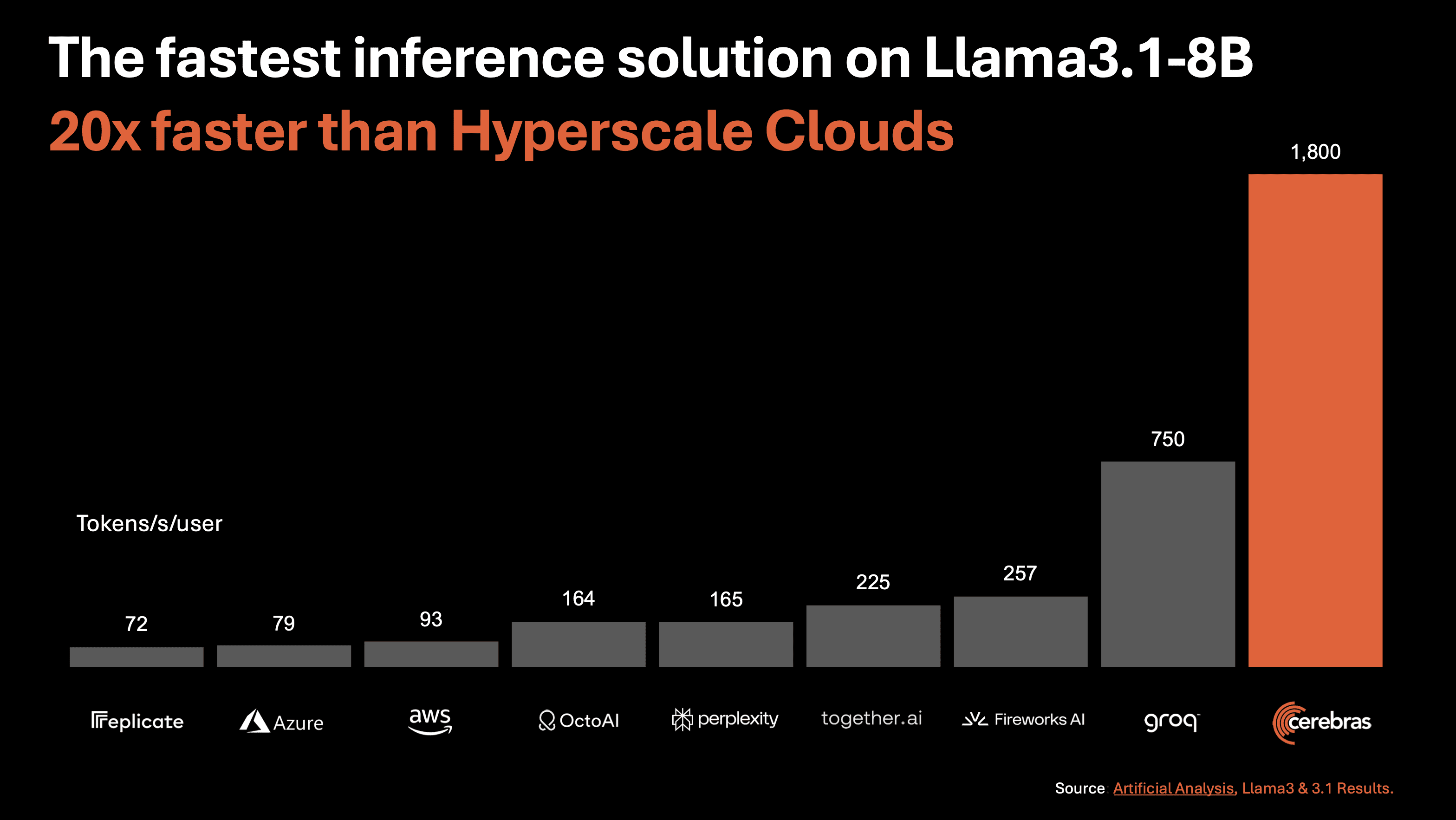

Aphrodite Engine: an efficient LLM inference engine that supports multiple quantization formats and distributed inference.

General Introduction

Aphrodite Engine is the official backend engine for PygmalionAI. It is designed to serve as the inference endpoint for PygmalionAI's sites and to allow rapid deployment of Hugging Face-compatible models. The engine uses vLLM's Paged Attention for efficient K/V cache management, together with continuous batching, which significantly improves inference throughput and memory utilization. Aphrodite Engine supports multiple quantization formats and distributed inference across a wide range of modern GPU and TPU devices.

Features

- Continuous Batching: efficiently handles many concurrent requests to improve inference throughput.

- Paged Attention: optimizes K/V cache management to improve memory utilization.

- Optimized CUDA Kernels: improve inference performance.

- Quantization Support: supports multiple quantization formats, such as AQLM, AWQ, and Bitsandbytes.

- Distributed Inference: splits a model across multiple GPUs.

- 8-bit KV Cache: supports higher context lengths and higher throughput.

- Multi-device Support: compatible with NVIDIA, AMD, and Intel GPUs as well as Google TPUs.

- Docker Deployment: provides Docker images to simplify deployment.

- OpenAI-compatible API: allows easy integration into existing systems.
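To see why quantization and an 8-bit KV cache matter, here is a back-of-the-envelope memory estimate. It is a rough sketch, not Aphrodite code: it assumes a round 8 billion parameters, a Llama-3.1-8B-style shape (32 layers, 8 key/value heads, head dimension 128), and it ignores quantization scales, activations, and framework overhead.

```python
GIB = 1024 ** 3


def weight_bytes(num_params, bits_per_weight):
    """Approximate weight storage, ignoring quantization scales and zero-points."""
    return num_params * bits_per_weight / 8


def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_elem):
    """K/V cache bytes for one token: a key and a value vector per KV head per layer."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem


# Assumption: a round 8e9 parameters for an "8B" model.
NUM_PARAMS = 8e9
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_bytes(NUM_PARAMS, bits) / GIB:.1f} GiB")

# Assumption: Llama-3.1-8B-style shape (32 layers, 8 KV heads, head_dim 128).
CONTEXT = 8192
kv_16bit = kv_cache_bytes_per_token(32, 8, 128, 2) * CONTEXT
kv_8bit = kv_cache_bytes_per_token(32, 8, 128, 1) * CONTEXT
print(f"KV cache for {CONTEXT} tokens: 16-bit {kv_16bit / GIB:.2f} GiB, 8-bit {kv_8bit / GIB:.2f} GiB")
```

Under these assumptions, 16-bit weights need roughly 15 GiB while 4-bit weights need under 4 GiB, and an 8,192-token context costs about 1 GiB of KV cache at 16 bits versus 0.5 GiB at 8 bits.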

Usage Guide

Installation

- Installing dependencies:

- Make sure that Python 3.8 to 3.12 is installed on your system.

- For Linux users, the following command is recommended to install the dependencies:

```bash
sudo apt update && sudo apt install python3 python3-pip git wget curl bzip2 tar
```

- For Windows users, installing WSL2 is recommended. Run the first command in PowerShell as administrator, then install the dependencies inside the WSL2 Linux shell:

```powershell
wsl --install
```

```bash
sudo apt update && sudo apt install python3 python3-pip git wget curl bzip2 tar
```

- Installing Aphrodite Engine:

- Install with pip:

```bash
pip install -U aphrodite-engine
```

- Starting a model:

- Run the following command to start the model:

```bash
aphrodite run meta-llama/Meta-Llama-3.1-8B-Instruct
```

- This creates an OpenAI-compatible API server that listens on port 2242 by default.
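Once the server is up, a quick way to confirm it is reachable is to ask it which models it serves. The sketch below uses only the Python standard library and assumes the server exposes the OpenAI-style `GET /v1/models` route at the default `http://localhost:2242`; `list_model_ids` and `parse_model_ids` are hypothetical helper names.

```python
import json
import urllib.request

# Assumed default address of the OpenAI-compatible server started above.
BASE_URL = "http://localhost:2242/v1"


def parse_model_ids(payload):
    """Extract model ids from an OpenAI-style list response:
    {"object": "list", "data": [{"id": "..."}, ...]}"""
    return [entry["id"] for entry in payload.get("data", [])]


def list_model_ids(base_url=BASE_URL, timeout=5.0):
    """Fetch the served model ids; raises URLError if the server is not running."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=timeout) as resp:
        return parse_model_ids(json.load(resp))


# Usage (requires the server to be running):
# print(list_model_ids())
```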


Deploying with Docker

- Pull the Docker image:

```bash
docker pull alpindale/aphrodite-openai:latest
```

- Run the Docker container (this requires the NVIDIA Container Toolkit; set `--tensor-parallel-size` to the number of GPUs you want to use):

```bash
docker run --runtime nvidia --gpus all \
  -v ~/.cache/huggingface:/root/.cache/huggingface \
  -p 2242:2242 \
  --ipc=host \
  alpindale/aphrodite-openai:latest \
  --model NousResearch/Meta-Llama-3.1-8B-Instruct \
  --tensor-parallel-size 8 \
  --api-keys "sk-empty"
```
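The `--tensor-parallel-size 8` option shards the model across 8 GPUs. As a rough illustration of the idea (a toy, pure-Python sketch, not Aphrodite's implementation), a layer's weight matrix can be split along its output dimension so that each GPU computes only its slice of the outputs, after which the slices are gathered back together. The function names and the even-split requirement are assumptions of this sketch.

```python
def matvec(weight_rows, x):
    """Plain matrix-vector product; weight_rows holds one row per output feature."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight_rows]


def column_parallel_matvec(weight_rows, x, world_size):
    """Toy tensor parallelism: split the output dimension across world_size 'GPUs'."""
    out_dim = len(weight_rows)
    if out_dim % world_size != 0:
        raise ValueError("output dimension must divide evenly across GPUs")
    shard = out_dim // world_size
    partials = []
    for rank in range(world_size):
        # Each rank stores only its own slice of the weights and computes those outputs.
        local_rows = weight_rows[rank * shard:(rank + 1) * shard]
        partials.append(matvec(local_rows, x))
    # "All-gather": concatenate the per-rank slices into the full output vector.
    return [y for part in partials for y in part]


W = [[row * 4 + col for col in range(4)] for row in range(8)]  # 8 outputs, 4 inputs
x = [1, 2, 3, 4]
print(column_parallel_matvec(W, x, world_size=8) == matvec(W, x))  # True
```

In real deployments the split generally follows the model's attention heads, so the tensor-parallel size usually needs to divide the head count evenly; Llama 3.1 8B, for example, has 32 attention heads, which divides evenly by 8.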

Main Features in Practice

- Continuous Batching:

- Aphrodite Engine uses continuous batching to process many requests at the same time: new requests join the running batch as soon as a slot frees up, instead of waiting for the whole batch to finish, which significantly improves throughput. Continuous batching is applied automatically; batch-related limits can be tuned at startup if needed.
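The difference from static batching can be shown with a toy simulation. This is a simplified sketch under stated assumptions, not Aphrodite's scheduler: each request needs a known number of decoding steps, every step produces one token for each running request, and prefill cost is ignored. `max_batch` is a hypothetical stand-in for the engine's concurrency limit.

```python
from collections import deque


def static_batching_steps(lengths, max_batch):
    """Static batching: each batch runs until its longest request finishes."""
    steps = 0
    for start in range(0, len(lengths), max_batch):
        steps += max(lengths[start:start + max_batch])
    return steps


def continuous_batching_steps(lengths, max_batch):
    """Continuous batching: finished requests leave and waiting ones join every step."""
    waiting = deque(lengths)
    running = []  # remaining tokens for each active request
    steps = 0
    while waiting or running:
        # Fill any free slots from the queue before the next decoding step.
        while waiting and len(running) < max_batch:
            running.append(waiting.popleft())
        # One decoding step emits one token for every running request.
        running = [remaining - 1 for remaining in running]
        steps += 1
        # Requests that are done release their slot immediately.
        running = [remaining for remaining in running if remaining > 0]
    return steps


lengths = [3, 10, 2, 8, 4]  # hypothetical output lengths, in tokens
print("static:", static_batching_steps(lengths, max_batch=2))  # static: 22
print("continuous:", continuous_batching_steps(lengths, max_batch=2))  # continuous: 14
```

With two slots, the short requests free their slot immediately instead of idling until the longest member of their batch finishes, which is where the saved steps come from.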

- Paged Attention:

- Paged Attention stores the K/V cache in fixed-size blocks that do not need to be contiguous in GPU memory, which reduces fragmentation and improves memory utilization. It is applied automatically and requires no additional configuration.
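The bookkeeping behind paged K/V management can be sketched as one block table per sequence. This is a simplified, hypothetical model (the class and method names are made up, and a block size of 16 tokens is assumed): physical cache blocks are taken from a free list only when a sequence's last block fills up, so at most one partially filled block per sequence is wasted, and all blocks return to the pool when the sequence finishes.

```python
class PagedKVCache:
    """Toy block allocator: maps each sequence's logical blocks to physical blocks."""

    def __init__(self, num_blocks, block_size=16):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))  # pool of physical block ids
        self.block_tables = {}  # seq_id -> list of physical block ids
        self.seq_lens = {}  # seq_id -> number of tokens stored

    def append_token(self, seq_id):
        table = self.block_tables.setdefault(seq_id, [])
        length = self.seq_lens.get(seq_id, 0)
        # Allocate a new block only when the last one is full (or none exists yet).
        if length % self.block_size == 0:
            if not self.free_blocks:
                raise MemoryError("out of KV cache blocks")
            table.append(self.free_blocks.pop())
        self.seq_lens[seq_id] = length + 1

    def physical_slot(self, seq_id, pos):
        """Translate a token position into (physical block id, offset within block)."""
        block = self.block_tables[seq_id][pos // self.block_size]
        return block, pos % self.block_size

    def free(self, seq_id):
        """Return all of a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id))
        del self.seq_lens[seq_id]


cache = PagedKVCache(num_blocks=4, block_size=16)
for _ in range(20):
    cache.append_token("req-1")
print(cache.block_tables["req-1"])  # [3, 2]: two blocks cover 20 tokens
print(cache.physical_slot("req-1", 17))  # (2, 1)
cache.free("req-1")
print(len(cache.free_blocks))  # 4
```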

- Quantization Support:

- A variety of quantization formats are supported, such as AQLM, AWQ, and Bitsandbytes. The format can be selected with the `--quantization` (`-q`) option when starting a model. Formats such as AQLM and AWQ expect a checkpoint that has already been quantized in that format, so the command should point at such a model:

```bash
aphrodite run <aqlm-quantized-model> --quantization aqlm
```

- Distributed Inference:

- Large models can be split across multiple GPUs with tensor parallelism. For example, to shard the model across 8 GPUs:

```bash
aphrodite run meta-llama/Meta-Llama-3.1-8B-Instruct --tensor-parallel-size 8
```

- API Integration:

- Aphrodite Engine provides an OpenAI-compatible API for easy integration into existing systems. To require clients to authenticate with a key, start the API server with `--api-keys`:

```bash
aphrodite run meta-llama/Meta-Llama-3.1-8B-Instruct --api-keys "your-api-key"
```
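A minimal client sketch for this server, using only the Python standard library. It assumes the OpenAI-style `POST /v1/chat/completions` route at the default port 2242, that the key passed to `--api-keys` is sent as a Bearer token, and that the served model name matches the model path; `build_chat_request` and `chat` are hypothetical helper names. The official `openai` Python package pointed at the same base URL should work similarly.

```python
import json
import urllib.request

BASE_URL = "http://localhost:2242/v1"  # assumed default address
API_KEY = "your-api-key"  # must match the value given to --api-keys
MODEL = "meta-llama/Meta-Llama-3.1-8B-Instruct"  # assumed served model name


def build_chat_request(prompt, base_url=BASE_URL, api_key=API_KEY, model=MODEL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(prompt):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


# Usage (requires the server to be running):
# print(chat("Say hello in one sentence."))
```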


© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
